Inspired by Slime Volleyball Gym, I built a 3D Volleyball environment using Unity's ML-Agents toolkit. The full project is open-source and available at: 🏐 Ultimate Volleyball.

If you're interested in an overview of the implementation details, challenges and learnings — read on! For a background on ML-Agents, please check out my Introduction to ML-Agents article.

Versions used: Release 18 (June 9, 2021)

Python package: 0.27.0

Unity package: 2.1.0

🥅 Setting up the court

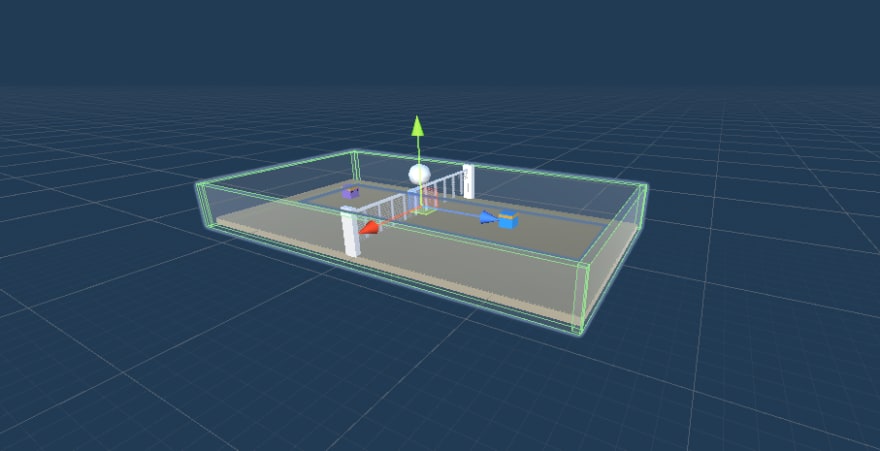

Here's what I used:

- Agent Cube prefabs from the ML-Agents sample projects

- Volleyball prefab & sand material from this free Beach Essentials Asset Pack

- Net, walls and floor made up of resized & rotated cube objects (net material from Free Grids & Nets Materials Pack)

- Thin floor representing the blue and purple "goals"

Some other implementation details:

- The agent cubes have a sphere collider to help control the ball trajectory

- I used 2 layers for the ground: the thin 'goals' on top, and the walkable floor below it. Since the goal colliders are set as 'triggers' (to check when the ball hits the floor), they will no longer register collisions. Hence the walkable floor is used for collision physics instead.

- I also added an invisible boundary around the court. I found that during training, agents may shy away from learning to hit the ball at all if you penalize them for hitting the ball out of bounds.

📃 Scripts

4 scripts were used to define the environment:

-

VolleyballAgent.cs(Link)- Defines observations

- Controls agents' movement & jump actions

-

VolleyballController.cs(Link)- Checks whether the ball has hit the floor, and triggers allocation of rewards in

VolleyballEnv.cs

- Checks whether the ball has hit the floor, and triggers allocation of rewards in

-

VolleyballEnv.cs(Link)- Randomises spawn positions

- Controls starting/stopping of an episode

- Allocates rewards to agents

-

VolleyballSettings.cs(Link)- Contains basic settings e.g. agent's run speed and jump height

🤖 Agents

I planned to train the agents using PPO + Self-Play. To set this up:

- Set 'Team Id' under Behavior Parameters to identify opposing teams (0 = blue, 1 = purple)

- Ensure the environment is symmetric

Observations:

Total observation space size: 11

- Rotation (Y-axis) - 1

- 3D Vector from agent to the ball - 3

- Distance to the ball - 1

- Agent velocity (X, Y & Z-axis) - 3

- Ball velocity (X, Y & Z-axis) - 3

An alternative to vector observations is to use Raycasts.

Available actions

4 discrete branches:

- Move forward, backward, stay still (size: 3)

- Move left, right, stay still (size: 3)

- Rotate left, right, stay still (size: 3)

- Jump, no jump (size: 2)

🍭 Rewards

Self-play is finicky, and I had many unstable runs. From the ML-Agents documentation of Self-Play, they suggest keeping the rewards simple (+1 for the winner, -1 for the loser) and allowing for more training iterations to compensate.

📚 Resources

For others looking to build their own environments, I found these resources helpful:

- Hummingbirds Course by Unity (unfortunately outdated, but still useful).

- ML-Agents Sample Environments (particularly the Soccer & Wall Jump environments)

👟 Next steps

Thanks for reading!

Next, I'll share my learnings training an agent using PPO + self-play.

If you're interested in trying out the environment yourself, you can submit your model to play against others. Sign up to get notified when submissions open.

Any feedback and suggestions are welcome.

Top comments (0)