Federated Learning is a machine learning technique that lets models learn from data sets located at different sites (e.g. local data centres, a central server) without sharing the training data. Federated Learning thus allows personal data to remain on local sites, reducing the possibility of personal data breaches. It is a decentralised form of machine learning. Google introduced this concept in 2016 in a paper titled ‘Communication-Efficient Learning of Deep Networks from Decentralized Data’. This and another research paper, ‘Federated Optimization: Distributed Machine Learning for On-Device Intelligence’, provided the first definition of Federated Learning.

Then, in a 2017 blog post, ‘Federated Learning: Collaborative Machine Learning without Centralized Training Data’, Google explained the nuances of this technique in detail.

Need for Federated Learning

Most algorithm-based solutions today (spam filters, chatbots, recommendation tools, etc.) actively use artificial intelligence to solve real-world problems. They are based on learning from data: heaps of training data are fed to the algorithm so that it can learn and make decisions.

Many of these applications were trained on data available in one place. Unfortunately, gathering all the data at one location is rarely practical in today’s world of myriad applications. Bringing data to a single site also adds the overhead of transferring it over secure channels at a time when data is especially vulnerable. As a result, today’s Artificial Intelligence is shifting towards a decentralized approach. New-age AI models are trained collaboratively at the edge or at the source, with data from cell phones, laptops, private servers, etc. This evolved form of AI training is called federated learning, and it is becoming the standard for meeting a raft of new regulations for handling and storing private data. By processing data at the edge, federated learning offers a way to capture raw data streaming from sensors on various touch points such as satellites, machines, servers, and many smart devices.

The Federated Learning Market

According to Research and Markets, the global federated learning market is expected to reach $198.7 million by 2028, growing at a CAGR of 11.1% during the forecast period. The growing need for improved data protection and privacy, and the increasing requirement to adapt data in real time to optimize conversions automatically, are driving the advancement of the federated learning solutions market. Moreover, by retaining data on devices, these solutions help organizations leverage machine learning models, which drives the federated learning market forward.

How does Federated Learning Work?

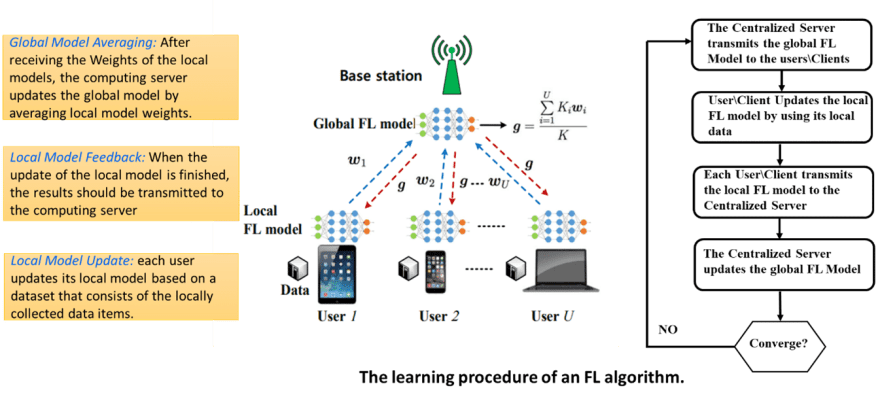

Federated learning allows AI algorithms to gain experience from a vast range of data located at different sites. The approach enables several organizations to collaborate on developing models without directly sharing sensitive data, such as clinical data.

The Federated Learning process has two steps: Training and Inference.

Training:

The local machine learning models are initially trained on local heterogeneous datasets, which are created and kept on each user’s device or site. The parameters of the models are exchanged between local data centres periodically. Usually, these parameters are encrypted before they are exchanged, which improves data protection and cybersecurity.

The exchanged parameters are combined to build a shared global model. Once it is built, the parameters of the global model are shared with the local data centres, which integrate the global model into their local ML models. Because the global model combines the learning from the local models, it gets a holistic view of the data.
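The training loop described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: it assumes a central aggregator that averages client parameters weighted by local sample count (in the spirit of the federated averaging idea from the 2016 paper), it uses a toy linear model, and it leaves out the encryption step entirely. The function names, the synthetic data, and the learning rate are all hypothetical.

```python
import random

def local_update(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of gradient descent on one client's private data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x
            grad_b += 2 * err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return (w, b)

def federated_average(updates, sizes):
    """Combine client parameters, weighting each client by its sample count."""
    total = sum(sizes)
    w = sum(u[0] * n for u, n in zip(updates, sizes)) / total
    b = sum(u[1] * n for u, n in zip(updates, sizes)) / total
    return (w, b)

def make_client_data(n, x_low, x_high, rng):
    """Hypothetical private dataset drawn from y = 3x + 1 plus small noise."""
    xs = [rng.uniform(x_low, x_high) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs]

rng = random.Random(0)
# Three clients whose inputs cover different ranges (heterogeneous local data).
clients = [
    make_client_data(20, 0, 1, rng),
    make_client_data(50, 1, 2, rng),
    make_client_data(30, -1, 0, rng),
]

global_weights = (0.0, 0.0)
for round_number in range(150):
    # Each client starts from the current global model and trains locally.
    updates = [local_update(global_weights, data) for data in clients]
    # Only parameters leave the clients; the raw (x, y) pairs never do.
    global_weights = federated_average(updates, [len(d) for d in clients])

print("global model (w, b):", global_weights)
```

In this toy setup the global parameters should end up close to the slope and intercept used to generate the data, even though no client ever sees the whole input range. Weighting by sample count is one design choice among several; an unweighted mean would also fit the description above.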

Inference:

In inference, the model is stored on the user’s device. Predictions can therefore be made quickly, using the model on the device itself.

Use-cases of Federated Learning

Federated learning methods are critical in supporting privacy-sensitive applications where the training data is distributed at the network edge. The models that come out of this are trained on various data, all without compromising privacy.

Some of the use cases of Federated Learning are:

1. Mobile/Smartphone Applications:

One of the initial applications of federated learning involves building models based on user behaviour from smartphone usage, such as for next typed-word prediction, face detection to unlock phones, voice recognition, etc. Google uses federated learning to improve on-device machine learning models like “Hey Google” in Google Assistant, which allows users to issue voice commands.

2. Quality Inspection in Manufacturing Sector:

Federated learning makes it easier for enterprises, manufacturing organizations and research institutions to collaborate on applications such as quality inspection, anomaly detection, object detection, etc. It is especially useful where images of errors or faults on the production line are few in number and limited in variety: using federated learning, multiple parties can collaborate to train a robust quality inspection model for similar products or use cases.

3. Healthcare:

Hospitals deal with a huge amount of patient data for predictive healthcare applications. They must operate under strict privacy laws and practices, and any slip may bring legal, administrative, or ethical challenges. Federated learning serves as a solution for applications of this kind, which require data to remain local. It effectively reduces the strain on the network and enables private learning between various devices/organizations.

4. Autonomous Vehicles:

Federated learning makes real-time predictions possible, which is one of its USPs for developing autonomous cars. Information such as real-time updates on road and traffic conditions enables faster decision-making. This can provide a better and safer self-driving car experience. The research paper ‘Real-time End-to-end Federated Learning: An Automotive Case Study’ reports that federated learning can reduce training time for wheel steering angle prediction in self-driving vehicles.

5. Federated Learning for Security and Communications:

Privacy preservation, secure multiparty computation, and cryptography are some of the confidentiality technologies that can be used to improve the data protection that federated learning offers. An IEEE study introduces a Federated Learning-based distributed learning architecture for 6G. In this architecture, many decentralized devices associated with different services can collaboratively train a shared global model (e.g., for anomaly detection, recommendation systems, next-word prediction, etc.) by using locally collected datasets.

6. Federated Learning in Wireless Networks:

Federated Learning (FL) can handle resource allocation, signal detection, and user behaviour prediction problems in future 6G networks. FL algorithms can address various resource management problems, such as distributed power control for multi-cell networks, joint user association and beamforming design, and dynamic user clustering. Users’ quality-of-service (QoS) can be predicted using FL, where each base station (BS) runs the FL algorithm on some stored information. All BSs transmit their FL model results to a server to obtain a unified FL model. FL algorithms can also be used to automatically design the BS codebooks and the decoding strategy of users to minimize the bit error rate.

(Read: Machine Learning Based Network Traffic Anomaly Detection)

Challenges in Federated Learning

1. Systems Heterogeneity:

A network consists of different devices, and the storage, computational, and communication capabilities of each device in federated networks may differ due to heterogeneity in hardware (CPU, memory), network connectivity, and power (battery level).

2. Statistical Heterogeneity:

Devices generate and collect data in a non-identically distributed manner across the network. Data generated this way does not satisfy the Independent and Identically Distributed (IID) assumption that is frequently made in machine learning.
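To make the difference concrete, the sketch below splits the same toy dataset across three clients in two ways: an IID split, where every client sees a similar mix of labels, and a label-skewed split, where each client sees only a narrow slice. The dataset, the function names, and the choice of label skew as the form of heterogeneity are assumptions made for illustration; real devices can differ in many other ways (feature distributions, data volume, and so on).

```python
import random
from collections import Counter

rng = random.Random(42)
# Hypothetical toy dataset: 300 samples with labels 0, 1, 2 in equal proportion.
labels = [i % 3 for i in range(300)]

def iid_split(labels, num_clients, rng):
    """Shuffle, then deal samples round-robin so each client gets a similar mix."""
    shuffled = labels[:]
    rng.shuffle(shuffled)
    return [shuffled[i::num_clients] for i in range(num_clients)]

def label_skewed_split(labels, num_clients):
    """Sort by label and cut into contiguous shards, giving each client a narrow slice."""
    ordered = sorted(labels)
    shard = len(ordered) // num_clients
    return [ordered[i * shard:(i + 1) * shard] for i in range(num_clients)]

iid_clients = iid_split(labels, 3, rng)
skewed_clients = label_skewed_split(labels, 3)

for name, clients in [("IID", iid_clients), ("non-IID", skewed_clients)]:
    for i, client in enumerate(clients):
        # Print the label histogram held by each client.
        print(name, "client", i, dict(Counter(client)))
```

A model trained only on one of the skewed clients would never see the other classes, which is why local updates in a federated network can pull the global model in conflicting directions.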

3. Security:

Any malicious user can introduce a security threat through poisoning. Poisoning comes in two forms:

• Data Poisoning: During a federated training process, several devices can participate by contributing their on-device training data. It is challenging to detect and prevent malicious devices from sending fake data, which poisons the training process and, in turn, the model.

• Model Poisoning: In this form, malicious clients modify the received model by tampering with its parameters before sending it back to the central server for aggregation. As a result, the global model is severely poisoned with invalid gradients during the aggregation process.
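The effect of model poisoning on aggregation can be illustrated with a small numeric sketch. It assumes the server takes a plain unweighted mean of the client parameter vectors, which is only one possible aggregation rule; the parameter values, including the tampered ones, are made up for illustration.

```python
def average(updates):
    """Plain unweighted mean of client parameter vectors, coordinate by coordinate."""
    return [sum(values) / len(values) for values in zip(*updates)]

# Hypothetical parameter vectors returned by four honest clients.
honest_updates = [[0.9, -0.1], [1.0, 0.0], [1.1, 0.1], [1.0, 0.0]]
# Hypothetical tampered parameters sent back by one malicious client.
poisoned_update = [-50.0, 50.0]

clean_global = average(honest_updates)
poisoned_global = average(honest_updates + [poisoned_update])

print("without the malicious client:", clean_global)
print("with the malicious client:   ", poisoned_global)
```

Here a single tampered update out of five moves the aggregated parameters far away from what the honest clients agree on, because a mean gives every submitted value equal influence.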

Conclusion:

Federated learning is facilitating the evolution of ML approaches within businesses. Organizations are investing effort in a thorough investigation of federated learning. With FL, companies may be prompted to take a fresh look at their existing algorithms and improve their AI applications. A challenge that may put the adoption of this technology at risk is “trust”. Researchers are looking at multiple mechanisms and incentives to discourage parties from contributing phoney data to sabotage the model, or dummy data to reap the model’s benefits without putting their own data at risk.
