If you are confused about how the OpenAI APIs work, this post gives you a high-level overview and explains how to use the basic Chat Completions API.

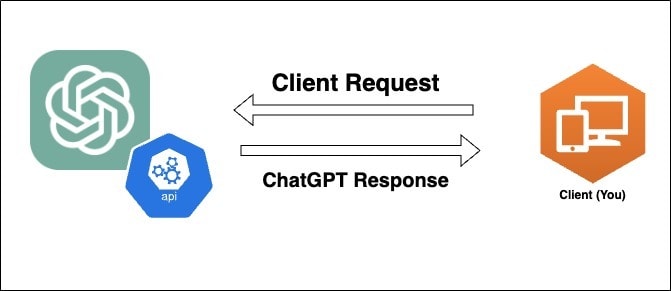

Just like any other RESTful API you have worked with before, all we do is set up a request on our client, send the payload to OpenAI's server, and receive a response based on the query we sent.
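Here is a minimal sketch of that request/response cycle using only Python's standard library. The endpoint `https://api.openai.com/v1/chat/completions` and the `Authorization: Bearer …` header are how OpenAI's REST API is called. The helper names `build_request` and `send`, as well as the model name `gpt-4o-mini`, are illustrative assumptions, so swap in whichever model your account can access.

```python
import json
import os
import urllib.request

# OpenAI's Chat Completions endpoint
API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(payload: dict, api_key: str) -> urllib.request.Request:
    """Wrap a JSON payload in an HTTP POST request for OpenAI's server."""
    body = json.dumps(payload).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        # OpenAI authenticates requests with a bearer token (your API key)
        "Authorization": f"Bearer {api_key}",
    }
    # Supplying `data` makes urllib send this as a POST request
    return urllib.request.Request(API_URL, data=body, headers=headers)


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


# Only call the real API when a key is available in the environment
if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    payload = {
        "model": "gpt-4o-mini",  # example model name (assumption)
        "messages": [{"role": "user", "content": "Say hello!"}],
    }
    reply = send(build_request(payload, os.environ["OPENAI_API_KEY"]))
    # The generated text lives in the first choice's message
    print(reply["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect exactly what goes over the wire before you spend any tokens.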

But what do you put in the client request?

The client request carries the instructions we want OpenAI to process; OpenAI then sends back a response based on this payload. For the Chat Completions API, the payload consists of the model we want to use, a list of messages (system, assistant, and user messages), and the temperature.
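As a sketch, the payload is just a JSON object with those three fields. The helper `build_payload` is hypothetical, `gpt-4o-mini` is only an example model name, and the default of `0.7` mirrors the suggestion later in this post rather than any API default.

```python
import json


def build_payload(model: str, messages: list[dict], temperature: float = 0.7) -> dict:
    """Assemble the three parts of a Chat Completions payload."""
    return {
        "model": model,
        "messages": messages,
        "temperature": temperature,
    }


messages = [
    # The system message sets the assistant's behaviour for the whole chat
    {"role": "system", "content": "You are a helpful assistant."},
    # The user message is the actual question
    {"role": "user", "content": "What is a REST API?"},
]

payload = build_payload("gpt-4o-mini", messages)
print(json.dumps(payload, indent=2))
```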

As seen above, each message has one of three roles: system, assistant, or user. If you are building a chatbot that needs to remember previous exchanges between the user and the assistant, add every message to this list, because the API does not keep conversation history between requests; the model only sees what you send.
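A minimal sketch of that "memory": keep one growing list and append both the user's turn and the assistant's reply after every exchange. The `Conversation` class and `fake_assistant` function are hypothetical; `fake_assistant` stands in for the real API call so the example runs offline.

```python
def fake_assistant(messages: list[dict]) -> str:
    """Hypothetical stand-in for a real API call, so the sketch runs offline."""
    last_user = messages[-1]["content"]
    return f"You said: {last_user}"


class Conversation:
    """Keeps the full message history so each request carries earlier turns."""

    def __init__(self, system_prompt: str):
        self.messages = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str, reply_fn=fake_assistant) -> str:
        # Record the user's turn before sending the whole history
        self.messages.append({"role": "user", "content": user_text})
        reply = reply_fn(self.messages)
        # Record the assistant's answer so the next request remembers it
        self.messages.append({"role": "assistant", "content": reply})
        return reply


chat = Conversation("You are a helpful assistant.")
chat.ask("My name is Sam.")
chat.ask("What is my name?")
print([m["role"] for m in chat.messages])
```

In a real chatbot, `reply_fn` would POST `self.messages` to the Chat Completions endpoint and return the assistant's reply text. Because the whole list is resent each time, long conversations use more tokens per request.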

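The temperature controls how random the responses are: the lower the temperature, the more predictable and repetitive the responses will be, while higher values make them more varied. The Chat Completions API accepts values from 0 to 2 and defaults to 1 if you leave the field out. A value around 0.7 is a common starting point, but tune it to fit your needs.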
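To build intuition for why lower temperatures are more predictable, here is the textbook description of temperature sampling: raw scores for candidate tokens are divided by the temperature before a softmax turns them into probabilities. OpenAI does not publish its exact internals, so treat this as a conceptual sketch; the scores below are made up, and this version requires a temperature above 0.

```python
import math


def softmax_with_temperature(scores: list[float], temperature: float) -> list[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("this sketch needs temperature > 0")
    scaled = [s / temperature for s in scores]
    # Subtract the max before exponentiating for numerical stability
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs])
```

At a low temperature almost all of the probability lands on the top-scoring token, so the same answer comes back again and again; at a higher temperature the alternatives get a real chance of being picked.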

I hope you have learned something new!

Don’t forget to follow my socials for more of these short tutorials.
