Earlier this week Genderify, an AI-driven platform that “identifies” users’ gender based on their name, launched and listed themselves on ProductHunt. They received a lot of very valid criticism, both on ProductHunt and on other social media, but I think they deserve some more criticism from me here as well.

Let’s start by taking a closer look at what Genderify really does. This is what they state on their ProductHunt page:

> Genderify is an AI-based platform that instantly identifies the person’s gender by their name, username, or email. Our system can check an unlimited number of names, usernames, and emails to determine even the false ones and most incomprehensible combinations.

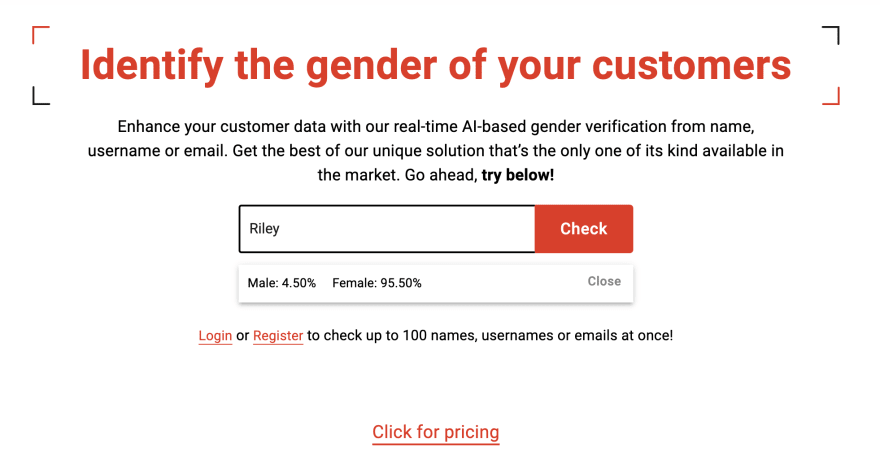

In practice, this means that you can enter any name, username or email address, and their platform will return two numbers: how confident it is that the person is male, and how confident it is that the person is female.

They make those predictions using data obtained from publicly available government sources and from social networks.

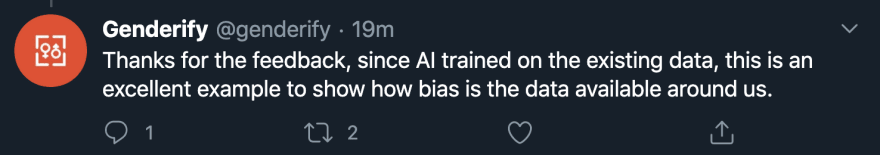

They aren’t clear about how they obtained this information, though, or which social networks they mean, so it’s hard to go into detail about their training data. But they definitely started with a biased dataset, and even admitted as much themselves on Twitter.

Most surveys, sign-up forms and government data don’t include genders outside the binary. As I discussed in a previous article, the majority of forms offer only two options, male or female, entirely erasing non-binary people from the training data.

There’s also the issue that whether a name is considered “male”, “female” or “neutral” depends on country, culture and language. It’s unclear how all of these are represented in the datasets they used, but there’s a good chance the data is heavily skewed towards the American perspective, if that’s where most of it was collected.

So that biased, incorrect and incomplete dataset is what will decide which gender a user has based on their name or email address. What could possibly go wrong?

As of now, their AI only outputs numbers for two options, male and female. By doing so they not only erase, but also misgender, everyone outside of the gender binary.

A non-binary person will be given a male or female label based on their name. Even if they have a name that to most people in their culture is considered neutral, the system will still say they’re “most likely male” or “most likely female”.

This is similar to giving people only two options to choose from on a form, which is already bad. The difference is that an automated guess takes all choice away from the user and simply assumes their gender, which makes Genderify even worse.

Being misgendered is harmful enough as it is; we don’t need tech companies to provide it as a service.

They are consistent, though: their predictions are wildly inaccurate for everyone, not just for trans and non-binary people. Some men were classified as women, Hillary Clinton and Oprah Winfrey were both assumed to be men, “trash” is considered male and “male” is considered female. I also ran the same name (Mathilde) once on its own and once with a last name included (Mathilde Fossheim). Mathilde was labeled as a woman (85%), while Mathilde Fossheim was labeled as a man (77%).

And as several Twitter users pointed out, they also give very biased and sexist results for personality traits and professions. Adding the title “Dr.” to someone’s name instantly changes their assumed gender from female to male, “nurse” is labeled as female while “doctor” is labeled as male, “wise” is male and “pretty” is female, and the list goes on.

It’s not particularly clear what they expect people to use this API for, since in general it’s cheaper, easier and more accurate to just ask people their gender (or even better, to not collect any gender information at all).

Some are suggesting their service could mainly be “useful” for automating marketing and advertising, but even there, focusing on gender can reinforce stereotypes.

But nothing restricts their software from being used for other purposes. They pride themselves on having an easy-to-use API, with prices starting as low as $10 once the free trial expires. This means anyone can set it up and run an analysis on any dataset they have access to.

In those cases, being mislabeled by the system could mean, for example, being addressed with the wrong pronouns, losing access to information (think of the “women only” app that used facial recognition to decide whether you were allowed in), or facing discrimination based on assumed gender.

They now also provide the option to correct them if they mislabeled anyone, saying they’re “going to improve [their] gender detection algorithms for the LGBTQ+ community”.

This means that at some point they could even try to predict from someone’s name whether they are likely to be non-binary or trans. Given the harassment trans people already face (a Trump rally recently chanted “Kill transgenders!”), providing free software to anyone who wants to try to identify them is outright dangerous.

Products like Genderify are harmful. They’re built on top of biased and inaccurate data by people who seem to have no interest in risk management or the societal impact of their product, and released into the open at basically no cost for anyone to use.

I talked a lot about Genderify in this article, but the same criticism applies to a lot of other tech products. In 2017, researchers tried to build an AI that predicts from pictures whether someone is gay, and as recently as June 2020 a tool to depixelate faces was released. Then there are also the examples of law enforcement using racist AI, racist AI being used for dynamic pricing, and racist search engines, and the list goes on.

We really need to do better as an industry, and make ethics and risk management a larger priority. We can’t keep building unethical, discriminatory, racist, sexist, homophobic and transphobic products. If it’s not ethical, inclusive and accessible, it’s not innovation.

More reading material:

- Algorithms of Oppression (book)
- Automating Inequality (book)
- Race After Technology (book)
- Weapons of Math Destruction (book)
- Technically Wrong (book)
- How Surveillance Has Always Reinforced Racism (article)
- Data Science Ethics (course)
- Tarot Cards of Tech (tool)

Edit 1: While I was writing this, more issues with Genderify came up. For example, the front page of their website shows a list of live requests (including full names and gender predictions), which makes it a privacy problem as well.

Edit 2: A bit more research into the company also showed that they’re owned by SmartClick, an Armenian company building AI solutions for a wide range of industries, including products that predict tax fraud and face obstruction detection systems. @GameDadMatt on Twitter posted his own research into the company as well.

Edit 3: Genderify has since been removed from Twitter, ProductHunt and SmartClick’s LinkedIn profile, and the website seems to have been taken offline as well. They haven’t made an official statement yet, and it’s unclear whether they killed the product entirely, will keep selling it to their customers, or will re-release it later under a different name.

Either way, this kind of tech is harmful, both because of its own biases and the possibility it gives third parties to cause (un)intentional harm to minoritized communities.

This was originally posted on fossheim.io, with early access through Patreon.

Latest comments (4)

Well, at least it got taken down already. That didn't take long. It gives me some hope that so many people identified it as a bad idea from the start.

It made me happy as well how fast it was taken down. I wish they had not removed all of their accounts and all the 'proof' of which company it came from though. But yes, gives me hope too that a lot of people realized how bad it was and spoke up.

There are SO MANY issues with this and I'm glad people are talking about them (and hopefully, shutting it down).

What came to mind when I saw this was that it was an odd, possibly unethical cash-grab. I could see marketers wanting to know if someone is more likely to be male or female based on their available data, just to be able to pump (sell) them more relevant content. From a marketing perspective, I also see this as risky. If someone has a more "male" name, what if they are buying traditionally "female" products? Now you're totally off on messaging and probably lost a customer.

That's a good point as well. Even ethics aside, given how often it gets things wrong, it sounds far too inaccurate to actually be useful for marketing. So I can totally see how someone could lose customers that way.