We're more than a few episodes in at this point, so I thought I could write a little about my process for creating a podcast episode. Different people have different processes and I (for one) love to read about how other folks do things, so I thought I'd share how I do it.

It Starts With An Idea

Each time that I have an idea for an episode, I make a note of that idea. The idea might be a few words, a topic name, or it might be fully fleshed out. Either way, it gets noted.

This usually takes the form of a markdown file on my phone or laptop (whichever I happen to have closer to hand). These files are automatically added to a remote sync service, so that I can access the file across all of my devices.

I then start adding bullet point ideas to that markdown file. These bullet points list the ideas and topics that I want to cover in the episode; I use them to create a transcript for the episode at a later stage.

As an example, here are the bullet points which became Episode 2 - Getting Started with .NET Core:

- How to get started in .NET Core?

- What tools do I need?

- If it's cross platform, are there tools available everywhere?

- Do I even need the tooling installed?

- Docker (for a future episode) can be used rather than installing the tooling

- Mention Jess Fraz and her amazing dockerfiles git repo

- dot.net/core has a "try it in the browser" system

- allows folks to try .NET core in their browser without having to install anything

- what limitations are there?

- What can I make in .NET Core?

Once I've added enough bullet points to the idea, I create a card for it on a kanban service that I use. This helps me to keep track of the episode as it moves from initial idea to a recorded episode.

As with all kanban systems, I have a number of columns. These are (in order):

- Initial Idea

- Fleshed Out

- Transcript Written

- Recorded

- Edited

- Uploaded

- Scheduled

- Live
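If you wanted to track those columns in code rather than on a kanban service, a minimal sketch might look like the following. The `STAGES` list and the `next_stage` helper are hypothetical names made up for illustration; the kanban service itself handles all of this for me.

```python
# The kanban columns from the list above, in order.
STAGES = [
    "Initial Idea",
    "Fleshed Out",
    "Transcript Written",
    "Recorded",
    "Edited",
    "Uploaded",
    "Scheduled",
    "Live",
]


def next_stage(current):
    """Return the column that follows `current` (hypothetical helper).

    An episode that is already "Live" stays where it is.
    Raises ValueError if `current` is not a known column.
    """
    index = STAGES.index(current)
    if index == len(STAGES) - 1:
        return current
    return STAGES[index + 1]
```

Keeping the columns in a single ordered list means that moving a card is just a matter of looking up the next entry.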

Fleshing It Out

Each week, I spend a few hours fleshing out the ideas that I have in my backlog. I'll pick a single idea and start writing about it. When I do this, my goal is to write around 2,000 words about it.

I create a heading for each of my first level bullet points and paste any child bullet points under it. Using those child bullet points, I'll focus specifically on that section.

Once the fleshed out version of the idea is written, I give each section the once-over - checking for obvious spelling and grammar issues. Then I'll move on to the transcript phase.

Transcription

This is where the real effort comes in.

I take the fleshed out version of the idea, which is mostly unrelated paragraphs, and turn it into a single narrative. I'm not brilliant at writing, but I try to have a single through line in each monologue: "What do I need to know to understand the basics of this topic?"

I'll work on the transcript until I have something which is around 4,000 words. Once it's at that stage, I'll read it aloud - this helps me to spot where commas, full stops, and semi-colons should be added.

This is the process that I use for all of my blog posts, whether they're for my personal blog, The Waffling Taylors, A Journey in .NET Core, or at dev.to.

Recording

This is the bit you're really here for, right?

When the episode is ready to record, I fire up my 2014 MacBook Air, connect my Blue Yeti microphone, and open Audacity. I plug my Bludio UFO headphones into the Blue Yeti and start monitoring the microphone in Audacity.

By this point, I've made sure that my phone is on "do not disturb", and that I've muted any other devices in the room.

I then pick a few sentences from the transcription and start reading them aloud. This allows me to make sure that my volume levels are right, that I'm the right distance from the mic, and that there isn't any background noise.

When I'm ready to start, I unplug my Bludio UFOs from the mic and connect them to my laptop via Bluetooth. I hit the record button in Audacity and leave it recording background noise for around 10-15 seconds. I can use this sample during the edit phase to remove any background noise that I'm unable to hear, but which my Blue Yeti can pick up.

I then read the entire transcript out loud. Sometimes it takes a few attempts (either I'll get interrupted, or I'll find an issue in the transcript and have to edit it).

Once the recording is done, it's saved to disk.

Editing

When I'm ready to edit an episode, the first thing that I do is remove any background noise.

This is what the 10-15 seconds of silence at the beginning is for; it's a tip that I received from both Allen Underwood and Steve "Ardalis" Smith.

Essentially, I select the 10-15 seconds of silence, then use Audacity's built-in noise remover to get a noise profile for that silence. Then I apply that noise removal profile to the rest of the recording. Et voilà, no more background noise.
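To give a rough feel for the idea of "profile the silence, then clean the rest", here is a minimal sketch of a simple noise gate. This is not how Audacity does it (its noise removal is more sophisticated than a plain gate), and the function names, the window size, and the `margin` value are all assumptions picked for illustration.

```python
import math


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def noise_gate(samples, sample_rate, profile_seconds, window=256, margin=2.0):
    """Silence any window that is no louder than the leading "silence".

    The first `profile_seconds` of the recording are treated as the noise
    profile. They are measured, then dropped from the output. Every later
    window whose level is below `margin` times the noise floor is zeroed.
    """
    profile_len = int(sample_rate * profile_seconds)
    if profile_len <= 0 or profile_len > len(samples):
        raise ValueError("profile must cover part of the recording")

    threshold = rms(samples[:profile_len]) * margin
    rest = samples[profile_len:]

    cleaned = []
    for start in range(0, len(rest), window):
        chunk = rest[start:start + window]
        if rms(chunk) < threshold:
            cleaned.extend([0.0] * len(chunk))  # just background noise
        else:
            cleaned.extend(chunk)  # loud enough to be speech, keep as-is
    return cleaned
```

A gate like this only mutes the quiet gaps; it does nothing about noise underneath speech, which is part of why a dedicated noise removal tool gives better results.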

After removing the, now useless, empty 10-15 seconds, I apply a high pass filter.

For those who don't know, a high pass filter allows all sounds above a given frequency to pass through it untouched, but will reduce the amplitude of any sounds which are below the given frequency.

It allows the high frequencies to pass, you see.

I apply this filter because my voice is a little deep and I want listeners to be able to hear me properly. I usually pick 800 Hz with a roll off of 6 dB per octave. I have no idea whether these are the "right" values to pick, but they seem to give me what I want, so I use them.
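For anyone who wants to see what a filter like this does to the samples, here is a minimal sketch of a first-order high pass filter, which is the kind that rolls off at 6 dB per octave. It's the textbook RC filter written in plain Python, not Audacity's own implementation, and the function names and test tones are made up for illustration.

```python
import math


def high_pass(samples, sample_rate, cutoff_hz):
    """First-order RC high pass filter (6 dB per octave roll off)."""
    if not samples:
        return []
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)

    out = [samples[0]]
    for i in range(1, len(samples)):
        # Each output follows changes in the input, while a steady
        # (low frequency) level gradually decays away.
        out.append(alpha * (out[i - 1] + samples[i] - samples[i - 1]))
    return out


def sine(freq_hz, sample_rate, seconds):
    """Generate a test tone with an amplitude of 1.0."""
    count = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(count)]


def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


if __name__ == "__main__":
    rate = 44100
    for freq in (100, 5000):
        tone = sine(freq, rate, 0.5)
        gain = rms(high_pass(tone, rate, 800)) / rms(tone)
        print(f"{freq} Hz tone keeps {gain:.0%} of its level")
```

With an 800 Hz cutoff, a 100 Hz rumble is cut down heavily while a 5,000 Hz tone passes almost untouched, which matches the description above.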

Then I go through the episode and take out any verbal flubs ("umm"s and "err"s), and listen for any notes that I'd given myself ("take this bit out, Jamie", "music in here", etc.).

The final step for editing is to go through and add the snippets of music that I use. I've had a theme song created and I, essentially, just slice that up into sections. Anywhere that I use an h3 in the transcript becomes a musical cue. I do this so that the listener has a chance to process what they've just heard - also it acts as a little break from me talking to them.

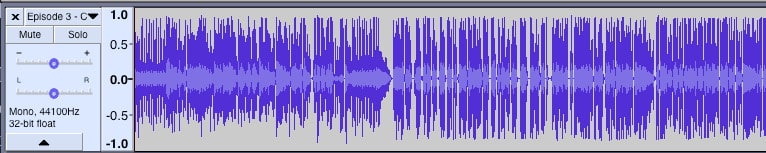

This results in a multi-track recording which looks like the above screenshot.

Rendering

Once the edit job is done, I render it to a wave file (by going to "File > Export as Wav"). This creates one wave file on my machine with all tracks rendered down to stereo (i.e. two channels only).

I then listen to this file, all the way through, and make notes of any further edits that need to be made. If there are edits to be made, I reopen the Audacity project, make them, then export the project to wave again.

I iterate over this until I'm happy that the episode is good to go.

Once that's done I use an app called Auphonic Leveller.

This is based on a tip from Jay Miller.

Auphonic does a lot of stuff, but I use it to remove any extra distortion or noise, convert to MP3, and to volume level the entire recording. I've found that recordings that I put through Auphonic come out clearer and louder than anything I can do by hand, but your mileage may vary.

As you can see, the episode is now fully volume levelled; listeners will no longer have to keep altering the volume of their podcatcher just to hear what I'm saying.

I also ensure that Auphonic creates an MP3 at 96kbps. I chose this bit rate because the majority of the episode content is speech, so it doesn't need to be any higher than 96, really. I could drop it to around 56, but then the music snippets would suffer.

I've found that 96kbps works for me, but it might not work for you. Use whichever value gives you the best render. Remember, it's your show and you should be the QA on it. Don't chase a smaller file size if it means that you aren't happy with the audio quality.
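To put some numbers on the trade-off between bit rate and file size, here is a minimal sketch of the arithmetic. It assumes a constant bit rate and ignores any metadata or artwork stored in the file; the 30 minute episode length is a made-up figure for illustration, and `mp3_size_megabytes` is a hypothetical helper name.

```python
def mp3_size_megabytes(bitrate_kbps, minutes):
    """Approximate size of a constant bit rate MP3, in megabytes."""
    total_bits = bitrate_kbps * 1000 * minutes * 60
    return total_bits / 8 / 1_000_000  # bits -> bytes -> megabytes


if __name__ == "__main__":
    for kbps in (96, 56):
        size = mp3_size_megabytes(kbps, 30)
        print(f"{kbps}kbps for 30 minutes is about {size:.1f} MB")
    # 96kbps for 30 minutes is about 21.6 MB
    # 56kbps for 30 minutes is about 12.6 MB
```

So for an episode of that length, dropping from 96 to 56 would save around 9 MB per download, at the cost of the music snippets.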

Once that's done, the episode is ready to be uploaded and the show notes can be created.

Show Notes

Here's another reason why I write the episode before I record it: I already have the show notes. All I need to do is add any useful links, and post them.

That's about it, really.

List of Tools

Here's a handy list of tools that I use:

- 2014 MacBook Air

- Blue Yeti

- Bludio UFO

- Audacity

- Auphonic Leveller

Top comments (8)

Thank you for this! Nice to see how other podcasters work :)

A few days ago I also published a tutorial on how we record and broadcast our podcast live (Podcast Science, on air since 2010); you can find it here. We have a pretty different setup, since we record and broadcast live and our team is distributed, but you can probably find some useful stuff.

I remember when I first got talking to Jay Miller about podcasting - it was around the same time that he had me on his Productivity in Tech show. He told me about Audio Hijack, Loopback, and SoundFlower.

I really should look into these further. I'm also thinking of adding a hardware recorder to my current set up, because Audacity crashed during a save (shortly after recording) and wiped out two hours of content.

Interesting, I never had any crash with Audio Hijack. For the hardware recorder, I've tried to use the Zoom R16 and Zoom H6, but I don't think it's possible to do hardware recording AND USB mic at the same time...

The crash was from Audacity, when saving to disk; then it crashed again when trying to recover. Which was a little annoying. But, I might be able to recover it all, because all of the au files (the audio tracks are split into small chunks when saving) are present but the Audacity Project file is broken.

That's probably the process that I'm going to use, I just need to decide on which of the two devices to use.

You might want to look into using REAPER or another DAW for recording. You can set things up so that when you click record and start talking, it will add things like a noise gate (removing background noise) and other effects automatically in real time.

The saved recording will have all of that applied. Then your editing process becomes removing human errors, not processing audio and this will drastically simplify and reduce the time it takes to edit.

You can even set things up so that you can redirect that real-time processed audio to another application as microphone input, so you can get high quality Skype calls, or anything else.

That's what I end up doing for my video courses. REAPER's audio gets sent directly to Camtasia. I do zero audio processing afterwards.

I've been wanting to investigate Reaper for some time now, but I've been put off by the sheer scale of what it can do. It looks so complex to use.

Would you recommend a specific set (or subset) of tutorials on it, or would you recommend just diving in and getting my hands dirty with it?

OMG Auphonic is fantastic! Used it this morning to clean up a couple of files, this is amazing and saved me tons of work and outsourcing cost. Thank you so much.

It really is wizardry. I wish that I knew how it does what it does, but I'm a little happy being ignorant... for now