Just a heads-up before we begin: this is a re-post of an article I wrote over at my .NET Core blog, which can be found at dotnetcore.gaprogman.com

I recently wrote a little about getting started with docker. Last week, I wrote about how you could go about dockerising a .NET Core application. This week, I thought I'd talk a little about how it works. Firstly, this isn't going to be an in-depth analysis of precisely how docker does its thing

if you really wanted to, you could look through the source to find all that out

but it will be more than enough for anyone who is getting into using docker and containers for their applications.

also, if you wanted a humorous take on docker, you couldn't do much better than this XKCD

What Is a Container?

A container is everything that your application relies on to run. It's a full description of the runtime, and all dependencies for your application

external DLLs, packages, etc.

What's great about containers, at least in the docker world, is that the entire stack that your application requires can be spun up as a single process on your server. I mentioned in part one of this series that containers aren't a new technology. In fact, their history goes all the way back to the 1970s

here's a link to a fantastic blog post which talks about their history

Crash Recovery

Why is this a big thing? Well, imagine that you have a Windows server with IIS installed on it, and that a large number of your applications are running on that server. If the Operating System crashes or becomes unresponsive for some reason, then all of your applications running on that server are taken down. Whereas, if something within a container crashes or becomes unresponsive, then the only thing you lose is the application within that container.

Boot Time

The other benefit is start-up time. In our previous example (Windows Server, IIS, App Pools, etc.), we'd have to wait for Windows Server to reboot, IIS to provision ports and such, App Pools to start, then your application to start. These days, with a powerful enough server, that should take less than 10-15 seconds. But with a container, it's almost instantaneous. This is because the hard part of getting the runtime ready is done when you create the docker image that the container is based on. Speaking of which

Containers? Images? What?

I've done this a little backwards. I should have discussed what containers and images were first. Oh well, there's no better time than the present I suppose

other than earlier on, I guess

Images

First there was the image, and it was good. A docker image is built from a plain text file (called a dockerfile) which describes how docker will build your image. This can be either the build or runtime stack for your application. In the previous part of this series of blog posts, we built an image for dwCheckApi. The dockerfile describes which other images yours is based on; it also describes where to get the binaries for your application and its dependencies from. Let's take a look at the dockerfile we created for dwCheckApi again:

FROM microsoft/dotnet:2.1-sdk-alpine AS build

# Set the working directory within the container

WORKDIR /src

# Copy all of the source files

COPY . .

# Restore all packages

RUN dotnet restore ./dwCheckApi/dwCheckApi.csproj

# Build the source code

RUN dotnet build ./dwCheckApi/dwCheckApi.csproj

# Ensure that we generate and migrate the database

WORKDIR ./dwCheckApi.Persistence

RUN dotnet ef database update

# Publish application

WORKDIR ..

RUN dotnet publish ./dwCheckApi/dwCheckApi.csproj --output "../../dist"

# Copy the created database

RUN cp ./dwCheckApi.Persistence/dwDatabase.db ./dist/dwDatabase.db

# Build runtime image

FROM microsoft/dotnet:2.1-aspnetcore-runtime-alpine AS app

WORKDIR /app

COPY --from=build /dist .

ENV ASPNETCORE_URLS http://+:5000

ENTRYPOINT ["dotnet", "dwCheckApi.dll"]

Aside from special images called "scratch"

as in "from scratch"

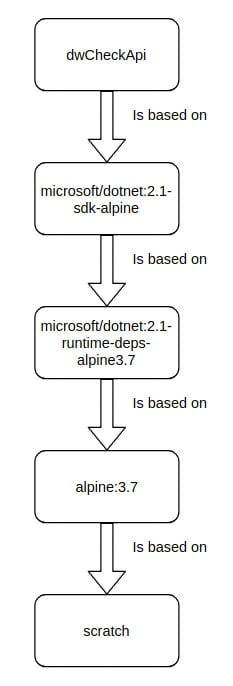

all images are based their foundations on other images. The docker image for dwCheckApi is based on one that Microsoft have provided ("microsoft/dotnet:2.1-sdk-alpine"), which itself is based on another that Microsoft have supplied ("microsoft/dotnet:2.1-runtime-deps-alpine3.7"); which is based on another supplied by docker ("alpine:3.7"), which is based on yet another image (itself bundled with docker) called "scratch". There's a lot of text in that single sentence, so here's the same information, but presented graphically:

We spared no expense
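If it helps to see that chain as data rather than a picture, here is a minimal Python sketch. The image names are copied from the paragraph above; the `BASE_IMAGE` dictionary and the `image_chain` helper are made up for illustration and are not part of docker.

```python
# Parent of each image, exactly as listed in the paragraph above.
# "scratch" is the empty starting point, so it has no parent entry.
BASE_IMAGE = {
    "dwcheckapi": "microsoft/dotnet:2.1-sdk-alpine",
    "microsoft/dotnet:2.1-sdk-alpine": "microsoft/dotnet:2.1-runtime-deps-alpine3.7",
    "microsoft/dotnet:2.1-runtime-deps-alpine3.7": "alpine:3.7",
    "alpine:3.7": "scratch",
}


def image_chain(image):
    """Return the image followed by every image it is built on."""
    chain = [image]
    while chain[-1] in BASE_IMAGE:
        chain.append(BASE_IMAGE[chain[-1]])
    return chain


# Print the chain as an indented tree, one level per base image.
for depth, name in enumerate(image_chain("dwcheckapi")):
    print("  " * depth + name)
```

Walking the dictionary from "dwcheckapi" always ends at "scratch", which is the point the sentence above is making.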

When we tell docker to build our image with the following command:

docker build .

We tell it (via the dockerfile) to get all of the "layers" which build up our base image (all the way down to "alpine:3.7") before building. You can see this happening if you watch the console output

In the above screen shot, docker is downloading all of the images that dwCheckApi's image depends on. Each of these images will be added to the docker image cache as separate layers.

Layers?

(Almost) everything you do in a dockerfile is stored as a separate layer, and these layers are then combined to create an image. The result of each docker command will create a layer, and each layer will be internally labelled with a checksum which represents the changes which are a direct result of that command. For instance (and turning back to dwCheckApi for a second), the following docker command:

# Restore all packages

RUN dotnet restore ./dwCheckApi/dwCheckApi.csproj

tells docker to run a .NET Core restore action. When this action completes, docker will:

- look at the changes made

- create a checksum which represents those changes

- store those changes in our new image

- apply the checksum to them as a label

it's a little more complex than that, but that's all you need to know for now
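The steps above can be sketched as a toy model in Python. This is a deliberate simplification and not docker's actual code: it assumes a layer's label depends only on its parent layer and the text of the instruction, whereas docker also takes file contents into account for instructions such as COPY. The names `layer_id` and `build` are invented for this sketch.

```python
import hashlib


def layer_id(parent_id, instruction):
    """Derive a short label from the parent layer and the instruction text."""
    digest = hashlib.sha256(f"{parent_id}\n{instruction}".encode("utf-8"))
    return digest.hexdigest()[:12]


def build(instructions, cache):
    """Walk the instructions in order, reusing any layer already in the cache.

    Returns the label of the final layer and a log of what happened per step.
    """
    parent_id = "scratch"
    log = []
    for instruction in instructions:
        current_id = layer_id(parent_id, instruction)
        if current_id in cache:
            log.append("Using cache")
        else:
            # A real build would run the instruction here and store the
            # resulting filesystem changes; the toy only records the text.
            cache[current_id] = instruction
            log.append("Running")
        parent_id = current_id
    return parent_id, log


steps = [
    "WORKDIR /src",
    "RUN dotnet restore ./dwCheckApi/dwCheckApi.csproj",
    "RUN dotnet build ./dwCheckApi/dwCheckApi.csproj",
]
cache = {}
first_id, first_log = build(steps, cache)
second_id, second_log = build(steps, cache)
print(first_log)   # ['Running', 'Running', 'Running']
print(second_log)  # ['Using cache', 'Using cache', 'Using cache']
```

Because each label includes the parent's label, changing one instruction in this model gives that step, and every step after it, a new label, so none of them can be served from the cache.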

If you tell docker to build your image, you should get something similar to the following output:

Step 11/29 : RUN dotnet restore ./dwCheckApi/dwCheckApi.csproj --force --no-cache

---> Running in 56ec006e6f2f

Restoring packages for /build/dwCheckApi.DAL/dwCheckApi.DAL.csproj...

Restoring packages for /build/dwCheckApi.Common/dwCheckApi.Common.csproj...

Installing System.Data.Common 4.3.0.

Installing SQLitePCLRaw.core 1.1.5.

Installing Microsoft.EntityFrameworkCore.Relational 2.0.0-preview1-final.

Installing Microsoft.Data.Sqlite.Core 2.0.0-preview1-final.

...

Installing Microsoft.Build 15.7.0-preview-000011-1378327.

Installing Microsoft.NETCore.DotNetHostPolicy 2.0.0.

Restore completed in 29.21 sec for /build/dwCheckApi/dwCheckApi.csproj.

Removing intermediate container 56ec006e6f2f

---> 3dcfa76ef35d

I've snipped out most of the .NET restore chaff

But if you then run the same command again, the output will look similar to this:

Step 11/29 : RUN dotnet restore ./dwCheckApi/dwCheckApi.csproj --force --no-cache

---> Using cache

---> 3dcfa76ef35d

don't be fooled by the inclusion of --no-cache, as that's a .NET Core switch and has nothing to do with docker

We can see that docker has detected that neither the command nor the layers beneath it have changed since the previous run, so it doesn't bother to perform the restore, and loads the cached output instead. Leveraging this can be incredibly useful for optimising your docker images

something we'll come to in the next part of this series

But if that's what an image is, then what's a container?

Containers?

A container is a running instance of an image. You can have as many containers as you'd like

as long as you have the memory available

and they can all be running the same image or different ones. When you're ready to run your image in a container, you'll first need to ensure that it has been tagged during building.

Tags?

We've seen tags already. At the very top of the dwCheckApi dockerfile, we said:

FROM microsoft/dotnet:2.1-sdk-alpine AS build

Which tells docker to get a docker image from the "microsoft/dotnet" repository which has been tagged "2.1-sdk-alpine". A tag is kind of like a label, and they can be useful if you want to supply different docker images for different environments. For instance, if you take a look at the list of available docker build images for .NET Core

over at Microsoft's repo for the dotnet image

you'll see that there is a selection of them tagged for use with Windows and some for use with different flavours of Linux, and with different versions of the SDK and runtime. For instance, I can see the following combinations:

this list is correct at the time of writing

- Debian Stretch with SDK version 2.1

- Debian Jessie with SDK version 2.1

- Debian Jessie with SDK version 1.19

- Windows Nano Server with SDK version 2.1

- Windows Server with SDK 2.0

and that's not all

Each of these images is built differently, and has different software installed, with different capabilities and requirements. Depending on how you have your server set up, and what your local build pipeline is, you'll want to pick the image with the tags which match. But why did I just say:

and what your local build pipeline is

Well, if you're going to be using docker and containerisation as part of your DevOps plan, then you'll need to be using it to build and test locally. Otherwise, how can you be absolutely certain that the container that you create actually works?
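To make the repository and tag split from the FROM line a bit more concrete, here is a small Python sketch which pulls an image name apart. The `split_image_reference` function is a made-up helper, not something docker provides, and it ignores rarer forms such as digests. The fallback to "latest" mirrors what docker does when no tag is given.

```python
def split_image_reference(reference):
    """Split 'repository:tag' into a (repository, tag) pair.

    A colon only counts as the tag separator when it comes after the last
    slash, so a registry port (as in 'localhost:5000/dwcheckapi') is left
    as part of the repository.
    """
    last_slash = reference.rfind("/")
    colon = reference.rfind(":")
    if colon > last_slash:
        return reference[:colon], reference[colon + 1:]
    # No tag supplied, so fall back to the default tag.
    return reference, "latest"


print(split_image_reference("microsoft/dotnet:2.1-sdk-alpine"))
print(split_image_reference("dwcheckapi"))
```

The first call gives the "microsoft/dotnet" repository with the "2.1-sdk-alpine" tag, and the second gives "dwcheckapi" with the "latest" tag.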

Back To Containers... kind of

Building an image and supplying it with a tag is pretty simple. Using dwCheckApi as an example, we could use the following command to tag our image as we build it:

docker build . -t dwcheckapi:latest

This command tells docker to build our dockerfile and tag the resulting image with "dwcheckapi:latest".

repository names for docker images can only use lowercase characters

We can check on our built images by issuing the following command:

docker image ls

Which might return something similar to the following:

REPOSITORY TAG IMAGE ID CREATED SIZE

dwcheckapi latest a879054aefb1 About an hour ago 168MB

microsoft/dotnet 2.1-sdk-alpine c751b3a7f4de 2 weeks ago 1.46GB

microsoft/dotnet 2.1-aspnetcore-runtime-alpine 4933ffee8f5b 2 weeks ago 163MB

The first line contains the image details for dwCheckApi

In the above listing there are three images:

- dwcheckapi:latest

- microsoft/dotnet:2.1-sdk-alpine

- microsoft/dotnet:2.1-aspnetcore-runtime-alpine

The first image is the one which will host and run dwCheckApi, but what are the others? They're the build image ("microsoft/dotnet:2.1-sdk-alpine") and the runtime image ("microsoft/dotnet:2.1-aspnetcore-runtime-alpine"). The first of these ("microsoft/dotnet:2.1-sdk-alpine") has only been used to build our application. The relevant lines from the dockerfile are:

FROM microsoft/dotnet:2.1-sdk-alpine AS build

# Set the working directory within the container

WORKDIR /src

# Copy all of the source files

COPY . .

# Restore all packages

RUN dotnet restore ./dwCheckApi/dwCheckApi.csproj

# Build the source code

RUN dotnet build ./dwCheckApi/dwCheckApi.csproj

# Ensure that we generate and migrate the database

WORKDIR ./dwCheckApi.Persistence

RUN dotnet ef database update

# Publish application

WORKDIR ..

RUN dotnet publish ./dwCheckApi/dwCheckApi.csproj --output "../../dist"

# Copy the created database

RUN cp ./dwCheckApi.Persistence/dwDatabase.db ./dist/dwDatabase.db

These are the lines which use the "microsoft/dotnet:2.1-sdk-alpine" image. After them, it's no longer needed, as we then define the runtime for the application and copy the published version of the application to it:

# Build runtime image

FROM microsoft/dotnet:2.1-aspnetcore-runtime-alpine AS app

WORKDIR /app

COPY --from=build /dist .

ENV ASPNETCORE_URLS http://+:5000

ENTRYPOINT ["dotnet", "dwCheckApi.dll"]

Because the section that we have named "app" is the last one in the dockerfile (and is where the final image's "entry point" is defined), "microsoft/dotnet:2.1-aspnetcore-runtime-alpine" and the files we copy from the "build" section are the only things included in the resulting image. "microsoft/dotnet:2.1-aspnetcore-runtime-alpine" weighs in at 163MB, and the published app, packages, and database for dwCheckApi take up a further 5MB of space. So the resulting image is 168MB, regardless of the fact that we had to use a 1.46GB image in order to build it. This means that our image takes up 168MB of disk space; how much RAM a running container needs depends on the application itself, not on the size of the image.
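The arithmetic is simple enough to write down. This Python sketch uses the sizes quoted above (rounded to whole megabytes); the constant names and the `final_image_size` helper are invented for illustration.

```python
# Sizes in MB, as quoted in the paragraph above.
SDK_IMAGE_MB = 1460      # microsoft/dotnet:2.1-sdk-alpine, used by the "build" section only
RUNTIME_IMAGE_MB = 163   # microsoft/dotnet:2.1-aspnetcore-runtime-alpine
PUBLISHED_OUTPUT_MB = 5  # app, packages and database copied from the "build" section


def final_image_size(base_mb, copied_mb):
    """Only the last section's base image and the files copied into it count."""
    return base_mb + copied_mb


size = final_image_size(RUNTIME_IMAGE_MB, PUBLISHED_OUTPUT_MB)
print(f"dwcheckapi:latest is roughly {size}MB")
print(f"that is {SDK_IMAGE_MB - size}MB smaller than the SDK image alone")
```

The SDK image never appears in the sum, which is why the build section can be as heavy as it likes without affecting what you ship.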

Back To Containers (Proper)

Tagged images can be run within a container with the following command:

docker run --rm -p 5000:5000 --name running-dwcheckapi-instance dwcheckapi:latest

This tells docker to create a container

which, you'll remember, is just a process on your machine

and runs the image within it. As part of the set up, docker can create a separate virtual network within docker app-space and host your container there. The great thing about this is that your container will not appear on the same network as containers attached to other docker networks - as far as those containers are concerned, they may as well be running on completely different machines.

An Example

Let's say that you had two applications:

- A UI

- An API

Let's also say, for simplicity's sake, that the API doesn't need access to the outside world. After containerising them and running them in docker, you could end up with something which looks like this:

From right to left, we have:

- The wider network (LAN / WAN / Internet)

- A firewall

- A server (showing cat images)

- A docker network (hosted on the server with the cat image)

- A UI container

- An API container

In this situation, we have a docker network with only one entry point (supplied by the UI container). This means that messages coming into the server can only reach the API container via the UI container - effectively locking it off from the outside world

there are situations where you _can_ access the API container - but they're pretty specific

You'll still need to use a reverse proxy on the server, but there are applications (most of which have been dockerised) which can deal with all of this for you, too.

Conclusion

This should be enough information for you to start understanding docker a little better. I've covered dockerfiles, images and containers. I've even touched on docker networks, a little. For more information on how docker works, I would take a look at the following links:
