After more than two years of monitoring hundreds of thousands of serverless backend components at Dashbird, we have found that serverless infrastructure failures boil down to:

- Throughput and concurrency limitations due to scalability mismatches;

- Increased latency and associated timeout errors;

- Inadequate management of resource allocation.

Isolated faults become causes of widespread failure due to dependencies in our cloud architectures (ref. Difference of Fault vs. Failure). If a serverless Lambda function relies on a database that is under stress, the entire API may start returning 5XX errors.

You may think this is just a fact of life, but we can dodge or at least mitigate these failures in many cases.

Serverless is not a silver bullet. These services have their limitations, especially in how quickly they can scale. AWS Lambda, for example, can only raise its concurrency by a certain amount per minute. Throw 10,000 concurrent requests at it out of thin air and it will throttle.

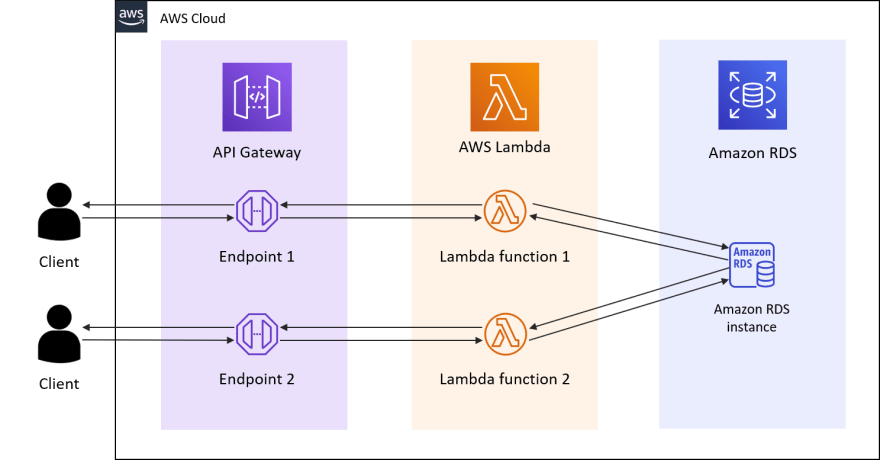

A typical architecture looks like this:

It usually works well at low scale. Under heavier load, a single component's fault can bring the whole implementation to its knees.

Consider this scenario: for market reasons, API Endpoint 1 starts receiving an unusually high number of requests. Your clients are generating more data and your backend needs to store it in the RDS instance. Relational databases usually don't scale linearly with I/O load, so we can expect query latency to increase during this peak in demand. At some point, API Endpoint 1 or Lambda function 1 will start timing out because of the database delays.
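A rough way to see why slower queries turn into timeouts and concurrency pressure: the number of executions in flight is approximately the request rate multiplied by the average duration. The sketch below uses made-up traffic numbers and a hypothetical 3-second function timeout; it is an illustration, not a model of any specific workload.

```python
def required_concurrency(requests_per_second: float, avg_duration_s: float) -> float:
    """Estimate in-flight executions as request rate x average duration."""
    return requests_per_second * avg_duration_s


# Illustrative numbers only, not measurements.
TIMEOUT_S = 3.0            # hypothetical function timeout
REQUESTS_PER_SECOND = 200  # hypothetical peak traffic

for db_latency_s in (0.5, 1.0, 2.0, 4.0):
    concurrency = required_concurrency(REQUESTS_PER_SECOND, db_latency_s)
    status = "times out" if db_latency_s >= TIMEOUT_S else "ok"
    print(f"latency={db_latency_s}s -> ~{concurrency:.0f} concurrent executions ({status})")
```

With the request rate held constant, every extra second of database latency adds the same number of concurrent executions, so a slow database can push a function toward its concurrency limit even before requests start timing out.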

Another possible fault scenario is throttling of Lambda function 1 due to a rapid increase in concurrency.

Not only will API Endpoint 1 become unavailable to clients, but so will the second endpoint. In the first scenario, Endpoint 2 also relies on the same RDS instance. In the second scenario, Lambda function 1 will consume the entire concurrency limit of your AWS account, causing Lambda function 2 to throttle requests as well.
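The second scenario can be illustrated with a deliberately simplified model of a shared concurrency pool. The function names, the 1,000-execution limit, and the first-come-first-served allocation are all assumptions made for this sketch; the real Lambda scheduler is more nuanced.

```python
def admit(demand: dict[str, int], account_limit: int) -> dict[str, dict[str, int]]:
    """Hand out a shared concurrency pool in arrival order.

    Simplification: whoever asks first gets capacity first.
    """
    remaining = account_limit
    result = {}
    for name, wanted in demand.items():
        granted = min(wanted, remaining)
        remaining -= granted
        result[name] = {"running": granted, "throttled": wanted - granted}
    return result


# Hypothetical numbers: a spike on function 1 exhausts the shared pool.
outcome = admit(
    {"lambda_function_1": 1200, "lambda_function_2": 50},
    account_limit=1000,
)
for name, counts in outcome.items():
    print(name, counts)
```

In this toy model, function 2 asks for very little but is throttled completely, simply because function 1 got to the pool first.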

We can avoid this by decoupling API Endpoint 1 from Lambda function 1. In the example, our clients are only sending information that needs to be stored; no processing or customized response is needed. Here is an alternative architecture:

Instead of sending requests directly from API Endpoint 1 to Lambda function 1, we first store all requests in a highly scalable SQS queue. The API can immediately return a 200 response to clients. Lambda function 1 will later pull messages from the queue at a rate that is manageable for its own concurrency limits and for the RDS instance's capabilities.
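On the consuming side, Lambda function 1 could look roughly like the sketch below. It assumes the function is triggered by an SQS event source with partial batch responses (`ReportBatchItemFailures`) enabled; `store` is a hypothetical callable standing in for the write to RDS, and the message bodies are assumed to be JSON. The rate of consumption itself is set on the trigger (batch size, concurrency settings), not in this code.

```python
import json
from typing import Any, Callable


def make_handler(store: Callable[[dict], None]):
    """Build a Lambda handler for an SQS-triggered function.

    `store` is a hypothetical function that writes one record to the database.
    """

    def handler(event: dict, context: Any) -> dict:
        failures = []
        for record in event.get("Records", []):
            try:
                store(json.loads(record["body"]))
            except Exception:
                # Report only this message as failed so that SQS redelivers it
                # instead of retrying the whole batch.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler


# Local usage with an in-memory list in place of the database.
saved: list[dict] = []
handler = make_handler(saved.append)
sample_event = {
    "Records": [
        {"messageId": "1", "body": '{"value": 42}'},
        {"messageId": "2", "body": "not json"},
    ]
}
result = handler(sample_event, None)
print(result)
```

Injecting `store` keeps the handler testable without a database connection. In a real deployment, messages that keep failing would typically be routed to a dead-letter queue rather than retried indefinitely.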

With this modification, the potential for widespread failure is minimized because the queue absorbs peaks in demand. SQS standard queues can handle nearly unlimited throughput. At the same time, all components serving Endpoint 2 can continue to work normally, since data consumption by Lambda function 1 is smoothed out.
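The smoothing effect can be shown with a small simulation. The arrival counts and the drain rate of 300 messages per tick are invented for illustration; the point is only that the consumer never processes more than its fixed rate, while the backlog rises during the spike and drains afterwards.

```python
def simulate_queue(arrivals_per_tick: list[int], drain_per_tick: int) -> list[int]:
    """Return the queue backlog after each tick for a fixed consumer rate."""
    backlog = 0
    history = []
    for arrived in arrivals_per_tick:
        backlog += arrived
        backlog -= min(backlog, drain_per_tick)  # consumer takes at most its rate
        history.append(backlog)
    return history


# Made-up traffic: a short spike followed by quiet periods.
backlog_history = simulate_queue([100, 900, 900, 100, 0, 0, 0, 0], drain_per_tick=300)
print(backlog_history)  # [0, 600, 1200, 1000, 700, 400, 100, 0]
```

The trade-off is latency: during the spike, messages wait in the queue for several ticks before they are stored, which is acceptable here only because clients do not need a processed response.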

This is a simplified example; there are several other aspects to consider in terms of potential failure points and architectural improvements. We hosted a webinar to cover these topics in much more depth, which you can watch here or below:
