A colleague shared this article with me a few days ago. Having read it (and many others like it before), I felt I needed to offer what I hope is a more balanced perspective on this age-old debate, based on my own experiences and learnings. That’s what I am going to attempt in this post.

Successful Startup != Microservices

The author links to a security audit of startup codebases and emphasises point number 2 of that article: all the successful startups kept their code simple and steered clear of microservices until they knew better.

I can see why. Microservices are an optimisation pattern an org may need to apply when it scales beyond a certain size. When you are a fledgling startup with limited funding and an uncertain future, microservices are the last thing you should be worried about (and preferably not at all). All the time and money at this stage should be spent on generating value and treating your employees well (the latter is not optional…ever).

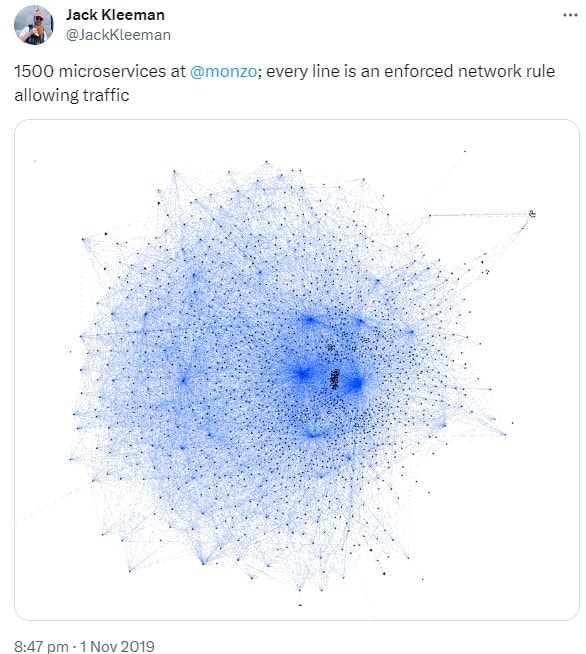

Here’s an example of a modern digital bank that went all in on microservices from the get-go. They boast about their 1500+ microservices, which a while ago drew some flak on Twitter. From my limited view of their context this looks like insanity, and though they touch on all the challenges that come with this kind of architecture, I can’t help but think that somewhere deep down they go, “Wish we hadn’t done this so soon!” But I am willing to give them the benefit of the doubt that they did their due diligence whilst evolving into a complex architecture and building a platform to support it.

Microservices Make Security Audit Harder

If I have 1500+ services, potentially written in different languages and spread across hundreds if not thousands of repositories, my job as a security auditor just got exponentially harder! The author even acknowledges this in point 7 of his list: monorepos are easier to audit. Monzo’s 1500+ Go services are in a monorepo, so that’s one down, I guess.

The attack surface also gets that much wider. Can you ensure all 1500+ of your microservices use a security-hardened platform and follow industry best practices in a standard way? Do you even know what those are? What about the dependencies (direct and transitive) each of those services takes on external code?

These are probably the most significant reasons for a security professional to gripe about microservices, but they point to a broader rule: the more you distribute, the more standardisation you need on the platform front. You don’t want to be reinventing the wheel, especially when it comes to security, so the more sensible defaults you can bake into the platform, the better, and the easier the system might be to audit.
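To make “bake sensible defaults into the platform” a little more concrete, here is a minimal sketch of a hypothetical shared platform helper that every service would use for outbound TLS, so hardening decisions are made once and audited once. The names `PlatformDefaults` and `outbound_tls_context` are illustrative, not from any real library, and the TLS 1.2 floor and 5-second timeout are assumptions rather than recommendations from the article. Only the standard library `ssl` module is used.

```python
import ssl
from dataclasses import dataclass


@dataclass(frozen=True)
class PlatformDefaults:
    """Hypothetical platform-wide settings; the values are illustrative."""
    min_tls_version: ssl.TLSVersion = ssl.TLSVersion.TLSv1_2
    request_timeout_seconds: float = 5.0


PLATFORM_DEFAULTS = PlatformDefaults()


def outbound_tls_context(defaults: PlatformDefaults = PLATFORM_DEFAULTS) -> ssl.SSLContext:
    """Build the TLS context every service should use for outbound calls.

    create_default_context() already enables certificate verification and
    hostname checking; the platform additionally pins a minimum TLS version.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = defaults.min_tls_version
    return ctx


if __name__ == "__main__":
    ctx = outbound_tls_context()
    print(ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname, ctx.minimum_version)
```

A service would then pass the context to its HTTP client, e.g. `urllib.request.urlopen(url, context=ctx, timeout=PLATFORM_DEFAULTS.request_timeout_seconds)`, and an auditor can review one module instead of hundreds of hand-rolled configurations.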

Do we really need microservices?

I agree with the author that in some cases there can be “a dogma attached to not starting out with microservices on day one – no matter the problem”. Just because someone else (usually a multi-billion-dollar organisation with a global footprint and tens of thousands of engineers; think FAANG) is doing microservices doesn’t mean my 5-person startup needs them too.

But I have to add some nuance here: an org doesn’t have to reach FAANG scale to realise it needs to rearchitect. If my org is growing in revenue, size and technology investment, then regularly asking the following kinds of questions is part of engineering due diligence:

- Is the current monolithic architecture with a shared database still the right thing to do?

- Are we facing challenges in some areas where our current architecture is impeding our value delivery? If so, what might be some ways to alleviate that pain?

- How much longer can this system keep growing at the same pace as the org and still be maintainable and agile?

Agile organisations and agile architectures are the ones that can evolve with time and need. The complexity of the architecture should be commensurate with the organisation’s growth rate and ambitions. No more, no less.

How do web cos grow into microservices?

None of the web cos evolved into microservices overnight; it was a long, arduous journey over decades (far longer than most employees’ tenure in an organisation, btw). Here’s eBay’s journey to microservices, here’s Netflix’s and here’s Amazon’s. In all cases you will notice that even though they are microservice behemoths today, they started thinking about it and doing the groundwork many years earlier, when they were much smaller. Amazon, for example, started their thinking back in 1998, a full 25 years ago, which ultimately resulted in the manifesto linked above.

This is a testament to the forward thinking and agility that helped them survive and succeed. Had they waited until they reached today’s scale (assuming they ever got there in the first place) to start decomposing their architecture for growth and evolution, they probably wouldn’t have made it.

So zealously touting “there is nothing wrong with monoliths” or “don’t do microservices” without justifying the arguments or clarifying the nuances is no different from wanting 1500+ microservices because someone else is doing it.

Look at where you are and where you want to be

It’s also true that many organisations are still monolithic-ish (from a technical point of view), for example Stack Overflow and Shopify, and there are probably more. But it’s not as if Stack Overflow will never entertain the possibility. They have multiple teams responsible for various parts of the site, so if they need to scale and increase fault tolerance for the areas owned by specific teams, they can always factor services out.

The article also cites Instagram and Threads, but what it omits is that Threads is built on top of Meta’s massive platform, a collection of largely reusable services. Can you imagine the complexity of building something like that from the ground up?

As an organisation, I can be pro-monolith and pro-large-shared-database, as long as I regularly and critically review my architecture for signs of trouble and am mature enough to evolve it into a better state.

Problems with Distribution

Here’s where I somewhat agree with the author, though I also think these problems are not unique to microservices:

Say goodbye to DRY

Somewhat yes, but mostly no! It depends on what is being duplicated and whether it can really be considered duplication. If it’s knowledge of a domain concept that’s being duplicated, then that’s bad and usually an indication of incorrect boundaries. If it’s a data contract represented on both the provider and consumer ends, that’s not really duplication.
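Here is a minimal sketch of a consumer-side data contract that avoids duplicating domain knowledge, assuming a JSON event and a consumer that follows the tolerant-reader approach: it declares only the fields it actually uses and ignores the rest. The event `OrderPlaced`, its fields and the function `parse_order_placed` are hypothetical.

```python
import json
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class OrderPlacedView:
    """This consumer's own view of a hypothetical OrderPlaced event.

    Only the fields this consumer needs are declared. The provider owns the
    full schema and the business rules behind it; the consumer just reads
    the contract, so this is not duplicated domain knowledge.
    """
    order_id: str
    total: Decimal


def parse_order_placed(raw: str) -> OrderPlacedView:
    payload = json.loads(raw)
    # Tolerant reader: pick out the known fields and ignore anything else,
    # so new fields added by the provider don't break this consumer.
    return OrderPlacedView(
        order_id=str(payload["order_id"]),
        total=Decimal(str(payload["total"])),
    )


if __name__ == "__main__":
    event = '{"order_id": "o-123", "total": "42.50", "currency": "GBP", "lines": []}'
    print(parse_order_placed(event))
```

If the provider adds a field, this consumer keeps working; if it renames `total`, the `KeyError` surfaces the contract break immediately. That kind of “duplication” is a deliberate boundary, not copied knowledge.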

This is also not a problem unique to service architectures. Given a sufficiently large monolithic codebase (depending on how well it’s modularised), I bet you can duplicate knowledge in a monolith as well, because in a rush to deliver, that’s just how engineers behave. Granted, duplication might be easier to spot and remedy when all the code is in one place rather than spread across multiple codebases, but that’s what you want to do even in a service architecture, i.e. combine logically related codebases to reduce knowledge duplication. Nothing about a many-service architecture stops you from combining services when you need to.

As a matter of fact, in my teams we’re simplifying our many-service architecture into a smaller set of carefully combined services. Note: services are not going anywhere; they are just getting a little less…micro. We are still working to decompose our shared monolithic e-commerce database by defining ownership boundaries around business capabilities.

When combining services is not really an option, creating packaged libraries for common functionality and publishing them to a central package registry for easier reuse is the next best thing.

Developer ergonomics will crater

Yep! For new joiners in a team, even with all the support, guidance and onboarding, getting to know the whole landscape can be quite daunting. And yes, over time you build a solid mental model and can find the exact line of code in the exact service on the critical path with a 2-minute GitHub search, but it can still take a long time before that happens.

Not to mention the time wasted just trying to get a rarely changed service running on an engineer’s machine, because people forget things they don’t look at, and the environment changes too.

But once again, a monolith doesn’t magically make this easier, especially a sufficiently large one. I would still need to make sure the configuration for every module is set up to bring the system up locally, whether or not I need to touch that part of the system. With separate services, you only pay that cost for the part you need to work on. Of course, a lot depends on how the monolith is designed.

Integration tests – LOL

Yeah, kind of! But I would challenge this by saying that meaningful, fast integration testing in any sizeable organisation (think 40 different domains and 500+ engineers) left the building long ago. Integration testing, though useful, shouldn’t be the only way we test our code, monolith or microservices, because unless you are building your own payment gateway or geocoding platform, even your monolith will have external dependencies, and once those are in the loop you can forget about reliable, fast integration testing.

I would hate to see your test code if the only tests you have are opaque integration tests with complicated dependency setup. How would one even reason about those tests? If I can’t reason about them, I would probably disable or remove them, or they’d get flaky over time, in which case I’d have even less confidence to deploy changes. Having said that, the more dependencies you have (e.g. with microservices), the harder integration testing becomes; but it is equally true that the more integration tests you have, the harder they can be to maintain.
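As an illustration of testing around an external dependency rather than through it, here is a minimal sketch assuming a hypothetical geocoding provider hidden behind a small interface. `Geocoder`, `nearest_store` and `FakeGeocoder` are made-up names. The business logic is exercised against an in-memory fake, so the test is fast, deterministic and easy to reason about; a handful of contract or integration tests against the real provider would still be needed separately.

```python
import math
from typing import Protocol


class Geocoder(Protocol):
    """The only thing our code needs from the (hypothetical) geocoding provider."""

    def geocode(self, address: str) -> tuple[float, float]:
        ...


def nearest_store(address: str, stores: dict[str, tuple[float, float]], geocoder: Geocoder) -> str:
    """Return the name of the store closest to the address.

    Uses straight-line distance on (lat, lon) pairs, which is fine for this
    sketch but is not a real geographic distance.
    """
    lat, lon = geocoder.geocode(address)
    return min(stores, key=lambda name: math.dist((lat, lon), stores[name]))


class FakeGeocoder:
    """In-memory stand-in used in tests instead of the real provider."""

    def __init__(self, known: dict[str, tuple[float, float]]) -> None:
        self.known = known

    def geocode(self, address: str) -> tuple[float, float]:
        return self.known[address]


if __name__ == "__main__":
    fake = FakeGeocoder({"1 High St": (51.50, -0.12)})
    stores = {"london": (51.51, -0.13), "leeds": (53.80, -1.55)}
    print(nearest_store("1 High St", stores, fake))  # prints "london"
```

The design choice is the narrow `Geocoder` protocol: the code depends on the one capability it uses, which is what makes swapping in a fake trivial.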

“observability” is the new “debugging in production”

Observability as a practice is not specific to microservices or monoliths. It’s just a sensible thing to do to get visibility into what the system is doing and how it is performing over time. It is essential for debugging production systems (mono or micro). You can’t step-through debug code in production (though I have done it in the past with Visual Studio’s Remote Debugging feature, and back then it wasn’t a nice experience). Even if you could debug that way, the problem may not always be reproducible, because the production environment is not 100% predictable. That’s why I rely on logs and metrics to observe system performance over time, to give my debugging a direction and to understand the system’s rhythm.
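To make “logs and metrics” a bit more concrete, here is a minimal, standard-library-only sketch that emits one structured (JSON) log line per operation with its duration and outcome, so the data can be aggregated over time rather than inspected one request at a time. The decorator `observed`, the logger name and the field names are assumptions; in practice you would more likely use your platform’s logging and metrics libraries (for example an OpenTelemetry SDK) than roll your own.

```python
import functools
import json
import logging
import time

logger = logging.getLogger("checkout")


def observed(operation: str):
    """Hypothetical decorator: log one JSON record per call with duration and outcome."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            outcome = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                outcome = "error"
                raise
            finally:
                # Runs on success and on failure, so errors are measured too.
                duration_ms = (time.perf_counter() - start) * 1000
                logger.info(json.dumps({
                    "operation": operation,
                    "outcome": outcome,
                    "duration_ms": round(duration_ms, 2),
                }))

        return wrapper

    return decorator


@observed("apply_discount")
def apply_discount(total: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return total * (1 - percent / 100)


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    apply_discount(80.0, 25)
```

Because every record has the same shape, a log pipeline can turn these lines into error rates and latency percentiles per operation, which is the “profile over time” that a single test run cannot give you.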

No integration test can give you the profile over time that good monitoring does, because integration tests are a snapshot in time. Production is where the software is really tested, so yes I do want good observability in order to understand my system and troubleshoot it effectively.

What about just “services”?

Services are about org design and business capabilities

Just because an org might not be planet scale doesn’t mean it can’t benefit from decomposing large systems into smaller ones to gain autonomy and resilience.

What an organisation should invest in is identifying how value flows through it and which people are empowered and equipped to make decisions, then drawing contextual boundaries around those groups. Creating a stable platform that minimises reinventing the wheel is also crucial as the org grows; otherwise the rework and grunt work repeated across multiple services alone will be a drag, and they will end up writing about how microservices failed them.

The Team Topologies book discusses this kind of org design, which lets you apply Conway’s Law deliberately rather than accidentally. Domain-Driven Design talks about bounded contexts: relatively autonomous zones within an organisation that are loosely coupled from both a functional and a technical perspective.

Focusing on the flow of value and on organisation design should result in sensibly sized services driven by domain boundaries instead of technical wet dreaming. Micro, nano, pico or…mega…is irrelevant: any change in service granularity will (or should) be triggered by changes to the business value flow the service delivers, so a service should be as big as it needs to be. Splitting services for the sake of it, or combining them for the sake of it (because you drank too much of the “nothing wrong with monolith” Kool-Aid), is ill-informed cargo-culting. That’s a surefire way to the madness the author is talking about.

How does one determine value flow and create better boundaries?

This needs its own post (or ten), so I will leave you with a cop-out list of buzzwords to consider:

- Value stream mapping

- Big Picture Event Storming

- Context Mapping

- Domain modeling

N.B. The initial boundaries you draw will probably be wrong, so be prepared to revisit and refactor them. You don’t want to stick with ineffective boundaries for too long.

In Closing…

- Many-service architecture (I am not calling it microservices anymore) is definitely a scaling and optimisation pattern that shouldn’t be applied haphazardly or lightly just because you think it puts you in the cool-kid category. It adds complexity because of its many moving parts, increases the number of failure modes to consider and might even hurt system performance.

- Pay attention to business capabilities and ownership boundaries (i.e. bounded contexts) by identifying the flow of value in the org.

- Create services that correspond to the bounded contexts, and be prepared to redraw the boundaries and rearchitect in both directions, that is:

  - If you do this due diligence, you can even start with a modular monolith and split it when actually needed, and

  - Armed with those insights, you can even combine multiple services into fewer to align better with the contextual boundaries.

- Sometimes a team reorganisation reallocates capabilities across portfolios. If you have a scruffy monolith, splitting out services to hand over might be harder than if you already had services.

- You cannot have a loosely coupled service architecture if you are still sharing the monolithic database. If you are carving services out of the monolith, take their data with them. Shared databases start out innocently enough when the org is small and simple, but they are like bear cubs: eventually they get bigger, scarier and teethier, and then they are no fun. Make breaking up the monolithic database part of your engineering strategy.

- The organisation needs to have, or be willing to build, a certain level of engineering maturity and leadership to successfully evolve towards a many-service architecture built on top of a stable platform.

- A thoughtlessly designed monolith is just as bad as thoughtlessly designed microservices.
