One of the most difficult parts of starting a job as a Data Scientist is building the environment. Most Data Scientists do not know, or do not care, how the underlying infrastructure that hosts the cloud resources and services they need (Databricks workspaces, clusters, storage, and so on) is built.

That means somebody else has to build the initial environment for them, or they are forced to learn how to create it themselves.

The first reaction is to build it manually using whatever portal the cloud provider has. But creating these complex environments manually leads to future problems. One misconfiguration could lead to a cascade of errors that ultimately would impact the results you are expecting from analyzing the data you are ingesting.

Initially it would take weeks or sometimes even months before you could get a properly configured and ready to use environment where you could ingest, analyze, and visualize the information you want.

It takes multiple iterations to get to that point and in my experience once the initial environment is created, nobody wants to touch it for fear of breaking it, especially if you are doing it manually.

The goal here is to be able to reproduce these environments at any stage of the development cycle. We want that level of consistency, so you never hear that bugs in production cannot be reproduced in Dev/Test. And let's not forget about efficiency: these complex environments are not cheap. They usually involve multiple cloud resources which, if left idle, can easily cost you a lot!

So, there is a big opportunity here to help Data Scientists do their work faster and apply some best practices along the way.

My Workflow

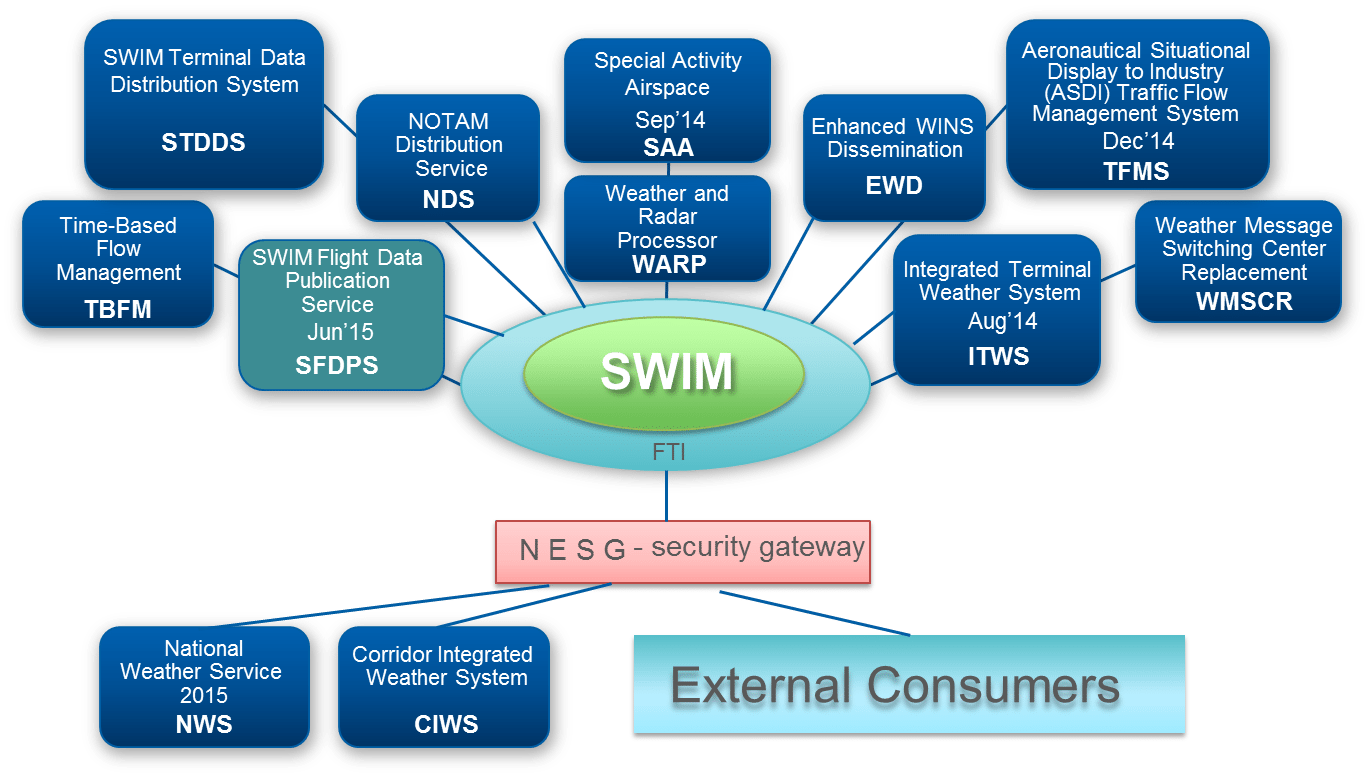

We are going to use the System Wide Information Management (SWIM) Cloud Distribution Service (SCDS), a Federal Aviation Administration (FAA) cloud-based service that provides publicly available FAA SWIM content to FAA-approved consumers via Solace JMS messaging.

This project gives us a clean Data Analytics environment connected to the SWIM program, which allows us to analyze flight data in almost real time.

This project shows how easy it is to build Data Analytics environments using Chef Infra, Chef InSpec, Terraform, GitHub and the brand new Databricks resource provider.

Everything is done using Chef Manage, GitHub Actions, Terraform Cloud and GitHub Codespaces, so our whole development environment lives in the cloud and we do not have to manage any of it.

We focused on automating these 3 categories:

- Infrastructure: all the initial networking, subnets, security groups, VMs, the Databricks workspace, storage, everything.

- Kafka: the post-provisioning configuration. This includes installing and configuring Kafka along with its software requirements, the SWIM configuration required to access specific data sources like TFMS (that is, having the right configuration files in the right place), and the Solace connector that ingests the data, together with its required dependencies.

- Databricks: cluster creation and configuration, required libraries like spark-xml to parse and analyze the data, and the import of the initial notebooks.

Submission Category

DIY Deployments

Maintainer Must-Haves

Yaml File or Link to Code


Reusable Workflows

Performs static code analysis on a given repository using tflint.

A wrapper workflow for Terraform that creates Azure cloud and other resources using the terraform flow.
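As a rough illustration of what such reusable workflows can look like, here is a minimal sketch that folds the tflint check and the Terraform flow into a single file for brevity. It is not the code from the repository: the file name, the `working_directory` input, the `tf_api_token` secret and the branch condition are all assumptions made for the example.

```yaml
# Hypothetical file: .github/workflows/terraform-flow.yml
# Sketch only; input, secret and job names are assumptions.
name: terraform-flow

on:
  workflow_call:
    inputs:
      working_directory:          # assumed input name
        description: Folder that holds the Terraform configuration
        required: true
        type: string
    secrets:
      tf_api_token:               # assumed secret name (Terraform Cloud API token)
        required: true

jobs:
  lint:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: terraform-linters/setup-tflint@v1
      - name: Run tflint
        working-directory: ${{ inputs.working_directory }}
        run: tflint

  terraform:
    needs: lint                   # only run Terraform once the lint job passes
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v2
      - uses: hashicorp/setup-terraform@v1
        with:
          cli_config_credentials_token: ${{ secrets.tf_api_token }}
      - name: Init
        working-directory: ${{ inputs.working_directory }}
        run: terraform init
      - name: Validate
        working-directory: ${{ inputs.working_directory }}
        run: terraform validate -no-color
      - name: Plan
        working-directory: ${{ inputs.working_directory }}
        run: terraform plan -no-color
      - name: Apply
        # Assumption: only apply from the main branch.
        if: github.ref == 'refs/heads/main'
        working-directory: ${{ inputs.working_directory }}
        run: terraform apply -auto-approve
```

A main workflow could then call it with a `uses:` job, for example (again with hypothetical names):

```yaml
jobs:
  azure:
    uses: ./.github/workflows/terraform-flow.yml
    with:
      working_directory: Infrastructure/terraform-azure
    secrets:
      tf_api_token: ${{ secrets.TF_API_TOKEN }}
```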


Main Workflows:

- terraform-azure.yml

Deploys all the required Azure infrastructure: networking, security groups, subnets, a Kafka VM, a Databricks workspace, a cluster and a test notebook.

- chef-kafka.yml

Does all the post-provisioning configuration of the Kafka VM using Chef so it can connect to SWIM and ingest all messages in real time.
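To give an idea of the Chef side, here is a minimal sketch of what a workflow along the lines of chef-kafka.yml might contain. It is an assumption-heavy illustration, not the repository's workflow: it supposes that `actionshub/chef-install` installs Chef Workstation by default (which bundles `knife` and `cookstyle`), that the knife configuration lives in `Chef/.chef`, and that the client key is stored in a hypothetical `CHEF_CLIENT_KEY` secret.

```yaml
# Sketch only; trigger, secret name and key path are assumptions.
name: chef-kafka

on:
  push:
    branches: [main]
    paths:
      - "Chef/cookbooks/**"

jobs:
  cookbooks:
    runs-on: ubuntu-latest
    env:
      # Accept the Chef license without writing it to disk.
      CHEF_LICENSE: accept-no-persist
    steps:
      - uses: actions/checkout@v2
      - uses: actionshub/chef-install@v2
      - name: Lint cookbooks
        run: cookstyle Chef/cookbooks
      - name: Write the knife client key
        working-directory: Chef
        # Hypothetical key file; it must match what the knife config expects.
        run: printf '%s\n' "$CHEF_CLIENT_KEY" > .chef/client.pem
        env:
          CHEF_CLIENT_KEY: ${{ secrets.CHEF_CLIENT_KEY }}
      - name: Upload cookbooks to the Chef server
        working-directory: Chef
        run: knife cookbook upload --all -o cookbooks
```

Once the cookbooks are on the Chef server, the Kafka VM can pick them up on its next Chef client run.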

Here is the official repository:

Chambras/DataAnalyticsEnvironments


Automated real time streaming ingestion using Kafka and Databricks

Automating Data Analytics Environments

This demo project shows how to integrate Chef Infra, Chef InSpec, Test Kitchen, Terraform, Terraform Cloud, and GitHub Actions in order to fully automate and create Data Analytics environments.

This specific demo uses FAA's System Wide Information Management (SWIM) and connects to TFMS (Traffic Flow Management System) using a Kafka server. More information about SWIM and TFMS can be found here.

It also uses a Databricks cluster in order to analyze the data.

Project Structure

This project has the following folders, which are easy to reuse, add or remove.

├── .devcontainer
├── .github
│   └── workflows
├── LICENSE
├── README.md
├── Chef
│   ├── .chef
│   ├── cookbooks
│   └── data_bags
├── Infrastructure
│   ├── terraform-azure
│   └── terraform-databricks
└── Notebooks

SWIM Architecture

Architecture

This is the architecture of the project, which is basically a publish and subscribe architecture.

CI/CD pipeline Architecture

…

Additional Resources / Info

Existing Actions that have been used in the workflows:

- actions/checkout@v2

- actions/cache@v2

- actions/github-script@v5

- hashicorp/setup-terraform@v1

- terraform-linters/setup-tflint@v1

- actionshub/chef-install@v2

My open source reusable workflows:
