Recently, I integrated Amazon Bedrock Knowledge Base as the RAG (Retrieval-Augmented Generation) backend of a chatbot and added a feature that suggests several reply options for the user's latest message. This article shares the techniques I used to improve suggestion accuracy.

You might think that GPT or Claude could simply tell you all of this, but some of it postdates those models' training cutoffs, so I hope this article is useful.

The complete code is in the "Implementation Example: Bedrock Knowledge Base Service and Client" section. If that's all you're interested in, feel free to skip ahead (examples are in TypeScript).

Also, if you have better approaches or other suggestions, I'd appreciate your comments as they help me learn!

1. Selecting Foundation Models as a Parser (FMP) When Creating a Knowledge Base

When I created the knowledge base with S3 as the data source, I selected Foundation Models as a Parser (FMP) as the parsing strategy so that content exported from Notion (Markdown and images) would be indexed accurately.

This is effective when handling documents with screenshots or diagrams, as text information within images is also indexed and becomes searchable.
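If the data source is created through the API rather than the console, the parser choice corresponds to the parsingConfiguration block inside vectorIngestionConfiguration. The following is a minimal sketch of that fragment only. The helper name buildParsingConfiguration and the ARN are placeholders for illustration, and the type is declared locally so the snippet stands alone.

```typescript
// Locally declared shape mirroring the parsing part of a data source definition.
type ParsingConfiguration = {
  parsingStrategy: 'BEDROCK_FOUNDATION_MODEL'
  bedrockFoundationModelConfiguration: {
    modelArn: string
    parsingPrompt?: { parsingPromptText: string }
  }
}

// Hypothetical helper: builds the fragment that selects a foundation model as the parser.
// The optional prompt is only included when one is supplied.
function buildParsingConfiguration(modelArn: string, parsingPromptText?: string): ParsingConfiguration {
  return {
    parsingStrategy: 'BEDROCK_FOUNDATION_MODEL',
    bedrockFoundationModelConfiguration: {
      modelArn,
      ...(parsingPromptText ? { parsingPrompt: { parsingPromptText } } : {})
    }
  }
}

const parsingConfiguration = buildParsingConfiguration(
  'arn:aws:bedrock:<region>::foundation-model/<parser-model-id>' // placeholder ARN
)
console.log(JSON.stringify({ vectorIngestionConfiguration: { parsingConfiguration } }, null, 2))
```

Keeping the fragment in a small builder makes it easy to switch parser models or add a custom parsing prompt later without touching the rest of the data source definition.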

2. Optimizing the Number of Search Results

With OpenSearch Serverless as the knowledge base's vector store, I found that the default number of search results (5) sometimes didn't retrieve enough information to answer a query.

Raising the numberOfResults parameter in vectorSearchConfiguration from the default of 5 to 10 reduced the rate of failed responses.

    retrievalConfiguration: {
      vectorSearchConfiguration: {
        numberOfResults: 10, // Set vector search results to 10 to reduce answer failure rate
      }
    }

Increasing the number of search results lengthened the response time slightly (by about 1 second in my case), but the improved answer quality and lower failure rate outweighed that cost. Depending on your use case, a value between 5 and 20 is a reasonable range to experiment with.
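To make this value easy to tune without a code change, it can be read from configuration and clamped to the range above. This is a small sketch under that assumption. The helper name resolveNumberOfResults is hypothetical, and the 5 to 20 bounds simply mirror the range suggested in this section.

```typescript
// Hypothetical helper: parses a tuning value (for example from an environment
// variable) and keeps it inside the 5-20 range discussed above.
function resolveNumberOfResults(raw: string | undefined, fallback = 10): number {
  const parsed = Number.parseInt(raw ?? '', 10)
  if (Number.isNaN(parsed)) return fallback // missing or non-numeric input
  return Math.min(20, Math.max(5, parsed))
}

const tunedRetrievalConfiguration = {
  vectorSearchConfiguration: {
    numberOfResults: resolveNumberOfResults('15')
  }
}
console.log(tunedRetrievalConfiguration.vectorSearchConfiguration.numberOfResults)
```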

3. Utilizing Query Decomposition

For complex questions or queries containing multiple elements, Bedrock's query decomposition feature can improve search accuracy.

    orchestrationConfiguration: {
      queryTransformationConfiguration: {
        type: 'QUERY_DECOMPOSITION' as const
      }
    }

When query decomposition is enabled, composite questions like "What's the difference between A and B?" are broken down into sub-queries such as "What is A?", "What is B?", and "What are the differences between A and B?", with searches performed for each separately. This allows for retrieving more relevant information.

Note: After enabling query decomposition, the response time increased by about 5 seconds. For applications that need real-time responses, weigh that cost carefully before turning this feature on.
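One way to manage that trade-off is to enable decomposition only on code paths that can absorb the extra latency. The sketch below assumes the roughly 5 second overhead measured above. The helper name orchestrationFor, the constant, and the knowledge base ID are illustrative placeholders.

```typescript
type OrchestrationConfiguration = {
  queryTransformationConfiguration: { type: 'QUERY_DECOMPOSITION' }
}

// Assumed overhead, taken from the ~5 seconds observed above; tune for your workload.
const DECOMPOSITION_OVERHEAD_MS = 5000

// Hypothetical helper: returns the orchestration block only when the caller can
// tolerate the extra latency, and an empty object otherwise.
function orchestrationFor(extraLatencyBudgetMs: number): { orchestrationConfiguration?: OrchestrationConfiguration } {
  if (extraLatencyBudgetMs < DECOMPOSITION_OVERHEAD_MS) return {}
  return {
    orchestrationConfiguration: {
      queryTransformationConfiguration: { type: 'QUERY_DECOMPOSITION' }
    }
  }
}

// Spread the result into the knowledge base configuration.
const interactiveConfig = { knowledgeBaseId: 'kb-id-placeholder', ...orchestrationFor(3000) }
const backgroundConfig = { knowledgeBaseId: 'kb-id-placeholder', ...orchestrationFor(15000) }
console.log('orchestrationConfiguration' in interactiveConfig) // false
console.log('orchestrationConfiguration' in backgroundConfig) // true
```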

4. Dynamically Including Conversation History in System Prompts

By setting textPromptTemplate (under promptTemplate in generationConfiguration), you can build the system prompt dynamically, which makes conversations more natural. Including the conversation history in particular lets the model generate context-aware responses.

The following code dynamically includes conversation history (excluding the latest user message) in the system prompt:

    private buildPrompt(chats: Array<ChatEntity>): { systemPrompt: string; userPrompt: string } {
      // Find the most recent visitor message by searching from the end
      const latestVisitorChatIndex = [...chats].reverse().findIndex((chat) => chat.sender === 'visitor')
      if (latestVisitorChatIndex === -1) {
        throw new BadRequestException('Could not find the latest message; AI cannot generate response suggestions')
      }

      const latestVisitorChat = chats[chats.length - 1 - latestVisitorChatIndex]
      // Everything before the latest visitor message becomes the history
      const previousChats = chats.slice(0, chats.length - 1 - latestVisitorChatIndex)
      const formattedHistory =
        previousChats.length > 0
          ? previousChats.map((chat) => `${chat.sender}: ${chat.chat ?? ''}`).join('\n')
          : 'No previous conversation history available.'

      // $search_results$ and $query$ are filled in by Bedrock; ${formattedHistory} is interpolated here
      const systemPrompt = `
    Human: You are a question answering agent. I will provide you with a set of search results and a user's question.
    Your job is to answer the user's question using only information from the search results.
    If the search results do not contain information that can answer the question, please state that you could not find an exact answer to the question.
    Do not include any explanations, introductions, or additional text before or after the JSON output.
    Your task is to respond to potential customers in a polite and professional manner that builds trust.
    Each response should be concise (up to 3 lines).
    Always include a word of gratitude or empathy in response to the customer's statement.

    Here are the search results in numbered order:
    $search_results$

    Here is the conversation history:
    ${formattedHistory}

    Here is the user's question:
    $query$

    Generate the response in Japanese language.
    Ensure the response follows this exact JSON format:
    {"replies":["Reply1","Reply2","Reply3"]}

    Assistant:
    `.trim()

      return {
        systemPrompt,
        userPrompt: latestVisitorChat.chat ?? ''
      }
    }

The buildPrompt function creates a system prompt (systemPrompt) and user prompt (userPrompt) to pass to the bedrockKnowledgeBaseService.

    const { systemPrompt, userPrompt } = this.buildPrompt(chats)
    const llmResponse = await this.bedrockKnowledgeBaseService.queryKnowledgeBase(systemPrompt, userPrompt)
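Because the prompt asks for a fixed JSON shape, the returned text still has to be parsed before the suggestions can be shown, and the model may occasionally wrap the JSON in extra text or return something else entirely. The following is a defensive parsing sketch. The helper name parseReplies and its choice to fall back to an empty array are assumptions for illustration.

```typescript
// Hypothetical helper: extracts the "replies" array from the model's text output.
// Returns an empty array instead of throwing when the text is not the expected JSON.
function parseReplies(llmResponse: string): string[] {
  // Tolerate stray text around the JSON by taking the outermost {...} span.
  const start = llmResponse.indexOf('{')
  const end = llmResponse.lastIndexOf('}')
  if (start === -1 || end <= start) return []
  try {
    const parsed: unknown = JSON.parse(llmResponse.slice(start, end + 1))
    if (typeof parsed !== 'object' || parsed === null) return []
    const replies = (parsed as { replies?: unknown }).replies
    if (!Array.isArray(replies)) return []
    // Keep only non-empty strings.
    return replies.filter((reply): reply is string => typeof reply === 'string' && reply.trim() !== '')
  } catch {
    return []
  }
}

console.log(parseReplies('{"replies":["Reply1","Reply2","Reply3"]}'))
```

Returning an empty array lets the caller decide whether to retry, show a fallback message, or hide the suggestion UI.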

Note: When you supply a custom prompt template that omits the $output_format_instructions$ placeholder, Bedrock's default output-format instructions are no longer applied, so the response will not include citations (reference information). If you need citations, you must include $output_format_instructions$ in your prompt, but then fully customizing the response format becomes difficult.

At the moment it's hard to get both a custom format (such as JSON) and citations from Bedrock at the same time, so decide which matters more for your requirements.

Reference: "Knowledge base prompt templates: orchestration & generation" (Amazon Bedrock User Guide)
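If citations take priority and $output_format_instructions$ stays in the template, the RetrieveAndGenerate response carries a citations array whose retrievedReferences point back to the source documents. The sketch below collects the distinct source URIs, assuming an S3 data source as in this article. The Citation type is a locally declared subset of the response shape, and extractSourceUris is a hypothetical helper name.

```typescript
// Locally declared subset of the RetrieveAndGenerate response shape.
type Citation = {
  retrievedReferences?: Array<{
    location?: { s3Location?: { uri?: string } }
  }>
}

// Hypothetical helper: collects the distinct S3 URIs cited in a response.
function extractSourceUris(citations: Citation[] | undefined): string[] {
  const uris = new Set<string>()
  for (const citation of citations ?? []) {
    for (const reference of citation.retrievedReferences ?? []) {
      const uri = reference.location?.s3Location?.uri
      if (uri) uris.add(uri)
    }
  }
  return Array.from(uris)
}

// Made-up sample data for illustration only.
const sampleCitations: Citation[] = [
  { retrievedReferences: [{ location: { s3Location: { uri: 's3://example-bucket/faq.md' } } }] },
  {
    retrievedReferences: [
      { location: { s3Location: { uri: 's3://example-bucket/faq.md' } } },
      { location: { s3Location: { uri: 's3://example-bucket/pricing.md' } } }
    ]
  }
]
console.log(extractSourceUris(sampleCitations))
```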

5. Hybridizing Keyword Search and Vector Search

Bedrock Knowledge Base allows for hybrid search combining vector search and keyword search.

    retrievalConfiguration: {
      vectorSearchConfiguration: {
        numberOfResults: 10,
        overrideSearchType: 'HYBRID' as const
      }
    }

Vector search excels at semantic similarity, while keyword search is strong in exact keyword matching. Combining both increases the likelihood of extracting more relevant information.

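If overrideSearchType is omitted, Bedrock decides the search strategy for you. I've kept that default for now; set overrideSearchType to 'HYBRID', as above, only when you want to force hybrid search.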

Reference: KnowledgeBaseVectorSearchConfiguration (Amazon Bedrock API Reference)
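To switch between Bedrock's default strategy and a forced one without editing the configuration object by hand, the override can be made optional. This is a minimal sketch: buildVectorSearchConfiguration is a hypothetical helper, and the types are declared locally rather than imported from the SDK.

```typescript
type SearchType = 'HYBRID' | 'SEMANTIC'

type VectorSearchConfiguration = {
  numberOfResults: number
  overrideSearchType?: SearchType
}

// Hypothetical helper: adds overrideSearchType only when a strategy is given,
// so passing nothing leaves the choice to Bedrock.
function buildVectorSearchConfiguration(numberOfResults: number, searchType?: SearchType): VectorSearchConfiguration {
  return searchType ? { numberOfResults, overrideSearchType: searchType } : { numberOfResults }
}

const defaultSearch = buildVectorSearchConfiguration(10)
const forcedHybridSearch = buildVectorSearchConfiguration(10, 'HYBRID')
console.log(defaultSearch, forcedHybridSearch)
```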

6. Reranking (Unverified)

I haven't tested this due to cost concerns, but reranking search results can rearrange them by relevance. Reranking uses a dedicated reranker model, meaning you'll be using a different model alongside Claude.

    retrievalConfiguration: {
      vectorSearchConfiguration: {
        numberOfResults: 10,
        overrideSearchType: 'HYBRID' as const,
        // rerankingConfiguration belongs inside vectorSearchConfiguration (untested)
        rerankingConfiguration: {
          type: 'BEDROCK_RERANKING_MODEL' as const,
          bedrockRerankingConfiguration: {
            modelConfiguration: {
              // Placeholder: substitute your region and a reranker model ID available there
              modelArn: 'arn:aws:bedrock:<region>::foundation-model/<reranker-model-id>'
            },
            numberOfRerankedResults: 5 // Number of results to return after reranking
          }
        }
      }
    }

Reranking more precisely reevaluates the relevance of search results to the query. While vector search and keyword search find rough relevance, the reranker model understands and evaluates the semantic relationship between the query and documents more deeply.

Note: Using reranking increases cost and processing time. However, narrowing the results down to the most relevant ones trims the input passed to the LLM, which may improve answer accuracy. I haven't tried this yet but am considering it as a future improvement.

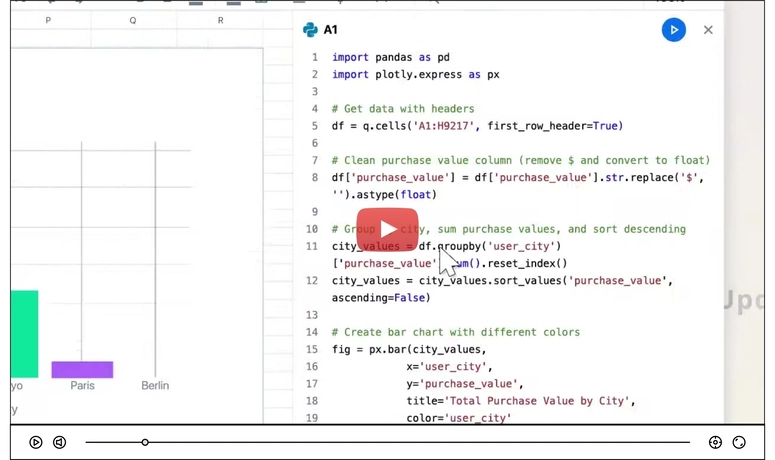
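To make the idea concrete, here is a conceptual illustration of the retrieve-then-rerank pattern. It is not Bedrock's reranker: the toy term-overlap scorer below is an assumption that stands in for the dedicated reranker model, and the sample candidates are made up.

```typescript
type Candidate = { text: string; retrievalScore: number }

// Toy relevance scorer (a stand-in for a reranker model): fraction of query terms found in the text.
function overlapScore(query: string, text: string): number {
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean)
  if (terms.length === 0) return 0
  const haystack = text.toLowerCase()
  return terms.filter((term) => haystack.includes(term)).length / terms.length
}

// Re-scores every candidate against the query, sorts by the new score, and keeps the top K.
function rerank(query: string, candidates: Candidate[], topK: number): Candidate[] {
  return candidates
    .map((candidate) => ({ candidate, score: overlapScore(query, candidate.text) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topK)
    .map(({ candidate }) => candidate)
}

// The first-stage retrieval order (by retrievalScore) differs from the reranked order.
const retrievedCandidates: Candidate[] = [
  { text: 'Office hours and holiday schedule', retrievalScore: 0.82 },
  { text: 'How to reset your account password', retrievalScore: 0.79 },
  { text: 'Password policy for new accounts', retrievalScore: 0.75 }
]
console.log(rerank('reset password', retrievedCandidates, 2).map((c) => c.text))
```

The first-stage search casts a wide net (10 results in the configuration above), and the second stage keeps only the few that score best against the query.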

Implementation Example: Bedrock Knowledge Base Service and Client

Here's a TypeScript implementation example applying the optimizations mentioned above:

Bedrock Knowledge Base Service

    import { Injectable, InternalServerErrorException } from '@nestjs/common'
    import { RetrieveAndGenerateCommandInput } from '@aws-sdk/client-bedrock-agent-runtime'
    // Local import; the file path is an assumption
    import { BedrockKnowledgeBaseClient } from './bedrock-knowledge-base.client'

    @Injectable()
    export class BedrockKnowledgeBaseService {
      constructor(private readonly bedrockKnowledgeBaseClient: BedrockKnowledgeBaseClient) {}

      /**
       * Query LLM (Claude) based on the knowledge base
       * @param systemPrompt System prompt
       * @param userPrompt User's query
       * @returns AI response text
       */
      async queryKnowledgeBase(systemPrompt: string, userPrompt: string): Promise<string> {
        try {
          const ragConfig = {
            type: 'KNOWLEDGE_BASE' as const,
            knowledgeBaseConfiguration: {
              knowledgeBaseId: process.env.BEDROCK_KNOWLEDGE_BASE_ID,
              modelArn: process.env.BEDROCK_KNOWLEDGE_BASE_MODEL_ARN,
              orchestrationConfiguration: {
                queryTransformationConfiguration: { type: 'QUERY_DECOMPOSITION' as const }
              },
              retrievalConfiguration: {
                vectorSearchConfiguration: {
                  numberOfResults: 10,
                  overrideSearchType: 'HYBRID' as const
                }
              },
              generationConfiguration: {
                promptTemplate: {
                  textPromptTemplate: systemPrompt
                },
                inferenceConfig: {
                  textInferenceConfig: {
                    maxTokens: 500,
                    temperature: 0.2,
                    topP: 0.5
                  }
                },
                // textInferenceConfig has no topK field; model-specific parameters
                // such as Claude's top_k go in additionalModelRequestFields
                additionalModelRequestFields: { top_k: 10 }
              }
            }
          }

          const input: RetrieveAndGenerateCommandInput = {
            input: { text: userPrompt },
            retrieveAndGenerateConfiguration: ragConfig
          }

          const response = await this.bedrockKnowledgeBaseClient.sendQuery(input)
          if (!response) {
            throw new InternalServerErrorException('Failed to get a valid response from Bedrock.')
          }
          return response
        } catch (error) {
          console.error('Error querying Bedrock API:', error)
          throw new InternalServerErrorException('Failed to get response from Bedrock.')
        }
      }
    }

Bedrock Knowledge Base Client

    import { Injectable, InternalServerErrorException } from '@nestjs/common'
    import {
      BedrockAgentRuntimeClient,
      RetrieveAndGenerateCommand,
      RetrieveAndGenerateCommandInput
    } from '@aws-sdk/client-bedrock-agent-runtime'

    @Injectable()
    export class BedrockKnowledgeBaseClient {
      private readonly client: BedrockAgentRuntimeClient

      constructor() {
        this.client = new BedrockAgentRuntimeClient({
          region: process.env.REGION
        })
      }

      /**
       * Query Bedrock knowledge base
       * @param input RetrieveAndGenerateCommandInput
       * @returns AI response
       */
      async sendQuery(input: RetrieveAndGenerateCommandInput): Promise<string> {
        try {
          const command = new RetrieveAndGenerateCommand(input)
          const response = await this.client.send(command)
          // The generated text is returned in output.text
          if (!response.output?.text) {
            throw new InternalServerErrorException('Failed to get a valid response from Bedrock.')
          }
          return response.output.text
        } catch (error) {
          console.error('Error querying Bedrock API:', error)
          throw new InternalServerErrorException('Failed to get response from Bedrock.')
        }
      }
    }

Future Considerations

I looked for a way to drop low-relevance results by setting a search score threshold, but Bedrock Knowledge Base doesn't seem to offer one (please correct me if I'm wrong).
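One possible workaround, not something tested here, is to call the Retrieve API instead of RetrieveAndGenerate, filter the returned results by their score on the client, and pass the remaining chunks to the model yourself. The sketch below covers only the filtering step. RetrievalResult is a locally declared subset of the result shape, filterByScore is a hypothetical helper, and the 0.5 threshold is arbitrary because score ranges depend on the search type and vector store.

```typescript
// Locally declared subset of a retrieval result.
type RetrievalResult = { content: { text: string }; score?: number }

// Hypothetical helper: keeps results whose score meets the threshold.
// Results without a score are dropped.
function filterByScore(results: RetrievalResult[], minScore: number): RetrievalResult[] {
  return results.filter((result) => result.score !== undefined && result.score >= minScore)
}

// Made-up sample data for illustration only.
const sampleResults: RetrievalResult[] = [
  { content: { text: 'Highly relevant chunk' }, score: 0.81 },
  { content: { text: 'Borderline chunk' }, score: 0.5 },
  { content: { text: 'Off-topic chunk' }, score: 0.22 },
  { content: { text: 'Chunk without a score' } }
]
console.log(filterByScore(sampleResults, 0.5).map((r) => r.content.text))
```

The cost of this approach is that prompt assembly and generation move into your own code, so it trades the convenience of RetrieveAndGenerate for control over what reaches the model.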

As mentioned above, query decomposition significantly increased latency (responses now take over 10 seconds), so I'm weighing solutions. Options include calling Bedrock in the N. Virginia region (us-east-1) with a faster model such as Claude 3.5 Haiku and accepting the cross-region overhead, or importing an open-source model such as Llama through Custom Model Import. The limited selection of models in the Tokyo region is the main constraint at the moment.

Also, since our knowledge base data is centralized in Notion and we're currently manually exporting markdown and PNG files, I'd like to build a system that automatically retrieves data using the Notion API to reduce this manual effort.

Currently, we're only doing online evaluation, so I'd also like to implement offline automatic evaluation (LLM as a Judge). There are many tasks ahead!
