In Part 1, we talked about the origins of the Site-to-Site VPN service in AWS. As customers began to scale in the early days, they faced tunnel sprawl, performance constraints, and the need for a simplified design. AWS responded with Transit Gateway. How did Transit Gateway simplify the architecture, leading to smoother operations, better network performance, and a scalable blueprint for the future network?

Pre Transit Gateway

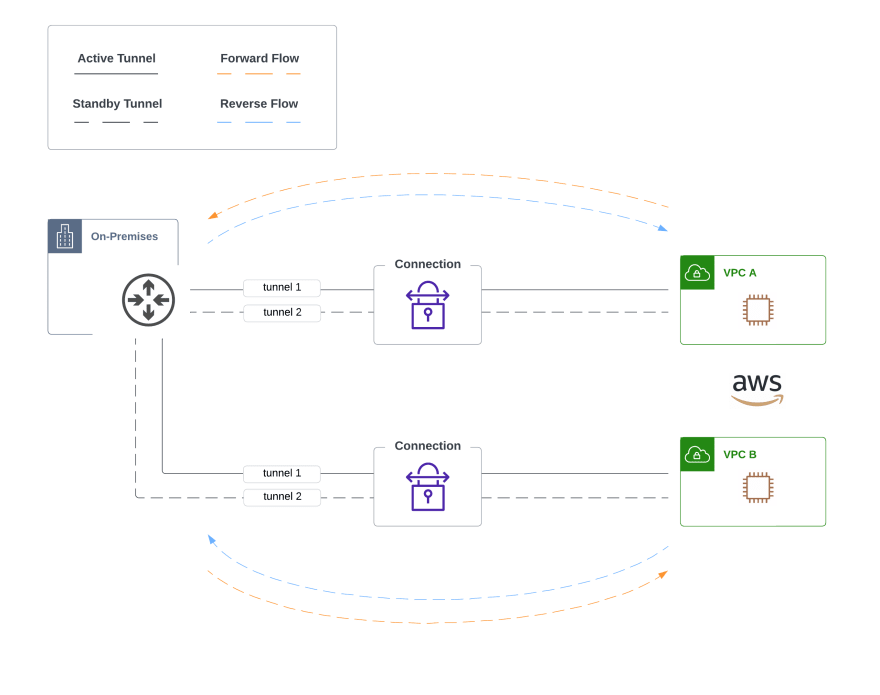

Security teams in the early days would often balk at the idea of using VPC peering without a centralized transit hub (where the hybrid connectivity landed). Since a VPC couldn't do any advanced packet forwarding natively, many designs would do transitive routing on the customer gateway. Traffic patterns looked something like this:

Observations

In this design, the tunnels run from the customer gateway all the way to each VPC. With this one-to-one relationship, there is no intelligent way to manage the complexity of incremental connections as you grow. Traffic also exits AWS even when the destination is another VPC, which is inefficient. And since only one tunnel actively forwards traffic at a time, you are limited to ~1.25 Gbps.
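To put a number on the sprawl, here is a rough back-of-the-envelope sketch. It assumes a single customer gateway and the standard two tunnels per VPN connection; the VPC counts are only illustrative, and the function names are mine.

```python
TUNNELS_PER_VPN_CONNECTION = 2  # each Site-to-Site VPN connection has two tunnels
TUNNEL_GBPS = 1.25              # approximate per-tunnel ceiling cited above


def direct_vgw_tunnels(vpc_count: int) -> int:
    """Tunnels to manage when every VPC gets its own VPN connection."""
    return vpc_count * TUNNELS_PER_VPN_CONNECTION


def usable_gbps_per_vpc() -> float:
    """Only one tunnel forwards at a time, so the ceiling is one tunnel's worth."""
    return TUNNEL_GBPS


for vpcs in (1, 10, 60):
    print(f"{vpcs:>2} VPCs -> {direct_vgw_tunnels(vpcs):>3} tunnels, "
          f"~{usable_gbps_per_vpc()} Gbps usable per VPC")
```

The tunnel count grows linearly with every VPC you add, while the usable bandwidth per VPC never moves.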

Transit VPC

To solve some of the shortcomings with VPC peering and tunneling to each VPC directly, Transit VPC was born. This solution deploys a hub-and-spoke design that reminds me of my data center networking days when we connected sites to data centers with all the glory of active/standby and boxes everywhere. Want to connect another network? Deploy another few boxes! Sometimes this is unavoidable, but any opportunity I get not to manage additional appliances or agents, I take it.

When I prototyped this design for the first time, we used Cisco CSRs deployed in a Transit VPC. These acted as the hub, and each spoke VPC's VGW had two tunnels to the CSRs with BGP running over IPsec. Since VGW doesn't support ECMP, this gives us active/standby out of the box. This design does perform transitive routing in the hub, which keeps spoke-to-spoke traffic in the cloud.

Appliance Sprawl

In this design, you are responsible for provisioning the appliances (across multiple availability zones in a given VPC). This means you have to do the routine software upgrades along with the emergency firefighting when new CVEs are uncovered. Running this design at scale is also problematic. As the number of VPCs grows, the number of appliances grows with it. Imagine the above diagram with 60 VPCs attached.

Enter Transit Gateway

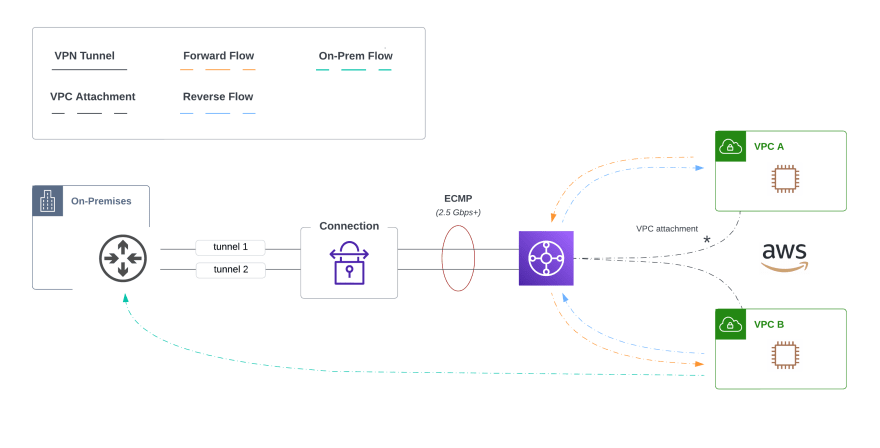

AWS Transit Gateway (TGW) works as a managed distributed router, enabling you to attach multiple VPCs in a hub-and-spoke architecture. These VPCs have reachability to each other (with the TGW doing the transitive routing). All traffic remains on the global AWS backbone. The service is regionally scoped; however, you can route between transit gateways in different AWS regions using Inter-Region Peering.
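If it helps to picture the transitive routing, here is a toy model of a hub route table doing a longest-prefix lookup. This is a sketch of the general idea, not how AWS implements TGW internally; the CIDRs and attachment names are made up for illustration.

```python
import ipaddress
from typing import Dict, Optional

# Hypothetical TGW route table: destination CIDR -> attachment name.
TGW_ROUTES: Dict[str, str] = {
    "10.1.0.0/16": "vpc-attachment-a",
    "10.2.0.0/16": "vpc-attachment-b",
    "192.168.0.0/16": "vpn-attachment-onprem",
}


def next_attachment(dst_ip: str, routes: Dict[str, str] = TGW_ROUTES) -> Optional[str]:
    """Return the attachment for the most specific route matching dst_ip."""
    dst = ipaddress.ip_address(dst_ip)
    matches = [
        (ipaddress.ip_network(cidr), attachment)
        for cidr, attachment in routes.items()
        if dst in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no matching route in this toy table
    # Longest prefix wins, as in any IP router.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# Spoke-to-spoke traffic is forwarded by the hub instead of hairpinning on-premises.
print(next_attachment("10.2.4.10"))     # vpc-attachment-b
print(next_attachment("192.168.1.20"))  # vpn-attachment-onprem
```

The point is that one table in the hub decides where everything goes, so adding a VPC means adding a route and an attachment rather than another set of tunnels.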

You connect various network types to TGW with the appropriate attachments. The various attachment types can be found here. Since we are talking about the evolution of site-to-site VPN design, we would use the TGW VPN Attachments to terminate our VPNs and TGW VPC Attachments for connecting VPCs.

Simplified Connectivity

Keeping things simple has a lot of benefits. This includes reducing the number of tunnels you have to manage while getting more from them. Sometimes you find that less is more, especially knowing that someone has to do operations!

Ludwig Mies van der Rohe

Ludwig was a German-American architect who adopted the motto "less is more" to describe the aesthetic of minimalist architecture. In the network, a design should be as simple as possible while meeting requirements.

When using Virtual Private Gateway (VGW), we know the tunnel spans from the customer gateway to redundant public endpoints in different AZs. With this new design, tunnels would land directly on the transit gateway. This also enables us to leverage a single VPN connection for all of our VPCs back to on-premises. We can also use ECMP to aggregate the bandwidth of both tunnels in the connection.
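Here is a minimal sketch of what ECMP buys you across the two tunnels. The hash below is only an illustration of per-flow load sharing (I am assuming a 5-tuple hash, and this is not AWS's actual algorithm); the 1.25 Gbps figure is the per-tunnel ceiling mentioned earlier.

```python
import hashlib

TUNNEL_GBPS = 1.25
TUNNELS = ["tunnel-1", "tunnel-2"]  # the two tunnels of one VPN connection


def pick_tunnel(src_ip, dst_ip, src_port, dst_port, protocol, tunnels=TUNNELS):
    """Map a flow's 5-tuple to one tunnel; the same flow always gets the same tunnel."""
    key = f"{src_ip}|{dst_ip}|{src_port}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    return tunnels[int.from_bytes(digest[:4], "big") % len(tunnels)]


def aggregate_gbps(tunnel_count=len(TUNNELS)):
    """Upper bound across many flows; a single flow still tops out at one tunnel."""
    return tunnel_count * TUNNEL_GBPS


flow = ("10.1.0.5", "192.168.1.20", 44321, 443, "tcp")
print(pick_tunnel(*flow))
print(aggregate_gbps())  # 2.5
```

Keep in mind the aggregate only shows up when you have many flows to spread around. One big flow sticks to one tunnel.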

Accelerated Connections

When using transit gateway, you can enable acceleration when adding the TGW attachment. An accelerated connection uses AWS Global Accelerator to route traffic from your on-premises network to the closest AWS edge location. Your traffic is then optimized once it lands on AWS's global network. Let's examine using acceleration with TGW versus no acceleration with VGW:

When to Accelerate

If the sites being connected are close to a given AWS region where your resources exist, you will see similar performance between both of these options. As distance increases, additional hops over the public internet are introduced, which can increase latency and impact reliability.

This is where acceleration shines. It works by building accelerators that give you redundant anycast addresses from the edge network. These addresses act as your entry point to the VPN tunnel endpoints, and packets are then proxied at the edge to applications running in a given AWS region.
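For reference, this is roughly what requesting an accelerated connection on a TGW could look like. The sketch only builds the request parameters so it runs without AWS credentials. The key names follow my reading of boto3's EC2 `create_vpn_connection` call, so treat them as an assumption and check the current API reference; the resource IDs are placeholders.

```python
def accelerated_vpn_request(customer_gateway_id: str, transit_gateway_id: str) -> dict:
    """Build keyword arguments for an accelerated VPN connection on a TGW.

    Key names are assumed from boto3's EC2 create_vpn_connection; verify
    them against the current API reference before use.
    """
    return {
        "CustomerGatewayId": customer_gateway_id,
        "TransitGatewayId": transit_gateway_id,  # land the tunnels on the TGW, not a VGW
        "Type": "ipsec.1",
        "Options": {
            "EnableAcceleration": True,  # requested when the connection is created
            "StaticRoutesOnly": False,   # use BGP rather than static routes
        },
    }


# Placeholder IDs, not real resources.
params = accelerated_vpn_request("cgw-0123456789abcdef0", "tgw-0123456789abcdef0")
print(params)

# With boto3 installed and credentials configured, this could be passed as:
#   import boto3
#   boto3.client("ec2").create_vpn_connection(**params)
```

Note there is no VGW anywhere in the request, which matches the comparison above: acceleration goes hand in hand with landing the VPN on a transit gateway.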

How much improvement?

AWS boasts up to 60% better performance for internet traffic when using Global Accelerator. You can learn more about how this is accomplished along with the criteria for measurement here.

Conclusion

Understanding your organization's goals and how the network needs to support them is no trivial task. This is where "it depends" rears its proverbial ugly head. In the world of cloud networking, with its complexity, data transfer, and compounding cost, it pays to think through the design and weigh the trade-offs. One thing is certain -- AWS's Site-to-Site VPN and other adjacent services have evolved, providing the means to construct high-performing networks with global scale and a great user experience.
