We are going to learn the top algorithm's running time that every developer should be familiar with. Knowing these time complexities will help you to assess if your code will scale. Also, it's handy to compare different solutions for the same problem. By the end, you would be able to eyeball different implementations and know which one will perform better.

To clarify some concepts used in the rest of the post:

- The time complexity is not about timing how long the algorithm takes. Instead, how many operations are executed.

- The number of instructions executed by a program is affected by the size of the input (and how their elements are arranged).

- Big O notation is used to classify algorithms using the input size

n. E.g. O(n) or O(n2).

Before we dive in, here is the Big O cheatsheet and examples that we are going to cover on this post. Click on them to jump to the implementation. 😉

| Big O Notation | Name | Example(s) |

|---|---|---|

| O(1) | Constant | # Odd or Even number, # Look-up table |

| O(log n) | Logarithmic | # Finding element on sorted array with binary search |

| O(n) | Linear | # Find max element in unsorted array, # Duplicate elements in array with Hash Map |

| O(n log n) | Linearithmic | # Sorting elements in array with merge sort |

| O(n2) | Quadratic | # Duplicate elements in array **(naïve)**, # Sorting array with bubble sort |

| O(n3) | Cubic | # 3 variables equation solver |

| O(2n) | Exponential | # Find all subsets |

| O(n!) | Factorial | # Find all permutations of a given set/string |

Now, Let's go one by one and provide code examples!

O(1) - Constant time

O(1) describes algorithms that takes the same amount of time to compute regardless of the input size.

For instance, if a function takes the identical time to process 10 elements as well as 1 million items, then we say that it has a constant growth rate or O(1). Let’s see some cases.

Odd or Even

Find if a number is odd or even.

function isEvenOrOdd(n) {

return n % 2 ? 'Odd' : 'Even';

}

console.log(isEvenOrOdd(10)); // => Even

console.log(isEvenOrOdd(10001)); // => Odd

Advanced note: you could also replace n % 2 with the bit AND operator: n & 1. If the first bit (LSB) is 1 then is odd otherwise is even.

It doesn't matter if n is 10 or 10,001, it will execute line 2 one time.

Do not be fool by one-liners. They don't always translate to constant times. You have to be aware of how they are implemented.

If you have a method like Array.sort() or any other array or object methods you have to look into the implementation to determine its running time.

Primitive operations like sum, multiplication, subtraction, division, modulo, bit shift, etc have a constant runtime. This can be shocking!

If you use the schoolbook long multiplication algorithm, it would take O(n2) to multiply two numbers. However, most programming languages limit numbers to max value (e.g. in JS: Number.MAX_VALUE is 1.7976931348623157e+308). So, you cannot operate numbers that yield a result greater than the MAX_VALUE. So, primitive operations are bound to be completed on a fixed amount of instructions O(1) or throw overflow errors (in JS, Infinity keyword).

This example was easy. Let's do another one.

Look-up table

Given a string find its word frequency data.

const dictionary = {the: 22038615, be: 12545825, and: 10741073, of: 10343885, a: 10144200, in: 6996437, to: 6332195 /* ... */};

function getWordFrequency(dictionary, word) {

return dictionary[word];

}

console.log(getWordFrequency(dictionary, 'the'));

console.log(getWordFrequency(dictionary, 'in'));

Again, we can be sure that even if the dictionary has 10 or 1 million words, it would still execute line 4 once to find the word. However, if we decided to store the dictionary as an array rather than a hash map, then it would be a different story. In the next section, we are going to explore what's the running time to find an item in an array.

Only a hash table with a perfect hash function will have a worst-case runtime of O(1). The perfect hash function is not practical, so there will be some collisions and workarounds leads to a worst-case runtime of O(n). Still, on average the lookup time is O(1).

O(n) - Linear time

Linear running time algorithms are very common. Linear runtime means that the program visits every element from the input.

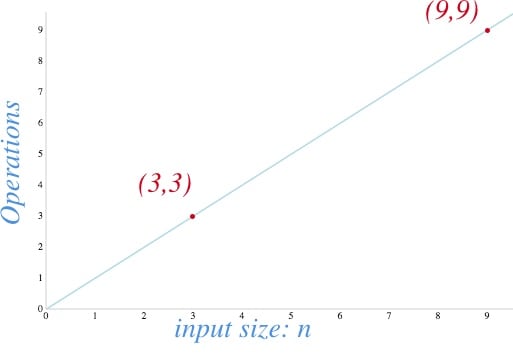

Linear time complexity O(n) means that as the input grows, the algorithms take proportionally longer to complete.

Some examples:

The largest item on an unsorted array

Let's say you want to find the maximum value from an unsorted array.

function findMax(n) {

let max;

let counter = 0;

for (let i = 0; i < n.length; i++) {

counter++;

if(max === undefined || max < n[i]) {

max = n[i];

}

}

console.log(`n: ${n.length}, counter: ${counter}`);

return max;

}

How many operations will the findMax function do?

Well, it checks every element from the input n. If the current element is bigger than max it will do an assignment.

Notice that we added a counter so it can help us count how many times the inner block is executed.

If you get the time complexity it would be something like this:

- Line 2-3: 2 operations

- Line 4: a loop of size n

- Line 6-8: 3 operations inside the for-loop.

So, this gets us 3(n) + 2.

Applying the Big O notation that we learn in the previous post, we only need the biggest order term, thus O(n).

We can verify this using our counter. If n has 3 elements:

findMax([3, 1, 2]);

// n: 3, counter: 3

or if n has 9 elements:

findMax([4,5,6,1,9,2,8,3,7])

// n: 9, counter: 9

Now imagine that you have an array of one million items, it will perform one million operations. If we plot it n and findMax running time we will have a graph like a linear equation.

O(n2) - Quadratic time

A function with a quadratic time complexity has a growth rate of n2. If the input is size 2, it will do 4 operations. If the input is size 8, it will take 64, and so on.

Here are some code examples of quadratic algorithms:

Has duplicates

You want to find duplicate words in an array. A naïve solution will be the following:

function hasDuplicates(n) {

const duplicates = [];

let counter = 0;

for (let outter = 0; outter < n.length; outter++) {

for (let inner = 0; inner < n.length; inner++) {

counter++;

if(outter === inner) continue;

if(n[outter] === n[inner]) {

return true;

}

}

}

console.log(`n: ${n.length}, counter: ${counter}`);

return false;

}

Time complexity analysis:

- Line 2-3: 2 operations

- Line 5-6: double-loop of size n, so

n2. - Line 7-13: has ~3 operations inside the double-

We get 3n^2 + 2.

Again, when we using Big O notation, we drop all constants and leave the most significant term: n^2. So, it would be O(n^2).

We are using a counter variable to help us verify. The hasDuplicates function has two loops. If we have an input of 4 words, it will execute the inner block 16 times. If we have 9, it will perform counter 81 times and so forth.

hasDuplicates([1,2,3,4]);

// n: 4, counter: 16

and with n size 9:

hasDuplicates([1,2,3,4,5,6,7,8,9]);

// n: 9, counter: 81

Let's see another example.

Bubble sort

We want to sort the elements in an array.

function sort(n) {

for (let outer = 0; outer < n.length; outer++) {

let outerElement = n[outer];

for (let inner = outer + 1; inner < n.length; inner++) {

let innerElement = n[inner];

if(outerElement > innerElement) {

// swap

n[outer] = innerElement;

n[inner] = outerElement;

// update references

outerElement = n[outer];

innerElement = n[inner];

}

}

}

return n;

}

Also, you might notice that for a colossal n, the time it takes to solve the problem increases a lot. Can you spot the relationship between nested loops and the running time? When a function has a single loop, it usually translates to a running time complexity of O(n). Now, this function has 2 nested loops and quadratic running time: O(n2).

O(nc) - Polynomial time

Polynomial running is represented as O(nc), when c > 1. As you already saw, two inner loops almost translate to O(n2) since it has to go through the array twice in most cases. Are three nested loops cubic? If each one visit all elements, then yes!

Usually, we want to stay away from polynomial running times (quadratic, cubic, nc …) since they take longer to compute as the input grows fast. However, they are not the worst.

Triple nested loops

Let's say you want to find the solutions for a multi-variable equation that looks like this:

3x + 9y + 8z = 79

This naive program will give you all the solutions that satisfy the equation where x, y and z < n.

function findXYZ(n) {

const solutions = [];

for(let x = 0; x < n; x++) {

for(let y = 0; y < n; y++) {

for(let z = 0; z < n; z++) {

if( 3*x + 9*y + 8*z === 79 ) {

solutions.push({x, y, z});

}

}

}

}

return solutions;

}

console.log(findXYZ(10)); // => [{x: 0, y: 7, z: 2}, ...]

This algorithm has a cubic running time: O(n3).

Note: We could do a more efficient solution but for the purpose of showing an example of a cubic runtime is good enough.

O(log n) - Logarithmic time

Logarithmic time complexities usually apply to algorithms that divide problems in half every time. For instance, let's say that we want to look for a word in an old fashion dictionary. It has every word sorted alphabetically. There are at least two ways to do it:

Algorithm A:

- Start at the beginning of the book and go in order until you find the contact you are looking for.

Algorithm B:

- Open the book in the middle and check the first word on it.

- If the word that you are looking for is alphabetically bigger, then look to the right. Otherwise, look in the left half.

Which one is faster? The first algorithms go word by word O(n), while the algorithm B split the problem in half on each iteration O(log n). This 2nd algorithm is a binary search.

Binary search

Find the index of an element in a sorted array.

If we implement (Algorithm A) going through all the elements in an array, it will take a running time of O(n). Can we do better? We can try using the fact that the collection is already sorted. Later, we can divide in half as we look for the element in question.

function indexOf(array, element, offset = 0) {

// split array in half

const half = parseInt(array.length / 2);

const current = array[half];

if(current === element) {

return offset + half;

} else if(element > current) {

const right = array.slice(half);

return indexOf(right, element, offset + half);

} else {

const left = array.slice(0, half)

return indexOf(left, element, offset);

}

}

const directory = ["Adrian", "Bella", "Charlotte", "Daniel", "Emma", "Hanna", "Isabella", "Jayden", "Kaylee", "Luke", "Mia", "Nora", "Olivia", "Paisley", "Riley", "Thomas", "Wyatt", "Xander", "Zoe"];

console.log(indexOf(directory, 'Hanna')); // => 5

console.log(indexOf(directory, 'Adrian')); // => 0

console.log(indexOf(directory, 'Zoe')); // => 18

Calculating the time complexity of indexOf is not as straightforward as the previous examples. This function is recursive.

There are several ways to analyze recursive algorithms like Master Method which are outside the scope of this post. As a rule of thumb, whenever you see an algorithm dividing the input in half it probably involves some log n runtime. Since the work done outside the recursion is constant, then we have a runtime of O(log n).

O(n log n) - Linearithmic

Linearithmic time complexity it's slightly slower than a linear algorithm but still much better than a quadratic algorithm (you will see a graph comparing all of them at the very end of the post).

Mergesort

What's the best way to sort an array? Before, we proposed a solution using bubble sort that has a time complexity of O(n2). Can we do better?

We can use an algorithm called mergesort to improve it.

This's how it works:

- We are going to divide the array recursively until the elements are two or less.

- We know how to sort 2 items, so we sort them iteratively (base case).

- The final step is merging: we merge in taking one by one from each array such that they are in ascending order.

Here's the code for merge sort:

function sort(n) {

const length = n.length;

// base case

if(length === 1) {

return n;

}

if(length === 2) {

return n[0] > n[1] ? [n[1], n[0]] : [n[0], n[1]];

}

// slit and merge

const mid = length/2;

return merge(sort(n.slice(0, mid)), sort(n.slice(mid)));

}

function merge(a = [], b = []) {

const merged = [];

// merge elements on a and b in asc order. Run-time O(a + b)

for (let ai = 0, bi = 0; ai < a.length || bi < b.length;) {

if(ai >= a.length || a[ai] > b[bi]) {

merged.push(b[bi++]);

} else {

merged.push(a[ai++]);

}

}

return merged;

}

As you can see, it has two functions sort and merge. Merge is an auxiliary function that runs once through the collection a and b, so it's running time is O(n). Sort is a recursive function that splits the array in half each time, the total runtime of the mergesort is O(n log n).

Note: If you want to see the full explanation check out Master Method for mergesort.

O(2n) - Exponential time

Exponential (base 2) running time means that the calculations performed by an algorithm double every as the input grows.

Subsets of a Set

Finding all distinct subsets of a given set. For instance, let's do some examples to try to come up with an algorithm to solve it:

getSubsets('') // => ['']

getSubsets('a') // => ['', 'a']

getSubsets('ab') // => ['', 'a', 'b', 'ab']

Did you notice any pattern?

- The first returns have an empty element.

- The second case returns the empty element + the 1st element.

- The 3rd case returns precisely the results of 2nd case + the same array with the 2nd element

bappended to it.

What if you want to find the subsets of abc? Well, it would be exactly the subsets of 'ab' and again the subsets of ab with c appended at the end of each element.

As you noticed, every time the input gets longer the output is twice as long as the previous one. Let's code it op:

function getSubsets(n = '') {

const array = Array.from(n);

const base = [''];

const results = array.reduce((previous, element) => {

const previousPlusElement = previous.map(el => {

return `${el}${element}`;

});

return previous.concat(previousPlusElement);

}, base);

console.log(`getSubsets(${n}) // ${results.slice(0, 15).join(', ')}... `);

console.log(`n: ${array.length}, counter: ${results.length};`);

return results;

}

If we run that function for a couple of cases we will get:

getSubsets('') // ...

// n = 0, f(n) = 1;

getSubsets('a') // , a...

// n = 1, f(n) = 2;

getSubsets('ab') // , a, b, ab...

// n = 2, f(n) = 4;

getSubsets('abc') // , a, b, ab, c, ac, bc, abc...

// n = 3, f(n) = 8;

getSubsets('abcd') // , a, b, ab, c, ac, bc, abc, d, ad, bd, abd, cd, acd, bcd...

// n = 4, f(n) = 16;

getSubsets('abcde') // , a, b, ab, c, ac, bc, abc, d, ad, bd, abd, cd, acd, bcd...

// n = 5, f(n) = 32;

As expected, if you plot n and f(n), you will notice that it would be exactly like the function 2^n. This algorithm has a running time of O(2^n).

Note: You should avoid functions with exponential running times (if possible) since they don't scale well. The time it takes to process the output doubles with every additional input size. But exponential running time is not the worst yet; there are others that go even slower. Let's see one more example in the next section.

O(n!) - Factorial time

Factorial is the multiplication of all positive integer numbers less than itself. For instance:

5! = 5 x 4 x 3 x 2 x 1 = 120

It grows pretty quickly:

20! = 2,432,902,008,176,640,000

As you might guess, you want to stay away if possible from algorithms that have this running time!

Permutations

Write a function that computes all the different words that can be formed given a string. E.g.

getPermutations('a') // => [ 'a']

getPermutations('ab') // => [ 'ab', 'ba']

getPermutations('abc') // => [ 'abc', 'acb', 'bac', 'bca', 'cab', 'cba' ]

How would you solve that?

A straightforward way will be to check if the string has a length of 1 if so, return that string since you can't arrange it differently.

For strings with a length bigger than 1, we could use recursion to divide the problem into smaller problems until we get to the length 1 case. We can take out the first character and solve the problem for the remainder of the string until we have a length of 1.

function getPermutations(string, prefix = '') {

if(string.length <= 1) {

return [prefix + string];

}

return Array.from(string).reduce((result, char, index) => {

const reminder = string.slice(0, index) + string.slice(index+1);

result = result.concat(getPermutations(reminder, prefix + char));

return result;

}, []);

}

If print out the output, it would be something like this:

getPermutations('ab') // ab, ba...

// n = 2, f(n) = 2;

getPermutations('abc') // abc, acb, bac, bca, cab, cba...

// n = 3, f(n) = 6;

getPermutations('abcd') // abcd, abdc, acbd, acdb, adbc, adcb, bacd...

// n = 4, f(n) = 24;

getPermutations('abcde') // abcde, abced, abdce, abdec, abecd, abedc, acbde...

// n = 5, f(n) = 120;

I tried with a string with a length of 10. It took around 8 seconds!

time node ./lib/permutations.js

# getPermutations('abcdefghij') // => abcdefghij, abcdefghji, abcdefgihj, abcdefgijh, abcdefgjhi, abcdefgjih, abcdefhgij...

# // n = 10, f(n) = 3,628,800;

# ./lib/permutations.js 8.06s user 0.63s system 101% cpu 8.562 total

I have a little homework for you...

Can you try with a permutation with 11 characters? ;) Comment below what happened to your computer!

All running complexities graphs

We explored the most common algorithms running times with one or two examples each! They should give you an idea of how to calculate your running times when developing your projects. Below you can find a chart with a graph of all the time complexities that we covered:

Mind your time complexity!

You can find all these examples and more in the Github repo:

amejiarosario

/

dsa.js-data-structures-algorithms-javascript

amejiarosario

/

dsa.js-data-structures-algorithms-javascript

🥞Data Structures and Algorithms explained and implemented in JavaScript + eBook

Data Structures and Algorithms in JavaScript

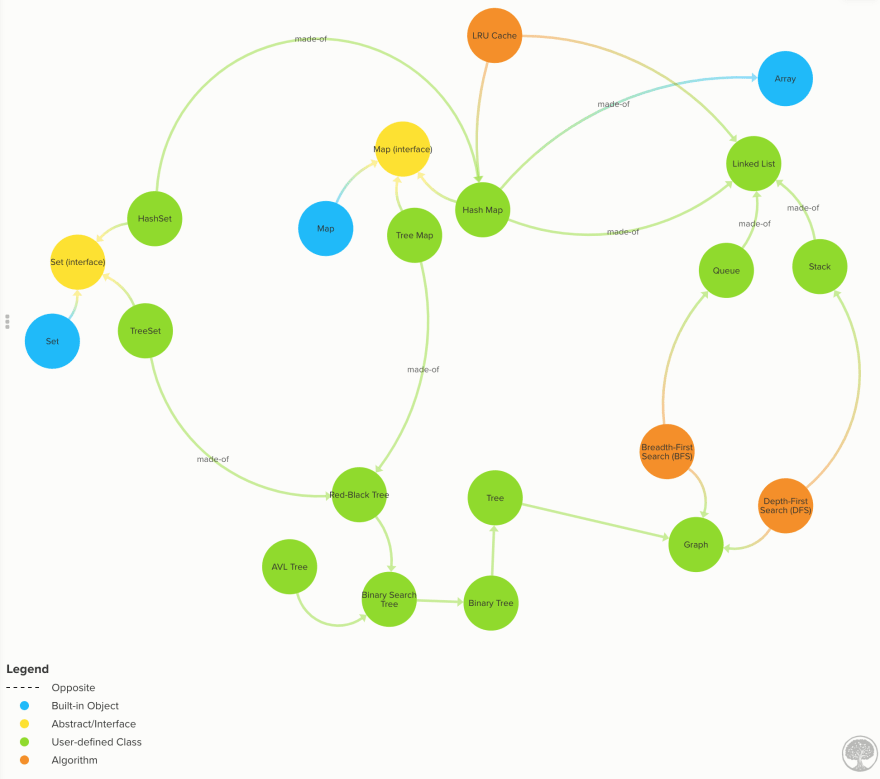

This is the coding implementations of the DSA.js book and the repo for the NPM package.

In this repository, you can find the implementation of algorithms and data structures in JavaScript. This material can be used as a reference manual for developers, or you can refresh specific topics before an interview. Also, you can find ideas to solve problems more efficiently.

Table of Contents

Installation

You can clone the repo or install the code from NPM:

npm install dsa.js

and then you can import it into your programs or CLI

const { LinkedList, Queue, Stack } = require('dsa.js');

For a full list of all the exposed data structures and algorithms see.

Features

Algorithms are an…

Top comments (5)

Oh my! There is a very good explanation with great examples. I struggled to grasp these concepts but your article made it much more simpler to get it.

Awesome! I think the examples are helpful for understanding new concepts

Excelent explanation, it helped me so much. Thank you!

You are welcome! I'm glad to hear that

Awesome!!! A great article.