Demonstration of an Anime Recommendation System with PySpark in SageMaker v1.0.0-alpha01

Understanding the Dataset

Our journey begins with understanding the dataset. We will be using the MyAnimeList dataset sourced from Kaggle, which contains valuable information about anime titles, user ratings, and more. This dataset will serve as the foundation for our recommendation system.

Preprocessing the Data

Before diving into model building, we will preprocess the dataset to ensure it is clean and structured for analysis. While the preprocessing steps have already been completed, we will briefly discuss the importance of data preprocessing in the context of recommendation systems.

For the detailed preprocessing steps, check out this notebook:

anime-recommendation-system.ipynb
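The sketch below shows the kind of output this preprocessing has to produce. Spark MLlib's ALS works on ratings made of an integer user ID, an integer item ID, and a numeric rating. It assumes raw rows of (user, anime, score) strings and a valid score range of 1 to 10 (MyAnimeList's score scale). It drops missing or out-of-range scores, keeps the last score for each (user, anime) pair, and maps IDs to consecutive integers. The function name `clean_ratings` and the input layout are illustrative assumptions, not the notebook's actual code.

```python
from typing import Dict, Iterable, List, Tuple

# Hypothetical raw row layout: (user, anime, score), all as strings.
RawRow = Tuple[str, str, str]


def clean_ratings(
    rows: Iterable[RawRow], min_score: float = 1.0, max_score: float = 10.0
) -> Tuple[List[Tuple[int, int, float]], Dict[str, int], Dict[str, int]]:
    """Drop invalid rows, deduplicate (user, anime) pairs, and index IDs as ints."""
    latest: Dict[Tuple[str, str], float] = {}
    for user, anime, score in rows:
        try:
            value = float(score)
        except (TypeError, ValueError):
            continue  # missing or non-numeric score
        if not (min_score <= value <= max_score):
            continue  # out-of-range placeholders such as -1 or 0 (also rejects NaN)
        latest[(user, anime)] = value  # a later duplicate overwrites an earlier one

    user_index: Dict[str, int] = {}
    anime_index: Dict[str, int] = {}
    ratings: List[Tuple[int, int, float]] = []
    for (user, anime), value in latest.items():
        # setdefault gives each unseen ID the next consecutive integer
        u = user_index.setdefault(user, len(user_index))
        a = anime_index.setdefault(anime, len(anime_index))
        ratings.append((u, a, value))
    return ratings, user_index, anime_index
```

The resulting integer triples could then be parallelized into an RDD and wrapped in `pyspark.mllib.recommendation.Rating`. The two index dictionaries are kept so that recommendations can be mapped back to the original user and anime IDs.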

TODO: Add all preprocessing steps, one by one, with explanations

Visualization

Visualizing the preprocessed data can provide valuable insights into the distribution of anime ratings, user preferences, and other patterns. We will utilize various visualization techniques to gain a better understanding of our dataset.
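As an illustration, here is a minimal, dependency-free sketch of one such view: the distribution of rating scores. It assumes the ratings are available as (user, anime, score) tuples. A plotting library such as matplotlib would normally draw this as a bar chart; this text version only shows the underlying counting. The helper name `rating_histogram` is hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def rating_histogram(ratings: Iterable[Tuple[int, int, float]], width: int = 40) -> List[str]:
    """Return one text bar per distinct score, scaled so the most common score spans `width` chars."""
    counts = Counter(score for _, _, score in ratings)
    if not counts:
        return []
    peak = max(counts.values())
    lines = []
    for score in sorted(counts):
        bar = "#" * round(width * counts[score] / peak)
        lines.append(f"{score:>5g} | {bar} {counts[score]}")
    return lines


if __name__ == "__main__":
    sample = [(0, 0, 8.0), (1, 0, 8.0), (2, 1, 7.0), (0, 1, 10.0)]
    print("\n".join(rating_histogram(sample, width=10)))
```

On the real data, a strongly skewed histogram (for example, most scores clustered at 7 to 9) is one of the patterns this kind of view makes visible.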

The notebook anime-recommendation-system.ipynb applies several visualization techniques to better understand the content of the data. One of them is shown below as an example.

TODO: Add all visualizations step-by-step with explanations and insights

Recommendation System

We used the Alternating Least Squares (ALS) algorithm from PySpark's MLlib to build the recommendation system. To choose its hyperparameters, we used mean squared error (MSE) as the evaluation metric: for each candidate combination, we trained a model on the training split and compared its predicted ratings with the actual ratings in the held-out test split.
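The selection procedure can be sketched as a framework-agnostic loop. Hold out part of the ratings, train one model per candidate setting on the rest, and keep the setting whose predictions have the lowest MSE, defined as the mean of (predicted − actual)² over the test ratings. In this sketch, `train_fn` is a hypothetical stand-in for training. In PySpark it would wrap a call such as `ALS.train(train, rank=..., iterations=..., lambda_=...)`, and the returned model's `predict(user, product)` would supply the predictions. The helper names and any candidate grid you pass in are assumptions, not the notebook's code.

```python
import random
from typing import Callable, Dict, Iterable, List, Sequence, Tuple

Rating = Tuple[int, int, float]          # (user, item, rating)
Predictor = Callable[[int, int], float]  # (user, item) -> predicted rating


def train_test_split(ratings: Sequence[Rating], test_fraction: float = 0.2, seed: int = 0):
    """Shuffle a copy of the ratings and split off the last `test_fraction` as the test set."""
    shuffled = list(ratings)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def mean_squared_error(predict: Predictor, test: Iterable[Rating]) -> float:
    """Average squared difference between predicted and actual ratings."""
    errors = [(predict(user, item) - actual) ** 2 for user, item, actual in test]
    if not errors:
        raise ValueError("test set is empty")
    return sum(errors) / len(errors)


def select_best(
    candidates: Iterable[Dict[str, float]],
    train_fn: Callable[..., Predictor],
    train: Sequence[Rating],
    test: Sequence[Rating],
) -> Tuple[Dict[str, float], float]:
    """Train one model per hyperparameter dict; return the (params, mse) with the lowest test MSE."""
    scored: List[Tuple[float, Dict[str, float]]] = []
    for params in candidates:
        model = train_fn(train, **params)
        scored.append((mean_squared_error(model, test), params))
    best_mse, best_params = min(scored, key=lambda pair: pair[0])
    return best_params, best_mse
```

On Spark itself, the same metric is usually computed on RDDs, as in the MLlib collaborative-filtering guide. You split with `rating.randomSplit(...)`, key both `model.predictAll(...)` and the test ratings by `(user, product)`, join them, and take the mean of the squared differences.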

The following parameters gave the lowest mean squared error, so we used them to train the final model:

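from pyspark.mllib.recommendation import ALS

# Hyperparameters with the lowest MSE; `rating` is the RDD of (user, anime, rating) ratings
rank, iterations, lambda_ = 50, 10, 0.1

model = ALS.train(rating, rank=rank, iterations=iterations, lambda_=lambda_, seed=5047)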
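This small pure-Python sketch shows what `ALS.train` optimizes. It alternates between two steps. First it holds the item factors fixed and solves a ridge-regression problem for each user's factor vector. Then it does the reverse for each item. Following the Spark MLlib documentation, the L2 penalty for each row is scaled by that user's or item's number of ratings. This is only a teaching sketch under simplifying assumptions: in-memory dictionaries, random initialization, and a tiny Gaussian-elimination solver. Spark's implementation is distributed and blocked, so its numbers will differ. All function names here are illustrative.

```python
import random
from typing import Dict, List, Sequence, Tuple

Rating = Tuple[int, int, float]  # (user, item, rating) with integer IDs
Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting (a is small and square)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def update(fixed: Dict[int, List[float]], rows: Dict[int, List[Tuple[int, float]]],
           rank: int, lambda_: float) -> Dict[int, List[float]]:
    """Ridge-regress each row's factors on the fixed factors of the entries it rated."""
    solved = {}
    for key, entries in rows.items():
        # Normal equations: (sum y y^T + lambda_ * n * I) x = sum r * y
        a = [[0.0] * rank for _ in range(rank)]
        b = [0.0] * rank
        for other, r in entries:
            y = fixed[other]
            for i in range(rank):
                b[i] += r * y[i]
                for j in range(rank):
                    a[i][j] += y[i] * y[j]
        for i in range(rank):
            a[i][i] += lambda_ * len(entries)  # penalty scaled by the rating count
        solved[key] = solve(a, b)
    return solved


def als(ratings: Sequence[Rating], rank: int, iterations: int, lambda_: float, seed: int = 0):
    """Return (user_factors, item_factors) after `iterations` alternating sweeps."""
    by_user: Dict[int, List[Tuple[int, float]]] = {}
    by_item: Dict[int, List[Tuple[int, float]]] = {}
    for u, i, r in ratings:
        by_user.setdefault(u, []).append((i, r))
        by_item.setdefault(i, []).append((u, r))
    rng = random.Random(seed)
    items = {i: [rng.random() for _ in range(rank)] for i in by_item}
    users: Dict[int, List[float]] = {}
    for _ in range(iterations):
        users = update(items, by_user, rank, lambda_)  # fix items, solve users
        items = update(users, by_item, rank, lambda_)  # fix users, solve items
    return users, items


def predict(users, items, u: int, i: int) -> float:
    """A predicted rating is the dot product of the user and item factor vectors."""
    return sum(x * y for x, y in zip(users[u], items[i]))
```

In this picture, `rank` is the length of each factor vector, `iterations` is the number of alternating sweeps, and `lambda_` controls the regularization. These are the three values tuned above.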

Cloud Infrastructure Setup with Terraform

First, we need to upload the preprocessed data to an S3 bucket with the following commands. The upload_data.py script is in the sagemaker directory.

$ cd sagemaker

$ python upload_data.py -n 'anime-recommendation-system' -r 'eu-central-1' -f '../preprocessed_data'

../preprocessed_data\user.csv 4579798 / 4579798.0 (100.00%)
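The article does not show upload_data.py itself. Based only on the command line above (`-n` bucket name, `-r` region, `-f` folder) and the progress line it prints, the sketch below shows one plausible shape for its argument parsing and progress reporting. The long option names and the helper names are hypothetical. The progress class follows the pattern from the boto3 documentation: an object passed as `Callback` to `upload_file` is called repeatedly with the number of bytes transferred since the previous call.

```python
import argparse
import os
import sys
import threading


def parse_args(argv=None) -> argparse.Namespace:
    """Parse the flags used in the command above; the long option names are hypothetical."""
    parser = argparse.ArgumentParser(description="Upload preprocessed data to S3")
    parser.add_argument("-n", "--bucket-name", required=True, help="target S3 bucket")
    parser.add_argument("-r", "--region", required=True, help="AWS region, e.g. eu-central-1")
    parser.add_argument("-f", "--folder", required=True, help="local folder to upload")
    return parser.parse_args(argv)


class ProgressPercentage:
    """boto3-style upload callback: accumulates transferred bytes and prints a progress line."""

    def __init__(self, filename: str, stream=sys.stdout):
        self._filename = filename
        self._size = float(os.path.getsize(filename))
        self._seen_so_far = 0
        self._lock = threading.Lock()  # boto3 may invoke the callback from several threads
        self._stream = stream

    def __call__(self, bytes_amount: int) -> None:
        with self._lock:
            self._seen_so_far += bytes_amount
            percentage = (self._seen_so_far / self._size) * 100 if self._size else 100.0
            # "\r" rewrites the same console line on every update
            self._stream.write(
                "\r%s %s / %s (%.2f%%)" % (self._filename, self._seen_so_far, self._size, percentage)
            )
            self._stream.flush()
```

Each file would then be uploaded with something like `s3.upload_file(path, bucket, key, Callback=ProgressPercentage(path))`. The `%s %s / %s (%.2f%%)` format yields lines shaped like the `user.csv` output above.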

Next, run the following Terraform commands to create an Amazon SageMaker notebook instance:

$ terraform init

Initializing the backend...

Initializing provider plugins...

- Finding latest version of hashicorp/aws...

- Installing hashicorp/aws v5.41.0...

- Installed hashicorp/aws v5.41.0 (signed by HashiCorp)

...

$ terraform plan

...

Plan: 4 to add, 0 to change, 0 to destroy.

$ terraform apply --auto-approve

aws_iam_policy.sagemaker_s3_full_access: Creating...

aws_iam_role.sagemaker_role: Creating...

aws_iam_policy.sagemaker_s3_full_access: Creation complete after 1s [id=arn:aws:iam::749270828329:policy/SageMaker_S3FullAccessPoliciy]

aws_iam_role.sagemaker_role: Creation complete after 1s [id=AnimeRecommendation_SageMakerRole]

aws_iam_role_policy_attachment.sagemaker_s3_policy_attachment: Creating...

aws_sagemaker_notebook_instance.notebookinstance: Creating...

aws_iam_role_policy_attachment.sagemaker_s3_policy_attachment: Creation complete after 1s [id=AnimeRecommendation_SageMakerRole-20240317120654686300000001]

aws_sagemaker_notebook_instance.notebookinstance: Still creating... [10s elapsed]

...

Apply complete! Resources: 4 added, 0 changed, 0 destroyed.

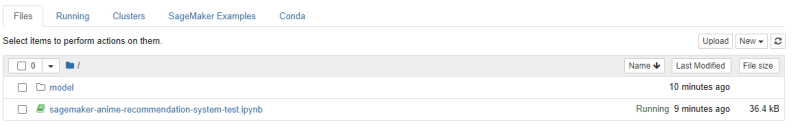

On the notebook instance, create a new conda_python3 notebook and run sagemaker-anime-recommendation-system.ipynb step by step.

You can look at the sagemaker-anime-recommendation-system-test.html file to see the output.

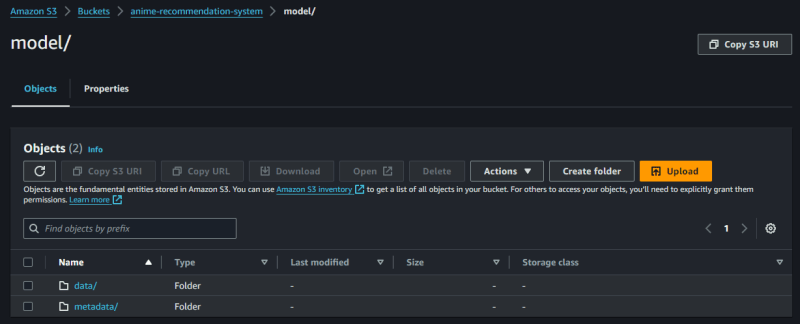

The following code saves the model data locally and then uploads it to the S3 bucket.

import os

import boto3
from pyspark import SparkContext

# Save the trained ALS model to the local directory ./model
model.save(SparkContext.getOrCreate(), 'model')

# Initialize the S3 client
s3 = boto3.client('s3')

# Upload every file under ./model to s3://anime-recommendation-system/model/
bucketname = 'anime-recommendation-system'
local_directory = './model'
destination = 'model/'

for root, dirs, files in os.walk(local_directory):
    for filename in files:
        # Build the full local path and the matching S3 key
        local_path = os.path.join(root, filename)
        relative_path = os.path.relpath(local_path, local_directory)
        s3_path = os.path.join(destination, relative_path)
        s3.upload_file(local_path, bucketname, s3_path)
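On the Linux-based notebook instance, `os.path.join` produces forward slashes, so the keys above land under `model/` as expected. If the same loop were run on Windows (the upload output earlier shows a Windows-style path), `os.path.relpath` and `os.path.join` would produce backslashes. S3 stores those literally as part of the key name rather than treating them as folder separators. The sketch below always builds `/`-separated keys. The function name `s3_keys_for` is hypothetical.

```python
import os
import posixpath
from typing import Iterator, Tuple


def s3_keys_for(local_directory: str, destination: str) -> Iterator[Tuple[str, str]]:
    """Yield (local_path, s3_key) pairs with '/'-separated keys on any operating system."""
    for root, _dirs, files in os.walk(local_directory):
        for filename in sorted(files):
            local_path = os.path.join(root, filename)
            relative_path = os.path.relpath(local_path, local_directory)
            # Split on the OS separator ('\\' on Windows) and rejoin with S3's '/'
            parts = relative_path.split(os.sep)
            yield local_path, posixpath.join(destination, *parts)
```

The upload loop then becomes `for local_path, key in s3_keys_for(local_directory, destination): s3.upload_file(local_path, bucketname, key)`.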

To load and test the model in a separate environment, run the test_another_instance.ipynb Jupyter notebook.

The following code downloads the model data from the S3 bucket and loads it with the MatrixFactorizationModel class.

import os

import boto3
from pyspark import SparkContext
from pyspark.mllib.recommendation import MatrixFactorizationModel

s3_resource = boto3.resource('s3')
bucket = s3_resource.Bucket('anime-recommendation-system')

# Download every object under model/, recreating the directory layout locally
for obj in bucket.objects.filter(Prefix='model/'):
    if obj.key.endswith('/'):
        continue  # skip "folder" placeholder objects, which cannot be saved as files
    os.makedirs(os.path.dirname(obj.key), exist_ok=True)
    bucket.download_file(obj.key, obj.key)

# Load the saved ALS model from the local ./model directory
m = MatrixFactorizationModel.load(SparkContext.getOrCreate(), 'model')
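Once loaded, `m` can be queried like the original model. For example, `m.recommendProducts(user, 10)` returns the ten products with the highest predicted rating for a user, and `m.predict(user, product)` returns a single prediction. A matrix factorization model predicts a rating as the dot product of a user's and a product's factor vectors, which are exposed through `m.userFeatures()` and `m.productFeatures()`. The plain-Python sketch below mirrors that ranking step on in-memory factors. The function name and toy vectors are illustrative. Like `recommendProducts`, which only stores the factor vectors, it does not exclude titles the user has already rated.

```python
import heapq
from typing import Dict, List, Sequence, Tuple


def recommend(
    user_factors: Dict[int, Sequence[float]],
    item_factors: Dict[int, Sequence[float]],
    user: int,
    num: int,
) -> List[Tuple[int, float]]:
    """Return the `num` (item, score) pairs with the highest dot-product score for `user`."""
    u = user_factors[user]
    scores = (
        (item, sum(a * b for a, b in zip(u, vec))) for item, vec in item_factors.items()
    )
    return heapq.nlargest(num, scores, key=lambda pair: pair[1])
```

With the real model, the dictionaries could be built with `dict(m.userFeatures().collect())` and `dict(m.productFeatures().collect())`, which is only practical when all factors fit in driver memory.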

To sum up, I showed how to build the model on a SageMaker notebook instance and how to load the saved model data into a separate environment, which makes the model easier to reuse. See you in another article...👋👋
