Introduction

At trackxy, we were migrating our microservices, each previously managed by its own team, to our Kubernetes cluster, where all of our services are now managed.

Problem

- First, we need to know what a schema migration is:

Schema migration (also database migration, database change management) refers to the management of incremental, reversible changes and version control to relational database schemas. A schema migration is performed on a database whenever it is necessary to update or revert that database's schema to some newer or older version.

Migrations are performed programmatically by using a schema migration tool. When invoked with a specified desired schema version, the tool automates the successive application or reversal of an appropriate sequence of schema changes until it is brought to the desired state.

source

- So, as mentioned above, schema migration is a good thing, right? Yes, it is. When a single instance of the program runs the schema migration, we're free to migrate whenever we need to, without worrying about synchronization or about which instance migrates first. But once there is more than one instance, we face a race condition and have to decide which instance should run the migration.

Brainstorming

In this section, we walk you through our thinking process: the pros and cons of each option we considered, and why we chose the one we did.

Encapsulating the migration inside the image

- We first thought about encapsulating the migration process inside the image itself, but there are 3 reasons why we didn't go with this option:

- SoC (Separation of Concerns): it's not the image's job to migrate the database

- Race conditions: several replicas of the image need to migrate at the same time, and we can't control when Kubernetes creates each pod

- Unpredictable behavior: multiple replicas trying to migrate at the same time leads to behavior we couldn't track. A Kubernetes Deployment creates pods in arbitrary order, so every pod can start the migration process and leave the database in an inconsistent state

- For these reasons, we decided not to go forward with this solution

This diagram shows the race condition that happens when 3 replicas try to migrate the schema at the same time.
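To make the race concrete, here is a minimal sketch of the approach we rejected. It assumes a hypothetical Deployment named laravel-api whose (also hypothetical) image registry.example.com/laravel-api:1.0.0 can run both artisan and php-fpm. Each of the three replicas runs the migration as part of its own startup command:

```yaml
# Hypothetical Deployment illustrating the rejected approach:
# the migration is baked into every replica's startup command.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: laravel-api                # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: laravel-api
  template:
    metadata:
      labels:
        app: laravel-api
    spec:
      containers:
        - name: laravel-api
          image: "registry.example.com/laravel-api:1.0.0"   # hypothetical image
          # Each of the 3 pods runs this independently and in no guaranteed
          # order, so up to 3 `migrate` runs can overlap against one database.
          command: ["sh", "-c", "php artisan migrate --force && php-fpm"]
```

Nothing in this manifest coordinates the three pods, which is exactly the problem described above.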


InitContainers

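- This is very similar to the previous solution and has the same drawbacks: init containers run once per pod, so with several replicas the migration still runs several times, possibly concurrently.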
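As a sketch, again using the hypothetical laravel-api names and image, moving the migration into an init container looks like this. The migration now lives in its own container, which is cleaner, but the Deployment still creates one init container per replica:

```yaml
# Hypothetical Deployment with the migration moved into an init container.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: laravel-api                # hypothetical name
spec:
  replicas: 3
  selector:
    matchLabels:
      app: laravel-api
  template:
    metadata:
      labels:
        app: laravel-api
    spec:
      # Init containers run to completion before the app container starts,
      # but once PER POD: with 3 replicas, `migrate` still runs 3 times.
      initContainers:
        - name: migrate
          image: "registry.example.com/laravel-api:1.0.0"   # hypothetical image
          command: ["php", "artisan", "migrate", "--force"]
      containers:
        - name: laravel-api
          image: "registry.example.com/laravel-api:1.0.0"
```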

Kubernetes job

A Job creates one or more Pods and will continue to retry execution of the Pods until a specified number of them successfully terminate. As pods successfully complete, the Job tracks the successful completions. When a specified number of successful completions is reached, the task (ie, Job) is complete. Deleting a Job will clean up the Pods it created.

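So, as mentioned above, a Kubernetes Job creates a pod that does a specific task and then exits instead of being kept running. With the default settings (one completion, parallelism of 1), only one pod runs at a time, and with restartPolicy: Never a failed container is not restarted in place (the Job replaces the pod, up to its backoffLimit). That's exactly what we needed: to run our migration once, in one pod only.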
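The Job fields that control this behavior are completions, parallelism, backoffLimit and restartPolicy. The following minimal sketch, with a hypothetical name and image, spells them out; completions and parallelism are shown at their default of 1 only for clarity:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  name: laravel-migrate            # hypothetical name
spec:
  completions: 1    # one successful run finishes the Job (default)
  parallelism: 1    # at most one pod runs at a time (default)
  backoffLimit: 2   # give up after 2 retries (the default is 6)
  template:
    spec:
      # A failed container is not restarted in place; the Job controller
      # creates a replacement pod until backoffLimit is reached.
      restartPolicy: Never
      containers:
        - name: migrate
          image: "registry.example.com/laravel-api:1.0.0"   # hypothetical image
          command: ["php", "artisan", "migrate", "--force"]
```

Because a retried pod runs the migration again, the migration command should be safe to re-run. Laravel records applied migrations in its migrations table, so migrations that already succeeded are skipped on the next run.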

Our Solution

Prerequisites

- This solution assumes a good background in Docker, Kubernetes, and Helm

- kubectl installed on your local machine and configured for your cluster

- A Kubernetes cluster up and running (we're not covering how to set up a k8s cluster here)

- If you don't have one, you can use Minikube

- We will use PHP/Laravel here to demonstrate our solution

- We have a PHP/Laravel pod

- Our database connection is configured in the PHP/Laravel app

- Our database is up and running (either inside or outside the cluster)

Use case

- We need to add a new table to our database

php artisan make:migration create_flights_table

- The migration has to run and the PHP/Laravel image has to be redeployed; this is the problem our solution solves

Configuration

- We eventually went with the Kubernetes Job solution

- This is an example of what we did to migrate the database

- You need a values.yaml file that defines the values this template refers to through Helm

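apiVersion: batch/v1
kind: Job
metadata:
  name: {{ include "APPLICATION.fullname" . }}-database-migration-job
spec:
  template:
    spec:
      containers:
        - name: {{ include "APPLICATION.fullname" . }}-database-migration-job
          image: "{{ .Values.databaseMigration.image }}:{{ .Values.databaseMigration.imageTag }}"
          imagePullPolicy: {{ .Values.image.pullPolicy }}
          # Run the Laravel migrations; --force skips the confirmation prompt
          # Laravel shows (and cancels on, without a TTY) when APP_ENV=production
          args:
            - php
            - artisan
            - migrate
            - --force
          env:
            - name: ENV_VARIABLE
              value: "false"
          # Reuse the application's config and secrets (DB host, credentials, ...)
          envFrom:
            - configMapRef:
                name: {{ include "APPLICATION.fullname" . }}
            - secretRef:
                name: {{ .Values.laravel.secrets_names.laravel_secrets }}
      # Never restart the container in place; the Job handles retries
      restartPolicy: Never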
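A values.yaml that provides the keys this template reads might look like the following sketch. The repository, tag and Secret name are hypothetical placeholders, and the ConfigMap named after APPLICATION.fullname is assumed to be defined elsewhere in the chart:

```yaml
# Hypothetical values.yaml supplying every key the Job template references.
image:
  pullPolicy: IfNotPresent

databaseMigration:
  image: registry.example.com/laravel-api   # hypothetical repository
  imageTag: "1.0.0"                         # hypothetical tag; quoted so it stays a string

laravel:
  secrets_names:
    laravel_secrets: laravel-secrets        # name of an existing Secret (hypothetical)
```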

Run

- To run the job and deploy using Helm, we run the following in the chart's root directory:

helm upgrade --install -n [NAMESPACE] api .

- To run the job using kubectl, you have to fill in the variables yourself instead of using values.yaml:

kubectl apply -f job.yaml
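For the kubectl route, job.yaml is the same manifest with every template expression replaced by a literal value. In this sketch, api-database-migration-job, api, laravel-secrets and the image reference are hypothetical; the ConfigMap and Secret must already exist in the namespace:

```yaml
# job.yaml: the Helm template above with its placeholders filled in by hand.
apiVersion: batch/v1
kind: Job
metadata:
  name: api-database-migration-job                   # hypothetical
spec:
  template:
    spec:
      containers:
        - name: api-database-migration-job
          image: "registry.example.com/laravel-api:1.0.0"   # hypothetical
          imagePullPolicy: IfNotPresent
          args:
            - php
            - artisan
            - migrate
            - --force
          env:
            - name: ENV_VARIABLE
              value: "false"
          envFrom:
            - configMapRef:
                name: api                            # hypothetical ConfigMap
            - secretRef:
                name: laravel-secrets                # hypothetical Secret
      restartPolicy: Never
```

Apply it in the application's namespace, for example with kubectl apply -n [NAMESPACE] -f job.yaml.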

Testing

- You can see the job and the pod it looks after like so:

kubectl get jobs

kubectl get pods

-

we can see that the pod for the job is created, ran and stopped after completing

-

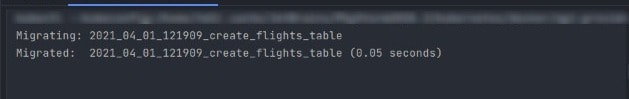

we can see the logs of the pod to make sure that our migration is done well

kubectl logs [pod-name]

Invoking the job

First, note that Kubernetes Jobs don't rerun by default: once a Job has completed, it stays completed. We found that we have to deploy the job again every time we deploy the application, so we decided to redeploy it on every release using our CI/CD pipeline (which is outside the scope of this article). Feel free to contact me if you'd like help setting up your pipeline.
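One way to get a fresh Job on every deploy, sketched here as an assumption rather than what our pipeline does, is to put Helm's built-in .Release.Revision into the Job name. Each helm upgrade then renders a Job with a new name, so Kubernetes creates and runs a new one instead of trying to modify the completed Job, whose pod template is immutable:

```yaml
apiVersion: batch/v1
kind: Job
metadata:
  # .Release.Revision increases on every install/upgrade, so every
  # release renders a Job with a new name and therefore a new run.
  name: {{ include "APPLICATION.fullname" . }}-database-migration-job-{{ .Release.Revision }}
spec:
  template:
    spec:
      containers:
        - name: database-migration
          image: "{{ .Values.databaseMigration.image }}:{{ .Values.databaseMigration.imageTag }}"
          args: ["php", "artisan", "migrate", "--force"]
          envFrom:
            - configMapRef:
                name: {{ include "APPLICATION.fullname" . }}
            - secretRef:
                name: {{ .Values.laravel.secrets_names.laravel_secrets }}
      restartPolicy: Never
```

Since the previous revision's Job is no longer in the rendered manifest, Helm should remove it during the upgrade. Note that the Job and the updated Deployment are applied together, so new application pods may start before the migration finishes.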

Author

Written by Ahmed Hanafy. Feel free to contact me if you have any questions.

Comments

You can even run the job as part of your Helm chart. You can set the job as a Helm pre-install/pre-upgrade hook, which makes sure the job runs before anything else and that the deployment only goes forward when the job succeeds.

NB: remember to create any Secrets, ConfigMaps, etc. that the job needs before the job itself runs (using a pre hook with a lower hook weight than the job).
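A minimal sketch of the hook approach described in this comment, reusing the chart's APPLICATION.fullname helper; the -migration-config ConfigMap and its DB_HOST value are hypothetical. Helm runs pre-install/pre-upgrade hooks in ascending hook-weight order, waits for a hook Job to complete, and fails the release if it fails:

```yaml
# Weight -1: created before the Job so it can be read on a fresh install.
apiVersion: v1
kind: ConfigMap
metadata:
  name: {{ include "APPLICATION.fullname" . }}-migration-config   # hypothetical
  annotations:
    "helm.sh/hook": pre-install,pre-upgrade
    "helm.sh/hook-weight": "-1"
    "helm.sh/hook-delete-policy": before-hook-creation
data:
  DB_HOST: mysql                   # hypothetical value
---
apiVersion: batch/v1
kind: Job
metadata:
  name: {{ include "APPLICATION.fullname" . }}-database-migration-job
  annotations:
    "helm.sh/hook": pre-install,pre-upgrade
    "helm.sh/hook-weight": "0"
    # Delete the previous hook Job before creating the new one,
    # so the same name can be reused on every upgrade.
    "helm.sh/hook-delete-policy": before-hook-creation
spec:
  backoffLimit: 2
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: database-migration
          image: "{{ .Values.databaseMigration.image }}:{{ .Values.databaseMigration.imageTag }}"
          args: ["php", "artisan", "migrate", "--force"]
          envFrom:
            - configMapRef:
                name: {{ include "APPLICATION.fullname" . }}-migration-config
            - secretRef:
                name: {{ .Values.laravel.secrets_names.laravel_secrets }}
```

The Secret is assumed to exist already; if the chart creates it, it needs a hook annotation with a lower weight as well. Also keep in mind that Helm does not track hook resources as part of the release, so helm uninstall does not delete them.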

Yes, I found this solution too, but we preferred to invoke the job from our CI pipelines instead.

You did clarify, thank you

Regarding the DB migration, we limited it to the production DB only.

Our dev environment has its migrations managed another way (I’m not sure how, but I think it’s handled using docker-compose).

I will check the Java library, thank you very much.

This is my first article and I’m still a junior SWE learning DevOps, so I hope my article (which I put a lot of effort into) helped you a bit.

Thanks

We assumed we won’t have breaking changes in the database, so older versions of the services can use the newer database schema without problems.

I have no idea whether there are libraries that do so; if you can point me to one, that would be very helpful.

Tbh, I don’t understand your point about the setup script. Can you explain it a bit more?

Maybe this could be solved by introducing a DB migration framework: centralised software that applies changes to the DB. Just to name a few: liquibase.org/, flywaydb.org/, or (shameless plug) github.com/lukaszbudnik/migrator