This article was originally published on ScraperAPI

Whether you’re an investor tracking your portfolio, or an investment firm looking for a way to stay up-to-date more efficiently, creating a script to scrape stock market data can save you both time and energy.

In this tutorial, we’ll build a script to track multiple stock prices, organize them into an easy-to-read CSV file that will update itself with the push of a button, and collect hundreds of data points in a few seconds.

Building a Stock Market Scraper With Requests and Beautiful Soup

For this exercise, we’ll scrape investing.com to extract up-to-date stock prices for Microsoft, Coca-Cola, and Nike, and store them in a CSV file. We’ll also show you how to use ScraperAPI to keep your bot from being blocked by anti-scraping mechanisms.

Note: The script will scrape stock market data even without ScraperAPI, but ScraperAPI becomes crucial once you start scaling your project.

Although we’ll be walking you through every step of the process, having some knowledge of the Beautiful Soup library beforehand is helpful. If you’re totally new to this library, check out our Beautiful Soup tutorial for beginners. It’s packed with tips and tricks, and goes over the basics you need to know to scrape almost anything.

With that out of the way, let’s jump into the code so you can learn how to scrape stock market data.

1. Setting Up Our Project

To begin, we’ll create a folder named “scraper-stock-project” and open it in VS Code (you can use any text editor you’d like). Next, we’ll open a new terminal and install the two main dependencies for this project:

```bash
pip3 install beautifulsoup4
pip3 install requests
```

After that, we’ll create a new file named “stockData-scraper.py” and import our dependencies into it:

```python
import requests
from bs4 import BeautifulSoup
```

With Requests, we’ll be able to send an HTTP request to download the HTML file which is then passed on to BeautifulSoup for parsing. So let’s test it by sending a request to Nike’s stock page:

```python
url = 'https://www.investing.com/equities/nike'
page = requests.get(url)
print(page.status_code)
```

By printing the status code of the page variable (which holds the server’s response to our request), we’ll know whether the request succeeded and the page is available to scrape. The code we’re looking for is 200, which means the request was successful.

Success! Before moving on, we’ll pass the response stored in page to Beautiful Soup for parsing:

```python
soup = BeautifulSoup(page.text, 'html.parser')
```

You can use any parser you want, but we’re going with html.parser: it’s the one we like, and it’s built into Python, so there’s nothing extra to install.


2. Inspect the Website’s HTML Structure (Investing.com)

Before we start scraping, let’s open https://www.investing.com/equities/nike in our browser to get more familiar with the website.

As you can see in the screenshot, the page displays the company’s name, stock symbol, price, and price change. At this point, we have three questions to answer:

Is the data being injected with JavaScript?

What attribute can we use to select the elements?

Are these attributes consistent across all pages?

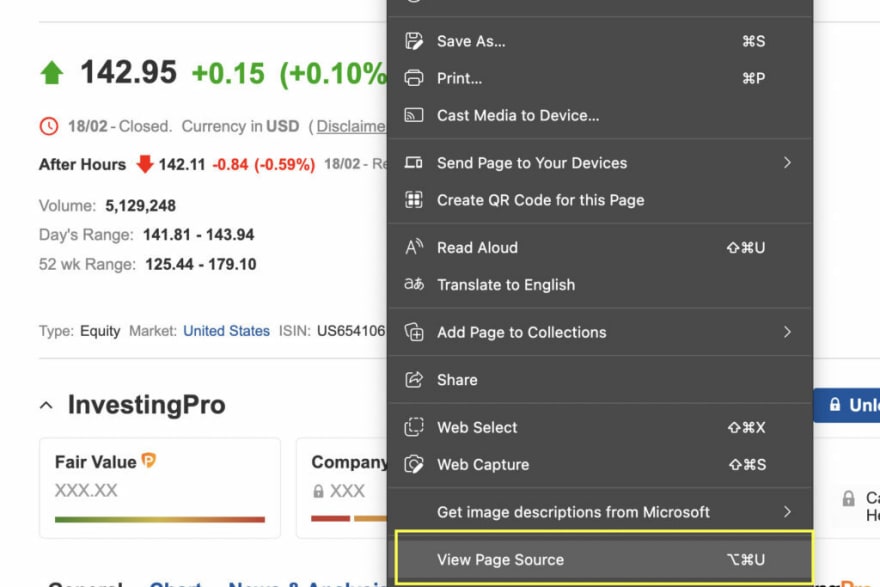

Check for JavaScript

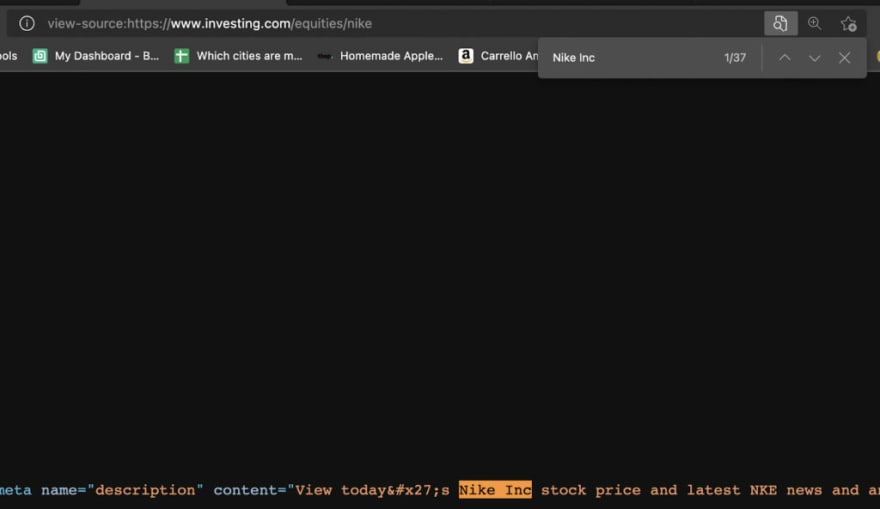

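There are several ways to check whether a script is injecting a piece of data, but the easiest is to right-click the page, select View Page Source, and search for the value you see on screen. If it appears in the raw HTML, the server sends it directly, and Requests will receive it too.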

It looks like there isn’t any JavaScript that could interfere with our scraper. Next, we’ll do the same for the rest of the information. Since we didn’t find any additional JavaScript, we’re good to go.

Note: Checking for JavaScript is important because Requests can’t execute JavaScript or interact with the website, so if the information is behind a script, we would have to use other tools to extract it, like Selenium.
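If you’d rather repeat this check from code, one option is to look for a value you can see in the browser (for example, the company name) in the raw HTML while ignoring anything inside `<script>` or `<style>` tags, since data that only lives inside a script usually needs JavaScript to end up on the page. This is a sketch using only Python’s standard library; the `VisibleTextCollector` and `appears_in_static_html` names and the sample HTML strings are hypothetical, and in practice you would pass in `page.text` from the request above.

```python
from html.parser import HTMLParser


class VisibleTextCollector(HTMLParser):
    """Collects text that sits outside <script> and <style> elements."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside <script> or <style>
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.chunks.append(data)


def appears_in_static_html(html: str, needle: str) -> bool:
    """Return True if `needle` shows up as regular (non-script) text in the raw HTML."""
    collector = VisibleTextCollector()
    collector.feed(html)
    collector.close()
    return needle in " ".join(collector.chunks)


if __name__ == "__main__":
    server_rendered = "<h1>Nike Inc (NKE)</h1><script>var x = 1;</script>"
    script_only = '<div id="app"></div><script>render({"name": "Nike Inc (NKE)"})</script>'
    print(appears_in_static_html(server_rendered, "Nike Inc (NKE)"))  # True
    print(appears_in_static_html(script_only, "Nike Inc (NKE)"))      # False
```

A `False` result doesn’t prove the page needs JavaScript (the text might be split across tags, for instance), but a `True` result is a good sign that Requests alone will do.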

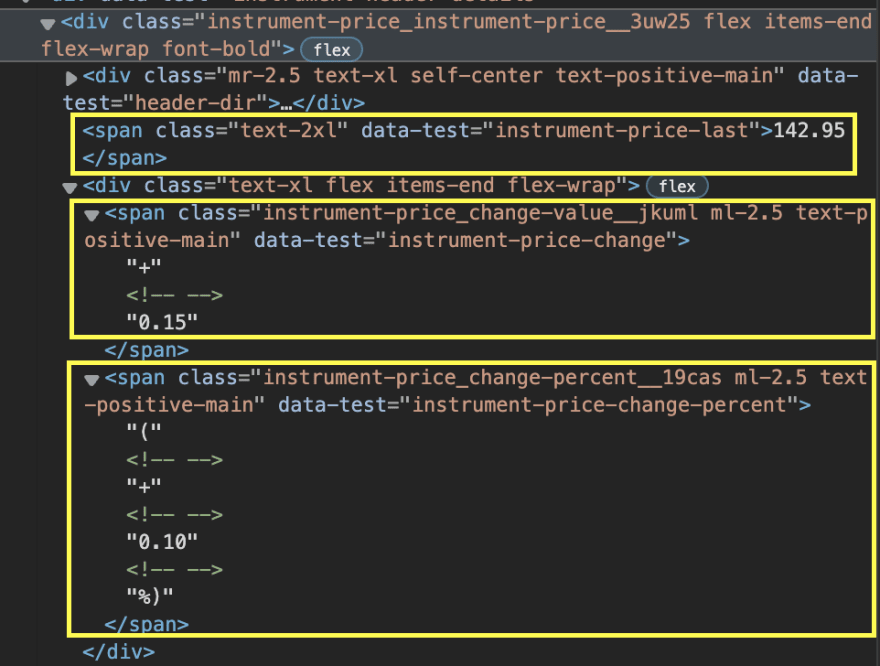

Picking the CSS Selectors

Now let’s inspect the HTML of the site to identify the attributes we can use to select the elements.

Extracting the company’s name and the stock symbol will be a breeze. We just need to target the H1 tag with class ‘text-2xl font-semibold instrument-header_title__GTWDv mobile:mb-2’.

However, the price, price change, and percentage change are separated into different spans.

What’s more, the class of these elements changes depending on whether the change is positive or negative, so selecting each span by its class attribute would break whenever the price moves in the other direction.

The good news is that there’s a little trick to work around this. Because Beautiful Soup returns a parsed tree, we can navigate the tree and pick the element we want, even without knowing its exact CSS class.

What we’ll do in this scenario is go up the hierarchy and find a parent div we can exploit. Then we can use find_all('span') to make a list of all the span elements inside it, which we know hold our target data. And because it’s a list, we can easily pick the ones we need by index.

So here are our targets:

```python
company = soup.find('h1', {'class': 'text-2xl font-semibold instrument-header_title__GTWDv mobile:mb-2'}).text
price = soup.find('div', {'class': 'instrument-price_instrument-price__3uw25 flex items-end flex-wrap font-bold'}).find_all('span')[0].text
change = soup.find('div', {'class': 'instrument-price_instrument-price__3uw25 flex items-end flex-wrap font-bold'}).find_all('span')[2].text
```
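The code above searches for the same price container twice, and if any element is missing, `soup.find()` returns `None` and the next call raises `AttributeError`. One way to tidy this up is to wrap the selection in a small function that finds the parent div once and returns `None` when the structure isn’t there. This is a sketch: `parse_quote` is a hypothetical name, the class strings and span indices 0 and 2 are copied from the selectors above, and the sample markup is made up to mimic the structure described in this section, not taken from the live page.

```python
from bs4 import BeautifulSoup

TITLE_CLASS = 'text-2xl font-semibold instrument-header_title__GTWDv mobile:mb-2'
PRICE_DIV_CLASS = 'instrument-price_instrument-price__3uw25 flex items-end flex-wrap font-bold'


def parse_quote(html):
    """Return (company, price, change), or None if the expected elements are missing."""
    soup = BeautifulSoup(html, 'html.parser')
    title = soup.find('h1', {'class': TITLE_CLASS})
    price_div = soup.find('div', {'class': PRICE_DIV_CLASS})
    if title is None or price_div is None:
        return None
    # Pick spans by position inside the parent div, because their own
    # classes change depending on whether the move is positive or negative.
    spans = price_div.find_all('span')
    if len(spans) < 3:
        return None
    return (
        title.get_text(strip=True),
        spans[0].get_text(strip=True),
        spans[2].get_text(strip=True),
    )


# Made-up sample markup (hypothetical values) mimicking the structure above.
SAMPLE = f'''
<h1 class="{TITLE_CLASS}">NIKE Inc (NKE)</h1>
<div class="{PRICE_DIV_CLASS}">
  <span>97.43</span><span class="down">-1.20</span><span class="down">(-1.22%)</span>
</div>
'''

print(parse_quote(SAMPLE))           # ('NIKE Inc (NKE)', '97.43', '(-1.22%)')
print(parse_quote('<html></html>'))  # None
```

Which span holds which value depends on the live markup, so it’s worth re-checking the indices in your browser’s inspector. Class names with build-generated suffixes such as `__3uw25` may also change over time, which is one reason to keep them in a single constant.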

Now for a test run:

```python
print('Loading: ', url)
print(company, price, change)
```

And here’s the result:

3. Scrape Multiple Stocks

Now that our parser is working, let’s scale this up and scrape several stocks. After all, a script that tracks a single stock is unlikely to be very useful.

We can make our scraper parse and scrape several pages by creating a list of URLs and looping through them to output the data.

```python
urls = [
    'https://www.investing.com/equities/nike',
    'https://www.investing.com/equities/coca-cola-co',
    'https://www.investing.com/equities/microsoft-corp',
]

for url in urls:
    page = requests.get(url)
    soup = BeautifulSoup(page.text, 'html.parser')

    company = soup.find('h1', {'class': 'text-2xl font-semibold instrument-header_title__GTWDv mobile:mb-2'}).text
    price = soup.find('div', {'class': 'instrument-price_instrument-price__3uw25 flex items-end flex-wrap font-bold'}).find_all('span')[0].text
    change = soup.find('div', {'class': 'instrument-price_instrument-price__3uw25 flex items-end flex-wrap font-bold'}).find_all('span')[2].text

    print('Loading: ', url)
    print(company, price, change)
```

Here’s the result after running it:

Awesome, it works across the board!

We can keep adding more and more pages to the list but eventually, we’ll hit a big roadblock: anti-scraping techniques.

4. Integrating ScraperAPI to Handle IP Rotation and CAPTCHAs

Not every website likes to be scraped, and for good reason. When scraping a website, we need to keep in mind that we’re sending traffic to it, and if we’re not careful, we could eat into the bandwidth the site has for real visitors, or even increase hosting costs for the owner. That said, as long as we follow web scraping best practices, such as spacing out our requests, we can keep our projects running without causing issues for the sites we scrape.
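One practical way to apply those best practices to our loop is to pause between requests and to keep going when a single page fails, instead of letting one `AttributeError` or network error stop the whole run. This is a sketch using only the standard library; `scrape_politely`, the two-second default delay, and the `fetch`/`parse` callables are assumptions for illustration. In the real script, `fetch` could wrap `requests.get(url).text`, and `parse` could be a function that applies our Beautiful Soup selectors and returns `None` when they don’t match.

```python
import time


def scrape_politely(urls, fetch, parse, delay_seconds=2.0):
    """Fetch and parse each URL, sleeping between requests.

    fetch: callable taking a URL and returning HTML text (may raise).
    parse: callable taking HTML and returning a row, or None if parsing failed.
    Returns (rows, failed_urls).
    """
    rows, failed = [], []
    for i, url in enumerate(urls):
        if i > 0:
            time.sleep(delay_seconds)  # space requests out instead of bursting
        try:
            row = parse(fetch(url))
        except Exception as exc:  # network errors, unexpected markup, etc.
            print('Skipping', url, '->', repr(exc))
            failed.append(url)
            continue
        if row is None:
            failed.append(url)
        else:
            rows.append(row)
    return rows, failed


if __name__ == '__main__':
    # Fake pages so the example runs offline.
    fake_pages = {'https://example.com/a': 'A,1.00', 'https://example.com/b': 'B,2.00'}

    def fake_fetch(url):
        return fake_pages[url]  # raises KeyError for unknown URLs

    def fake_parse(html):
        return html.split(',')

    urls = ['https://example.com/a', 'https://example.com/missing', 'https://example.com/b']
    print(scrape_politely(urls, fake_fetch, fake_parse, delay_seconds=0))
```

Catching a broad `Exception` keeps the sketch short; in a longer-lived script you may prefer to catch specific errors (such as those raised by `requests`) so genuine bugs still surface.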

However, it’s hard for businesses to tell ethical scrapers apart from those that will overload their sites. For this reason, many websites use systems such as:

Browser behavior profiling

CAPTCHAs

Monitoring the number of requests from an IP address in a time period

These measures are designed to recognize bots, and block them from accessing the website for days, weeks, or even forever.

Instead of handling all of these scenarios individually, we’ll just add two lines of code to make our requests go through ScraperAPI’s servers and get everything automated for us.

First, let’s create a free ScraperAPI account to access our API key and 5000 free API credits for our project.

Now we’re ready to add a new params variable to our loop that stores our API key and the target URL, and then use urlencode to build the query string for the request we store in the page variable:

```python
params = {'api_key': 'YOUR_API_KEY', 'url': url}  # replace with your own ScraperAPI key
page = requests.get('http://api.scraperapi.com/', params=urlencode(params))
```

Oh! And we can’t forget to import urlencode at the top of the file (it’s part of Python’s standard library, so there’s nothing new to install):

```python
from urllib.parse import urlencode
```

Every request will now be sent through ScraperAPI, which will automatically rotate our IP after every request, handle CAPTCHAs, and use machine learning and statistical analysis to set the best headers to ensure success.

Quick Tip: ScraperAPI also allows us to scrape dynamic sites by adding 'render': 'true' to our params dictionary (note the quotes: a bare true isn’t valid Python). ScraperAPI will then render the page before sending back the response.
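To see exactly what `urlencode` sends, and to keep the API key out of the source file, the request URL can be built in a small helper. This is a sketch: the `SCRAPERAPI_KEY` environment variable and the `build_scraperapi_url` name are our own choices, not something ScraperAPI requires.

```python
import os
from urllib.parse import urlencode

SCRAPERAPI_ENDPOINT = 'http://api.scraperapi.com/'


def build_scraperapi_url(target_url, api_key=None, render=False):
    """Return the full ScraperAPI request URL for `target_url`.

    The key is read from the (hypothetical) SCRAPERAPI_KEY environment
    variable when it isn't passed explicitly.
    """
    key = api_key or os.environ.get('SCRAPERAPI_KEY')
    if not key:
        raise ValueError('Set SCRAPERAPI_KEY or pass api_key')
    params = {'api_key': key, 'url': target_url}
    if render:
        params['render'] = 'true'  # ask ScraperAPI to render JavaScript first
    # urlencode percent-encodes the target URL so it survives as a query value
    return SCRAPERAPI_ENDPOINT + '?' + urlencode(params)


print(build_scraperapi_url('https://www.investing.com/equities/nike', api_key='YOUR_API_KEY'))
# http://api.scraperapi.com/?api_key=YOUR_API_KEY&url=https%3A%2F%2Fwww.investing.com%2Fequities%2Fnike
```

With a helper like this, `requests.get(build_scraperapi_url(url))` does the same job as the params/urlencode pair in the loop. `requests` can also take the `params` dictionary directly (`params=params`) and encode it for you.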

5. Store Data In a CSV File

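To store your data in an easy-to-use CSV file, import Python’s built-in csv module and add these lines between your URL list and your loop:

```python
import csv  # goes at the top of the file with the other imports

# newline='' stops the csv module from adding blank rows on Windows;
# encoding='utf-8' handles non-ASCII characters in company names
file = open('stockprices.csv', 'w', newline='', encoding='utf-8')
writer = csv.writer(file)
writer.writerow(['Company', 'Price', 'Change'])
```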

This creates a new stockprices.csv file (overwriting any previous one), passes it to csv.writer (stored in the writer variable), and writes the first row with our headers.

It’s essential to add it outside of the loop, or it will rewrite the file after scraping each page, basically erasing previous data and giving us a CSV file with only the data from the last URL from our list.

In addition, we’ll need to add another line to our loop to write the scraped data:

```python
# Write plain strings: the file object handles the encoding.
# Calling .encode() here would write b'...' literals into the CSV.
writer.writerow([company, price, change])
```

And one more outside the loop to close the file:

```python
file.close()
```
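Why write plain strings instead of `.encode('utf-8')`? In Python 3, `csv.writer` converts non-string values with `str()`, so a bytes object ends up in the file as the literal text `b'...'`. The sketch below uses only the standard library (the file is a throwaway temp file, and the prices are made-up sample values): it writes both versions to a CSV and reads them back, opening the file with `newline=''` as the `csv` module documentation recommends.

```python
import csv
import os
import tempfile


def write_and_read(rows):
    """Write a header plus `rows` to a temporary CSV file and return its text."""
    fd, path = tempfile.mkstemp(suffix='.csv')
    os.close(fd)
    try:
        # newline='' lets the csv module control line endings itself;
        # encoding='utf-8' handles non-ASCII text without any .encode() calls.
        with open(path, 'w', newline='', encoding='utf-8') as f:
            writer = csv.writer(f)
            writer.writerow(['Company', 'Price', 'Change'])
            writer.writerows(rows)
        with open(path, newline='', encoding='utf-8') as f:
            return f.read()
    finally:
        os.remove(path)


as_bytes = write_and_read([['Nike'.encode('utf-8'), '97.43'.encode('utf-8'), '-1.20'.encode('utf-8')]])
as_text = write_and_read([['Nike', '97.43', '-1.20']])
print(as_bytes)  # data row reads: b'Nike',b'97.43',b'-1.20'
print(as_text)   # data row reads: Nike,97.43,-1.20
```

Using `with open(...)` also closes the file automatically, even if an exception is raised midway, so you don’t have to remember a separate `close()` call.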

6. Finished Code: Stock Market Data Script

You’ve made it! You can now use this script with your own API key and add as many stocks as you want to scrape:

```python
# dependencies
import csv
from urllib.parse import urlencode

import requests
from bs4 import BeautifulSoup

# list of URLs
urls = [
    'https://www.investing.com/equities/nike',
    'https://www.investing.com/equities/coca-cola-co',
    'https://www.investing.com/equities/microsoft-corp',
]

# starting our CSV file (newline='' avoids blank rows on Windows)
file = open('stockprices.csv', 'w', newline='', encoding='utf-8')
writer = csv.writer(file)
writer.writerow(['Company', 'Price', 'Change'])

# looping through our list
for url in urls:
    # sending our request through ScraperAPI (replace with your own key)
    params = {'api_key': 'YOUR_API_KEY', 'url': url}
    page = requests.get('http://api.scraperapi.com/', params=urlencode(params))

    # our parser: find the price container once and reuse it
    soup = BeautifulSoup(page.text, 'html.parser')
    company = soup.find('h1', {'class': 'text-2xl font-semibold instrument-header_title__GTWDv mobile:mb-2'}).text
    price_div = soup.find('div', {'class': 'instrument-price_instrument-price__3uw25 flex items-end flex-wrap font-bold'})
    price = price_div.find_all('span')[0].text
    change = price_div.find_all('span')[2].text

    # printing to have some visual feedback
    print('Loading:', url)
    print(company, price, change)

    # writing the data into our CSV file as plain strings
    writer.writerow([company, price, change])

file.close()
```

Wrapping Up: considerations when running your stock market data scraper

You need to remember that the stock market isn’t always open. For example, if you’re scraping data from the New York Stock Exchange (NYSE), regular trading closes at 4 pm Eastern Time on Friday and reopens on Monday at 9:30 am, so there’s no point in running your scraper over the weekend. Regular trading also ends at 4 pm on weekdays, so you won’t see changes in the regular-session price after that. Keep exchange holidays in mind, too.
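If you schedule the script, a cheap guard is to skip runs outside regular trading hours. The sketch below assumes the datetime it receives is already in New York (Eastern) local time and only checks the weekday and the 9:30 am to 4:00 pm window; it knows nothing about exchange holidays or early closes, and `is_regular_session` is a hypothetical name.

```python
from datetime import datetime, time

MARKET_OPEN = time(9, 30)
MARKET_CLOSE = time(16, 0)


def is_regular_session(now_eastern: datetime) -> bool:
    """True during regular NYSE hours: Monday to Friday, 9:30 am to 4:00 pm.

    Assumes `now_eastern` is already expressed in New York local time.
    Exchange holidays and early closes are NOT handled.
    """
    if now_eastern.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return False
    return MARKET_OPEN <= now_eastern.time() < MARKET_CLOSE


print(is_regular_session(datetime(2024, 3, 4, 10, 0)))   # Monday 10:00 am -> True
print(is_regular_session(datetime(2024, 3, 9, 10, 0)))   # Saturday -> False
print(is_regular_session(datetime(2024, 3, 8, 16, 30)))  # Friday 4:30 pm -> False
```

On Python 3.9+, you could obtain the current New York time with `datetime.now(ZoneInfo('America/New_York'))` from the `zoneinfo` module, provided time zone data is available on your system.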

Another variable to keep in mind is how often you need to update the data. The most volatile times for the stock exchange are opening and closing times. So it might be enough to run your script at 9:30 am, at 11 am, and at 4:30 pm to see how the stocks closed. Monday’s opening is also crucial to monitor as many trades occur during this time.
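To turn those suggested times into a schedule, the script needs to know when the next run is due. A minimal sketch, again assuming naive datetimes in New York local time and ignoring holidays; `RUN_TIMES` mirrors the 9:30 am, 11 am, and 4:30 pm suggestion above, and `next_run` is a hypothetical helper you could pair with `time.sleep()` or an external scheduler.

```python
from datetime import datetime, time, timedelta

# Suggested run times from the text, in New York local time.
RUN_TIMES = [time(9, 30), time(11, 0), time(16, 30)]


def next_run(now: datetime, run_times=RUN_TIMES) -> datetime:
    """Return the first scheduled run strictly after `now`, skipping weekends.

    Expects a naive datetime (comparing it with a timezone-aware one would fail).
    """
    day = now.date()
    while True:
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            for t in sorted(run_times):
                candidate = datetime.combine(day, t)
                if candidate > now:
                    return candidate
        day += timedelta(days=1)


print(next_run(datetime(2024, 3, 4, 10, 0)))  # 2024-03-04 11:00:00
print(next_run(datetime(2024, 3, 8, 17, 0)))  # 2024-03-11 09:30:00 (Friday evening -> Monday)
```

To wait for the next run, you could sleep for `(next_run(now) - now).total_seconds()` seconds before scraping again.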

Unlike markets such as Forex, the stock market typically doesn’t swing wildly. That said, news and business decisions can heavily impact stock prices (take the crash in Meta’s share price or the surge in GameStop’s as examples), so reading the news related to the stocks you’re scraping is vital.

We hope this tutorial helped you build your own stock market data scraper, or at least pointed you in the right direction. In a future tutorial, we’ll build on top of this project to create a real-time stock data scraper to monitor your stocks, so stay tuned for that!

![Cover image for How to Scrape Stock Market Data in Python [Practical Guide, Plus Code]](https://media2.dev.to/dynamic/image/width=1000,height=420,fit=cover,gravity=auto,format=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Fmrwiv267641psnfv7hq1.jpg)
