An end-to-end demo of a simple IoT system to stream and visualize heart rate data.

![Cover photo](https://res.cloudinary.com/practicaldev/image/fetch/s--1Yug8xAX--/c_limit%2Cf_auto%2Cfl_progressive%2Cq_auto%2Cw_880/https://cdn-images-1.medium.com/max/10368/0%2Am_6w4yHF3UKsXlLA)
Photo on [Unsplash](https://unsplash.com?utm_source=medium&utm_medium=referral)

Thanks to the growing popularity of fitness trackers and smartwatches, more people are tracking their biometric data closely and integrating IoT into their everyday lives. In my search for a DIY heart rate tracker, I found an excellent walkthrough by Brandon Freitag and Gabe Weiss. It uses Google Cloud services to stream data from a Raspberry Pi with a heart rate sensor to BigQuery via IoT Core and Cloud Dataflow.

![Image from the Google Codelab](https://res.cloudinary.com/practicaldev/image/fetch/s--T-TO1Y8w--/c_limit%2Cf_auto%2Cfl_progressive%2Cq_auto%2Cw_880/https://cdn-images-1.medium.com/max/2000/0%2APOR1pmV-c868f6KM.png)

Image Credit: Google Codelab

Cloud Dataflow supports streaming inserts into BigQuery, but I wanted to take this opportunity to try out QuestDB, a new time-series database I had come across. QuestDB is a fast, open-source time-series database with Postgres compatibility. The live demo on its website queried the NYC taxi rides dataset of over 1.6 billion rows in milliseconds, so I was excited to give it a try. To round out the end-to-end demo, I used Grafana to pull and visualize data from QuestDB.

Prerequisites

NodeJS v14+

Docker

Optional: *Raspberry Pi kit*

In this tutorial, we will use a Debian VM on Compute Engine to send simulated heart rate data through IoT Core. If you wish to send real sensor data from a Raspberry Pi, purchase the optional kit listed above and follow the install instructions.

Google Cloud Setup

To use Cloud IoT Core and Cloud Pub/Sub, you first need to create a Google Cloud Platform account and a new project (mine is called questdb-iot-demo). Then enable the IoT Core, Compute Engine, and Pub/Sub APIs under APIs & Services -> Enable APIs and Services -> Search for APIs & Services.

IoT Core Setup

IoT Core is Google’s fully managed service for securely connecting and managing IoT devices. In this demo, we will create a registry called heartrate and send data to it over MQTT. Click on “Create Registry”, set the Registry ID, and choose the Region closest to you (for me it was us-central1).

Next, we need to configure a Pub/Sub topic for the device data. Under “Select a Cloud Pub/Sub topic”, click on “Create a Topic” and give it the Topic ID heartratedata.

Once the “Registry properties” and “Cloud Pub/Sub topics” are configured, click on “Create”.

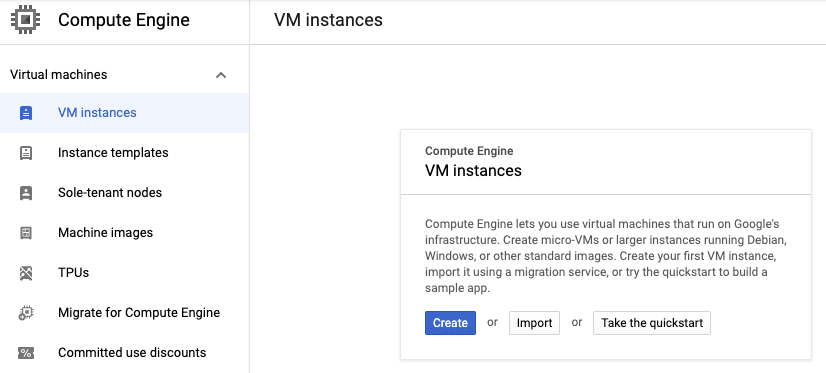

Compute Engine Setup

Now it’s time to add our simulated device. For the device to authenticate with IoT Core, we need to register its public key, which we will generate on a Compute Engine VM. Head over to Compute Engine -> Create.

The default options (e2-medium, Debian 10 image, us-central1) will work for our simulator (*Note: if you chose a region other than us-central1, make sure it matches the IoT Core registry region*). Once the VM is ready, click on the SSH button under “Connect” and install the project code:

```shell
# Install git
sudo apt-get update
sudo apt-get install git

# Clone project code
git clone https://github.com/googlecodelabs/iotcore-heartrate
cd iotcore-heartrate

# Install all the core packages
chmod +x initialsoftware.sh
./initialsoftware.sh

# Generate the keys
chmod +x generate_keys.sh
./generate_keys.sh

# View the keys (highlighting will copy the contents)
cat ../.ssh/ec_public.pem
```

IoT Device Setup

Once you have the ec_public.pem key, head back to the IoT Core registry. Under “Devices”, click on “Create a Device”. For Device ID, enter raspberryHeartRate and expand the “Communication, Cloud Logging, Authentication” drop-down.

Under Authentication, set the “Public key format” to ES256, paste in the key from the VM (or Raspberry Pi), and click “Create”.

Pub/Sub Setup

Finally, we need to create a subscription on our Pub/Sub topic so we can pull messages and insert them into QuestDB. Head over to “Pub/Sub” and click on our heartratedata topic. Name the subscription questdb and click “Create”.
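If you prefer to script this step, you can also create the subscription from NodeJS with the @google-cloud/pubsub client. The following is an illustration and not part of the original walkthrough. `ensureSubscription` is a hypothetical helper. The sketch assumes the project ID questdb-iot-demo, the topic heartratedata, and Application Default Credentials (configured with gcloud later in this post). It takes the `PubSub` class as a parameter so it can be exercised without a live project.

```javascript
// createSubscription.js: a sketch that creates the "questdb" subscription
// on the "heartratedata" topic, mirroring the console steps.
// Assumptions: project "questdb-iot-demo" and Application Default Credentials.

async function ensureSubscription(PubSub, {
  projectId = "questdb-iot-demo",
  topicId = "heartratedata",
  subscriptionId = "questdb",
} = {}) {
  const pubsub = new PubSub({ projectId });
  const subscription = pubsub.subscription(subscriptionId);

  // exists() resolves to a one-element array: [boolean].
  const [exists] = await subscription.exists();
  if (exists) return subscription;

  // createSubscription() resolves to [Subscription, apiResponse].
  const [newSub] = await pubsub.topic(topicId).createSubscription(subscriptionId);
  return newSub;
}

module.exports = { ensureSubscription };
```

In real use, call it as `ensureSubscription(require("@google-cloud/pubsub").PubSub)`. Because it checks `exists()` first, the script is safe to rerun.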

QuestDB Setup

At this point, we have everything on Google Cloud to send data to our Pub/Sub topic. Now we need to write some code to take those messages and insert them into QuestDB. Let’s begin by starting QuestDB with Docker:

```shell
docker run -p 9000:9000 -p 8812:8812 questdb/questdb
```

The above command pulls the latest QuestDB image (v5.0.6 at the time of writing). It maps port 9000 for the console UI and port 8812 for the Postgres wire protocol. Open the QuestDB console at http://127.0.0.1:9000/ and create our heart_rate table:

```sql
CREATE TABLE heart_rate(sensorID STRING, uniqueID STRING, timecollected TIMESTAMP, heartrate DOUBLE);
```

(Note: the UI doesn’t refresh automatically. If you don’t see the heart_rate table on the tables panel, click the refresh icon above the tables.)

Pub/Sub to QuestDB

Since QuestDB has no native Pub/Sub integration, we need to write a simple program that listens for new Pub/Sub messages and inserts the data into QuestDB. I’m using NodeJS v14.15.4, but Pub/Sub and Postgres client libraries in other languages work just as well.

First, configure the gcloud SDK to authenticate with your GCP project without downloading a service account key (see Simple GCP Authentication with Service Accounts for more details).

```shell
# Set Application Default Credentials
gcloud auth application-default login
```

Next, create a new NodeJS workspace and install the @google-cloud/pubsub and pg libraries. The program only needs to listen for new messages on the questdb subscription and insert each one into the heart_rate table through QuestDB’s Postgres port (8812).
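A minimal listener might look like the following sketch. It is not the author’s original program, and several values are assumptions:

- the project ID is questdb-iot-demo and the subscription is questdb;
- QuestDB uses its default Postgres credentials (user admin, password quest, database qdb);
- timecollected is in UTC;
- QuestDB casts an ISO-8601 string parameter into the TIMESTAMP column.

The helper names `toRow` and `startBridge` are hypothetical. The library classes are passed in so the wiring can be tested with fakes.

```javascript
// bridge.js: a minimal sketch of a Pub/Sub -> QuestDB bridge.
// Assumed values: project "questdb-iot-demo", subscription "questdb",
// QuestDB Postgres endpoint 127.0.0.1:8812 with default credentials.

const INSERT_SQL =
  "INSERT INTO heart_rate (sensorID, uniqueID, timecollected, heartrate) " +
  "VALUES ($1, $2, $3, $4)";

// Map one message from heartrateSimulator.py to INSERT parameters.
// Assumption: "timecollected" looks like "2018-07-07 20:54:50" and is UTC;
// it is re-emitted as ISO-8601 for QuestDB to cast into the TIMESTAMP column.
function toRow(data) {
  const msg = JSON.parse(data.toString("utf8"));
  const ts = new Date(String(msg.timecollected).replace(" ", "T") + "Z");
  if (Number.isNaN(ts.getTime())) {
    throw new Error(`unparseable timecollected: ${msg.timecollected}`);
  }
  return [msg.sensorID, msg.uniqueID, ts.toISOString(), Number(msg.heartrate)];
}

// PubSub and Client are injected; in real use pass the library classes.
async function startBridge(PubSub, Client, {
  projectId = "questdb-iot-demo",
  subscriptionId = "questdb",
  host = "127.0.0.1",
  port = 8812,
} = {}) {
  const client = new Client({ host, port, user: "admin", password: "quest", database: "qdb" });
  await client.connect();

  const subscription = new PubSub({ projectId }).subscription(subscriptionId);

  subscription.on("message", async (message) => {
    try {
      await client.query(INSERT_SQL, toRow(message.data));
      message.ack(); // acknowledge only after the row is written
    } catch (err) {
      // nack() asks Pub/Sub to redeliver; a malformed message would keep
      // coming back, so a real service would dead-letter it instead.
      console.error("insert failed:", err.message);
      message.nack();
    }
  });
  subscription.on("error", (err) => console.error("subscription error:", err));

  return { client, subscription };
}

module.exports = { INSERT_SQL, toRow, startBridge };
```

To run it against the real services, call `startBridge(require("@google-cloud/pubsub").PubSub, require("pg").Client)` from a small entry script. Each message is acknowledged only after its INSERT resolves, so a QuestDB outage leads to redelivery rather than lost readings. Pub/Sub delivers at least once, so duplicates are possible; uniqueID can help spot them. A single pg Client runs one query at a time, which is fine for one simulated device.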

Sending Data

Finally, we are ready to send the simulated data. Switch back to Compute Engine and SSH into the VM again. From the iotcore-heartrate directory, issue the command below to send data to our IoT Core device:

```shell
python heartrateSimulator.py --project_id=questdb-iot-demo --registry_id=heartrate --device_id=raspberryHeartRate --private_key_file=../.ssh/ec_private.pem
```

If successful, you should see some logs like:

```
...
Publishing message #544: '{"sensorID": "heartrate.raspZero", "heartrate": 72.56881801680139, "uniqueID": "c1ca9656-671f-4fa7-8c03-12fdfb4f422f-heartrate.raspZero", "timecollected": "2018-07-07 20:54:50"}'
Publishing message #545: '{"sensorID": "heartrate.raspZero", "heartrate": 72.8324264524384, "uniqueID": "8d6337b7-204f-4209-88c0-46a79d1911bb-heartrate.raspZero", "timecollected": "2018-07-07 20:54:59"}'
Finished.
```

Now run the NodeJS program, and the data should show up in QuestDB.
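To confirm that rows are arriving without opening the console, a short script can count them over the Postgres port. This is a sketch only. `countHeartRates` is a hypothetical name, the connection settings are QuestDB’s defaults (user admin, password quest, database qdb on 127.0.0.1:8812), and the pg `Client` class is passed in so the function can be tested with a fake.

```javascript
// checkRows.js: a sketch that confirms heart_rate rows are arriving.
// Assumes QuestDB's default Postgres credentials on 127.0.0.1:8812.

async function countHeartRates(Client, { host = "127.0.0.1", port = 8812 } = {}) {
  const client = new Client({ host, port, user: "admin", password: "quest", database: "qdb" });
  await client.connect();
  try {
    const res = await client.query(
      "SELECT count(*) AS n, max(timecollected) AS latest FROM heart_rate"
    );
    const row = res.rows[0];
    // pg returns 64-bit integers (int8) as strings by default.
    return { rows: Number(row.n), latest: row.latest };
  } finally {
    await client.end(); // close the connection even if the query fails
  }
}

module.exports = { countHeartRates };
```

For example, run `countHeartRates(require("pg").Client).then(console.log)` a few times while the simulator is publishing; the count should keep growing.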

Visualizing Data with Grafana

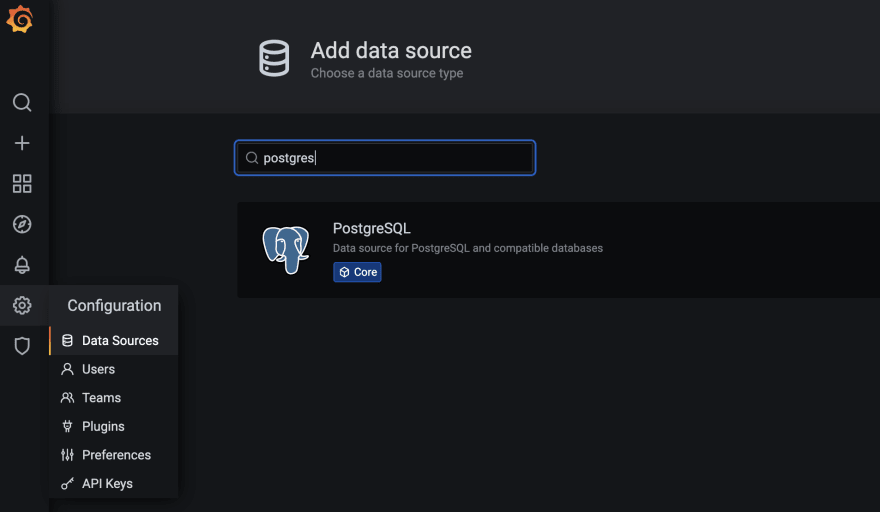

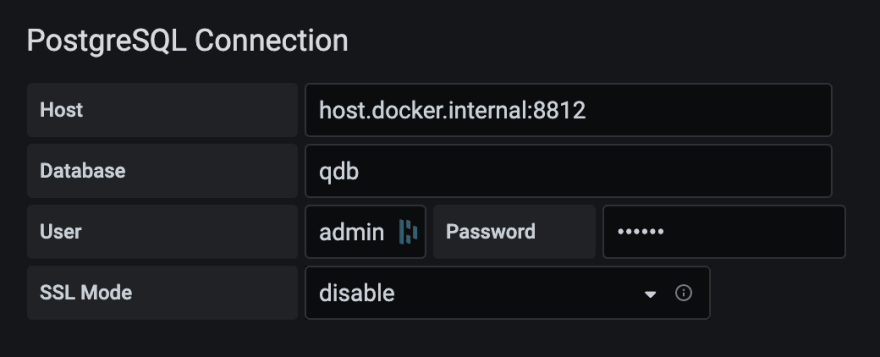

The QuestDB console provides some basic visualizations out of the box. In a more realistic scenario, though, all metrics would be combined in Grafana, so we’ll set up a Postgres data source there and visualize our heart rate data.

Start Grafana with Docker, then log in at http://localhost:3000/login with admin/admin:

```shell
docker run -p 3000:3000 grafana/grafana
```

Under “Configuration” -> “Data Sources”, search for PostgreSQL.

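For the PostgreSQL connection, enter QuestDB’s connection details (port 8812, user admin, password quest, database qdb), set the SSL mode to disable, and save. Because Grafana runs in its own container here, localhost refers to the Grafana container rather than your machine. Use an address that reaches the host instead, such as host.docker.internal on Docker Desktop.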

Finally, create a dashboard and add a panel with the following SQL query:

```sql
SELECT
  timecollected AS "time",
  heartrate
FROM heart_rate
ORDER BY time;
```

The panel now shows the sample heart rate data.

As a side note, the same chart can also be drawn in the QuestDB console.

At this point, we have an end-to-end system in which a device securely sends data via IoT Core and the data streams into QuestDB. We can extend this example to multiple devices by adding them under IoT Core and scaling our server to use pooled connections, which add data to QuestDB more efficiently. At scale, we can also look at average heart rates instead of raw data points (e.g., SELECT avg(heartrate) AS average_heartrate FROM heart_rate).
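Both extensions can be sketched with node-postgres. A `Pool` shares a set of connections across concurrent inserts, and an aggregate query replaces raw points with averages. This is an illustration only:

- `createPool` and `averageBySensor` are hypothetical helpers;
- the connection settings are QuestDB’s defaults (user admin, password quest, database qdb on 127.0.0.1:8812);
- the query relies on QuestDB’s implicit grouping by the non-aggregated sensorID column.

```javascript
// averages.js: a sketch of pooled connections plus averaged readings.
// Assumes QuestDB's default Postgres credentials on 127.0.0.1:8812.

function createPool(Pool, max = 10) {
  // The pool opens up to `max` connections, so concurrent inserts from
  // many devices do not queue behind a single Client.
  return new Pool({
    host: "127.0.0.1",
    port: 8812,
    user: "admin",
    password: "quest",
    database: "qdb",
    max,
  });
}

// Average heart rate per sensor instead of raw points. QuestDB groups
// implicitly by the non-aggregated column (sensor_id here).
async function averageBySensor(pool) {
  const res = await pool.query(
    "SELECT sensorID AS sensor_id, avg(heartrate) AS average_heartrate FROM heart_rate"
  );
  return res.rows.map((r) => ({ sensorID: r.sensor_id, average: Number(r.average_heartrate) }));
}

module.exports = { createPool, averageBySensor };
```

With a pool, the listener would call `pool.query(...)` instead of a dedicated client's `query(...)`. For each call, pg checks out an idle connection and releases it when the query finishes.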
