I was drawn to Gatsby out of my love for React and my desire to serve fast, performant, responsive applications. I started learning React just as the library started embracing ES6 classes, and just as create-react-app was taking off. At first it was a challenge to learn something as simple as proxying, so that the front end could run concurrently with a Node/Express API. Getting from that point to server-side rendering took a little more research and effort. It has been fun learning with and from the larger coding community. I could go on listing the technical issues I have encountered and solved, and the ones I still have to learn, but notice something important about my journey so far: it has been a technical-first journey, focused on knowledge of and skill in the various languages and libraries. That is necessary, yes, but perhaps not primary. The last things on my mind have been SEO, accessibility, and security.

Setting An SEO-First Mindset

I’ll leave accessibility and security for future posts, but what has impressed me so far in my dive into the Gatsby ecosystem is how easy it is to configure search engine optimization. In fact, you can create an SEO-driven architecture for your site or app long before you develop your UI. I want to walk you through what I have learned so far about optimizing a Gatsby site for SEO right from the start.

Before We Begin

You need some familiarity with Gatsby. To learn how to install the gatsby-cli and create a new Gatsby project from one of the many Gatsby Starters, please consider completing the Gatsby tutorial.

Otherwise, pick a starter from the SEO category and open its SEO component, or just use gatsby-starter-default by running this from the command line:

```shell
gatsby new seo-blog https://github.com/gatsbyjs/gatsby-starter-default
```

The SEO component depends on react-helmet, so if your starter does not come with SEO set up, be sure to add it. We also want to add a sitemap, Google Analytics, and an RSS feed for syndication. Finally, we need a robots.txt file to manage how search engines crawl the site. I’ve split the commands below for readability, but they can all run as a single yarn add command:

```shell
yarn add react-helmet gatsby-plugin-react-helmet
yarn add gatsby-plugin-feed gatsby-plugin-google-analytics gatsby-plugin-sitemap
yarn add gatsby-plugin-robots-txt
```

With your starter and these plugins installed, we are ready to set up our site for SEO performance.

Setting up gatsby-config.js

Within the root of your new Gatsby project sits gatsby-config.js, the file Gatsby uses to configure siteMetadata, plugins, and several other important features.

This file does the heavy lifting: it imports all your vital SEO-related content into GraphQL or creates necessary files directly (like robots.txt).

Site Metadata

Site metadata is just what it sounds like: data that applies across the entirety of your site. It can be used anywhere, but it will come in most handy when configuring your SEO component and Google Structured Data.

Initialize your metadata as an object literal with key/value pairs that can be converted into <meta> tags or passed to sitemaps, footers, or wherever else you need global site data:

```html
<meta name="title" content="My Super Awesome Site"/>
<meta name="description" content="My Super Awesome Site will blow your mind with radical content, extravagant colors, and hip designs."/>
```
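Turning such an object into tags is mechanical. Here is a minimal sketch, assuming a hypothetical `toMetaTags` helper (it is not part of Gatsby or react-helmet) that maps selected string fields to the `{ name, content }` objects that react-helmet's `meta` prop accepts:

```javascript
// Hypothetical helper: convert flat string fields of a metadata object into
// { name, content } objects, the shape react-helmet's `meta` prop accepts.
function toMetaTags(metadata, fields = ["title", "description"]) {
  return fields
    // skip fields that are missing or empty so no blank tags are rendered
    .filter((field) => typeof metadata[field] === "string" && metadata[field] !== "")
    .map((field) => ({ name: field, content: metadata[field] }));
}

const tags = toMetaTags({
  title: "My Super Awesome Site",
  description:
    "My Super Awesome Site will blow your mind with radical content, extravagant colors, and hip designs.",
});

console.log(tags);
```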

Here is where you need to plan what you might use across your site:

- title

- description

- keywords

- site verification

- social links

- other
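As a starting point, here is a sketch of a siteMetadata object shaped to match the query that follows. Every value is a placeholder. One assumption worth flagging: Gatsby infers the GraphQL type of siteMetadata from these values, so as far as I know every field you later query should exist here, with an empty string for the ones you don't use yet.

```javascript
// Sketch of the siteMetadata object in gatsby-config.js. In the real file it
// sits under module.exports = { siteMetadata, plugins }. All values are placeholders.
const siteMetadata = {
  title: "My Super Awesome Site",
  description: "My Super Awesome Site will blow your mind with radical content.",
  author: "Your Name",
  siteUrl: "https://www.example.com", // no trailing slash, so siteUrl + path works
  siteVerification: {
    google: "your-google-verification-code",
    bing: "your-bing-verification-code",
  },
  social: { twitter: "@yourhandle" },
  socialLinks: {
    twitter: "https://twitter.com/yourhandle",
    linkedin: "",
    facebook: "",
    stackOverflow: "",
    github: "https://github.com/yourhandle",
    instagram: "",
    pinterest: "",
    youtube: "",
    email: "mailto:you@example.com",
    phone: "",
    fax: "",
    address: "",
  },
  keywords: ["gatsby", "seo", "react"],
  organization: {
    name: "Your Organization",
    url: "https://www.example.com",
  },
};

console.log(Object.keys(siteMetadata));
```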

With siteMetadata set up, you can now query this data for use in your plugin initialization, even within the same gatsby-config.js file. I’ve organized my siteMetadata so that I can make the following query:

```javascript
query: `
  {
    site {
      siteMetadata {
        title
        description
        author
        siteUrl
        siteVerification {
          google
          bing
        }
        social {
          twitter
        }
        socialLinks {
          twitter
          linkedin
          facebook
          stackOverflow
          github
          instagram
          pinterest
          youtube
          email
          phone
          fax
          address
        }
        keywords
        organization {
          name
          url
        }
      }
    }
  }
`
```

This query returns an object matching this query structure:

```
site: {
  siteMetadata: {
    title: String,
    description: String,
    author: String,
    siteUrl: String,
    siteVerification: {
      google: String,
      bing: String
    },
    social: {
      twitter: String
    },
    socialLinks: {
      twitter: String,
      linkedin: String,
      facebook: String,
      stackOverflow: String,
      github: String,
      instagram: String,
      pinterest: String,
      youtube: String,
      email: String,
      phone: String,
      fax: String,
      address: String
    },
    keywords: [String],
    organization: {
      name: String,
      url: String
    }
  }
}
```
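Because the result is a plain object, you can reshape it wherever it is needed. A footer, for example, only wants the social links you actually filled in. The sketch below uses a hypothetical `activeSocialLinks` helper, written outside any component so it runs on its own:

```javascript
// Hypothetical helper: keep only the socialLinks entries that have a value,
// e.g. for rendering footer icons from the query result.
function activeSocialLinks(data) {
  const links =
    (data && data.site && data.site.siteMetadata && data.site.siteMetadata.socialLinks) || {};
  return Object.entries(links)
    .filter(([, value]) => typeof value === "string" && value.trim() !== "")
    .map(([network, url]) => ({ network, url }));
}

// Example input shaped like the query result above (placeholder values).
const data = {
  site: {
    siteMetadata: {
      socialLinks: {
        twitter: "https://twitter.com/yourhandle",
        linkedin: "",
        github: "https://github.com/yourhandle",
        fax: null,
      },
    },
  },
};

console.log(activeSocialLinks(data));
```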

Plugin Setup

We are going to focus on four plugins: one each for the sitemap, the RSS feed, the robots.txt file, and Google Analytics (for tracking the SEO success of your site). We’ll initialize the plugins immediately following siteMetadata.

```javascript
module.exports = {
  siteMetadata: {
    /* SEE ABOVE */
  },
  plugins: [/* An ARRAY */],
}
```


gatsby-plugin-sitemap

The sitemap plugin can be used simply or with options. Generally, you want to include anything and everything with high quality content, and exclude duplicate content, thin content, or pages behind authentication. Gatsby provides a detailed walkthrough for setting up your sitemap.

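```javascript
plugins: [
  {
    resolve: `gatsby-plugin-sitemap`,
    options: {
      // Paths to leave out of sitemap.xml. Newer major versions of the
      // plugin name this option `excludes`.
      exclude: [`/admin`, `/tags/links`],
    },
  },
]
```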
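The plugin does the filtering for you; the sketch below only illustrates the idea of checking each page path against an exclusion list. `filterSitemapPaths` and its matching rules (exact match, or a trailing `/*` meaning "everything below this prefix") are hypothetical and are not the plugin's actual matching logic.

```javascript
// Hypothetical illustration (not gatsby-plugin-sitemap's implementation):
// keep only the page paths that do not match an exclusion rule.
function filterSitemapPaths(paths, exclude) {
  const isExcluded = (path) =>
    exclude.some((rule) =>
      rule.endsWith("/*")
        ? path.startsWith(rule.slice(0, -1)) // "/account/*" -> prefix "/account/"
        : path === rule || path === `${rule}/` // exact match, with or without trailing slash
    );
  return paths.filter((path) => !isExcluded(path));
}

const pages = ["/", "/blog/seo-first/", "/admin", "/tags/links", "/account/settings/"];
const kept = filterSitemapPaths(pages, ["/admin", "/tags/links", "/account/*"]);
console.log(kept);
```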

gatsby-plugin-feed

An RSS feed helps with syndication of the content on your site (for example, if you had a blog and wanted to cross-post to another, better-established blog), and it helps communicate changes on your site to search engines. This plugin lets you create any number of feeds, using the power of GraphQL to query your data. Some of what is below depends on how you structure your pages and posts in gatsby-node.js. The feed walks through each of your Markdown pages to generate an XML RSS feed. Gatsby provides a detailed walkthrough for adding an RSS feed. Note particularly the use of queries below to complete the feed.

```javascript
{
  resolve: `gatsby-plugin-feed`,
  options: {
    // graphQL query to get siteMetadata
    query: `
      {
        site {
          siteMetadata {
            title
            description
            siteUrl
            site_url: siteUrl
            author
          }
        }
      }
    `,
    feeds: [
      // an array of feeds, I just have one below
      {
        serialize: ({ query: { site, allMarkdownRemark } }) => {
          const { siteMetadata: { siteUrl } } = site;
          return allMarkdownRemark.edges.map(edge => {
            const {
              node: {
                frontmatter: {
                  title,
                  date,
                  path,
                  author: { name, email },
                  featured: { publicURL },
                  featuredAlt,
                },
                excerpt,
                html,
              },
            } = edge;
            return Object.assign({}, edge.node.frontmatter, {
              language: `en-us`,
              title,
              description: excerpt,
              date,
              url: siteUrl + path,
              guid: siteUrl + path,
              author: `${email} ( ${name} )`,
              image: {
                url: siteUrl + publicURL,
                title: featuredAlt,
                link: siteUrl + path,
              },
              custom_elements: [{ "content:encoded": html }],
            })
          })
        },
        // query to get blog post data
        query: `
          {
            allMarkdownRemark(
              sort: { order: DESC, fields: [frontmatter___date] },
            ) {
              edges {
                node {
                  excerpt
                  html
                  frontmatter {
                    path
                    date
                    title
                    featured {
                      publicURL
                    }
                    featuredAlt
                    author {
                      name
                      email
                    }
                  }
                }
              }
            }
          }
        `,
        output: "/rss.xml",
        title: `My Awesome Site | RSS Feed`,
      },
    ],
  },
},
```
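The `serialize` callback is plain JavaScript, so you can exercise it outside Gatsby before a build. The sketch below copies its logic into a standalone function and runs it against a hand-made query result; the post data is invented for illustration.

```javascript
// The serialize logic from the feed config, pulled out so it can run
// against mock data without starting Gatsby.
const serialize = ({ query: { site, allMarkdownRemark } }) => {
  const { siteMetadata: { siteUrl } } = site;
  return allMarkdownRemark.edges.map((edge) => {
    const {
      node: {
        frontmatter: { title, date, path, author: { name, email }, featured: { publicURL }, featuredAlt },
        excerpt,
        html,
      },
    } = edge;
    return Object.assign({}, edge.node.frontmatter, {
      language: `en-us`,
      title,
      description: excerpt,
      date,
      url: siteUrl + path,
      guid: siteUrl + path,
      author: `${email} ( ${name} )`, // overrides the frontmatter author object
      image: { url: siteUrl + publicURL, title: featuredAlt, link: siteUrl + path },
      custom_elements: [{ "content:encoded": html }],
    });
  });
};

// Mock query result with placeholder values.
const query = {
  site: { siteMetadata: { siteUrl: "https://www.example.com" } },
  allMarkdownRemark: {
    edges: [
      {
        node: {
          excerpt: "An SEO-first approach to Gatsby.",
          html: "<p>Hello</p>",
          frontmatter: {
            path: "/blog/seo-first/",
            date: "2019-05-01",
            title: "SEO First",
            featured: { publicURL: "/static/featured.png" },
            featuredAlt: "A featured image",
            author: { name: "Jane Doe", email: "jane@example.com" },
          },
        },
      },
    ],
  },
};

const items = serialize({ query });
console.log(items[0].url);
```

One design consequence to keep in mind: because the callback destructures `author` and `featured` directly, a post whose frontmatter lacks either one makes the destructuring throw a TypeError and fails the build.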

gatsby-plugin-robots-txt

With robots.txt files, you can instruct crawlers to ignore your site and/or individual paths, based on certain conditions. See the plugin description for more detailed use cases.

```javascript
{
  resolve: 'gatsby-plugin-robots-txt',
  options: {
    policy: [{ userAgent: '*', allow: '/' }]
  }
},
```
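To picture what a `policy` array turns into, here is a rough sketch that renders one as robots.txt text. `renderRobotsTxt` is hypothetical and only approximates the plugin's output; it is not the plugin's code, and the real file may contain other directives.

```javascript
// Hypothetical sketch: render a policy array (same shape as the plugin
// option above) into robots.txt text. Approximation only.
function renderRobotsTxt(policy, sitemapUrl) {
  const lines = [];
  for (const rule of policy) {
    lines.push(`User-agent: ${rule.userAgent}`);
    // allow/disallow may be a single string or an array of paths
    for (const path of [].concat(rule.allow || [])) lines.push(`Allow: ${path}`);
    for (const path of [].concat(rule.disallow || [])) lines.push(`Disallow: ${path}`);
    lines.push(""); // blank line between user-agent groups
  }
  if (sitemapUrl) lines.push(`Sitemap: ${sitemapUrl}`);
  return lines.join("\n");
}

const robots = renderRobotsTxt(
  [{ userAgent: "*", allow: "/", disallow: ["/admin"] }],
  "https://www.example.com/sitemap.xml"
);
console.log(robots);
```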

gatsby-plugin-google-analytics

Google Analytics helps you track how users find and interact with your site, so you can manage and update it over time for better SEO results. Verify your site with Google and store your tracking ID here:

```javascript
{
  resolve: `gatsby-plugin-google-analytics`,
  options: {
    trackingId: ``, // your Google Analytics tracking ID goes here
  },
},
```
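If you would rather not commit the tracking ID, one option is to read it from an environment variable at build time. This is a sketch under assumptions: `GA_TRACKING_ID` and the `googleAnalyticsPlugin` helper are hypothetical names, not something the plugin defines.

```javascript
// Sketch: build the Google Analytics plugin entry from an environment
// variable. GA_TRACKING_ID is a hypothetical variable name.
function googleAnalyticsPlugin(env = process.env) {
  const trackingId = env.GA_TRACKING_ID;
  // Leave the plugin out entirely when no ID is set (e.g. local development).
  if (!trackingId) return [];
  return [
    {
      resolve: `gatsby-plugin-google-analytics`,
      options: { trackingId },
    },
  ];
}

// In gatsby-config.js you could spread it into the plugins array:
// plugins: [ /* other plugins */ ...googleAnalyticsPlugin() ]
console.log(googleAnalyticsPlugin({ GA_TRACKING_ID: "UA-XXXXXXX-1" }));
```

Returning an array lets the spread add zero or one entries, so development builds simply skip analytics.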

Optimizing the SEO Component

The secret sauce behind the SEO Component is the well-known react-helmet package. Every page and every template imports the SEO Component, so you can pass it specific information to adjust the metadata for each public-facing page.

Use your imagination—what can you pass to this component to create the perfect SEO enabled page? Is the page a landing page, a blog post, a media gallery, a video, a professional profile, a product? There are 614 types of schemas listed on https://schema.org. You can pass specific schema related information to the SEO component.

If you don’t pass one of these values to the component, a StaticQuery fills in what’s missing from siteMetadata. From this data, react-helmet creates all the <meta> tags, including Open Graph and Twitter Card tags, and the SEO Component passes the relevant data to another component that returns Google Structured Data.

Rather than including a long code snippet, I’ll refer you back to the Before We Begin section, or to the gatsby-plugin-react-helmet page for installation. Please note that the structure of my SEO Component follows the one outlined in this excellent post by Dustin Schau.
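The fallback step boils down to "props win, siteMetadata fills the gaps." Here is a minimal sketch of that merge as a plain function. `resolveSeo` is hypothetical, and it assumes the `image` prop carries a relative `src`; adapt both to your own component.

```javascript
// Hypothetical sketch of the fallback logic: props passed to the SEO
// component take priority, siteMetadata fills in anything missing.
function resolveSeo(props, siteMetadata) {
  const pathname = props.pathname || "/";
  return {
    title: props.title || siteMetadata.title,
    description: props.description || siteMetadata.description,
    keywords: props.keywords && props.keywords.length ? props.keywords : siteMetadata.keywords,
    author: props.author || siteMetadata.author,
    // Absolute URLs for canonical links, og:url and og:image.
    url: `${siteMetadata.siteUrl}${pathname}`,
    // Assumes image.src is a path relative to the site root.
    image: props.image ? `${siteMetadata.siteUrl}${props.image.src}` : null,
  };
}

const site = {
  title: "Site",
  description: "Default description",
  keywords: ["gatsby"],
  author: "Me",
  siteUrl: "https://www.example.com",
};
const seo = resolveSeo({ title: "Post", pathname: "/blog/post/" }, site);
console.log(seo);
```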

As I have tinkered with the SEO Component, here are the features I added:

- Additional fields passed to the component: to distinguish between types of pages (such as blog posts), to handle content authors other than the main site author, and to handle changes to the modified date of pages and posts. More could be added in the future.

```javascript
function SEO({
  description,
  lang,
  meta,
  keywords,
  image,
  title,
  pathname,
  isBlogPost,
  author,
  datePublished = false,
  dateModified = false
}) {
```

- Setting og:type conditionally

```javascript
{
  property: `og:type`,
  content: isBlogPost ? `article` : `website`,
},
```

- Always setting an alt property on the image object

```javascript
// ALWAYS ADD IMAGE:ALT
{ property: "og:image:alt", content: image.alt },
{ property: "twitter:image:alt", content: image.alt },
```

- Handling Secure Images

```javascript
.concat(
  // handle Secure Image
  metaImage && metaImage.indexOf("https") > -1
    ? [
        { property: "twitter:image:secure_url", content: metaImage },
        { property: "og:image:secure_url", content: metaImage },
      ]
    : []
)
```

- Adding a Component to handle Google Structured Data using schema.org categories. I came across an example of such a component while reading through various documentation and articles. I can’t recall where I first saw the link, but I borrowed and adapted it from Jason Lengstorf, making two small adaptations to his excellent work.
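The first three tweaks in the list above can be pulled into one plain function to check their behavior. `buildImageAndTypeMeta` is a hypothetical helper, not part of react-helmet. One deliberate change from the snippet above: it tests `metaImage.startsWith("https://")` rather than `indexOf("https") > -1`, since the latter also matches an http URL that merely contains "https" somewhere in its path.

```javascript
// Hypothetical helper combining the tweaks above: conditional og:type,
// alt text for every image, and secure_url tags only for HTTPS images.
function buildImageAndTypeMeta({ isBlogPost, image, metaImage }) {
  const alt = (image && image.alt) || ""; // never render undefined as alt text
  const meta = [{ property: "og:type", content: isBlogPost ? "article" : "website" }];
  if (metaImage) {
    meta.push(
      { property: "og:image", content: metaImage },
      // ALWAYS ADD IMAGE:ALT
      { property: "og:image:alt", content: alt },
      { property: "twitter:image:alt", content: alt }
    );
  }
  return meta.concat(
    // handle Secure Image: only when the URL actually uses HTTPS
    metaImage && metaImage.startsWith("https://")
      ? [
          { property: "twitter:image:secure_url", content: metaImage },
          { property: "og:image:secure_url", content: metaImage },
        ]
      : []
  );
}

const meta = buildImageAndTypeMeta({
  isBlogPost: true,
  image: { alt: "A featured image" },
  metaImage: "https://www.example.com/static/featured.png",
});
console.log(meta);
```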

Configuring the SchemaOrg Component

Import the SchemaOrg Component into the SEO Component, render it just after the Helmet Component, and pass it the following properties:

```jsx
function SEO(/* ...props as above... */) {
  // ...
  return (
    <>
      {/* Fragment Shorthand */}
      <Helmet
        /* All the Meta Tag Configuration */
      />
      <SchemaOrg
        isBlogPost={isBlogPost}
        url={metaUrl}
        title={title}
        image={metaImage}
        description={metaDescription}
        datePublished={datePublished}
        dateModified={dateModified}
        canonicalUrl={siteUrl}
        author={isBlogPost ? author : siteMetadata.author}
        organization={organization}
        defaultTitle={title}
      />
    </>
  )
}
```

I won’t copy and paste the entire SchemaOrg Component here. Grab it from the link above, and give Jason Lengstorf some credit in your code. Below are the few additions I made:

- I added the author’s email to the schema. It comes from siteMetadata for most pages and from the post frontmatter for blog posts. This supports multiple authors on your site, and each page can reflect its author uniquely if you so choose.

```javascript
author: {
  "@type": "Person",
  name: author.name,
  email: author.email,
},
```

- I updated the organization logo from a simple URI to an ImageObject type. While a String URI is acceptable for the Organization type, Google has specific expectations and was throwing an error until I changed it to an ImageObject.

```javascript
publisher: {
  "@type": "Organization",
  url: organization.url,
  logo: {
    "@type": "ImageObject",
    url: organization.logo.url,
    width: organization.logo.width,
    height: organization.logo.height
  },
  name: organization.name,
},
```

- I added dateModified to reflect changes to the page after initial publication for the BlogPosting type.

```javascript
datePublished,
dateModified,
```
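Putting the three additions together, here is a sketch of the BlogPosting object as a plain function, so you can inspect the JSON before handing it to a validator. `blogPostingSchema` and its input field names are assumptions based on this post, not Jason Lengstorf’s component itself. Because the SEO component defaults `dateModified` to `false`, the sketch falls back to the publication date in that case.

```javascript
// Hypothetical sketch of the BlogPosting JSON-LD with the additions above:
// author email, an ImageObject logo, and datePublished/dateModified.
function blogPostingSchema({ url, title, description, image, datePublished, dateModified, author, organization }) {
  return {
    "@context": "https://schema.org",
    "@type": "BlogPosting",
    url,
    headline: title,
    description,
    image: { "@type": "ImageObject", url: image },
    author: { "@type": "Person", name: author.name, email: author.email },
    publisher: {
      "@type": "Organization",
      url: organization.url,
      logo: {
        "@type": "ImageObject",
        url: organization.logo.url,
        width: organization.logo.width,
        height: organization.logo.height,
      },
      name: organization.name,
    },
    datePublished,
    // dateModified defaults to false in the SEO component; fall back to datePublished.
    dateModified: dateModified || datePublished,
  };
}

const schema = blogPostingSchema({
  url: "https://www.example.com/blog/seo-first/",
  title: "SEO First",
  description: "An SEO-first approach to Gatsby.",
  image: "https://www.example.com/static/featured.png",
  datePublished: "2019-05-01",
  dateModified: false,
  author: { name: "Jane Doe", email: "jane@example.com" },
  organization: {
    name: "Example Org",
    url: "https://www.example.com",
    logo: { url: "https://www.example.com/logo.png", width: 600, height: 60 },
  },
});

// Helmet can render this string inside <script type="application/ld+json">.
const jsonLd = JSON.stringify(schema);
console.log(jsonLd);
```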

When your site grows more complex, you can pass flags to this Component to return different types of schema as needed. But no matter what you do, do not put your site into production without first running the script generated by the component through the Google Structured Data Testing Tool.

Concluding Thoughts

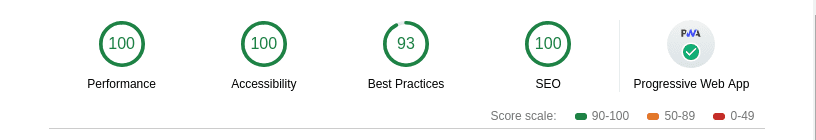

When I configured my site according to the plan I outlined above, not only do I get image rich, descriptive Social Sharing cards, I get perfect SEO scores when running a Lighthouse Audit on my site:

You can also see that I scored 100% for Accessibility on my site. That score is easy to achieve with Gatsby as well, and I’ll talk about what I learned on this topic in a future post.

Originally Posted on my blog at https://www.wesleylhandy.net/blog/seo-accessibility-first-gatsby.html
