In my company we're JavaScript fans, and we're working on providing the JavaScript community with cool products that broaden JavaScript's use to all computing areas. Artificial Intelligence (AI) is currently one of the areas dominated by Python. Yet, thanks to TensorFlow.js, JavaScript has everything you need, in the browser or in the cloud. This article is an introduction to AI for JavaScript users, covering the basic notions you need to get started. We will introduce AI concepts, Google's TensorFlow framework, and then the AI stack for JavaScript: TensorFlow.js.

AI concepts

Artificial intelligence, and artificial neural networks (NN) in particular, have gained increasing adoption in many applications over the last five years. This is the result of the convergence of two main developments: the availability of efficient computing architectures in the cloud, and key innovations in neural network training algorithms. It opened the way to new approaches such as deep learning (a term designating neural networks that include several hidden layers between inputs and outputs).

A neural network is built from several layers of neurons, which together constitute a ready-to-use AI model. Once limited to a few layers, neural networks have grown in complexity, depth, efficiency and precision.

A key milestone was reached in machine vision with efficient convolutional neural networks, whose architecture is inspired by the human visual cortex.

Machine vision and image processing are today primary fields for NNs, and many pre-trained models of varying complexity exist, as well as collections of training images (such as the MNIST or ImageNet datasets).

Perceptron and layers

Without going into detail, the basic artificial neuron (perceptron) model found in most neural networks is shown in the figure below and operates as follows:

- it takes multiple input values

- it multiplies each input by a weight value

- it sums all these individual products

- it generates an output from this sum through an activation function, which generally normalizes output values and reduces their spread.

Single perceptron model

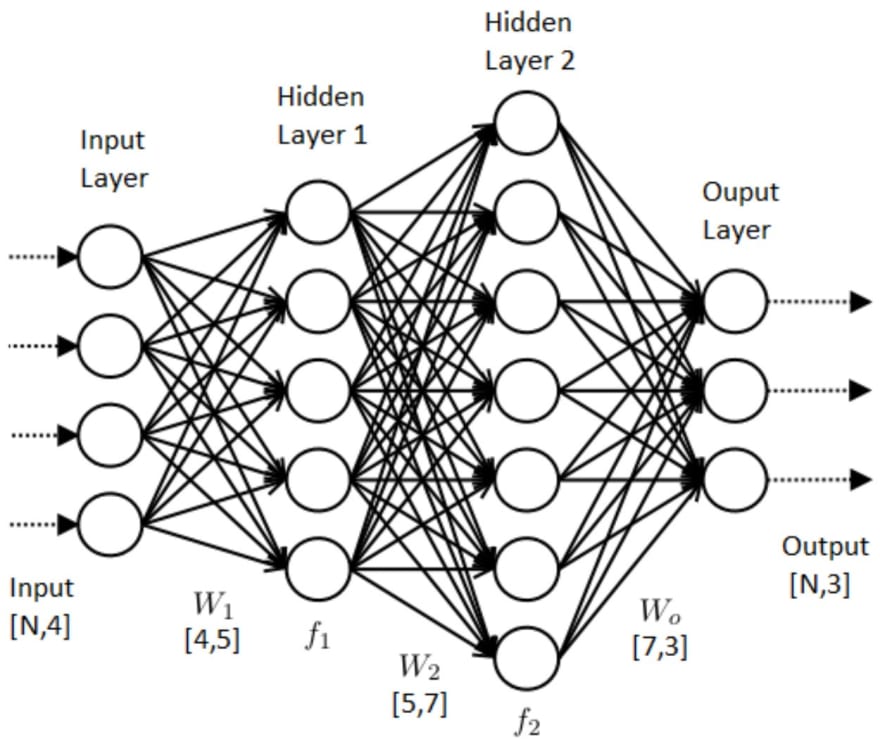
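The four steps above can be sketched in a few lines of plain JavaScript. This is a minimal illustration, not TensorFlow.js code; the bias term and the sigmoid activation are assumptions added for the example (both are common choices, but the text above does not prescribe them).

```javascript
// Minimal perceptron sketch (plain JavaScript, not TensorFlow.js).
// Assumptions: a bias is added to the weighted sum; sigmoid is the default activation.

// Activation function: the sigmoid squashes any real number into (0, 1).
const sigmoid = (z) => 1 / (1 + Math.exp(-z));

function perceptron(inputs, weights, bias, activation = sigmoid) {
  if (inputs.length !== weights.length) {
    throw new Error("inputs and weights must have the same length");
  }
  // Multiply each input by its weight and sum the products.
  let sum = bias;
  for (let i = 0; i < inputs.length; i++) {
    sum += inputs[i] * weights[i];
  }
  // Generate the output through the activation function.
  return activation(sum);
}

const out = perceptron([1, 2], [0.5, -0.25], 0); // weighted sum = 0.5 - 0.5 = 0
console.log(out); // 0.5
```

Passing the activation as a parameter makes it easy to swap the sigmoid for another function, for instance ReLU or the identity.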

Various network architectures are obtained by replicating, interconnecting and cascading such cells, as shown in the figure below:

A fully-connected 15-perceptron topology
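Replicating and cascading perceptrons can be sketched in plain JavaScript: a fully connected ("dense") layer is a row of perceptrons that all receive the same inputs, and a network feeds the outputs of one layer into the next. The helper names, layer sizes, weights and activations (ReLU, sigmoid) below are hypothetical values chosen for illustration.

```javascript
// Minimal sketch of fully connected layers (plain JavaScript, not TensorFlow.js).
// Assumptions: hypothetical helper names, arbitrary weights, ReLU and sigmoid activations.

const relu = (z) => Math.max(0, z);
const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// One dense layer: weights[j][i] is the weight from input i to neuron j.
function denseLayer(inputs, weights, biases, activation) {
  return weights.map((row, j) => {
    let sum = biases[j];
    for (let i = 0; i < inputs.length; i++) sum += row[i] * inputs[i];
    return activation(sum);
  });
}

// Cascade layers: the outputs of one layer become the inputs of the next.
function forward(inputs, layers) {
  return layers.reduce(
    (acc, { weights, biases, activation }) => denseLayer(acc, weights, biases, activation),
    inputs
  );
}

// 3 inputs -> 4 hidden neurons -> 2 outputs.
const network = [
  {
    weights: [[0.2, -0.1, 0.4], [0.5, 0.3, -0.2], [-0.3, 0.8, 0.1], [0.1, 0.1, 0.1]],
    biases: [0, 0.1, -0.1, 0],
    activation: relu,
  },
  {
    weights: [[0.3, -0.6, 0.9, 0.2], [-0.4, 0.2, 0.5, 0.7]],
    biases: [0, 0],
    activation: sigmoid,
  },
];

console.log(forward([1, 0.5, -1], network)); // two values in (0, 1)
```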

Inference and training

The "direct" operation of a neural network, which consists of applying a series of new, previously unseen inputs to a network whose weights are already defined in order to obtain outputs (such as a prediction of the next data, the classification of an image, or an indication of whether a specific pattern exists in an image), is called "inference".

The operation that consists of computing the weight values of a neural network, usually by submitting a series of inputs together with their expected output values, is called "training".

Training of deep networks is performed by measuring the difference, or "error" (expressed through a loss function), between the output(s) of the network for a given input and the expected output. The gradient of this error is then broken down into contributions from every weight of the output layer, and then propagated back down through all layers of the network. This process is usually called the "gradient backpropagation algorithm". The weights are then adjusted to minimize the error in an iterative algorithm over many inputs, with optimization policies that the user can tune.
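As a minimal sketch of training, here is a single sigmoid neuron learning the logical AND function by gradient descent, in plain JavaScript. The task, the cross-entropy loss, the learning rate and the epoch count are assumptions chosen for illustration. With only one neuron there are no hidden layers, so backpropagation reduces to a single step; in a deep network, the same error gradient would be propagated back through every layer with the chain rule.

```javascript
// Minimal sketch: training one sigmoid neuron by gradient descent.
// Assumptions (not from the article): logical AND as the task,
// binary cross-entropy as the loss, learning rate 0.5, 5000 epochs.

const sigmoid = (z) => 1 / (1 + Math.exp(-z));

// Inference: weighted sum of inputs plus bias, then activation.
function predict(weights, bias, inputs) {
  let sum = bias;
  for (let i = 0; i < inputs.length; i++) sum += weights[i] * inputs[i];
  return sigmoid(sum);
}

// Training: compute the error on every sample, derive the gradient of the
// loss for each weight, and move each weight against its gradient.
function train(samples, { learningRate = 0.5, epochs = 5000 } = {}) {
  const nInputs = samples[0].inputs.length;
  const weights = new Array(nInputs).fill(0);
  let bias = 0;
  for (let epoch = 0; epoch < epochs; epoch++) {
    const gradW = new Array(nInputs).fill(0);
    let gradB = 0;
    for (const { inputs, target } of samples) {
      // For sigmoid + cross-entropy, dLoss/dSum simplifies to (output - target).
      const error = predict(weights, bias, inputs) - target;
      for (let i = 0; i < nInputs; i++) gradW[i] += error * inputs[i];
      gradB += error;
    }
    // Average over the batch, then take one gradient descent step.
    for (let i = 0; i < nInputs; i++) {
      weights[i] -= (learningRate * gradW[i]) / samples.length;
    }
    bias -= (learningRate * gradB) / samples.length;
  }
  return { weights, bias };
}

const andSamples = [
  { inputs: [0, 0], target: 0 },
  { inputs: [0, 1], target: 0 },
  { inputs: [1, 0], target: 0 },
  { inputs: [1, 1], target: 1 },
];

const model = train(andSamples);
for (const { inputs } of andSamples) {
  console.log(inputs, predict(model.weights, model.bias, inputs).toFixed(3));
}
```

The learning rate and number of epochs play the role of the tunable optimization policy mentioned above; real frameworks offer more elaborate optimizers.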

Neural network model examples

Many types and topologies of neural networks (NN) can be built, and ongoing research continuously improves and enriches the collections of available NNs. Deep networks can be built by assembling reused modules (pre-trained or not) that have proven efficient at a given task.

Some typical module architectures have turned out to be especially efficient at specific tasks.

In particular:

- fully connected networks: in these, all outputs of a layer are connected to all inputs of the next layer, which makes the "trellis" of connections complex for deep structures of this type and makes training tricky to tune. They are often used as final decision layers on top of other structures.

- convolutional networks: directly inspired by the human visual cortex, they are very efficient at image analysis (filtering, feature detection). They can easily be replicated and grouped to analyze picture regions and separate channels, and stacked in deep structures without prohibitive complexity. Moreover, pre-trained submodules (for instance, one that detects a specific feature) can be reused in different networks, which reduces training times. They are usually topped by a few fully connected layers, depending on the required final outputs.

- recurrent networks: these introduce memory elements that make them able to analyze and predict time series in the broad sense, with applications ranging from text analysis (sentiment classification) to music creation and stock price trend prediction.

- residual networks: these are networks (or assemblies of networks) of any type in which the output of a given layer is added to the output of a later layer, bypassing the layers in between ("skip connections"). This accelerates the training of very deep networks.
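Three of the building blocks listed above can be reduced to their core operation in plain JavaScript. This sketch is illustrative only and does not reflect how any particular library implements them: the helper names and weights are hypothetical, and the convolution uses no padding and a stride of 1.

```javascript
// Illustrative sketch only (plain JavaScript, not TensorFlow.js).
// Assumptions: hypothetical helper names and weights, no padding, stride 1.

// Convolution: slide a small kernel over the image; each output value is the
// weighted sum of the pixels under the kernel. The kernel weights are shared
// across the whole image. As in most deep learning libraries, the kernel is
// not flipped (strictly speaking, this is a cross-correlation).
function conv2d(image, kernel) {
  const kh = kernel.length;
  const kw = kernel[0].length;
  const out = [];
  for (let r = 0; r + kh <= image.length; r++) {
    const row = [];
    for (let c = 0; c + kw <= image[0].length; c++) {
      let sum = 0;
      for (let i = 0; i < kh; i++) {
        for (let j = 0; j < kw; j++) sum += image[r + i][c + j] * kernel[i][j];
      }
      row.push(sum);
    }
    out.push(row);
  }
  return out;
}

// Recurrent step: a hidden state carries memory from one element of the
// sequence to the next, so the result depends on the order of the inputs.
function rnn(sequence, wInput, wState) {
  let state = 0;
  for (const x of sequence) {
    state = Math.tanh(wInput * x + wState * state);
  }
  return state;
}

// Residual (skip) connection: the input of a block is added to the output of
// the layers it wraps, so those layers only need to learn a correction.
function residual(inputs, layerFn) {
  const outputs = layerFn(inputs);
  return outputs.map((value, i) => value + inputs[i]);
}

// A vertical-edge kernel responds where pixel values change from left to right.
const image = [
  [0, 0, 1, 1],
  [0, 0, 1, 1],
  [0, 0, 1, 1],
];
const edgeKernel = [
  [-1, 1],
  [-1, 1],
];
console.log(conv2d(image, edgeKernel)); // [[0, 2, 0], [0, 2, 0]]

console.log(rnn([1, 0, 0], 1, 0.5) !== rnn([0, 0, 1], 1, 0.5)); // true

const halve = (xs) => xs.map((x) => x / 2);
console.log(residual([2, 4], halve)); // [3, 6]
```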

TensorFlow framework

The increasing adoption of AI in big data processing created the need for frameworks that can efficiently create and edit complex networks, manipulate various types of data sets (multidimensional matrices being a baseline), and perform inference and training operations from a high-level description, without having to explicitly express every neuron operation and related algorithm.

Google, which had been pioneering the deployment of AI in its infrastructure for a long time, open-sourced its home-grown internal framework in 2015 under the name "TensorFlow" (referred to hereafter as TF).

Built around Python, an environment widely adopted by scientists and data analysts for its simplicity and its wealth of math and array-specific libraries, TF first offered a declarative-style API, in which a computation graph is defined first and then executed. It was very comprehensive and versatile, and could solve almost any tensor (multidimensional matrix) calculus problem, including, of course, complex neural networks.

Later on, Google added an imperative ("eager") mode, for more intuitive programming and more straightforward debugging, as well as the Keras API, a higher-level function set originally developed as a third-party library, which makes neural network development, training and inference easier.
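The difference between the declarative and imperative styles can be illustrated with a toy, plain JavaScript sketch. It does not use TensorFlow's API: the graph nodes, the `run` function and the feed object are hypothetical stand-ins for what TF 1.x called placeholders and a session.

```javascript
// Toy illustration of the two programming styles, NOT TensorFlow's API.

// Declarative (graph) style: nodes only record what to compute.
const placeholder = (name) => ({ op: "input", name });
const mul = (a, b) => ({ op: "mul", args: [a, b] });
const add = (a, b) => ({ op: "add", args: [a, b] });

// A hypothetical "session" walks the graph and evaluates it with fed values.
function run(node, feed) {
  switch (node.op) {
    case "input":
      return feed[node.name];
    case "mul":
      return run(node.args[0], feed) * run(node.args[1], feed);
    case "add":
      return run(node.args[0], feed) + run(node.args[1], feed);
    default:
      throw new Error(`unknown op: ${node.op}`);
  }
}

// Build the graph for x * w + b once, then run it with concrete inputs.
const graph = add(mul(placeholder("x"), placeholder("w")), placeholder("b"));
console.log(run(graph, { x: 3, w: 2, b: 1 })); // 7

// Imperative (eager) style: each operation executes immediately, so
// intermediate values can be inspected directly while debugging.
const x = 3, w = 2, b = 1;
const product = x * w;
console.log(product + b); // 7
```

The graph can be analyzed or optimized before it runs, which suits large-scale execution; the eager version is easier to step through, which is why it feels more intuitive.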

Note that Python TF relies on an optimized C++ computing backend, and on a CUDA-based backend for platforms equipped with NVIDIA GPUs, to provide fast inference and training.

TensorFlow.js (using JavaScript)

In 2018, a JavaScript version of TensorFlow was released, TensorFlow.js, to enable its use in browsers and in Node.js. At launch, it supported the imperative execution mode and the Keras API, but lacked full support for "legacy" Python TF functionality. Release after release, TensorFlow.js quickly moved toward alignment with the Python Keras API.

Although TensorFlow.js supports advanced functionality and algorithms for both inference and training, it is mainly used to run inference with pre-trained models in web browsers, helped by a WebGL-based computation backend that takes advantage of the GPU from within the browser.

Good examples are:

- Magenta.js (music and art using machine learning): a Google research project on creative neural networks (https://magenta.tensorflow.org/) that offers open-source tools, models and demos (interactive music composition, amongst others).

- Coco-ssd: object detection in webcam-streamed images using a MobileNet neural network.

On the Node.js side, TensorFlow.js is available in two versions: the first is written entirely in JavaScript, while the second uses the same C++ backend (and CUDA-based backend for NVIDIA GPUs) as the Python version of TensorFlow.

The TensorFlow.js team recently released a Wasm backend, which speeds up execution in browsers by running C++ kernels compiled to WebAssembly on the CPU, without using a GPU. A WebGPU backend (WebGPU being the successor to the WebGL standard) is due to be released soon.

In the second part of this article, we will describe how to use TensorFlow.js with models coming from Python TensorFlow or with ready-to-use models, in the browser and in the cloud, using our WarpJS product. JavaScript fans will then have everything they need to start exploring this area. In the meantime, visit https://www.tensorflow.org/js for API documentation and installation guidelines, including tutorials, guides and demos.

About the author

Dominique d'Inverno holds an MSc in telecommunications engineering. After 20 years of experience, including embedded electronics design, mobile computing systems architecture and mathematical modeling, he joined the ScaleDynamics team in 2018 as an AI and algorithm development engineer.
