Background

Real-time onscreen subtitles are a must-have feature in most video apps. However, building this feature can be costly for small and medium-sized developers, and even once it is implemented, speech recognition is often inaccurate. Fortunately, there's a better way: HUAWEI ML Kit, which is easy to integrate and makes real-time transcription straightforward.

Introduction to ML Kit

ML Kit gives your app access to Huawei's machine learning technology across a wide range of scenarios. It provides a broad set of easy-to-use machine learning capabilities that serve as building blocks for AI apps. ML Kit capabilities include those related to:

- Text (including text recognition, document recognition, and ID card recognition)
- Language/Voice (such as real-time/on-device translation, automatic speech recognition, and real-time transcription)
- Image (such as image classification, object detection and tracking, and landmark recognition)
- Face/Body (such as face detection, skeleton detection, liveness detection, and face verification)
- Natural language processing (text embedding)
- Custom model (including the on-device inference framework and model development tool)

Real-time transcription is the capability needed for the subtitle feature described above. Let's move on to how to integrate this service.

Integrating Real-Time Transcription

Steps

1. Registering as a Huawei developer on HUAWEI Developers

2. Creating an app

Create an app in AppGallery Connect. For details, see Getting Started with Android.

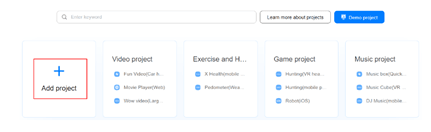

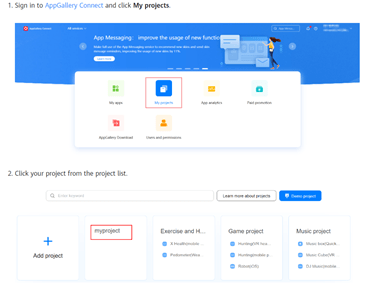

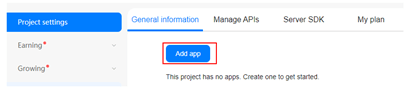

We've provided some screenshots for your reference:

3. Enabling ML Kit

4. Integrating the HMS Core SDK

Add the AppGallery Connect configuration file by completing the steps below:

- Download the agconnect-services.json file and copy it to the app directory of your Android Studio project.

- Call setApiKey during app initialization.

To learn more, go to Adding the AppGallery Connect Configuration File.

- Add build dependencies.

      implementation 'com.huawei.hms:ml-computer-voice-realtimetranscription:2.2.0.300'

- Add the AppGallery Connect plugin configuration.

Method 1: Add the following information under the declaration in the file header:

    apply plugin: 'com.huawei.agconnect'

Method 2: Add the plugin configuration in the plugins block.

    plugins {
        id 'com.android.application'
        // Add the following configuration:
        id 'com.huawei.agconnect'
    }

Please refer to Integrating the Real-Time Transcription SDK to learn more.

5. Setting the cloud authentication information

When using on-cloud services of ML Kit, you can set the API key or access token (recommended) in either of the following ways:

Access token

You can use the following API to initialize the access token when the app is started. The access token does not need to be set again once initialized.

    MLApplication.getInstance().setAccessToken("your access token");

API key

You can use the following API to initialize the API key when the app is started. The API key does not need to be set again once initialized.

    MLApplication.getInstance().setApiKey("your ApiKey");

For details, see Notes on Using Cloud Authentication Information.

Code Development

- Create and configure a speech recognizer.

      MLSpeechRealTimeTranscriptionConfig config = new MLSpeechRealTimeTranscriptionConfig.Factory()
              // Set the language. Currently, this service supports Mandarin Chinese, English, and French.
              .setLanguage(MLSpeechRealTimeTranscriptionConstants.LAN_ZH_CN)
              // Punctuate the text recognized from the speech.
              .enablePunctuation(true)
              // Enable sentence time offsets.
              .enableSentenceTimeOffset(true)
              // Enable word time offsets.
              .enableWordTimeOffset(true)
              // Set the application scenario. SCENES_SHOPPING indicates shopping, which is supported
              // only for Chinese. In this scenario, recognition of Huawei product names is optimized.
              .setScenes(MLSpeechRealTimeTranscriptionConstants.SCENES_SHOPPING)
              .create();

      MLSpeechRealTimeTranscription mSpeechRecognizer = MLSpeechRealTimeTranscription.getInstance();
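
With sentence and word time offsets enabled, recognized text can be lined up against the video timeline, which is what a subtitle track needs. The following is only a sketch and not part of ML Kit: it assumes the offsets are expressed in milliseconds from the start of the audio (worth confirming against the API reference) and formats them as SRT-style cue timestamps. `CueTime`, `format`, and `cueRange` are hypothetical names introduced for illustration.

```java
import java.util.Locale;

/** Hypothetical helper, not part of ML Kit: formats offsets as SRT-style timestamps. */
final class CueTime {
    private CueTime() { }

    /** Converts an offset in milliseconds (assumed unit) to "HH:MM:SS,mmm". */
    static String format(long offsetMs) {
        if (offsetMs < 0) {
            throw new IllegalArgumentException("offset must be non-negative");
        }
        long hours = offsetMs / 3_600_000;
        long minutes = (offsetMs / 60_000) % 60;
        long seconds = (offsetMs / 1_000) % 60;
        long millis = offsetMs % 1_000;
        // Locale.ROOT keeps the digits ASCII regardless of the device locale.
        return String.format(Locale.ROOT, "%02d:%02d:%02d,%03d", hours, minutes, seconds, millis);
    }

    /** Builds the time range line of one subtitle cue. */
    static String cueRange(long startMs, long endMs) {
        if (endMs < startMs) {
            throw new IllegalArgumentException("end must not precede start");
        }
        return format(startMs) + " --> " + format(endMs);
    }
}
```

For example, `CueTime.cueRange(1_500, 3_250)` yields `00:00:01,500 --> 00:00:03,250`.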

- Create a speech recognition result listener callback.

      // Implement the MLSpeechRealTimeTranscriptionListener interface and its callback methods.
      protected class SpeechRecognitionListener implements MLSpeechRealTimeTranscriptionListener {
          @Override
          public void onStartListening() {
              // The recorder starts to receive speech.
          }

          @Override
          public void onStartingOfSpeech() {
              // The speech recognizer detects that the user has started to speak.
          }

          @Override
          public void onVoiceDataReceived(byte[] data, float energy, Bundle bundle) {
              // Receives the original PCM stream and the audio power.
              // This callback does not run on the main thread; the data is processed in a sub-thread.
          }

          @Override
          public void onRecognizingResults(Bundle partialResults) {
              // Receive the recognized text from MLSpeechRealTimeTranscription.
          }

          @Override
          public void onError(int error, String errorMessage) {
              // Called when an error occurs in recognition.
          }

          @Override
          public void onState(int state, Bundle params) {
              // Notify the app of the status change.
          }
      }
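
The raw audio passed to onVoiceDataReceived can drive a simple input level meter. The sketch below is not part of ML Kit and makes an assumption the article does not state: that the byte array holds 16-bit little-endian PCM samples. `PcmLevel` and `rms` are hypothetical names.

```java
/** Hypothetical helper, not part of ML Kit: estimates loudness from raw PCM bytes. */
final class PcmLevel {
    private PcmLevel() { }

    /**
     * Returns the RMS amplitude scaled to the range 0.0 to 1.0.
     * Assumes 16-bit little-endian PCM; a trailing odd byte is ignored.
     */
    static double rms(byte[] pcm) {
        if (pcm == null) {
            return 0.0;
        }
        int sampleCount = pcm.length / 2;
        if (sampleCount == 0) {
            return 0.0;
        }
        double sumOfSquares = 0.0;
        for (int i = 0; i < sampleCount; i++) {
            int low = pcm[2 * i] & 0xFF;   // low byte, treated as unsigned
            int high = pcm[2 * i + 1];     // high byte, keeps the sign
            int sample = (high << 8) | low;
            double scaled = sample / 32768.0;
            sumOfSquares += scaled * scaled;
        }
        return Math.sqrt(sumOfSquares / sampleCount);
    }
}
```

Because the callback does not run on the main thread, any UI update based on this value would need to be posted back to the main thread.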

Bind the listener to the speech recognizer. Recognition results are delivered through the listener callbacks, in particular onRecognizingResults. Design the UI according to these results, for example by displaying the text transcribed from the input speech.

    mSpeechRecognizer.setRealTimeTranscriptionListener(new SpeechRecognitionListener());
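
A subtitle view usually shows a few finished sentences plus the sentence still being recognized. The sketch below is one possible way to hold that state and is not part of ML Kit. It assumes that each onRecognizingResults callback can be reduced to a text string and a flag saying whether the sentence is final; how these are read from the Bundle is left to the API reference. `SubtitleBuffer`, `update`, and `render` are hypothetical names.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical helper, not part of ML Kit: keeps recent final lines plus the line in progress. */
final class SubtitleBuffer {
    private final int maxLines;
    private final Deque<String> finalLines = new ArrayDeque<>();
    private String pending = "";

    SubtitleBuffer(int maxLines) {
        if (maxLines < 1) {
            throw new IllegalArgumentException("maxLines must be at least 1");
        }
        this.maxLines = maxLines;
    }

    /** Replaces the in-progress line, or commits it when the recognizer marks it final. */
    synchronized void update(String text, boolean isFinal) {
        if (text == null) {
            return;
        }
        if (isFinal) {
            finalLines.addLast(text);
            while (finalLines.size() > maxLines) {
                finalLines.removeFirst(); // drop the oldest line
            }
            pending = "";
        } else {
            pending = text;
        }
    }

    /** Returns the text to display, one sentence per line. */
    synchronized String render() {
        StringBuilder out = new StringBuilder(String.join("\n", finalLines));
        if (!pending.isEmpty()) {
            if (out.length() > 0) {
                out.append('\n');
            }
            out.append(pending);
        }
        return out.toString();
    }
}
```

The methods are synchronized because the callbacks and the UI may run on different threads; the rendered string would then be set on a text view from the main thread.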

- Call startRecognizing to start speech recognition.

      mSpeechRecognizer.startRecognizing(config);

- Release resources after recognition is complete.

      if (mSpeechRecognizer != null) {
          mSpeechRecognizer.destroy();
      }

- (Optional) Obtain the list of supported languages.

      MLSpeechRealTimeTranscription.getInstance()
              .getLanguages(new MLSpeechRealTimeTranscription.LanguageCallback() {
                  @Override
                  public void onResult(List<String> result) {
                      Log.i(TAG, "supported languages: " + result.toString());
                  }

                  @Override
                  public void onError(int errorCode, String errorMsg) {
                      Log.e(TAG, "errorCode: " + errorCode + ", errorMsg: " + errorMsg);
                  }
              });
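
Once the supported languages are known, the app can choose one before building the configuration. The sketch below is not part of ML Kit. It assumes the returned codes look like "zh-CN" or "en-US" (an assumption about their format) and prefers an exact match, then a match on the language part, then a fallback. `LanguagePicker` and `pick` are hypothetical names.

```java
import java.util.List;

/** Hypothetical helper, not part of ML Kit: picks a transcription language from a supported list. */
final class LanguagePicker {
    private LanguagePicker() { }

    /** Returns the best supported match for the preferred code, or the fallback if none matches. */
    static String pick(List<String> supported, String preferred, String fallback) {
        if (supported == null || preferred == null) {
            return fallback;
        }
        // First pass: exact match, ignoring case.
        for (String code : supported) {
            if (preferred.equalsIgnoreCase(code)) {
                return code;
            }
        }
        // Second pass: same language, different region (for example en-GB and en-US).
        String wantedLanguage = languagePart(preferred);
        for (String code : supported) {
            if (code != null && wantedLanguage.equalsIgnoreCase(languagePart(code))) {
                return code;
            }
        }
        return fallback;
    }

    /** Returns the part of the code before the first hyphen, or the whole code if there is none. */
    private static String languagePart(String code) {
        int dash = code.indexOf('-');
        return dash < 0 ? code : code.substring(0, dash);
    }
}
```

The chosen code could then be passed to setLanguage when the configuration is created.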

We've finished integration here, so let's test it out on a simple screen.

Tap START RECORDING. The text recognized from the input speech is displayed in the lower portion of the screen.

We've now built a simple audio transcription function.

Eager to build a fancier UI, with stunning animations, and other effects? By all means, take your shot!

For more information, please visit:

- Real-Time Transcription
- Documentation on the HUAWEI Developers website
- HUAWEI Developers official website
- Reddit to join developer discussions
- GitHub or Gitee to download the demo and sample code
- Stack Overflow to solve integration problems
