In this blog post, I'll describe how I added subtitle support to the Channel Engine so that it can play subtitle tracks. I assume that the reader is somewhat familiar with the HLS streaming format and the Channel Engine, or has at least read the documentation in the Channel Engine git repo or this article beforehand.

Introduction

The Eyevinn Channel Engine is an open-source service that works well with VODs, playing them out as a pseudo-live stream.

Before we get into it, I need to clarify what I mean by "subtitle tracks" and "subtitle groups", as I will use these terms throughout this post.

In an HLS multivariant playlist, you can have a media item (an EXT-X-MEDIA tag) with the attribute TYPE=SUBTITLES that references a media playlist containing the subtitle segments. This is what I refer to as a "subtitle track". A multivariant playlist can contain multiple subtitle tracks, and these can be grouped by the media item's GROUP-ID attribute. Subtitle tracks that share the same GROUP-ID value are what I refer to as a "subtitle group". In other words, a subtitle group consists of one or more subtitle tracks. GROUP-ID is a required attribute on every HLS media item.
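The following small TypeScript sketch makes the terminology concrete. It models subtitle media items as plain objects and collects them into subtitle groups by GROUP-ID. The `SubtitleMediaItem` type and the `groupSubtitleTracks` helper are hypothetical and are not part of the Channel Engine or hls-vodtolive.

```typescript
// Hypothetical shape of a subtitle media item, i.e. an EXT-X-MEDIA tag with TYPE=SUBTITLES.
interface SubtitleMediaItem {
  groupId: string; // GROUP-ID attribute
  language: string; // LANGUAGE attribute
  name: string; // NAME attribute
  uri: string; // URI of the subtitle media playlist
}

// Collect subtitle tracks into subtitle groups keyed by their GROUP-ID.
function groupSubtitleTracks(
  tracks: SubtitleMediaItem[],
): Map<string, SubtitleMediaItem[]> {
  const groups = new Map<string, SubtitleMediaItem[]>();
  for (const track of tracks) {
    const group = groups.get(track.groupId);
    if (group) {
      group.push(track);
    } else {
      groups.set(track.groupId, [track]);
    }
  }
  return groups;
}

// Example: two tracks share GROUP-ID "subs", a third one is alone in "subs-alt".
const exampleTracks: SubtitleMediaItem[] = [
  { groupId: "subs", language: "en", name: "English", uri: "subs/en.m3u8" },
  { groupId: "subs", language: "sv", name: "Svenska", uri: "subs/sv.m3u8" },
  { groupId: "subs-alt", language: "en", name: "English", uri: "alt/en.m3u8" },
];
const subtitleGroups = groupSubtitleTracks(exampleTracks);
subtitleGroups.forEach((members, groupId) => {
  console.log(groupId, members.map((m) => m.language));
});
```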

Challenges

Implementing this came with some challenges. A few things needed to be taken into account, namely:

- How to handle subtitle tracks between different VODs.

- How to handle VODs without subtitles.

- How to handle VODs where subtitle segments are longer than video segments.

- How to handle subtitle groups.

- How to handle subtitle tracks with the same language.

Delimitations

In its current state, the implemented solution does not cover every edge case, meaning that some of the points listed under Challenges have yet to be addressed. However, the implementation works fairly well for the most basic case and can be extended in the future to handle more edge cases.

When faced with multiple subtitle tracks with the same name, my solution picks the first one. Also, a subtitle track that was not defined at startup is ignored when it shows up.
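The two rules above can be sketched as a small selection function. This is a minimal illustration, not the actual Channel Engine code. It assumes that source tracks are matched to predefined tracks on their language field. The `VodSubtitleTrack` and `PredefinedTrack` types and the `selectSubtitleTracks` helper are hypothetical.

```typescript
// Hypothetical subtitle track as found in a source VOD's multivariant playlist.
interface VodSubtitleTrack {
  language: string;
  name: string;
  uri: string;
}

// Hypothetical predefined track, configured before the channel starts.
interface PredefinedTrack {
  language: string;
  name: string;
}

// For each predefined track, pick the FIRST source track with the same language.
// Source tracks whose language was never predefined are simply ignored.
function selectSubtitleTracks(
  predefined: PredefinedTrack[],
  vodTracks: VodSubtitleTrack[],
): Map<string, VodSubtitleTrack | undefined> {
  const selected = new Map<string, VodSubtitleTrack | undefined>();
  for (const p of predefined) {
    // Array.prototype.find returns the first match, so later duplicates are skipped.
    selected.set(p.language, vodTracks.find((t) => t.language === p.language));
  }
  return selected;
}

const chosenTracks = selectSubtitleTracks(
  [
    { language: "en", name: "English" },
    { language: "sv", name: "Svenska" },
  ],
  [
    { language: "en", name: "English", uri: "en-1.m3u8" },
    { language: "en", name: "English", uri: "en-2.m3u8" }, // duplicate, skipped
    { language: "fi", name: "Suomi", uri: "fi.m3u8" }, // not predefined, ignored
  ],
);
console.log(chosenTracks.get("en")?.uri); // en-1.m3u8
```

In this sketch, a predefined language with no matching source track maps to `undefined`. What to serve in that case is left to the caller.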

Proposed solutions are described in the Future Work section.

Implementation

The following steps give an overview of how I added support for subtitles in the Channel Engine.

Step 1: Adding subtitle media items to the multivariant playlist based on a set of predefined subtitle languages.

To address the challenge of how the client selects a specific subtitle group and subtitle track, I implemented a method that works the same way as for audio. To read more about the audio implementation, read this article.

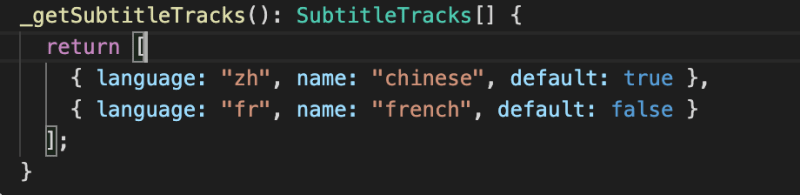

The plan was to let the client select a track from a predefined set. To make this work the same way as it does for VOD profiles, I needed to extend the ChannelManager class with an extra function.

We do this to solve the issue of different VODs having different subtitle languages.

Media items are added to the multivariant playlist with attribute values set according to a predefined JSON object, defined in a _getSubtitleTracks() function in the ChannelManager class.

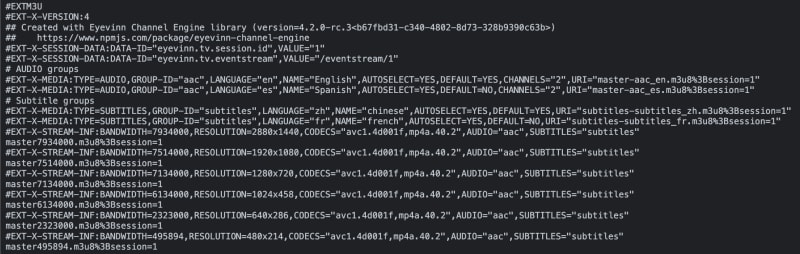

Now the resulting multivariant playlist looks something like this...

Note: GROUP-ID is not a field in the subtitleTrack JSON, so the GROUP-ID of the subtitle media items in the multivariant playlist is instead fixed by the hls-vodtolive library. This is done to handle VODs without subtitles. See Delimitations.
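Here is a minimal sketch of how predefined subtitle tracks could be turned into media items. It assumes that the entries returned by `_getSubtitleTracks()` have `language` and `name` fields and an optional `default` field, similar to the audio tracks. The GROUP-ID value `"subs"` and the `subtitles-<language>.m3u8` URI pattern are hypothetical placeholders, since the real GROUP-ID is set by hls-vodtolive.

```typescript
// Assumed shape of one entry returned by _getSubtitleTracks(), mirroring the audio tracks.
interface SubtitleTrackConfig {
  language: string;
  name: string;
  default?: boolean;
}

// Placeholder GROUP-ID. In the real setup this value is fixed by hls-vodtolive.
const SUBTITLE_GROUP_ID = "subs";

// Turn each predefined track into an EXT-X-MEDIA line for the multivariant playlist.
// The URI pattern is a made-up example, not the Channel Engine's actual route.
function buildSubtitleMediaLines(tracks: SubtitleTrackConfig[]): string[] {
  return tracks.map((track) => {
    const isDefault = track.default === true ? "YES" : "NO";
    return (
      `#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="${SUBTITLE_GROUP_ID}",` +
      `LANGUAGE="${track.language}",NAME="${track.name}",` +
      `DEFAULT=${isDefault},AUTOSELECT=YES,` +
      `URI="subtitles-${track.language}.m3u8"`
    );
  });
}

const mediaLines = buildSubtitleMediaLines([
  { language: "en", name: "English", default: true },
  { language: "sv", name: "Svenska" },
]);
mediaLines.forEach((line) => console.log(line));
```

Per the HLS specification, each EXT-X-STREAM-INF variant would also reference the group through its SUBTITLES attribute (here `SUBTITLES="subs"`) so that players can associate the variants with the subtitle tracks.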

Step 2: Making it possible to load subtitles in hls-vodtolive

Now that we know what segments the client is looking for, how do we find them? This is where the Eyevinn dependency package hls-vodtolive comes into play.

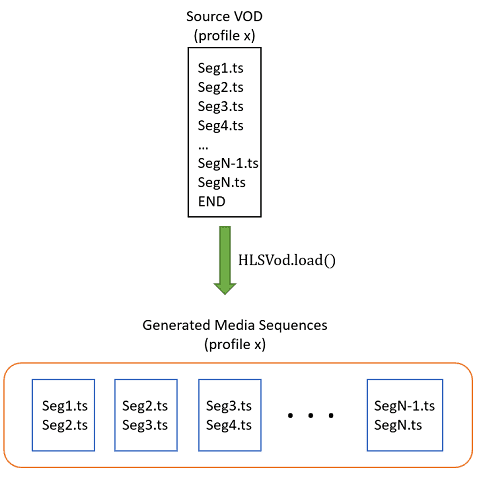

In short, the hls-vodtolive package provides an HLSVod class which, given a VOD multivariant playlist as input, loads all segments referenced in that playlist and stores them in a JSON object organized by profile. An HLSVod object also divides the segments into an array of subsets that we call media sequences. Each media sequence is then used to create a pseudo-live-looking media playlist.
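The idea of media sequences can be illustrated with a simplified sliding-window sketch. This is not hls-vodtolive's actual algorithm. It assumes a fixed number of segments per window and advances one segment per media sequence. `Segment`, `toMediaSequences` and `renderMediaPlaylist` are hypothetical names.

```typescript
// Hypothetical segment representation: duration in seconds plus a URI.
interface Segment {
  duration: number;
  uri: string;
}

// Split a VOD's segments into overlapping windows. Media sequence n holds
// segments n .. n + windowSize - 1, so the playlist advances one segment at a time.
function toMediaSequences(segments: Segment[], windowSize: number): Segment[][] {
  if (windowSize <= 0) throw new Error("windowSize must be positive");
  if (segments.length <= windowSize) return [segments.slice()];
  const sequences: Segment[][] = [];
  for (let start = 0; start + windowSize <= segments.length; start++) {
    sequences.push(segments.slice(start, start + windowSize));
  }
  return sequences;
}

// Render one media sequence as a live-style media playlist (no #EXT-X-ENDLIST).
function renderMediaPlaylist(sequence: Segment[], mediaSequence: number): string {
  const targetDuration = Math.ceil(
    sequence.reduce((max, s) => Math.max(max, s.duration), 0),
  );
  const lines = [
    "#EXTM3U",
    "#EXT-X-VERSION:3",
    `#EXT-X-TARGETDURATION:${targetDuration}`,
    `#EXT-X-MEDIA-SEQUENCE:${mediaSequence}`,
  ];
  for (const s of sequence) {
    lines.push(`#EXTINF:${s.duration.toFixed(3)},`, s.uri);
  }
  return lines.join("\n");
}

// Example: five 6-second segments with a window of three give three media sequences.
const vodSegments: Segment[] = [0, 1, 2, 3, 4].map((i) => ({
  duration: 6,
  uri: `seg${i}.ts`,
}));
const mediaSequences = toMediaSequences(vodSegments, 3);
console.log(renderMediaPlaylist(mediaSequences[1], 1));
```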

There were three important things that needed to be done here.

Loading subtitle segments from the source VOD and creating a pseudo-live-looking subtitle playlist.

What to do when a VOD does not have subtitles?

We need to handle VODs without subtitles because the client expects subtitles. If we do not provide them, the client starts buffering until they are provided.

We solved this by looking at the source VOD and creating dummy subtitle segments that have the same durations as the video segments and cover the entire VOD. The dummy segments contain a URL to an endpoint that needs to be defined in the options when creating an HLSVod instance from hls-vodtolive. The handler of that endpoint should return empty WebVTT segments.

How to handle subtitle segments that are longer than video segments?

Why do we need this? Because we are creating a live stream, and in a live stream the subtitle segments need to have the same duration as the segments of the other media.

We solved this by creating smaller segments out of a bigger segment, or vice versa. The new segments have a URL pointing to an endpoint that slices a WebVTT file into smaller files.

The URL has a few query parameters that tell the endpoint which file or files to slice from and at what time in the VOD.
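The mapping from source subtitle segments to video-aligned segments can be sketched as follows. This is a hypothetical illustration: `buildSliceSegments` and the query parameter names `vtt`, `start` and `end` are made up. It also assumes that cue timestamps in the source WebVTT files are relative to the start of the VOD, so a time range in the VOD is enough for the endpoint to pick the right cues.

```typescript
// Hypothetical segment representation: duration in seconds plus a URI.
interface TimedSegment {
  duration: number;
  uri: string;
}

// Build one slice-endpoint segment per video segment. Each URL lists every
// source subtitle file overlapping the video segment's time range in the VOD.
// The query parameter names (vtt, start, end) are hypothetical.
function buildSliceSegments(
  video: TimedSegment[],
  subtitles: TimedSegment[],
  sliceEndpoint: string,
): TimedSegment[] {
  // Absolute [start, end) range of every source subtitle segment within the VOD.
  const subtitleRanges: { start: number; end: number; uri: string }[] = [];
  let offset = 0;
  for (const s of subtitles) {
    subtitleRanges.push({ start: offset, end: offset + s.duration, uri: s.uri });
    offset += s.duration;
  }

  const result: TimedSegment[] = [];
  let videoStart = 0;
  for (const v of video) {
    const videoEnd = videoStart + v.duration;
    // A source segment overlaps if it starts before this video segment ends and
    // ends after it starts. This covers both long-into-short and short-into-long.
    const params = subtitleRanges
      .filter((r) => r.start < videoEnd && r.end > videoStart)
      .map((r) => `vtt=${encodeURIComponent(r.uri)}`);
    params.push(`start=${videoStart}`, `end=${videoEnd}`);
    result.push({ duration: v.duration, uri: `${sliceEndpoint}?${params.join("&")}` });
    videoStart = videoEnd;
  }
  return result;
}

// Example: 10 s + 8 s source subtitle segments re-cut to match three 6 s video segments.
const slicedSegments = buildSliceSegments(
  [
    { duration: 6, uri: "v0.ts" },
    { duration: 6, uri: "v1.ts" },
    { duration: 6, uri: "v2.ts" },
  ],
  [
    { duration: 10, uri: "subs/0.vtt" },
    { duration: 8, uri: "subs/1.vtt" },
  ],
  "http://localhost:8000/slice", // hypothetical endpoint URL
);
slicedSegments.forEach((s) => console.log(s.duration, s.uri));
```

The middle video segment (6 s to 12 s) straddles both source files, so its URL lists both of them.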

Step 3: Adding the dummy endpoint and the slice endpoint to the Channel Engine

The Channel Engine was extended with two endpoints: the dummy endpoint and the slice endpoint. As previously mentioned, the dummy endpoint only returns an empty WebVTT file containing just the following lines:

WEBVTT

X-TIMESTAMP-MAP=MPEGTS:0,LOCAL:00:00:00.000
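The fix for VODs without subtitles has two halves: dummy segments on the hls-vodtolive side and the dummy endpoint on the Channel Engine side. Both are sketched below. All names (`createDummySubtitleSegments`, `handleDummySubtitleRequest`, the `HttpResponse` shape and the example endpoint URL) are hypothetical. The handler is also written framework-agnostically rather than against the Channel Engine's actual server. In the served file, the X-TIMESTAMP-MAP line directly follows the WEBVTT line, since it is a header and the WebVTT header ends at the first blank line.

```typescript
interface DummySegment {
  duration: number; // seconds, copied from the matching video segment
  uri: string; // points at the dummy endpoint
}

// hls-vodtolive side (hypothetical): one dummy subtitle segment per video segment,
// so the subtitle playlist covers the entire VOD with matching durations.
function createDummySubtitleSegments(
  videoDurations: number[],
  dummyEndpoint: string,
): DummySegment[] {
  return videoDurations.map((duration) => ({ duration, uri: dummyEndpoint }));
}

// Framework-agnostic response shape (hypothetical).
interface HttpResponse {
  status: number;
  headers: Record<string, string>;
  body: string;
}

// An empty WebVTT file: the header (WEBVTT + X-TIMESTAMP-MAP) and no cues.
const EMPTY_WEBVTT = "WEBVTT\nX-TIMESTAMP-MAP=MPEGTS:0,LOCAL:00:00:00.000\n";

// Channel Engine side (hypothetical): the dummy endpoint always returns the same empty file.
function handleDummySubtitleRequest(): HttpResponse {
  return {
    status: 200,
    headers: { "Content-Type": "text/vtt" },
    body: EMPTY_WEBVTT,
  };
}

const dummySegments = createDummySubtitleSegments(
  [6, 6, 4.5],
  "http://localhost:8000/dummy.vtt", // hypothetical endpoint URL
);
const dummyResponse = handleDummySubtitleRequest();
console.log(dummySegments.length, dummyResponse.body);
```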

The slice endpoint, on the other hand, is a bit more complex: it needs to fetch and parse a WebVTT file, then extract and return the relevant subtitle lines.
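Here is a minimal sketch of the slicing step. It assumes that the WebVTT text has already been fetched and that cue timestamps are VOD-relative. The sketch keeps every cue that overlaps the requested range without clipping or shifting cue times, so a cue spanning a segment boundary is returned in both segments. It also reuses the source file's header, which preserves any X-TIMESTAMP-MAP line, and drops cue identifiers. The function names are hypothetical, and NOTE, STYLE and REGION blocks are simply skipped.

```typescript
// Hypothetical in-memory representation of one WebVTT cue.
interface Cue {
  start: number; // seconds
  end: number; // seconds
  settings: string; // cue settings after the end timestamp, e.g. "line:90%"
  text: string[]; // payload lines
}

// "hh:mm:ss.ttt" or "mm:ss.ttt" -> seconds.
function parseTimestamp(ts: string): number {
  return ts.split(":").reduce((acc, part) => acc * 60 + Number(part), 0);
}

// seconds -> "hh:mm:ss.ttt".
function formatTimestamp(seconds: number): string {
  const ms = Math.round(seconds * 1000);
  const pad = (n: number, width: number) => String(n).padStart(width, "0");
  const h = Math.floor(ms / 3600000);
  const m = Math.floor((ms % 3600000) / 60000);
  const s = Math.floor((ms % 60000) / 1000);
  return `${pad(h, 2)}:${pad(m, 2)}:${pad(s, 2)}.${pad(ms % 1000, 3)}`;
}

// Split a WebVTT file into blocks separated by blank lines.
function splitBlocks(vtt: string): string[] {
  return vtt.replace(/\r\n/g, "\n").split(/\n{2,}/);
}

// Parse the cue blocks of a WebVTT file; the first block is the header.
function parseCues(vtt: string): Cue[] {
  const cues: Cue[] = [];
  for (const block of splitBlocks(vtt).slice(1)) {
    const lines = block.split("\n");
    const timingIndex = lines.findIndex((line) => line.includes("-->"));
    if (timingIndex === -1) continue; // NOTE, STYLE, REGION or empty block
    const [startPart, endPart] = lines[timingIndex].split("-->");
    const [endTime, ...settings] = endPart.trim().split(/\s+/);
    cues.push({
      start: parseTimestamp(startPart.trim()),
      end: parseTimestamp(endTime),
      settings: settings.join(" "),
      text: lines.slice(timingIndex + 1).filter((line) => line.length > 0),
    });
  }
  return cues;
}

// Return a new WebVTT file holding the cues that overlap [start, end).
function sliceWebVtt(vtt: string, start: number, end: number): string {
  const out = [splitBlocks(vtt)[0], ""];
  for (const cue of parseCues(vtt)) {
    if (cue.start >= end || cue.end <= start) continue;
    const timing = `${formatTimestamp(cue.start)} --> ${formatTimestamp(cue.end)}`;
    out.push(cue.settings ? `${timing} ${cue.settings}` : timing, ...cue.text, "");
  }
  return out.join("\n");
}

const sourceVtt = [
  "WEBVTT",
  "",
  "1",
  "00:00:01.000 --> 00:00:04.000",
  "Hello",
  "",
  "2",
  "00:00:07.000 --> 00:00:09.500 line:90%",
  "World",
  "",
].join("\n");
console.log(sliceWebVtt(sourceVtt, 6, 12));
```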

Future Work

- Add support for subtitle characteristics. Supporting characteristics would make it possible to offer the same language multiple times, e.g. simplified Swedish and regular Swedish.
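One possible shape for this is to tell tracks apart by the pair of language and CHARACTERISTICS value instead of by language alone. `public.easy-to-read` is one of the characteristics the HLS specification defines for subtitle renditions. The types and helper names in this sketch are hypothetical.

```typescript
// Hypothetical track description extended with the CHARACTERISTICS attribute.
interface CharacterizedTrack {
  language: string;
  name: string;
  characteristics?: string; // e.g. "public.easy-to-read"
}

// Key a track by language plus its (order-insensitive) characteristics.
function trackKey(track: CharacterizedTrack): string {
  const characteristics = (track.characteristics ?? "")
    .split(",")
    .map((c) => c.trim())
    .filter((c) => c.length > 0)
    .sort()
    .join(",");
  return `${track.language}|${characteristics}`;
}

// Keep the first track per key: a "first one wins" rule applied per
// (language, characteristics) pair instead of per language alone.
function dedupeTracks(tracks: CharacterizedTrack[]): CharacterizedTrack[] {
  const seen = new Set<string>();
  return tracks.filter((track) => {
    const key = trackKey(track);
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}

const swedishTracks = dedupeTracks([
  { language: "sv", name: "Svenska" },
  { language: "sv", name: "Svenska (easy to read)", characteristics: "public.easy-to-read" },
  { language: "sv", name: "Svenska (duplicate)" },
]);
console.log(swedishTracks.map((t) => t.name));
```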
