People usually start by crawling, then walk, progress to running, and some do stranger things, like Parkour.

Software Developers usually start by coding, then testing, progress to TDD, and some do stranger things, like testing tests.

Welcome! 🤩 This is the first article in a short series, providing more information about "tests of tests." 🧪

I will discuss the motivations and the alternatives, and then detail how we do it in Python projects at Trybe.

How I came to know "tests of tests"

Nice to meet you, I'm Bux! At the moment I'm writing this text, I am an Instruction Specialist (i.e., a teacher and content producer) at Trybe, a Brazilian technology school, where I have been working for nearly 3 years.

In our Web Development course, students work on projects in which we assess if they have learned the content. These projects have automated tests, and the student must implement the necessary code to pass the tests in order to "pass the exam".

For example: when teaching Flask, we can have a project that requires the implementation of a CRUD for songs, and we create tests that validate the requirements of this CRUD. If the student's implementation passes our tests, they are approved. ✅

But what happens when we teach students how to create automated tests? How do we evaluate if they have created adequate tests?

What are tests of tests

In summary, "tests of tests" are the code we write to answer that last question: have tests been written that actually verify the specified requirements?

This is a question that any team of developers (or quality analysts) deeply concerned with the quality of their tests can come across. But in our team's case, the intention was to "just" assess if the class can create good software tests.

Imagine, for example, a student needing to create tests for a function that searches for books, based on a string in their title, in the database. This search may have more details, such as being case-insensitive, returning paginated content, etc. I need to have an (automated) way to ensure that the student has created tests for this function.
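To make the scenario concrete, here is a minimal sketch of what such a function and the student's tests might look like. Everything in it is an assumption for illustration: the `search_books` name, its parameters, and the in-memory `BOOKS` list standing in for the database.

```python
# Hypothetical in-memory stand-in for the database table of books.
BOOKS = ["Clean Code", "The Clean Coder", "Refactoring", "Code Complete"]


def search_books(term, page=1, page_size=2):
    """Return one page of titles containing `term`, ignoring case."""
    matches = [title for title in BOOKS if term.lower() in title.lower()]
    start = (page - 1) * page_size
    return matches[start:start + page_size]


# The kind of tests we would expect the student to write.
def test_search_is_case_insensitive():
    assert search_books("CLEAN") == ["Clean Code", "The Clean Coder"]


def test_search_is_paginated():
    assert search_books("code", page=1) == ["Clean Code", "The Clean Coder"]
    assert search_books("code", page=2) == ["Code Complete"]
```

The open problem is not writing these tests, but checking automatically that the student wrote them well.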

What would you do?

Before moving on in the reading, take a moment to reflect: how would you do this? 🤔

Alternatives

Test Coverage Tool

This is one of the simplest ways to validate whether a code section is being tested. Most modern languages have ways to check how many and which lines of the source code are being tested when running a test.

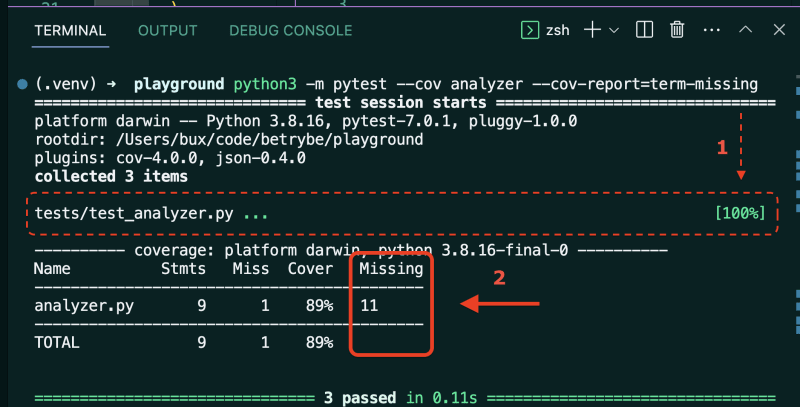

In Python, we can use the Pytest plugin called pytest-cov (which, under the hood, uses coverage.py). With a few parameters, we can find out which lines of a specific file are "uncovered" by a specific test.

I can then run the student's tests and approve them if they have 100% coverage! 🎉

🟢 Advantages: It is simple to build and maintain, and it can be applied to virtually any context. Furthermore, the tool provides direct and explicit feedback (essential in an educational context).

🔴 Disadvantages: Test coverage is not a foolproof metric of test quality, as we can achieve 100% coverage without making a single `assert`.
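As an illustration of that weakness, the sketch below uses a hypothetical `apply_discount` function (not part of any Trybe project). The test executes every line of the function, so a line-coverage tool would report it as fully covered, yet the test checks nothing.

```python
def apply_discount(price, is_member):
    """Members get 10% off; everyone else pays full price."""
    if is_member:
        return price * 0.9
    return price


def test_apply_discount():
    # Both branches run, so every line of apply_discount is executed...
    apply_discount(100, True)
    apply_discount(100, False)
    # ...but there is no assert, so a wrong discount would go unnoticed.
```

If the discount were changed to `price * 1.1`, this test would still pass with the same coverage.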

Mutation Testing Tool

🌟 Mutation tests work on a simple but brilliant idea:

Unit tests should pass with the correct implementation of that unit, and should fail with incorrect implementations of that unit.

Libraries like mutmut can do this for us: the tool generates mutations in the source code and runs the tests again. Mutations are made at the level of the Abstract Syntax Tree (AST), such as changing comparators (< to >=) or booleans (True to False).

A good test suite is one in which at least one test fails for every mutation. If your tests continue to pass for some of the mutations, it means that they can be improved by validating more use cases.
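The idea can be sketched by hand with Python's standard `ast` module. This is not how mutmut is implemented; it is a simplified illustration, and the `is_adult` function, the `FlipGreaterOrEqual` operator, and the helper names are all made up for the example.

```python
import ast

SOURCE = """
def is_adult(age):
    return age >= 18
"""


class FlipGreaterOrEqual(ast.NodeTransformer):
    """Mutation operator: replace every `>=` comparison with `<`."""

    def visit_Compare(self, node):
        self.generic_visit(node)
        node.ops = [ast.Lt() if isinstance(op, ast.GtE) else op for op in node.ops]
        return node


def load(tree):
    """Compile a module AST and return the is_adult function it defines."""
    namespace = {}
    exec(compile(tree, "<sketch>", "exec"), namespace)
    return namespace["is_adult"]


def weak_test(is_adult):
    is_adult(30)  # executes the code but asserts nothing


def strong_test(is_adult):
    assert is_adult(18) is True
    assert is_adult(17) is False


def survives(test, func):
    """A mutant survives when the test still passes against it."""
    try:
        test(func)
    except AssertionError:
        return False
    return True


original = load(ast.parse(SOURCE))
mutant_tree = ast.fix_missing_locations(FlipGreaterOrEqual().visit(ast.parse(SOURCE)))
mutant = load(mutant_tree)

print(survives(weak_test, mutant))    # the weak test lets the mutant survive
print(survives(strong_test, mutant))  # the strong test kills it
```

Both tests pass against the original function; only the one with meaningful assertions notices the mutation.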

🟢 Advantages: We can have more confidence that good tests are being created by the student, not just that the function/unit is being executed.

🔴 Disadvantages: Mutation testing tools add complexity to the testing process, which can impact the learning experience (remember, we want to use them in didactic projects). Additionally, the mutations offered by these tools are limited, generic, and have no 'strategy,' which can lead to a slowdown in the process.

Customized Mutation Tests

The idea behind the previous alternative is, as I mentioned, brilliant! 🌟

Customized mutation (a concept I'm probably creating now, with the help of ChatGPT) uses the same idea but with a different implementation: instead of automatically generating various random mutations in a file, we choose exactly the mutations we want using test doubles.

Picture this scenario: we ask students to create tests for the following Queue class:

```python
class Queue:
    def __init__(self):
        self.__data = []

    def __len__(self):
        return len(self.__data)

    def enqueue(self, value):
        self.__data.append(value)

    def dequeue(self):
        try:
            return self.__data.pop(0)
        except IndexError:
            raise LookupError("Queue is empty")
```

What we will do, then, is to create "broken" versions of the Queue class (with strategic mutations) and run the tests again. If the tests are well-written, they should fail with the mutations.

A possible mutated class for this case is:

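```python
from src.queue import Queue


# Notice that I'm using inheritance to reuse the methods
# of the original class and perform a mutation only
# in the 'dequeue' method.
class WrongExceptionQueue(Queue):
    def dequeue(self):
        # Delegate to the original method: inside this subclass,
        # `self.__data` would be name-mangled to
        # `_WrongExceptionQueue__data`, which does not exist.
        try:
            return super().dequeue()
        except LookupError:
            # The mutation: raise the wrong exception type.
            raise ValueError("Queue is empty")
```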

In other words, if the student didn't create a test that validates the type of exception thrown by the dequeue method, they will not be approved.
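One possible way to run a student's tests against such a mutation is to swap the class with a test double while the tests execute. The sketch below is only an illustration under stated assumptions, not the implementation we use at Trybe: `queue_module` is a hypothetical stand-in for the module the tests import from, and `detects_mutation` and both test functions are invented names.

```python
from types import SimpleNamespace
from unittest.mock import patch


class Queue:
    def __init__(self):
        self.__data = []

    def enqueue(self, value):
        self.__data.append(value)

    def dequeue(self):
        try:
            return self.__data.pop(0)
        except IndexError:
            raise LookupError("Queue is empty")


class WrongExceptionQueue(Queue):
    """Strategic mutation: dequeue raises the wrong exception type."""

    def dequeue(self):
        try:
            return super().dequeue()
        except LookupError:
            raise ValueError("Queue is empty")


# Hypothetical stand-in for the module the student's tests import from.
queue_module = SimpleNamespace(Queue=Queue)


def test_happy_path_only():
    queue = queue_module.Queue()
    queue.enqueue(1)
    assert queue.dequeue() == 1


def test_empty_queue_raises_lookup_error():
    queue = queue_module.Queue()
    try:
        queue.dequeue()
    except LookupError:
        return  # the expected exception type was raised
    raise AssertionError("dequeue() should raise on an empty queue")


def detects_mutation(test, mutated_class):
    """Return True if `test` fails once Queue is swapped for the mutation."""
    with patch.object(queue_module, "Queue", mutated_class):
        try:
            test()
        except Exception:  # any failure or error means the mutation was caught
            return True
    return False


print(detects_mutation(test_happy_path_only, WrongExceptionQueue))
print(detects_mutation(test_empty_queue_raises_lookup_error, WrongExceptionQueue))
```

The happy-path test keeps passing against `WrongExceptionQueue`, so it would not be enough for approval, while the test that checks the exception type fails as intended.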

🟢 Advantages: We continue to have confidence that good tests are being created by the student, not just that the function/unit is being executed. Moreover, we have more freedom to create complex and strategic mutations for each requirement, and we don't have to run unnecessary mutations (which don't add to learning), saving time and computational resources. As a bonus, we can provide extremely customized feedback (essential in the learning process), such as: "Did you remember to validate which exception is raised in the `dequeue` method?"

🔴 Disadvantages: Effort is required to think up and code the mutations, as they will not be generated automatically. Additionally, there is no library (or at least we didn't find one) that facilitates the configuration of this type of test.

If it doesn't exist, we can create it! 🚀

And that's what we did: we created the necessary code to apply customized mutations in the tests. 🤓

In the next article in this series, I will detail how the 1st version (we are already moving towards the 3rd) of our "tests of tests" with customized mutations works. Until then, I'd like to know: how would you implement this functionality?

See you soon! 👋
