There is perhaps nothing more satisfying than seeing the pieces of a puzzle come together. This is the case for actual puzzles, which I am pretty bad at because I always seem to lose pieces under the couch, and more metaphorical puzzles, which I am generally better at what with no pieces to lose in strange places.

The puzzle of learning is certainly one of the more complicated enigmas out there. Learning a new thing is hard because you’re trying to constantly piece together ideas and construct concepts without always necessarily knowing how those parts fit into the larger whole. It can be difficult to try to wrap your head around something new when you constantly find yourself wondering what this new thing has to do with the broader picture that you’re already familiar with. It’s a little bit like finding a random puzzle piece and then trying to find the pieces that fit around it, but not necessarily knowing how those pieces fit into the big picture.

The same is true for learning a specific topic, like computer science. Oftentimes, it can feel like you’re picking up little bit of information — a data structure here, an algorithm there — without always knowing how one piece connects to another. I tend to think that this is the reason that learning computer science is so hard: there just aren’t very many resources that can construct the picture of the field with all of these perfectly pieces fit into one another.

However, every so often, if you really stick with a topic for long enough, you’ll find that some pieces will start to come together. And as we round out this series together, it’s time for that to finally happen!

A translator we know and love

When we first started this series nearly a year ago, the very first topic that we explored was something that is often thought of as the “cornerstone” of computer science: binary. We learned that, at their very core, binary is the language that every computer speaks and understands. Our machines, at the end of the day, each run on ones and zeros.

Since then, we’ve explored different data structures, like trees, graphs, and linked lists, as well as sorting algorithms and traversal or searching algorithms. Now it’s time to finally bring it all together — or rather, bring it all back to binary.

We’ve gone around the world when it comes to core concepts in computing as well as computer science. But there’s one question that we haven’t really answered yet, even though it’s possible that we’ve been thinking about it this whole year: How on earth do we go from our code into the one’s and zero’s of our computer?

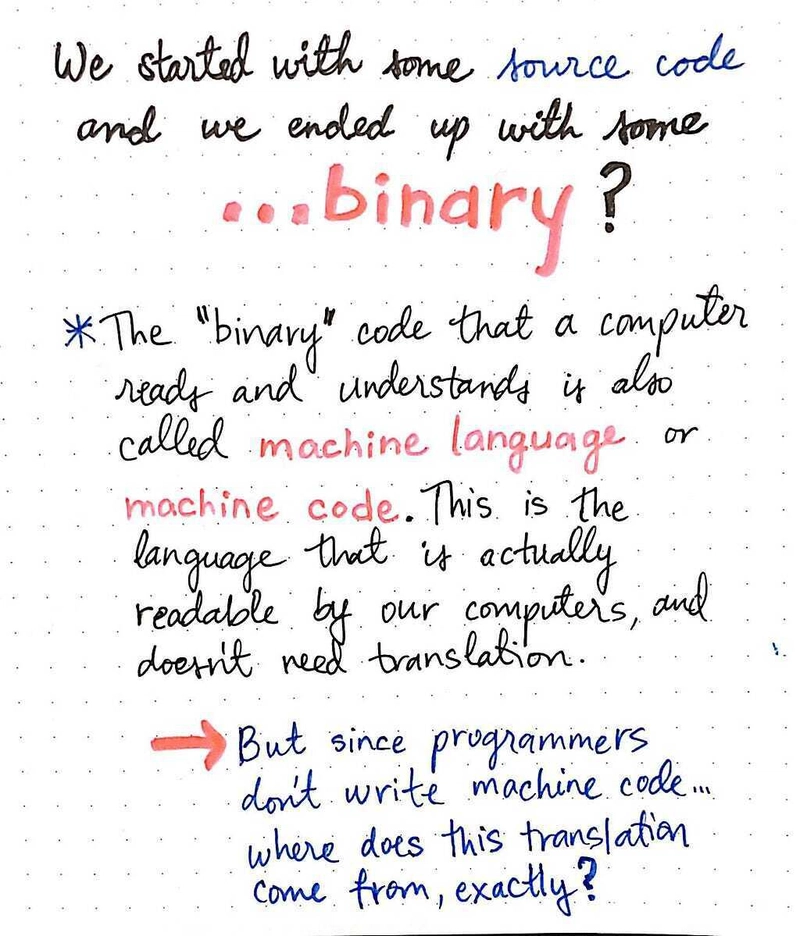

Well, before we get too far into how the code we write gets turned into binary, let’s clarify what we really mean when we use the term binary in this context. The “binary” code that a computer reads and understands is generally referred to as machine language or machine code , which is a set of instructions given to a machine and run by a its central processing unit (or CPU ).

An important thing to note is that there are different kinds of machine code, some of which are literally 0’s and 1’s, and others of which are decimals or hexadecimals (which we already know a lot about!). Regardless of which exact format a machine language is written in, it has to be fairly rudimentary because it needs to be understood by or computer. This is why machine languages are referred to as low level languages, because they need to be simplified enough to be processed by our machine’s CPU, which we already know is just a bunch of switches, internally.

We can think of low level languages as the “mother-tongues” of our computer; machine code should be directly readable by our machine, and shouldn’t ever need to be translated by them.

But how do we go from our code to a machine-friendly (machine code) version of the exact same thing? Well, the code that we write as programmers and the machine code that a computer’s processor reads are nothing more than two different types of languages. If we think about it, all we really need to do is translate between these two languages.

Now comes another problem: we have no idea how to translate between our code and machine code! Okay, just kidding — this isn’t really a problem. Because we have two helpful friends who can help us out here.

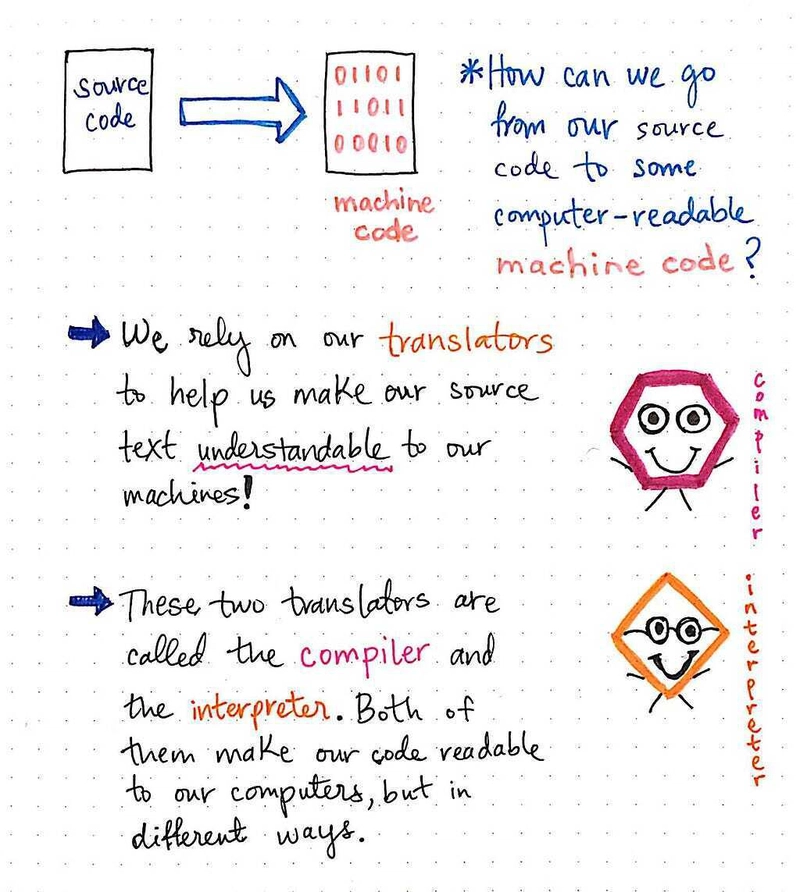

In order for us to transform our source code into some computer-readable machine code in a binary format, we’ll need to rely on translators to help us make our source text understandable to our machines.

A translator , which is also sometimes called a programming language processor, is nothing more than a program that translates a source language into a target language, while maintaining the logical structure of the code that it is translating.

We’re already a little bit familiar with one kind of translator already, even though we might not know it just yet. Previously in this series, we’ve looked at the lexical and syntax analysis phases (the front end) of the compiler, and the different data structures involved in the process.

As it turns out, the compiler is a kind of translator! There is another translator, too, whose name often gets thrown around in conjunction with the compiler’s, called the interpreter. Both the compiler and the interpreter make or code readable to our computers, but in very different ways.

But let’s first start with what we already know, first: the compiler.

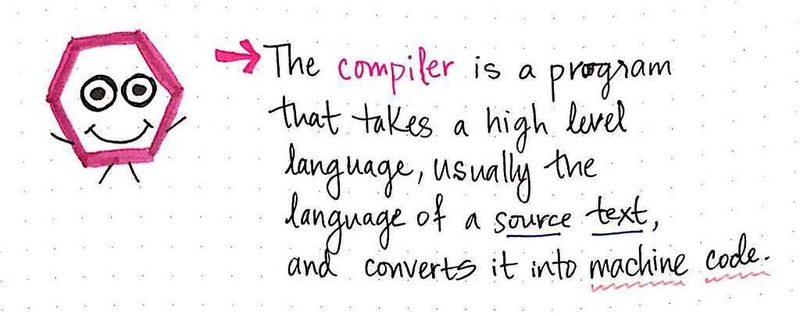

The compiler is nothing more than a program that takes a high level language — the language that we write our code in — and converts it into machine code. The compiler has many moving parts to it (or rather, within it), including, potentially, a scanner, a lexer/tokenizer, and a parser. But at the end of the day, even with its complexity, it’s just a program that turns our code into machine-readable code.

However, even though its job might seem simple when we put it like this, the way that a compiler does this important task is worth highlighting.

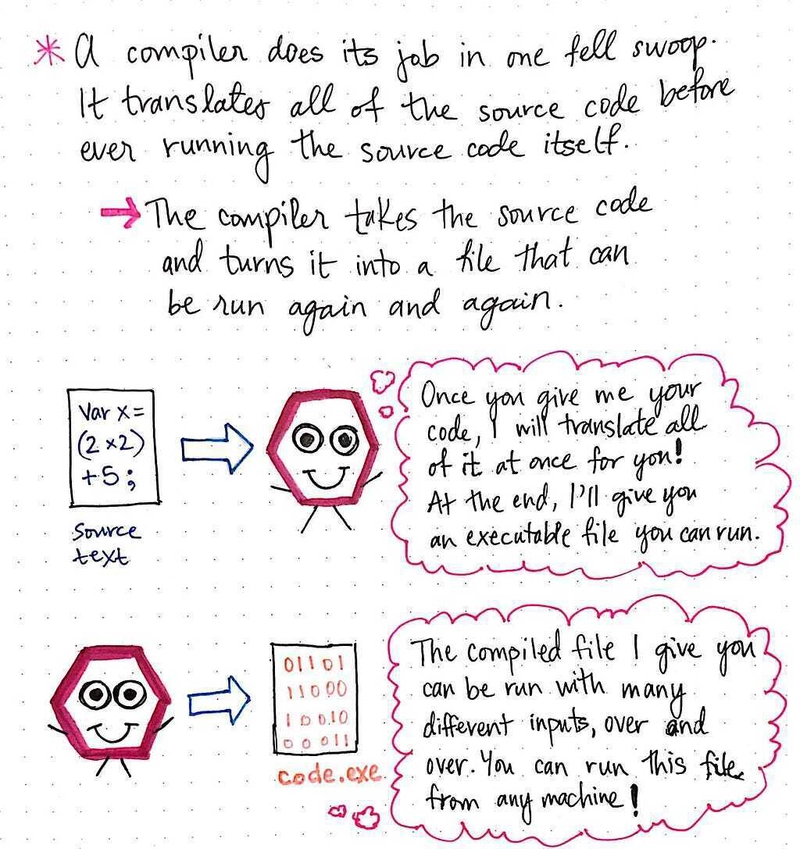

For most cases, the compiler does the job of translating our code into machine code in one fell swoop. In other words, the compiler translates all of a programmer’s source code before the source code can ever be executed or run. It takes our source code and converts it into a single file that is written in machine code. It is that very machine code file — called an executable file and often ending with an .exe extension — which actually allows us to run the original code that we wrote.

The most important idiosyncrasy of a compiler is the fact that it takes a source text, and translates it into machine code binary in “one shot”. It returns the translated, compiled file to the programmer, who will be able to run their code via the outputted executable.

The executable file returned by the compiler can be run again and again once it has been translated; the compiler doesn’t need to be around for any subsequent reruns!

Once the compiler translates all the source code into machine code, the compiler’s job is done. The programmer can run the compiled code as many times as they want, with whichever inputs that they’d like to use. They can also share this compiled code with others, without ever having to share their original source code.

The concept behind this specific translator— as well as the term “compiler” itself — was coined by the illustrious Grace Hopper , back in 1952, in the most interesting of circumstances.

At the time, Hopper had been working at the Eckert-Mauchly Computer Corporation, helping develop the UNIVAC I computer as a mathematician on the team. Effectively, she was working on turning mathematical code into its own language (A-0 System language).

However, she had bigger ideas. She wanted to write an entirely new programming language that would words in English, rather than the limited number of mathematical symbols. However, when she shared this idea with her colleages. They shot her down, and told her that her idea wasn’t possible because “computers didn’t understand English”. But she was not deterred.

After three years of working on this team, Hopper had her very first working compiler. But no one believed that she had actually done it! In her biography, Grace Hopper : Navy Admiral and Computer Pioneer, she explains:

I had a running compiler and nobody would touch it. … they carefully told me, computers could only do arithmetic; they could not do programs.

It’s a good thing that Grace Hopper didn’t listen to any of those nonbelievers, because she ended up continuing her work and developing one of the earliest high level programming languages, called COBOL. She also won the Presidential Medal of Freedom, among many, many, many other accomplishments.

Indeed, if she had listened to all of those pepole, she would have likely never taken computing to an entirely new level with her early work in constructing and designing the very first compiler. Grace Hopper’s work on the first compiler laid the groundwork for the another translator that came into existence a few years later: the interpreter.

Step-by-step translation

In 1958, a few years after Grace Hopper’s work on the compiler, some students at MIT were in a lab, working with an IBM 704 computer, a fairly new technology that had been introduced just four years earlier. One of these students, named Steve Russell, was working on a project called the MIT Artificial Intelligence Project, with his professor, John McCarthy.

Russell was working with the Lisp programming language at the time, and he had read a paper written by his professor on the subject. He came up with the idea to transform the eval function in Lisp into machine code, which set him on the path to creating the first Lisp interpreter, which was used to evaluate expressions in the language — the equivalent of running a program in Lisp at the time.

Indeed, Hopper’s work directly impacted Russell’s invention. The first version of the Lisp interpreter was compiled by hand. In an interview with the Computer History Museum in 2008, Russell explains how compilers played into his work at MIT:

And my remembrance is that John [McCarthy] sort of came in one day, in late September or October or something like, that with the universal M-expression, that is the Lisp interpreter written out as an M-expression, and we sort of looked at it and said, “Oh yeah, that’ll work,” and I looked at it and said, “Oh, that’s just a matter of doing more hand compiling, like I’ve been doing. I can do that.”> …I got something working before Christmas, which was a useable interpreter; no garbage collector, but there weren’t any big programs yet.

Russell and his colleagues would go on to hand-compile the first two version of the Lisp interpreter. Today, most programmers wouldn’t even dream of hand-compiling any of their code! Indeed, many of us interact with an interpreter and use it multiple times in application development — we just might not always be aware of it.

So, what exactly is an interpreter? It’s about time for an official definition!

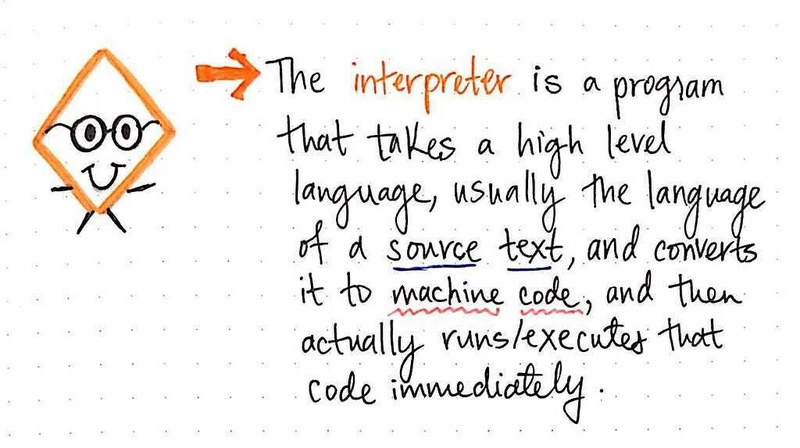

An interpreter is also a translator, just like a compiler, in that it takes a high level language (our source text) and converts it into machine code. However, it does something slightly different: it actually runs and executes the code that it translates immediately (inline) as it translates.

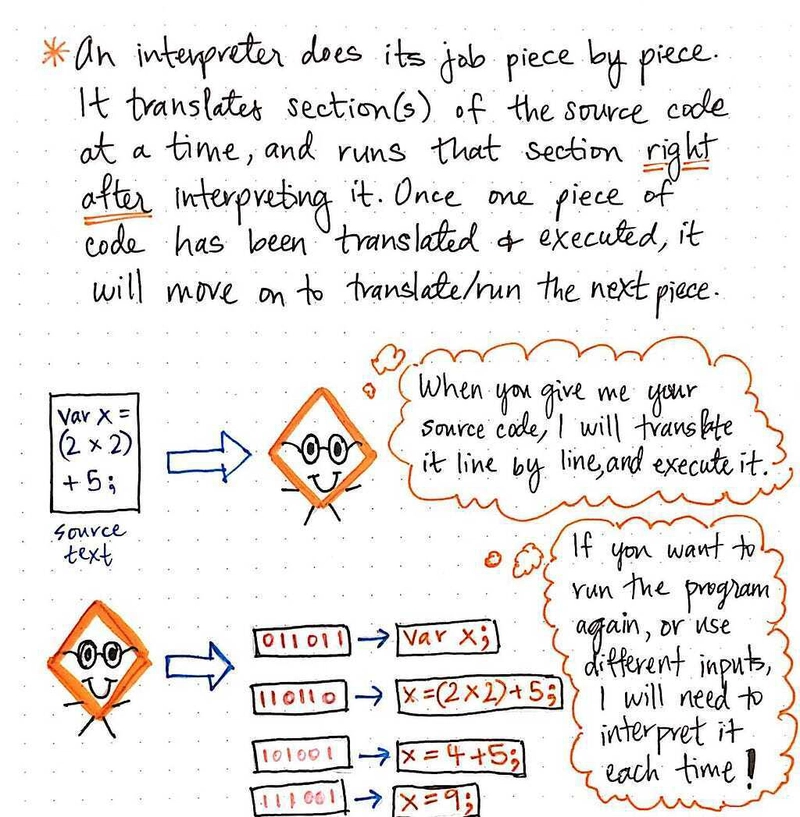

We can think of an interpreter as the more “methodical” translator in the family. Rather than doing the work of translating our code into machine language in one single shot, it’s far more systematic about how it works.

An interpreter does its job piece by piece. It will translate a section of our source text at a time, rather than translating it all at once.

Unlike a compiler, it doesn’t translate everything and hand over a file to us, the programmers, to execute. Instead, an interpreter will translate a single line/section of code at a time. Once it has finished translating one line, it will take the machine code version of it, and run it immediately.

Another way of thinking about it is that once a piece of code has been translated by the interpreter, only then can it be run. This seems fairly intuitive at first thought, because of course how can an interpreter run a line of code without knowing what it means in binary/machine code? But, if we think about it more deeply, there are other implications. Only once the interpreter finishes running one line of code successfully will it actually move onto the next line. We can imagine how this may or may not be a good thing, depending on what we’re trying to do.

For example, imagine we want to run our program with 10 different inputs. Our interpreter would have to run our program 10 times, interpreting it line by line, for each of our inputs. However, if we made a fatal flaw in our code, our interpreter could catch it for us the moment that it happens because it has literally just tried to run our (broken) line of code after it translated it!

By now, we might start to be able to see how both the interpreter and the compiler have tradeoffs. We’ve seen how different tools have pros and cons to them time and again in this series, and it’s probably a hallmark trait within the world of computer science. Keeping with this theme, let’s weigh the fundamental differences between interpretation and compilation as translation techniques.

Two translators, both alike in dignity

The differences between interpretation and compilation and their respective translators tells us a lot about how these two programs are implemented. If we compare these two methodologies, we’ll start to see how they both accomplish the same task, but in fundamentally very different ways.

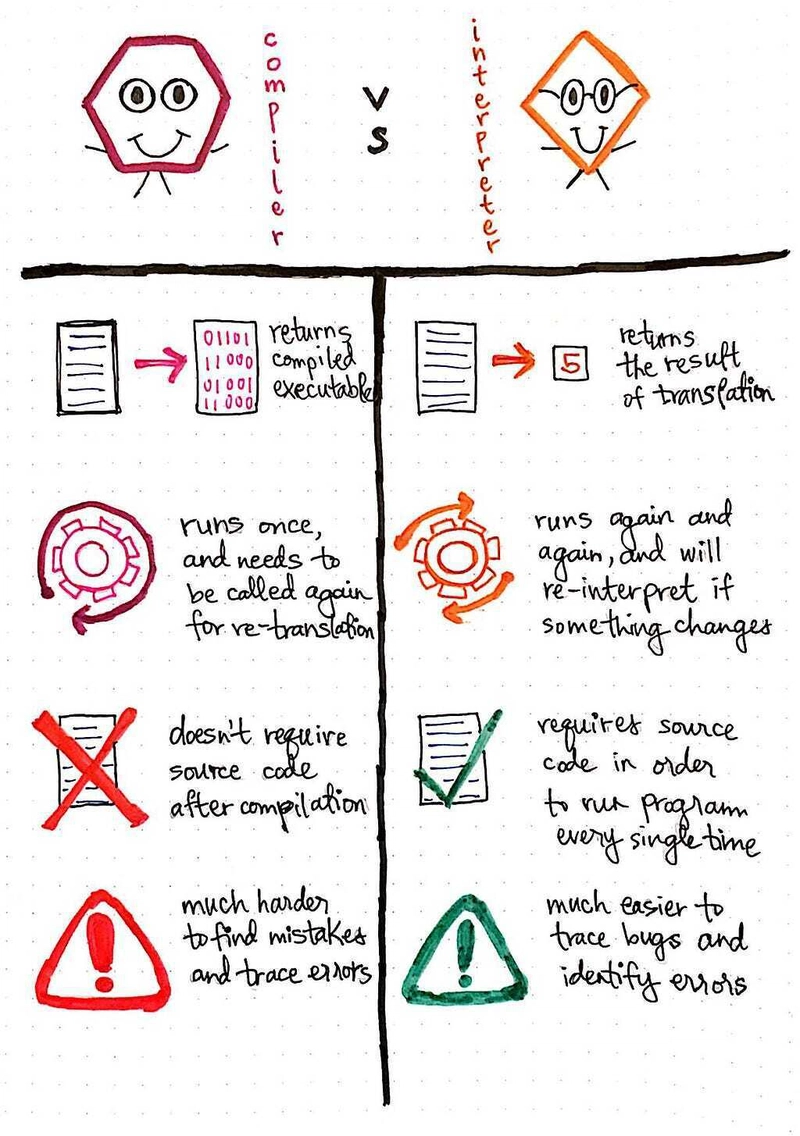

The illustration below illustrates this in a more obvious way.

- Returned result. While a compiler will take some source code and return a compiled, executable file, an interpreter will actually translate and then execute the source code itself, returning the result of the translation directly.

- Run frequency. A compiler will run only once, and will need to be called again to re-translate source code if it changes. On the other hand, an interpreter will run again and reinterpret source code when it changes; the interpreter “sticks around” to continually translate.

- Flexibility. The compiler translates the source code in one shot, which means that the source code isn’t required again after compilation. However, the interpreter does require the source code in order to translate and execute the program, every single time that it is ever run.

- Debugging. The compiler generally makes it more difficult to determine where mistakes occur in the source code, because the entire program has already been translated, and the error’s location might not be easily identifyable in the machine code. However, identifying errors is easier with an interpreter because it can maintain the location of an error or bug, and surface that issue to the programmer who wrote the code.

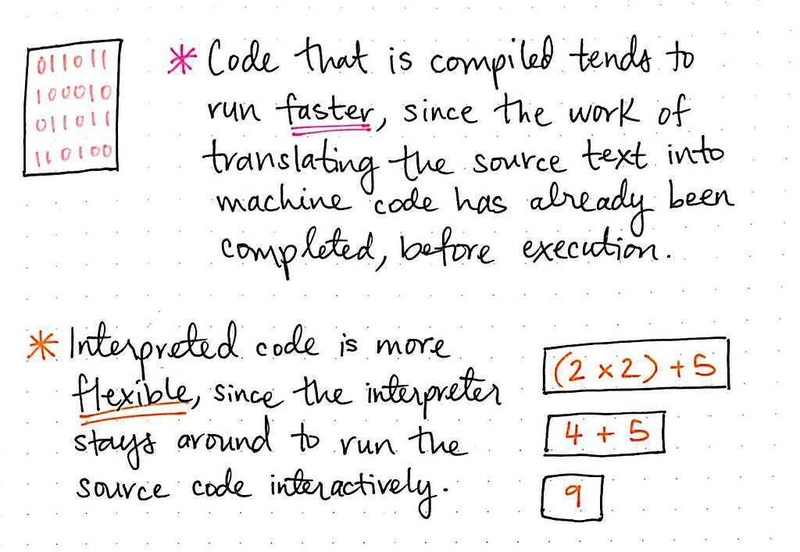

Because of these major differences, compiled code — code that is translated and run using the compilation process — tends to run a bit faster than interpreted code. This is because the work of translating the source text into machine code has already been done, before the code is ever executed.

On the flip side, interpreted code is far more flexible , since the interpreter stays around for the course of the translation “process” to read and process our code.

Flexibility in this context means being able to change our code and being able to immediately run it afterwards. There is no need to recompile our code if we make a change; the interpreter will just pick up on that chance, and reinterpret the code, making it a much more interactive form of translation. Using an interpreter makes it much easier to test out small (or big!) changes in a source file.

However, when it comes to interpretation, we actually need the source code in order to be able to do anything. It definitely is easier to test out changes and debug issues, but the source text has to be accessible, first and foremost. With compilation, this isn’t the case. Once we have compiled our code into an executable file, we don’t ever have to worry about the source code again — unless, of course, we need to recompile.

This can often make a compiler the “safer” choice, because our source code is not exposed; rather, the only output is the executable file itself, which is just 1’s and 0’s, and doesn’t ever show anyone how we wrote our code or what it actually ever said, since it’s all machine language at that point.

Compilation and interpretation play into our roles not just as developers of software, but also as consumers of it.

For example, whenever we download a file, or run a program from an .exe file, we’re relying on the creators of some software to have compiled an executable for us.

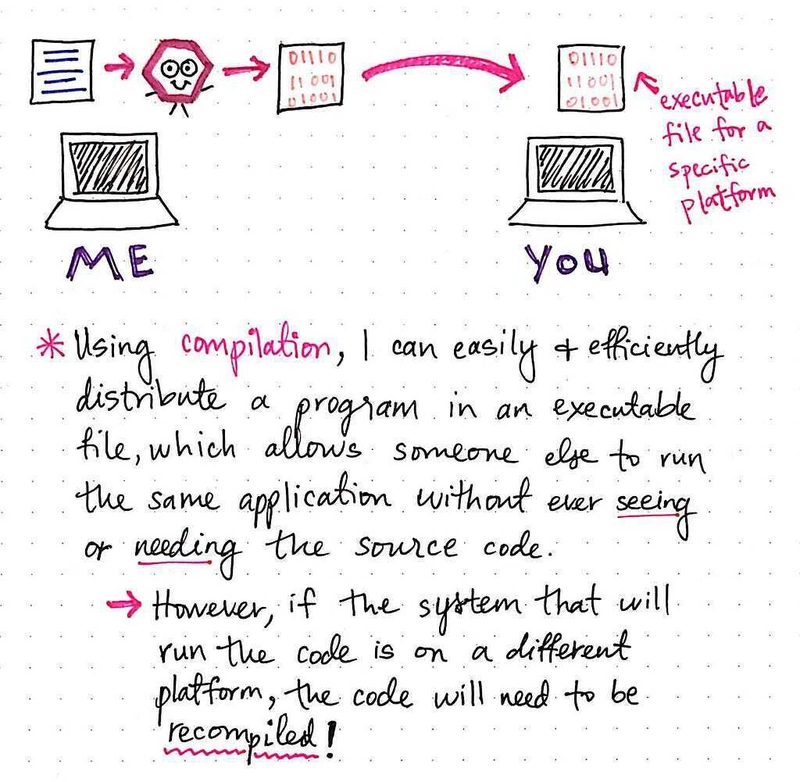

Using compilation, we can easily distribute a program as an executable file. This will allow someone else to run the exact same code as us, but without ever actually showing them or giving them our literal code itself. A consumer of a compiled file never needs to see a source text, because they can simply be given an executable file to run on their own, local machine.

However, the issue with distributing a compiled file is creating files that are compatible on different platforms (like a Windows operating system versus OS X). As programmers, it’s our job to make sure our compiled executables run successfully on various platforms, and sometimes, this means recompiling our code!

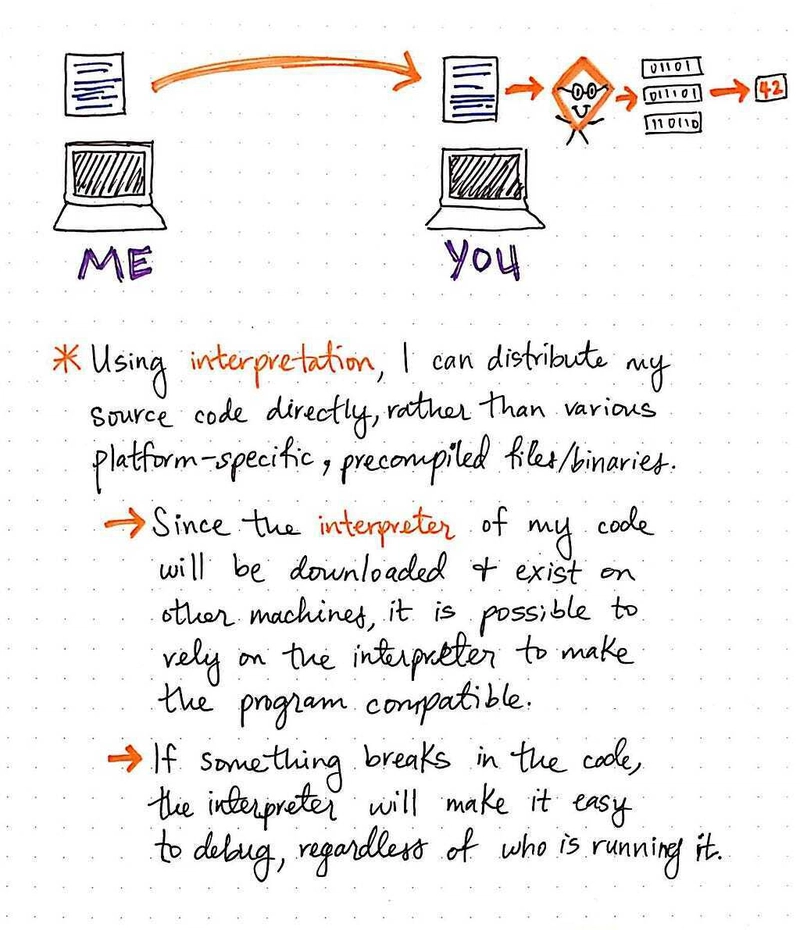

On the other hand, when we use interpretation, we can distribute our source code directly, rather than worrying about platform-specific executables, or thinking about how we’ll go about compiling binaries for everyone to use.

However, in this case, we’ll need the consumer of our program to download an interpreter — which often actually just comes along with a language — and be sure that the interpreter exists on their machine(s). Once they have the interpreter, they can see our original source code, take that code, and then rely on their own version of the interpreter to run it locally.

In this scenario, we rely on the interpreter to make the compatible on all platforms, and we, as programmers and consumers, don’t need to think about it. Also, if something goes wrong (either something in the source that we wrote, or something relating to their own platform), the consumer of our code can figure out what the issue is much more easily than with a compiled file. The interpreter will make it easy to debug any problems, regardless of who is running our code.

But, regardless of whether we choose compilation or interpretation, the end goal is the same: to speak a language that our computers can understand! As it turns out, at the end of the day, it really just all is binary.

Resources

Because both interpreters and compilers have been around for many years now, there are a good amount of resources out there on both of these types of translators. From an implementation perspective, there are many different approaches to writing a compiler versus an interpreter. But, if you’re looking to simply learn more or dig a little deeper, these resources are a good place to start.

- Interpreters and Compilers (Bits and Bytes, Episode 6), Bits and Bytes TVO

- The difference between a compiler and an interpreter, Gabriele Tomassetti

- Compilation vs Interpretation, BogeysDevTips

- Machine Code and High level Languages Using Interpreters and Compilers, Banchory Academy Computing Science

- The Programming Process, Professor Vern Takebayashi

- Why was the first compiler written before the first interpreter?, StackOverflow

Top comments (8)

This is such a random question (and I lovelovelove these posts) but I have been wondering about this ever since I read it: how'd you decide to make the compiler a hexagon and the interpreter a square?

I already used a rectangle, square, and circle for the scanner, tokenizer, and parser in a compiler...and I was running out of shapes 😛

Damn this was a brilliant read. And I'd just been thinking about this subject, I even made a thread about it:

dev.to/ben/explain-interpreted-pro...

Anything a computer "doesn't do" has to be thought of as a pretty temporary state of things me thinks.

Yay!! I'm so glad you liked it. 💛

Nice article.

This lays out the specturm of compilers and interpreters, which are kind of the two ideal forms. For people who wish to get into details, realize it is a kind of spectrum that modern languages use.

For example, compilation is done over many phases, producing intermediate results. Any of these could in theory be run on a virtual machine, or interpreter, or they could be compiled to machine code as needed.

It's even possible to interpret machine code, as Intel did with some of their Intel based Android tablets (since the machine code was expecting an Arm processor). The Valgrind toolset is also an interpreter, and really amazing profiler, for machine code.

What's important, and Vaidehi does a good job explaining this, are the different possibilities. Understand what transformations code goes through, and how it is run.

Amazing reading, thanks for this! =D

Awesome article ! I wanted to discover more about compilation and interpretation and this just answered so much question I asked myself, thanks !

Can someone explain me if we take an example of nodejs(an interpreting lang). A simple REST API code , each time a request on a route is made, the whole API code is interpreted and executed using the values passed on the route payload,headers,etc ???