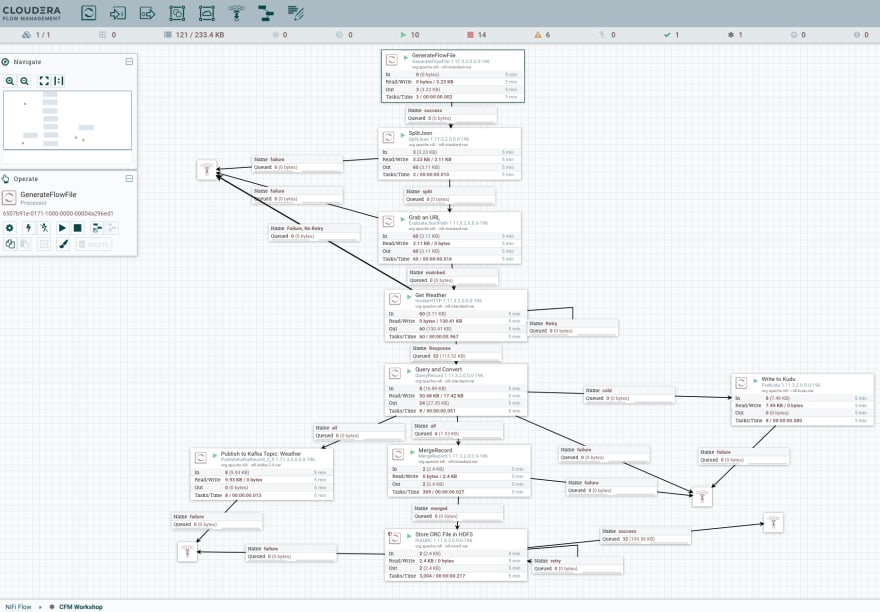

Use NiFi to call REST API, transform, route and store the data

Pick any REST API of your choice; I have walked through this one, which grabs reports from a number of weather stations. Weather or not we have good weather, we can query it anyway.

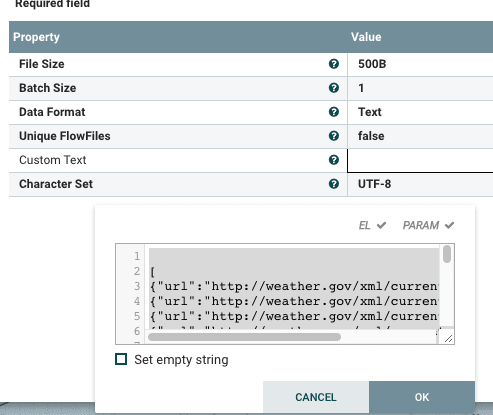

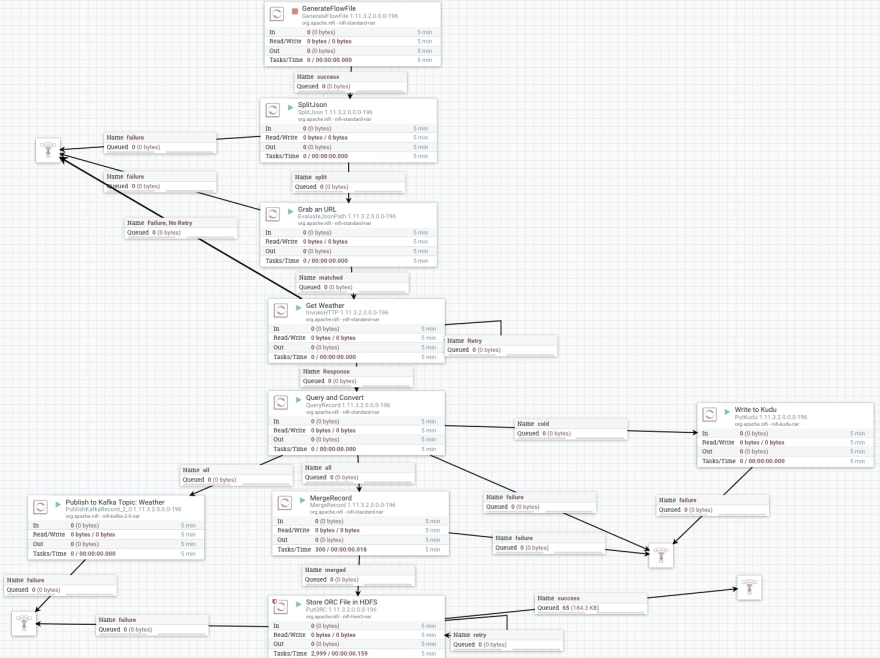

We are going to build a GenerateFlowFile to feed our REST calls.

[

{"url":"http://weather.gov/xml/current\_obs/CWAV.xml"},

{"url":"http://weather.gov/xml/current\_obs/KTTN.xml"},

{"url":"http://weather.gov/xml/current\_obs/KEWR.xml"},

{"url":"http://weather.gov/xml/current\_obs/KEWR.xml"},

{"url":"http://weather.gov/xml/current\_obs/CWDK.xml"},

{"url":"http://weather.gov/xml/current\_obs/CWDZ.xml"},

{"url":"http://weather.gov/xml/current\_obs/CWFJ.xml"},

{"url":"http://weather.gov/xml/current\_obs/PAEC.xml"},

{"url":"http://weather.gov/xml/current\_obs/PAYA.xml"},

{"url":"http://weather.gov/xml/current\_obs/PARY.xml"},

{"url":"http://weather.gov/xml/current\_obs/K1R7.xml"},

{"url":"http://weather.gov/xml/current\_obs/KFST.xml"},

{"url":"http://weather.gov/xml/current\_obs/KSSF.xml"},

{"url":"http://weather.gov/xml/current\_obs/KTFP.xml"},

{"url":"http://weather.gov/xml/current\_obs/CYXY.xml"},

{"url":"http://weather.gov/xml/current\_obs/KJFK.xml"},

{"url":"http://weather.gov/xml/current\_obs/KISP.xml"},

{"url":"http://weather.gov/xml/current\_obs/KLGA.xml"},

{"url":"http://weather.gov/xml/current\_obs/KNYC.xml"},

{"url":"http://weather.gov/xml/current\_obs/KJRB.xml"}

]

So we are using ${url}, which will be one of these. Feel free to pick your favorite airports or locations near you; the full station index is at https://w1.weather.gov/xml/current_obs/index.xml
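If you would rather not type the GenerateFlowFile text by hand, a small script can build it. This is a minimal Python sketch, assuming the same URL pattern as the list above; the `build_payload` helper and the station IDs passed to it are only illustrative.

```python
import json

# URL pattern copied from the list above.
BASE_URL = "http://weather.gov/xml/current_obs/{station}.xml"

def build_payload(station_ids):
    """Return a JSON array of {"url": ...} objects, one per unique station."""
    unique_ids = list(dict.fromkeys(station_ids))  # drop repeats, keep order
    rows = [{"url": BASE_URL.format(station=s)} for s in unique_ids]
    return json.dumps(rows, indent=1)

if __name__ == "__main__":
    # Example IDs only; swap in the stations you care about.
    print(build_payload(["KTTN", "KEWR", "KJFK", "KEWR"]))
```

Paste the printed array into the GenerateFlowFile custom text property.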

If you wish to choose your own data adventure, you can pick one of these others. You will have to build your own table if you wish to store the data. They return CSV, JSON or XML; since we have record processors, we don't care which. Just know which one you pick.

https://min-api.cryptocompare.com/data/price?fsym=ETH&tsyms=BTC,USD,EUR

https://data.cdc.gov/api/views/cjae-szjv/rows.json?accessType=DOWNLOAD

https://api.weather.gov/stations/KTTN/observations/current

https://www1.nyc.gov/assets/tlc/downloads/csv/data_reports_monthly_indicators.csv

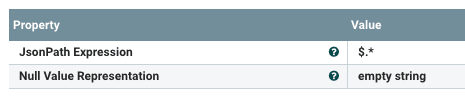

Then we will use SplitJson to split the JSON array into single records, one per flow file.

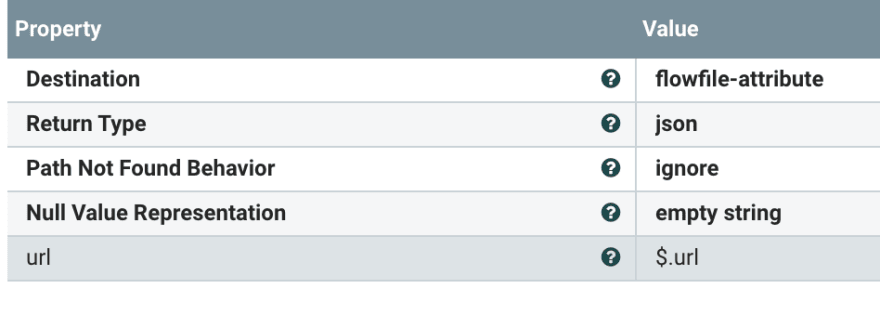

Then use EvaluateJsonPath to extract the URL into the url attribute.

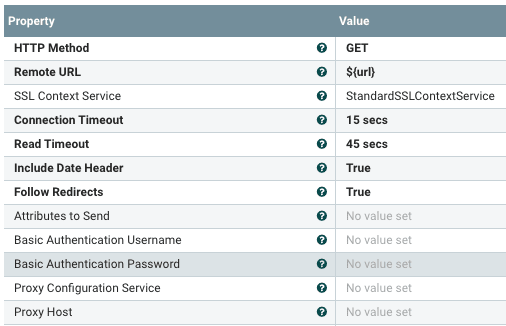
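To make the split and extract steps concrete, here is a rough Python sketch of what they do to the data: one array becomes one flow file per element, and the `url` field is lifted into an attribute. The dictionaries standing in for flow files and the `split_json` and `evaluate_url` helpers are illustrative assumptions, not NiFi APIs.

```python
import json

def split_json(content):
    """Mimic splitting a JSON array into one JSON document per element."""
    return [json.dumps(element) for element in json.loads(content)]

def evaluate_url(content):
    """Mimic copying the top-level url field into a flow file attribute."""
    return {"content": content, "attributes": {"url": json.loads(content)["url"]}}

array_text = json.dumps([
    {"url": "http://weather.gov/xml/current_obs/KTTN.xml"},
    {"url": "http://weather.gov/xml/current_obs/KJFK.xml"},
])

flow_files = [evaluate_url(part) for part in split_json(array_text)]
for flow_file in flow_files:
    print(flow_file["attributes"]["url"])
```

Each resulting attribute is what ${url} resolves to in the next step.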

Now we are going to call those REST URLs with InvokeHTTP.

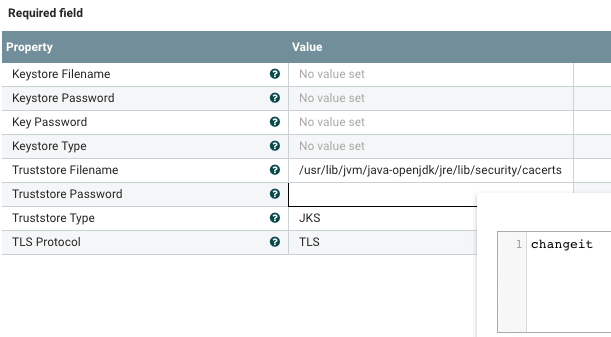

You will need to create a StandardSSLContextService controller service.

The defaults below are for the stock JDK truststore on a Mac or on some CentOS 7 installs. You may have set a real password; if so, you are awesome. If you don't know it, that's rough, but you can build a new truststore.

For more cloud ingest fun, see https://docs.cloudera.com/cdf-datahub/7.1.0/howto-data-ingest.html

SSL Defaults (In CDP Datahub, one is built for you automagically, thanks Michael).

Truststore filename: /usr/lib/jvm/java-openjdk/jre/lib/security/cacerts

Truststore password: changeit

Truststore type: JKS

TLS Protocol: TLS

StandardSSLContextService for Your GET ${url}

We can tweak these defaults.

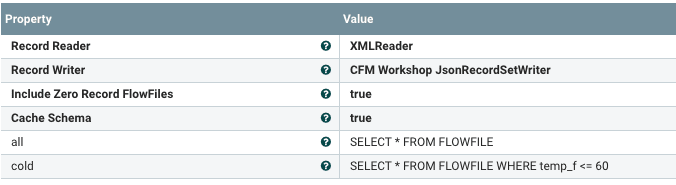
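Outside of NiFi, the same call looks roughly like the Python sketch below, which can be handy for checking a URL before wiring it into the flow. It assumes the system trust store plays the role of the truststore above; the `build_get` and `fetch` helpers and the User-Agent value are made up for illustration.

```python
import ssl
import urllib.request

def build_get(url):
    """Build a GET request plus a TLS context backed by the system trust store."""
    # create_default_context() loads the platform CA certificates and enables
    # certificate and hostname verification.
    context = ssl.create_default_context()
    # Placeholder User-Agent; some services reject requests that lack one.
    request = urllib.request.Request(
        url, headers={"User-Agent": "nifi-weather-demo"}, method="GET"
    )
    return request, context

def fetch(url, timeout=30):
    """Perform the call and return the body as text (needs network access)."""
    request, context = build_get(url)
    with urllib.request.urlopen(request, timeout=timeout, context=context) as response:
        return response.read().decode("utf-8")
```

Calling `fetch("https://w1.weather.gov/xml/current_obs/KTTN.xml")` should return the XML report for that station, provided the host is reachable from your machine.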

Then we are going to run a query to convert these records and route them based on our queries.

The example query on the current NOAA weather observations looks for temperatures at or below 60 degrees Fahrenheit. You can make a query with any of the fields in the WHERE clause. Give it a try!

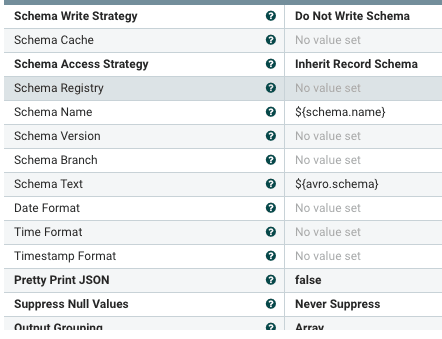

You will need to set the Record Writer and Record Reader:

Record Reader: XML

Record Writer: JSON

Query for the cold path:

SELECT \* FROM FLOWFILE

WHERE temp\_f <= 60

Query for the 'all' path:

SELECT \* FROM FLOWFILE
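The convert-and-route step can be pictured with the short Python sketch below: XML goes in, records are filtered, JSON comes out. The sample observations are hypothetical and heavily trimmed (real reports carry many more fields), and `to_record` is just a stand-in for the XML reader.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical, trimmed observations for illustration only.
SAMPLES = [
    "<current_observation><station_id>KTTN</station_id><temp_f>54.0</temp_f></current_observation>",
    "<current_observation><station_id>KSSF</station_id><temp_f>81.0</temp_f></current_observation>",
]

def to_record(xml_text):
    """Flatten the child elements of one observation into a dict."""
    record = {child.tag: child.text for child in ET.fromstring(xml_text)}
    record["temp_f"] = float(record["temp_f"])  # numeric so it can be compared
    return record

records = [to_record(sample) for sample in SAMPLES]
all_path = records                                      # SELECT * FROM FLOWFILE
cold_path = [r for r in records if r["temp_f"] <= 60]   # ... WHERE temp_f <= 60

print(json.dumps(cold_path))  # stand-in for the JSON writer
```

Every record lands on the 'all' path, while only the records matching the WHERE clause land on the cold path.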

Now we are splitting into three concurrent paths. This shows the power of Apache NiFi. We will write to Kudu, HDFS and Kafka.

For the results of our cold path (temp_f <= 60), we will write to a Kudu table.

Kudu Masters: edge2ai-1.dim.local:7051

Table Name: impala::default.weatherkudu

Record Reader: Infer Json Tree Reader

Kudu Operation Type: UPSERT

Before you run this, go to Hue and build the table.

CREATE TABLE weatherkudu

(`location` STRING,`observation\_time` STRING, `credit` STRING, `credit\_url` STRING, `image` STRING, `suggested\_pickup` STRING, `suggested\_pickup\_period` BIGINT,

`station\_id` STRING, `latitude` DOUBLE, `longitude` DOUBLE, `observation\_time\_rfc822` STRING, `weather` STRING, `temperature\_string` STRING,

`temp\_f` DOUBLE, `temp\_c` DOUBLE, `relative\_humidity` BIGINT, `wind\_string` STRING, `wind\_dir` STRING, `wind\_degrees` BIGINT, `wind\_mph` DOUBLE, `wind\_gust\_mph` DOUBLE, `wind\_kt` BIGINT,

`wind\_gust\_kt` BIGINT, `pressure\_string` STRING, `pressure\_mb` DOUBLE, `pressure\_in` DOUBLE, `dewpoint\_string` STRING, `dewpoint\_f` DOUBLE, `dewpoint\_c` DOUBLE, `windchill\_string` STRING,

`windchill\_f` BIGINT, `windchill\_c` BIGINT, `visibility\_mi` DOUBLE, `icon\_url\_base` STRING, `two\_day\_history\_url` STRING, `icon\_url\_name` STRING, `ob\_url` STRING, `disclaimer\_url` STRING,

`copyright\_url` STRING, `privacy\_policy\_url` STRING,

PRIMARY KEY (`location`, `observation\_time`)

)

PARTITION BY HASH PARTITIONS 4

STORED AS KUDU

TBLPROPERTIES ('kudu.num\_tablet\_replicas' = '1');

Let it run, then query it. The Kudu table is queried via Impala; try it in Hue.

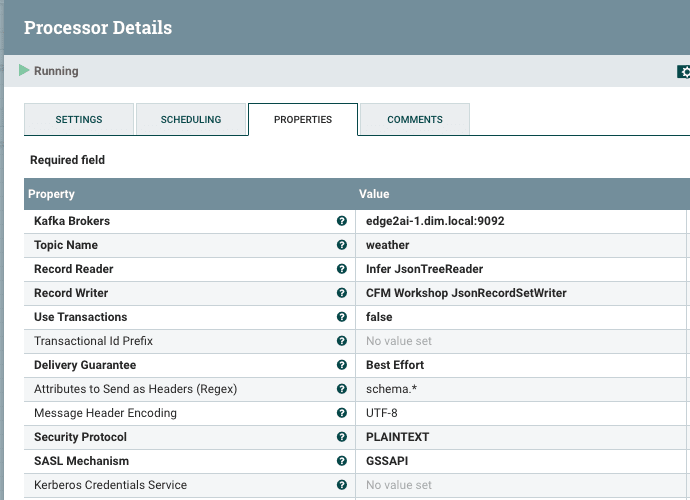
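The UPSERT operation type matters because the same observation is often fetched more than once. The Python sketch below models that behavior with a plain dictionary keyed on the same columns as the table's PRIMARY KEY. It only illustrates the semantics and is not how Kudu stores data; the rows are made up.

```python
def upsert(table, record):
    """Insert the record, or overwrite the row that shares its primary key."""
    key = (record["location"], record["observation_time"])  # PRIMARY KEY columns
    table[key] = record
    return table

table = {}
# Hypothetical rows: the second repeats the key of the first, the third is new.
upsert(table, {"location": "Station A", "observation_time": "9:53 am", "temp_f": 58.0})
upsert(table, {"location": "Station A", "observation_time": "9:53 am", "temp_f": 57.0})
upsert(table, {"location": "Station A", "observation_time": "10:53 am", "temp_f": 59.0})

print(len(table))  # two rows survive; the repeated key was overwritten
```

A plain insert would reject the repeated key instead, so rerunning the flow against unchanged reports would produce errors rather than quietly refreshing rows.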

The second fork is to Kafka; this will be for the 'all' path.

Kafka Brokers: edge2ai-1.dim.local:9092

Topic: weather

Reader & Writer: reuse the JSON ones

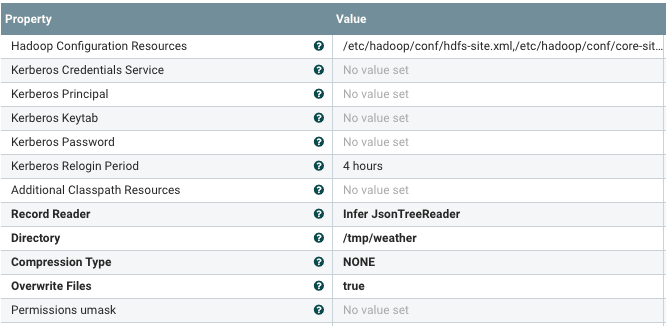

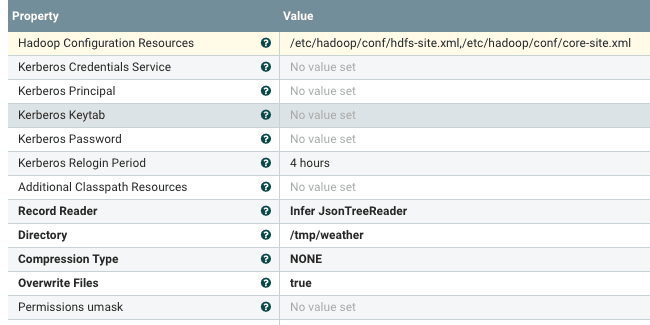

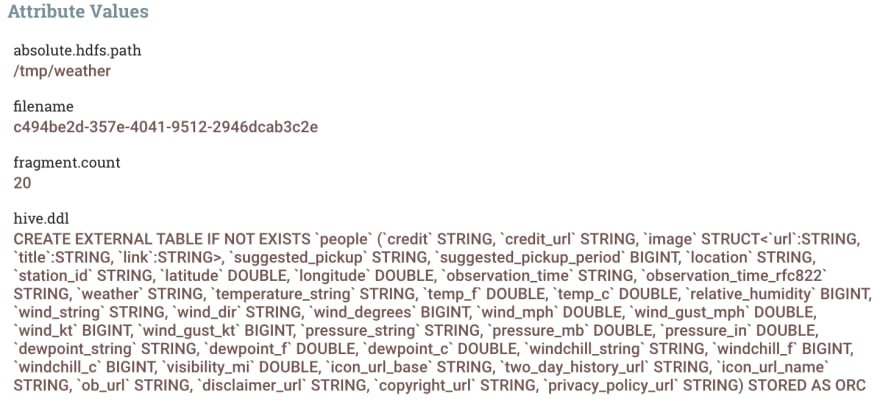

The third and final fork is to HDFS (which could be on top of S3 or Blob Storage) as Apache ORC files. This will also autogenerate the DDL for an external Hive table as an attribute; check your provenance after running.

Use JSON in and out for the record readers/writers; you can adjust the time and size of your batch or use the defaults.

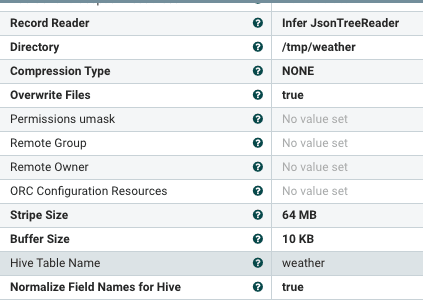

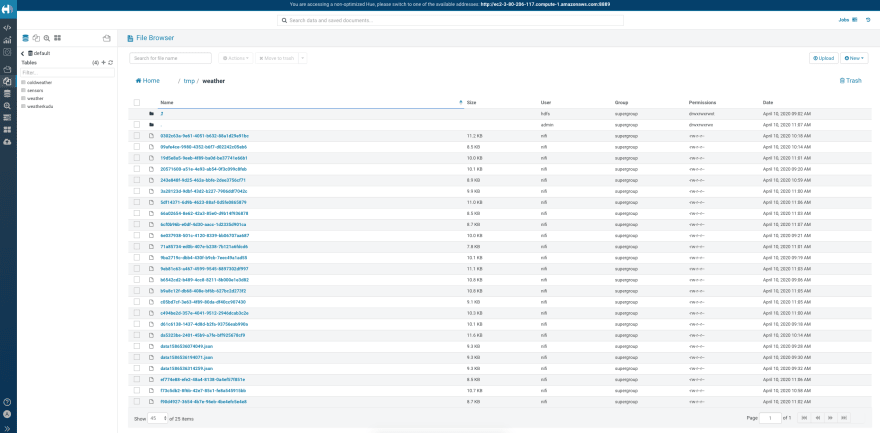

Hadoop Config: /etc/hadoop/conf/hdfs-site.xml,/etc/hadoop/conf/core-site.xml

Record Reader: Infer Json

Directory: /tmp/weather

Table Name: weather

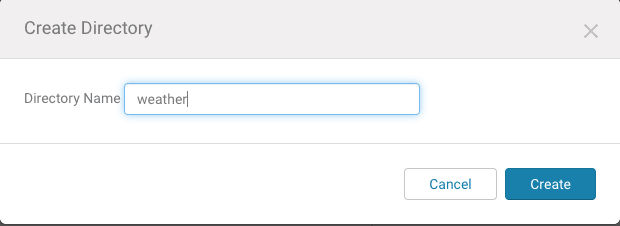

Before we run, build the /tmp/weather directory in HDFS and give it 777 permissions. We can do this with Apache Hue.
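If you prefer a script to clicking through Hue, the directory can also be prepared from a machine with a Hadoop client. This is a minimal Python sketch, assuming the `hdfs` command is on the PATH and your user may create directories under /tmp; the helper names are illustrative.

```python
import subprocess

def hdfs_commands(directory):
    """Return the two hdfs CLI calls: create the directory, then open it up."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", directory],
        ["hdfs", "dfs", "-chmod", "777", directory],
    ]

def prepare_directory(directory):
    """Run the commands in order, raising if either one fails."""
    for command in hdfs_commands(directory):
        subprocess.run(command, check=True)
```

Calling `prepare_directory("/tmp/weather")` runs both commands. Wide-open 777 permissions are fine for a demo, but are probably too loose for a shared cluster.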

Once we run we can get the table DDL and location:

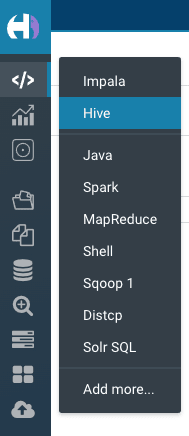

Go to Hue to create your table.

CREATE EXTERNAL TABLE IF NOT EXISTS `weather`

(`credit` STRING, `credit\_url` STRING, `image` STRUCT<`url`:STRING, `title`:STRING, `link`:STRING>, `suggested\_pickup` STRING, `suggested\_pickup\_period` BIGINT,

`location` STRING, `station\_id` STRING, `latitude` DOUBLE, `longitude` DOUBLE, `observation\_time` STRING, `observation\_time\_rfc822` STRING, `weather` STRING, `temperature\_string` STRING,

`temp\_f` DOUBLE, `temp\_c` DOUBLE, `relative\_humidity` BIGINT, `wind\_string` STRING, `wind\_dir` STRING, `wind\_degrees` BIGINT, `wind\_mph` DOUBLE, `wind\_gust\_mph` DOUBLE, `wind\_kt` BIGINT,

`wind\_gust\_kt` BIGINT, `pressure\_string` STRING, `pressure\_mb` DOUBLE, `pressure\_in` DOUBLE, `dewpoint\_string` STRING, `dewpoint\_f` DOUBLE, `dewpoint\_c` DOUBLE, `windchill\_string` STRING,

`windchill\_f` BIGINT, `windchill\_c` BIGINT, `visibility\_mi` DOUBLE, `icon\_url\_base` STRING, `two\_day\_history\_url` STRING, `icon\_url\_name` STRING, `ob\_url` STRING, `disclaimer\_url` STRING,

`copyright\_url` STRING, `privacy\_policy\_url` STRING)

STORED AS ORC

LOCATION '/tmp/weather'
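To see why this DDL can be generated for you, note that it is fully determined by the record schema, the table name and the directory. The Python sketch below renders a statement of the same shape from a list of column names and types. It is not NiFi's implementation, and the three columns are a small subset picked for illustration.

```python
def external_table_ddl(table, columns, location):
    """Render a CREATE EXTERNAL TABLE statement for ORC files from (name, type) pairs."""
    column_list = ", ".join(f"`{name}` {hive_type}" for name, hive_type in columns)
    return (
        f"CREATE EXTERNAL TABLE IF NOT EXISTS `{table}` ({column_list}) "
        f"STORED AS ORC LOCATION '{location}'"
    )

# Subset of the weather columns, for illustration only.
columns = [("station_id", "STRING"), ("temp_f", "DOUBLE"), ("wind_degrees", "BIGINT")]
ddl = external_table_ddl("weather", columns, "/tmp/weather")
print(ddl)
```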

You can now use Apache Hue to query your tables and do some weather analytics. Because we upsert into Kudu, we ensure there are no duplicate reports for a given location and observation time.

select `location`, weather, temp\_f, wind\_string, dewpoint\_string, latitude, longitude, observation\_time

from weatherkudu

order by observation\_time desc, station\_id asc

select \*

from weather

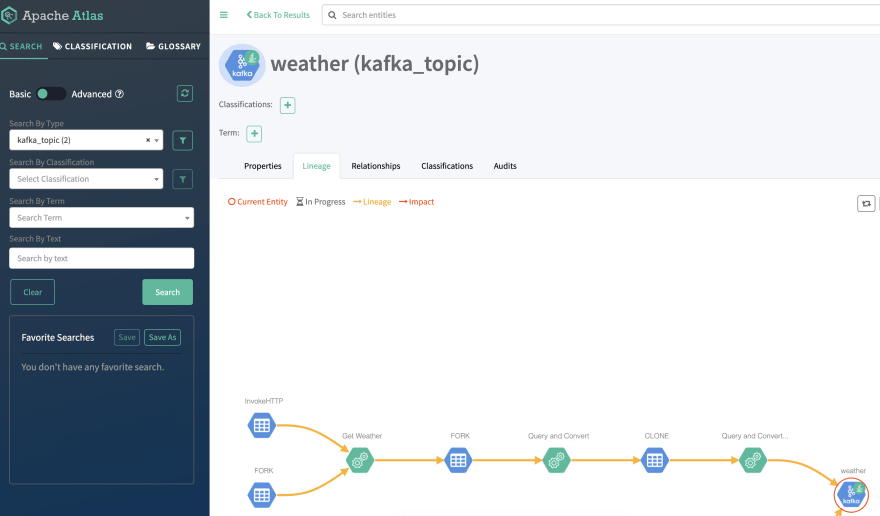

In Atlas, we can see the flow.
