About a year ago, as I started doing some small recap on algorithms, I came to an interesting realization: as enterprise developers we don't give a lot of thought to the Big O Notation because we're constantly using and reusing frameworks and algorithms that are already implemented. It's certainly there in the back of our minds in order to be able to optimize, but it's not often "fully activated".

This year I'm preparing to become the buddy of a few students joining our company as part of their university studies. That means I'm putting together a study guide and an onboarding roadmap, but I also get to look back on all the things I wish I knew better when I was in their situation. Of course the learning path will not be that granular, but my mind wanders, so I dug up what used to be a tweet and turned it into a small article.

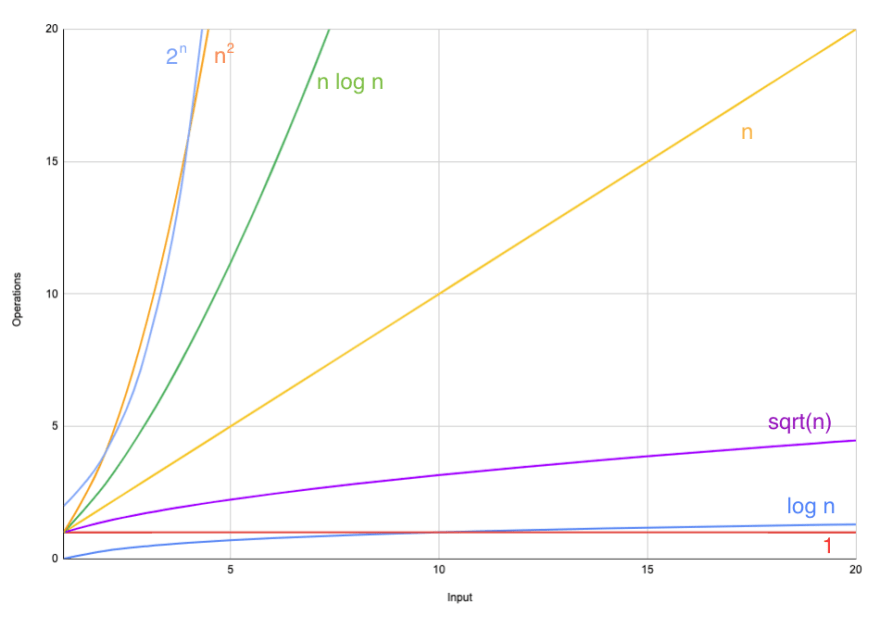

I hope this will be helpful for beginners, but also for devs that jumped straight into coding. And if you’re anything like me, you’ll enjoy following along with the growth chart.

Big O Notation

The Big O Notation is an abstract way to characterize algorithms according to how the running time or space (in memory or on disk) necessities grow as the size of the input grows.This is purely independent of programming languages, operating systems or hardware. Here is short sorted list of common growth rates:

Constant time - O(1) - The input size does not influence the required number of operations. Examples: printing a single value or accessing an array element by its index.

Logarithmic time - O(log n) - The number of required operations has a slow growth in relation to the input size. Example: binary search in a sorted array, where with each iteration the workload is being halved.

Square-root time - O(sqrt(n)) - The number or operations is proportional to the square root of the input size.Example: primality test.

Linear time - O(n) - The number of operations grows along with the size of the input.Examples: algorithms that involve iterating over a collection once, sequential search.

Log-linear time - O(n log n) - The number of operations grows proportionate to n log n. Example: merge sort.

Quadratic time - O(n2) - The number of operations is equal to a squared n, usually by running two nested for loops.Examples: bubble sort, insertion sort.

Exponential time - O(2n) - The number of operations doubles with every input increase.Example: finding Fibonacci numbers recursively.

Top comments (0)