Windows PowerShell is an extremely useful tool for quickly churning out small bits of automation. When these scripts run unattended, we often sprinkle in logging to aid troubleshooting. What one does with these logs depends entirely on the application, but we’ve seen some decent Sentinel deployments with alerting and hunting queries (which is beside the point of today’s post).

We recently had a mysterious issue where we tried ingesting a log file into an Azure Log Analytics workspace, but it never came through…

Ingesting custom logs

Generally speaking, this is a very simple operation. Spin up a Log Analytics workspace and add a “Custom Logs” entry:

Finally, make sure to install the Monitoring Agent on the target machine, and that’s it:

After a little while, new log entries would get beamed up to Azure:

Here we let our scripts run and went ahead to grab some drinks. But after a couple of hours and a few bottles of fermented grape juice, we realised that nothing had happened…

What could possibly go wrong?

We double- and triple-checked our setup, and everything looked solid. For the sake of completeness, our PowerShell script was doing something along the following lines:

```powershell
$scriptDir = "."
$LogFilePath = Join-Path $scriptDir "log.txt"

# we do not overwrite the file. we always append
if (!(Test-Path $LogFilePath)) {
    $LogFile = New-Item -Path $LogFilePath -ItemType File
} else {
    $LogFile = Get-Item -Path $LogFilePath
}

"$(Get-Date -Format "yyyy-MM-dd HH:mm:ss") Starting Processing" | Out-File $LogFile -Append

# do work

"$(Get-Date -Format "yyyy-MM-dd HH:mm:ss") Ending Processing" | Out-File $LogFile -Append
```

Nothing fancy, just making sure the timestamps are in a supported format. We also made sure not to rotate the file, because the log collection agent will not pick up a rotated file. So, we turned to the documentation:

- The log must either have a single entry per line or use a timestamp matching one of the following formats at the start of each entry – ✓ check

- The log file must not allow circular logging or log rotation, where the file is overwritten with new entries – ✓ check

- For Linux, time zone conversion is not supported for time stamps in the logs – not our case

- and finally, the log file must use ASCII or UTF-8 encoding. Other formats such as UTF-16 are not supported – let’s look at that a bit closer

Figuring this out

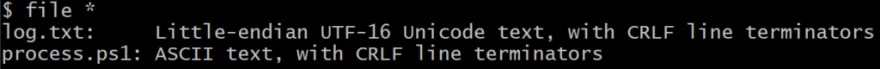

Looking at the Out-File documentation, we see that the default Encoding is utf8NoBOM. This is exactly what we’re after, but examining our file revealed a troubling discrepancy:
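
One way to examine a log file like this is to look at its first few bytes for a byte-order mark (BOM). The sketch below is only an illustration: `Get-BomEncoding` is a hypothetical helper name, not something from our script or from the agent tooling, and it only recognises the three most common BOMs.

```powershell
# Hypothetical helper: guess a file's encoding from its byte-order mark (BOM).
# Limitation: a UTF-32 LE BOM (FF FE 00 00) would be reported as UTF-16 LE.
function Get-BomEncoding {
    param([Parameter(Mandatory)][string]$Path)

    # Resolve to an absolute path first: .NET's working directory can differ
    # from PowerShell's current location.
    $fullPath = (Resolve-Path -LiteralPath $Path).ProviderPath
    $bytes = [System.IO.File]::ReadAllBytes($fullPath)

    if ($bytes.Length -ge 3 -and $bytes[0] -eq 0xEF -and $bytes[1] -eq 0xBB -and $bytes[2] -eq 0xBF) {
        return 'UTF-8 with BOM'
    }
    if ($bytes.Length -ge 2 -and $bytes[0] -eq 0xFF -and $bytes[1] -eq 0xFE) {
        return 'UTF-16 LE'
    }
    if ($bytes.Length -ge 2 -and $bytes[0] -eq 0xFE -and $bytes[1] -eq 0xFF) {
        return 'UTF-16 BE'
    }
    # No BOM does not prove anything; it is merely consistent with ASCII or UTF-8.
    return 'No BOM'
}
```

Calling `Get-BomEncoding -Path .\log.txt` against a file written by a default `Out-File` call on Windows PowerShell 5.1 should report `UTF-16 LE`.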

That would explain why the Monitoring Agent would not ingest our custom logs. Fixing this is rather easy: set the default output encoding at the start of the script with $PSDefaultParameterValues['Out-File:Encoding'] = 'utf8'.
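
As an alternative to a session-wide default, the encoding can be passed explicitly on every write. The following is a minimal sketch, assuming a small wrapper function is acceptable in the script; `Write-LogLine` is a hypothetical name we made up for illustration.

```powershell
# Hypothetical helper: append one timestamped line, always requesting UTF-8.
function Write-LogLine {
    param(
        [Parameter(Mandatory)][string]$Path,
        [Parameter(Mandatory)][string]$Message
    )

    $stamp = Get-Date -Format 'yyyy-MM-dd HH:mm:ss'

    # 'utf8' is accepted by both Windows PowerShell 5.1 and PowerShell 7.
    # On 5.1 it writes a BOM; on 7 it is UTF-8 without a BOM.
    "$stamp $Message" | Out-File -FilePath $Path -Append -Encoding utf8
}

# Usage, mirroring the script above:
# Write-LogLine -Path .\log.txt -Message 'Starting Processing'
```

Either way, a log file that was already created as UTF-16 is probably best deleted or converted before switching, since appending UTF-8 lines to it would likely leave a file with mixed encodings.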

But the question of how that could happen still remained…

Check your version

After a few more hours of trying various combinations of inputs and PowerShell parameters, we checked $PSVersionTable.PSVersion and realised we were running PS 5.1. This is where it started to click: the documentation pointed us by default to the latest 7.2 LTS, where the default encoding is different! Indeed, rewinding the documentation to PS 5.1 reveals that the default used to be unicode: UTF-16 with the little-endian byte order.
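
The difference is easy to observe directly. The sketch below writes a short probe string with no -Encoding argument and prints the first bytes next to the running version. The file name `encoding-probe.txt` is arbitrary, and the result assumes no `Out-File:Encoding` entry has been set in `$PSDefaultParameterValues`.

```powershell
# Write a probe file using whatever Out-File's default encoding is in this session.
$probe = Join-Path ([System.IO.Path]::GetTempPath()) 'encoding-probe.txt'
'probe' | Out-File -FilePath $probe

# Format the first four bytes as hex so a BOM (if any) is visible.
$firstBytes = [System.IO.File]::ReadAllBytes($probe) | Select-Object -First 4
$hex = ($firstBytes | ForEach-Object { $_.ToString('X2') }) -join ' '

"PowerShell $($PSVersionTable.PSVersion): first bytes $hex"

Remove-Item $probe
```

On Windows PowerShell 5.1 the bytes should come out as FF FE 70 00 (a UTF-16 LE BOM followed by a two-byte "p"), while on PowerShell 7.x they should be 70 72 6F 62 (plain "prob" with no BOM).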

Conclusion

Since PowerShell 7.x is no longer exclusive to Windows, Microsoft seems to have accepted a few changes that stem from the behaviour of the underlying .NET runtime it is built upon. There is in fact an extensive list of breaking changes that mentions encoding a few times. We fully support the need to advance tooling and converge technologies. We do, however, hope that as the Monitoring Agent matures, more of these restrictions will be removed and this will no longer be an issue. Until then, happy cloud computing!
