TL;DR

I just published my product, Etalase, which is built with Remix and Hono. The problem came from the Remix side, which is hosted on Vercel. For Hono, I use Deno as the runtime and host it on Deno Deploy, with a Supabase database behind it.

My Remix page took roughly 500 ms to render when I navigated back to a previous page, for example from home to products and then back to home. After some inspection, I found the cause: my Vercel region was set to Washington, D.C., while my Supabase and Deno Deploy services were in Singapore.

So, putting every platform you use in the same region should resolve this issue 😁.

Crab, why so slow?

I asked myself why my page got slower after I added some API calls to the loader function. It took roughly 500 ms, which is very slow for an app with zero users (for now 😅). Was my API slow? Nope, I tested it and it was fast!

Was my loader not optimized yet? True, it wasn't, but the platform still had zero users 😭. Such a beautiful realization...

Streaming tears from "I'm OK" promises

"OK, FINE! Let's tune this," I said to myself.

First, I used Promise.all() to run all the promises together. I thought the loader was slow because the promises were running sequentially. The result after doing that? It didn't change anything 🙂.
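For context, here's a minimal sketch of the difference in TypeScript. `fakeApi` and the 50 ms delays are hypothetical stand-ins for real `fetch` calls. If you await the calls one by one, their times add up. `Promise.all()` starts them all at once, so the total drops to roughly the time of the slowest single call. Note what it can't do: it doesn't make any individual call faster.

```typescript
// Hypothetical stand-in for one API call: resolves with `value` after `ms` milliseconds.
function fakeApi<T>(value: T, ms: number): Promise<T> {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Sequential: each await blocks the next call, so total time ≈ sum of the delays.
async function loadSequential(): Promise<string[]> {
  const products = await fakeApi("products", 50);
  const categories = await fakeApi("categories", 50);
  const banners = await fakeApi("banners", 50);
  return [products, categories, banners];
}

// Parallel: all calls start at once, so total time ≈ the slowest single call.
async function loadParallel(): Promise<string[]> {
  return Promise.all([
    fakeApi("products", 50),
    fakeApi("categories", 50),
    fakeApi("banners", 50),
  ]);
}

// Measures how long an async function takes, in milliseconds.
async function timeIt<T>(fn: () => Promise<T>): Promise<{ result: T; ms: number }> {
  const start = Date.now();
  const result = await fn();
  return { result, ms: Date.now() - start };
}
```

Sometimes every call already has to cross the same long network path. In that case the slowest single call is still slow, so `Promise.all()` has little left to save.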

"How about streaming this?" I literally said to myself.

By the way, Remix can stream your data into the UI. As soon as the loader has the first chunk of data, it is streamed to the UI instead of waiting for everything else.
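Here's the idea as a framework-agnostic sketch, so it runs without Remix. The loader awaits only the data needed for the first render. It returns the slow call as a promise that is still pending. In Remix v2 you'd wrap that kind of object with the `defer` helper and render the pending part with `<Await>` inside `<Suspense>`. The names `loader` and `delay`, and the delays below, are hypothetical.

```typescript
// Hypothetical stand-in for an API call: resolves with `value` after `ms` milliseconds.
function delay<T>(value: T, ms: number): Promise<T> {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

interface LoaderData {
  critical: string; // awaited: needed for the first render
  slow: Promise<string>; // not awaited: resolved and streamed in later
}

// Hypothetical loader: start the slow call first, but only await the fast one.
async function loader(): Promise<LoaderData> {
  const slow = delay("recommendations", 100); // kicked off, not awaited
  const critical = await delay("product list", 10);
  return { critical, slow };
}
```

The first render comes earlier, but `slow` still resolves exactly when it would have anyway. Streaming changes when the page shows up, not how long the slow call takes.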

And after doing this 🥁🥁🥁...

It also does not change anything 🙂.

Let's drown draw

Enough trying. Let's analyze.

First, I defined the problem: it's SLOW. My expectation: FAST. I had tried two different methods, Promise.all and streaming, and both failed.

Now let's set up some scenarios.

And focus on the problem.

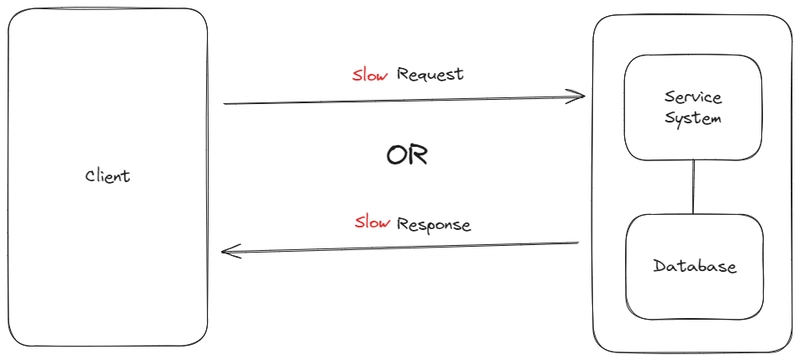

I use fetch, which returns a promise, to call my API, and I run the calls in parallel with Promise.all(). Because fetch communicates over HTTP, it can be slow in two main places: the request or the response.
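To see where the time goes, it helps to time each call on its own. Here's a sketch that uses only the standard `Promise` API. `timed` wraps one call. `timeAll` runs several calls in parallel and sorts them slowest first. `fakeFetch` is a hypothetical stand-in. In a real loader each entry would be something like `() => fetch(url).then((res) => res.json())`, with `url` being your own endpoint.

```typescript
interface Timing<T> {
  label: string;
  ms: number;
  value: T;
}

// Times one async call (e.g. a fetch to your API) in milliseconds.
async function timed<T>(label: string, call: () => Promise<T>): Promise<Timing<T>> {
  const start = Date.now();
  const value = await call();
  return { label, ms: Date.now() - start, value };
}

// Runs all calls in parallel and returns their timings, slowest first.
async function timeAll<T>(calls: Record<string, () => Promise<T>>): Promise<Timing<T>[]> {
  const timings = await Promise.all(
    Object.entries(calls).map(([label, call]) => timed(label, call)),
  );
  return timings.sort((a, b) => b.ms - a.ms);
}

// Hypothetical stand-in for fetch: resolves with `body` after `ms` milliseconds.
function fakeFetch(body: string, ms: number): Promise<string> {
  return new Promise((resolve) => setTimeout(() => resolve(body), ms));
}
```

Run this inside the deployed loader, then make the same request from the browser or Postman. Comparing the two sets of numbers shows whether the extra time comes from the API itself or from where the caller is located.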

But based on my scenarios above, calling the API from the browser and from Postman was fine. So what happened? OK, let's figure out what actually makes HTTP communication slow, specifically on the network side. I knew my connection was good and the data being transferred was small.

Oh, wait. Latency? I thought I'd finally seen the light of God 😭

You know what? The response time you actually see from a server is roughly: time (ms) = network latency + server processing time. And as I remembered, the main cause of latency is the physical distance between the client and the server.
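To make the formula above concrete, here's a rough model in TypeScript. Each call costs one network round trip plus the server's processing time. If you await the calls one by one, the costs add up. With `Promise.all()` you wait for roughly the slowest one. The 200 ms and 5 ms round trips are placeholder values for "far away" and "same region". They are not measurements from my setup.

```typescript
// Rough model: every call pays one network round trip plus the server's own work.
// All numbers used with this model are hypothetical placeholders.
interface CallCost {
  roundTripMs: number; // network latency between the caller and the API
  serverMs: number; // time the API spends handling the request
}

const callTime = (c: CallCost): number => c.roundTripMs + c.serverMs;

// Awaited one after another: the times add up.
function sequentialMs(calls: CallCost[]): number {
  return calls.reduce((sum, c) => sum + callTime(c), 0);
}

// Run with Promise.all: roughly the slowest single call (0 when there are no calls).
function parallelMs(calls: CallCost[]): number {
  return Math.max(0, ...calls.map(callTime));
}

const far: CallCost[] = [
  { roundTripMs: 200, serverMs: 20 },
  { roundTripMs: 200, serverMs: 30 },
];
const near: CallCost[] = [
  { roundTripMs: 5, serverMs: 20 },
  { roundTripMs: 5, serverMs: 30 },
];
```

With these made-up numbers, two sequential calls in the same region (60 ms) still beat two parallel calls to the other side of the world (230 ms). Once round-trip latency dominates, moving services closer together helps more than any code change.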

Triggered

Wait 🤨 (100x)...

"What's the default region of my Vercel serverless functions?" I said.

I immediately opened my Vercel Functions settings to check the region. And guess what: it was "Washington, D.C., USA (iad1)" by default.

📖 Here's the reference for you

It took me three hours to debug. Thanks, btw 🙂.

Streaming tears (again, but really)

After realizing the problem, I changed my serverless function region to Singapore and applied the change.
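I changed the region in the Functions settings. If you'd rather keep it in version control, Vercel also reads a `regions` array from `vercel.json`. `sin1` is Vercel's identifier for Singapore. This is a minimal sketch and makes two assumptions. First, your Remix routes are deployed as Vercel Serverless Functions, since this setting doesn't cover Edge Functions. Second, nothing else in the project overrides the region.

```json
{
  "regions": ["sin1"]
}
```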

I also re-checked that all my services were located in Singapore. And the final test was... "fast". I was very happy about it and took a break to make some coffee.

To summarize, here's a checklist for when your Remix functions are slow, even if they aren't hosted on Vercel:

- Use Promise.all(). If that doesn't help, use...
- Remix streaming. Or, even worse...
- Check your client-server latency.

See ya!
