Originally, I planned to monitor only the network and on-page performance metrics of the top ten eCommerce sites on Cyber Monday. But after crunching the numbers, I found something I hadn't anticipated: third-party tracking tags are taking a significant toll on page load times.

The chart above shows the top ten sites from shortest to longest DOM load time. I noticed that sites with more trackers had consistently longer DOM, total load, and finish times.

In fact, third-party trackers had more of an impact on load times than the number of requests or page size! Some websites took over a minute to fully load because they were waiting on requests for tracking beacons.

You might know these trackers as pixels or beacons. They are very small scripts that capture information about website visitors and send that info to an external application for reporting.

You most likely use a few of these trackers on your website and assume that, because they are short scripts that make a quick external call and don’t change anything in the DOM, they must be harmless. Yes and no…

On their own, trackers usually won’t have any impact on user experience, since they run in the background. But when you have dozens of requests for third-party tracking and each one takes a few hundred milliseconds to load, you could be adding whole seconds to your load times.

After looking at the top ten sites, I found that on average these sites used 24 trackers, with the peak reaching 74 trackers on a single page. That's 74 requests that could be clogging up load times or blocking other resources from rendering.
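To get a rough feel for how many of a page's requests go to outside hosts, a small helper like the one below can be run over a list of request URLs. This is only a sketch: `isThirdParty`, `countThirdParty`, and the `.example` URLs are hypothetical, and it treats "different domain" as "third party" without knowing which hosts are actually trackers (extensions like Ghostery rely on curated lists for that).

```typescript
// Hypothetical helper: a request is "third party" when its hostname is
// neither the first-party domain nor one of its subdomains.
function isThirdParty(requestUrl: string, firstPartyDomain: string): boolean {
  const host = new URL(requestUrl).hostname;
  const suffix = "." + firstPartyDomain;
  const isSubdomain = host.slice(-suffix.length) === suffix;
  return host !== firstPartyDomain && !isSubdomain;
}

// Counts how many URLs in the list point at third-party hosts.
function countThirdParty(requestUrls: string[], firstPartyDomain: string): number {
  return requestUrls.filter((u) => isThirdParty(u, firstPartyDomain)).length;
}

// Made-up request list for illustration only.
const sampleRequests = [
  "https://www.shop.example/index.html",
  "https://static.shop.example/app.js",
  "https://pixel.tracker.example/beacon.gif",
  "https://analytics.metrics.example/collect?id=1",
];

const thirdPartyCount = countThirdParty(sampleRequests, "shop.example");
console.log(thirdPartyCount); // 2
```

In a browser, the URL list could come from the `name` field of the entries returned by `performance.getEntriesByType("resource")`.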

Now these sites are not representative of the majority of the web, or even the kinds of projects you might be dealing with. But it's important to look at sites at this scale so that we can see how the little things can pile up and really impact user experience.

Before we get any deeper… we need to talk about the different kinds of load times. There are three different ways we measure page load:

- DOM Load: how long it takes the browser to parse the HTML and run the page's scripts.

- Page Load: DOM load plus the time it takes to download all the page content (images, video, etc.).

- Finish: how long it takes to send and receive all requests on the page.
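As a rough illustration of how these three numbers relate, here is a minimal sketch that derives them from timing data. It assumes DOM load maps to the end of the `DOMContentLoaded` event, page load to the end of the `load` event, and finish to the last response received. The interfaces mirror only the fields needed from the browser's navigation and resource timing entries; `summarizeLoad` and the sample numbers are made up for illustration.

```typescript
// Minimal shapes mirroring the timing fields we need.
// All values are milliseconds since the start of navigation.
interface NavigationTimes {
  domContentLoadedEventEnd: number;
  loadEventEnd: number;
}

interface ResourceTimes {
  responseEnd: number;
}

interface LoadMetrics {
  domLoadMs: number;
  pageLoadMs: number;
  finishMs: number;
}

// Hypothetical helper: derives the three load metrics from timing entries.
function summarizeLoad(nav: NavigationTimes, resources: ResourceTimes[]): LoadMetrics {
  // Latest response across all requests (0 when there are none).
  const lastResponse = resources.reduce(
    (latest, r) => Math.max(latest, r.responseEnd),
    0,
  );
  return {
    domLoadMs: nav.domContentLoadedEventEnd,
    pageLoadMs: nav.loadEventEnd,
    // A slow beacon can finish long after the load event has fired.
    finishMs: Math.max(nav.loadEventEnd, lastResponse),
  };
}

// Made-up numbers: one late request pushes "finish" far past page load.
const exampleMetrics = summarizeLoad(
  { domContentLoadedEventEnd: 1200, loadEventEnd: 3500 },
  [{ responseEnd: 900 }, { responseEnd: 3100 }, { responseEnd: 42000 }],
);
console.log(exampleMetrics);
```

In a browser, the inputs could come from `performance.getEntriesByType("navigation")[0]` and `performance.getEntriesByType("resource")`, read some time after the page has settled so that late requests are included.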

Third-party trackers usually won’t load until after the DOM is loaded, which is why you will see drastic differences between the DOM load and the finish time.

For example, Amazon’s DOM load took only 1.55 seconds, whereas the finish time was over a minute. When we reran the test with an ad blocker enabled, the finish time dropped to 45 seconds.

Now, 45 seconds is still a really long finish time. The culprit was a single resource that took over 30 seconds to load and ultimately failed. In this case, the DOM load was not impacted.

Amazon was far from the only site affected by third-party trackers. After the first round of testing was completed, I went back and reran all the tests with an Ad Blocker enabled.

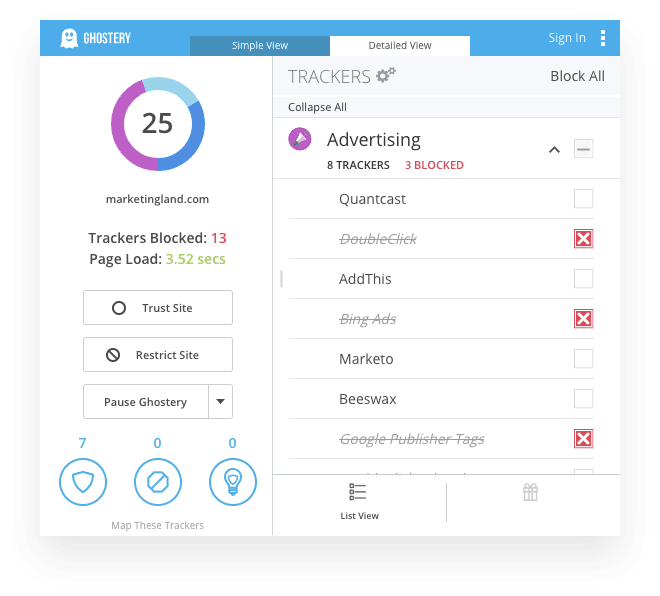

I used Ghostery, a Chrome extension that prevents ads from showing on web pages and restricts trackers from scraping sensitive information.

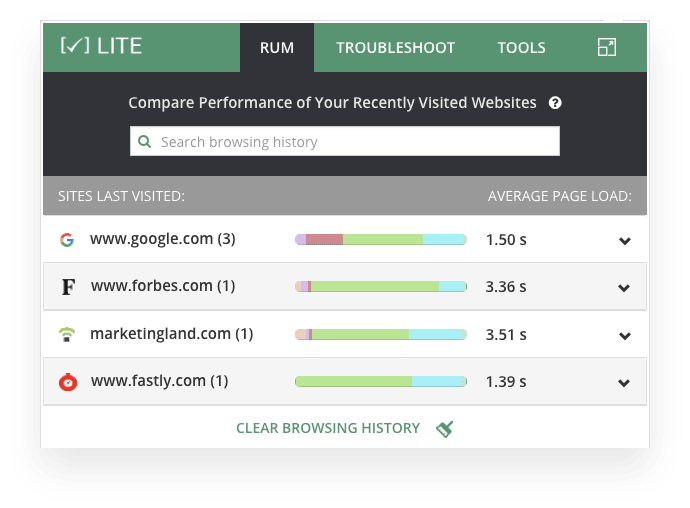

I also used Sonar Lite, a free Chrome extension that uses Real User Monitoring to capture page loads and request timings. Shameless plug: I also work for Constellix, but it is a fantastic free tool that makes life easier than opening up dev tools and hard-reloading the page every time I want performance metrics.

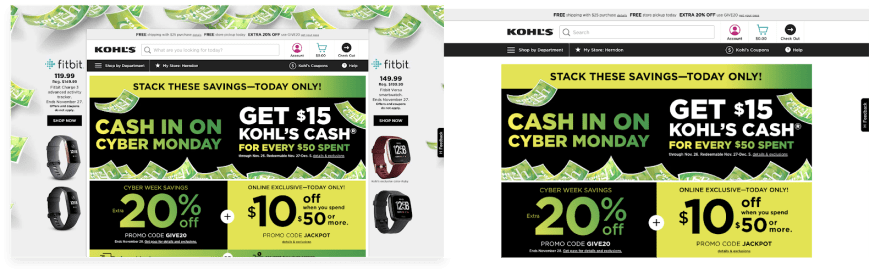

In most cases, I didn’t see much of an aesthetic difference with or without the ad blocker, with the exception of Kohl’s, which had turned its website background into an advertisement. Once I enabled Ghostery, the ad disappeared entirely.

And pages that took a long time to reach the initial paint (how long it takes to render the first visual elements in the browser) still took the same amount of time.

All of this was as expected... until I crunched the numbers and compared them to the first test:

- DOM load times dropped by an average of 8.9% when we used an ad blocker.

- Total page load times (including images) dropped by a whopping 17%.

- Page size was slashed by 24%.

- Requests were reduced by 43%.

- Third-party trackers were cut by more than half, down 58%.

You’ll notice that the number of trackers didn’t drop to zero. Some trackers still run even with the ad blocker enabled because Ghostery considers them “essential” and all of our data is anonymized before being sent off.

But once the majority of the trackers were removed, all three load metrics, the number of requests, and page size dropped significantly.

So why should this matter to you? Well, if you use third-party trackers, you need to be aware of the performance impact.

The websites we monitored in this study are the epitome of optimal web performance. They religiously use (and establish) the best practices for web performance and efficient web development. Despite that, they still suffer from the same pitfalls as everyone else due to overuse of third-party tracking scripts.

When I reran the tests with the trackers removed, the average DOM load time dropped from 1.9 to 1.7 seconds. That may seem inconsequential, but when you have thousands or millions of people using your site at the same time, every millisecond counts.
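For anyone who wants to check that kind of before-and-after comparison on their own numbers, the arithmetic is a simple percentage reduction. The `percentReduction` helper below is a hypothetical sketch, applied to the two rounded averages quoted above.

```typescript
// Percentage reduction from `before` to `after`.
// A positive result means the value went down (an improvement for load times).
function percentReduction(before: number, after: number): number {
  if (before <= 0) {
    throw new Error("before must be greater than zero");
  }
  return ((before - after) / before) * 100;
}

// Average DOM load time without and with the ad blocker, in seconds.
const domLoadReduction = percentReduction(1.9, 1.7);
console.log(domLoadReduction.toFixed(1) + "%"); // 10.5%
```

This comes out a little higher than the 8.9% average DOM load reduction listed earlier. One plausible explanation (an assumption, not something the data confirms) is that the 8.9% figure averages each site's own percentage change, while this calculation compares two rounded averages, and the two approaches generally do not give the same answer.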

Experts estimated that over 75 million shoppers would take to the web for their Cyber Monday shopping. For the ten retail giants we monitored, that’s likely a couple million shoppers surfing their websites within a 24-hour period.

If page loads slow down even the slightest bit, it could cost thousands in revenue.

That’s why these kinds of sites are the best to learn from. They have teams of dozens of people working to keep their sites lean and efficient, all to make sure performance doesn’t degrade when they are challenged by holiday shoppers.

Even if you don’t have the resources or manpower that these eCommerce giants have, there is still so much you can learn from them and apply to your own development projects.

If there’s one thing you take away from this article, it is to be mindful of the trackers you use. Every pixel could impact performance, so don’t take those little scripts lightly.

Key Takeaways:

- Sites with more trackers tended to have longer DOM and finish load times.

- However, a higher number of requests did not correlate with longer load times.

- Pages that didn’t complete all requests had longer load times.

- Lazy loading (loading images and scripts on scroll) improved page loads by a few hundred milliseconds to a few seconds.

- 60% of the top websites had DOM load times under two seconds.

- Page sizes were unusually high compared with previous years, averaging 3.75 MB, nearly double the recommended size of 2 MB.

- Trackers accounted for an average of a megabyte of website size.

The Results:

Here are the results from the two sets of tests. Each cell contains the difference between the first set of tests and the second set, when I used an ad blocker. Red cells indicate an increase in load times or in the number of requests or trackers. Green cells indicate an improvement: a reduction in load times or in the number of requests or trackers when we used an ad blocker.

Let me know what you think of this study. Were you surprised by the results? Did you see something in the data that I didn't catch? I'd love to hear your findings.
