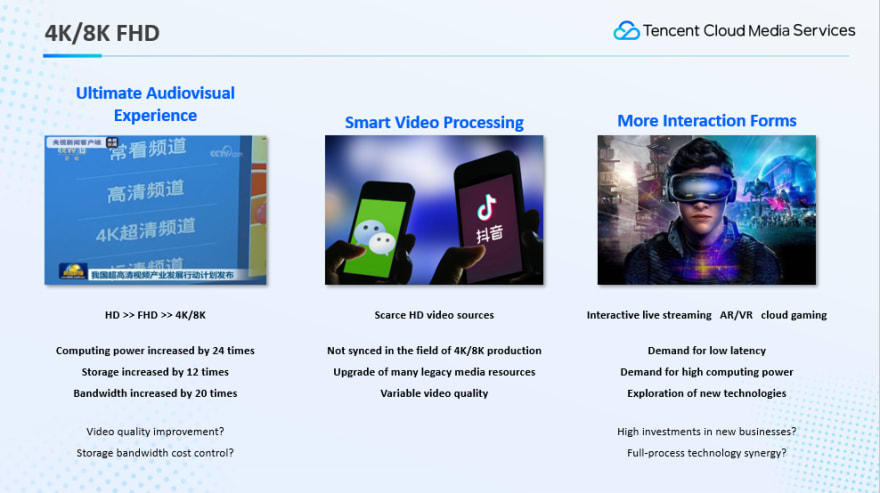

As devices support ever higher resolutions, demand for high-definition content keeps growing, and 4K/8K videos, with their very high resolutions and bitrates, bring many new challenges. Today, we'll share some ideas about accelerating media digitalization through media processing capabilities.

In part 1, we talk about the features of 4K/8K FHD videos and the problems holding back their wide adoption. Part 2 details the optimizations we've made to our encoders so that they better suit videos with very high bitrates and resolutions. Part 3 focuses on the architecture of the real-time 8K transcoding system for live streaming. In the last part, we cover how media processing capabilities and image quality remastering technology can raise the definition of existing content so that more FHD videos are available.

4K/8K FHD videos feature very high definition, resolution, and bitrate. The latter two pose new challenges to downstream systems. In a live streaming system, resolution and bitrate directly determine transcoding speed and compute consumption. To support real-time 8K transcoding, both the encoding kernel and the system architecture need to be redesigned. Many hardware solutions are dedicated to real-time 4K/8K encoding, but they compress poorly compared with software encoders. To deliver 4K/8K definition, they require tens or even hundreds of megabits per second, which places a huge burden on the entire transmission chain and on playback devices. In addition, AR and VR, which rely heavily on video encoding and transmission, are gaining momentum. As technology advances, FHD video is an inevitable trend.
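To put these bitrates in perspective, the back-of-the-envelope sketch below computes the uncompressed bitrate of an 8K stream. It assumes 10-bit 4:2:0 sampling (1.5 samples per pixel) at 60 fps and a hypothetical 100 Mbps delivery bitrate; these are illustrative parameters, not figures from a specific deployment.

```python
def raw_bitrate_bps(width: int, height: int, fps: int, bit_depth: int) -> int:
    """Uncompressed bitrate of 4:2:0 video: 1 luma + 0.5 chroma samples per pixel."""
    samples_per_frame = width * height * 3 // 2
    return samples_per_frame * bit_depth * fps


def compression_ratio(raw_bps: int, encoded_bps: float) -> float:
    """How many times smaller the encoded stream is than the raw signal."""
    return raw_bps / encoded_bps


raw_8k = raw_bitrate_bps(7680, 4320, fps=60, bit_depth=10)
print(f"8K 10-bit 60 fps raw: {raw_8k / 1e9:.2f} Gbps")
# Even a hypothetical 100 Mbps stream needs roughly 300:1 compression
print(f"ratio at 100 Mbps: {compression_ratio(raw_8k, 100e6):.0f}:1")
```

The raw signal is close to 30 Gbps, so every percentage point of compression efficiency translates directly into transmission cost.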

Part 2 shares some encoding optimizations and the performance of our proprietary encoders.

Our team has independently developed encoding kernels for H.264, H.265, AV1, and the latest H.266. Proprietary encoders make it possible to design encoding features for real-world business scenarios and to optimize specifically for them. For example, during the Beijing Winter Olympics, the Tencent Cloud live streaming system sustained real-time 4K/8K encoding and compression at up to 120 fps. Meeting real-time deadlines required many custom optimizations inside the encoder. V265, Tencent's proprietary H.265 encoder, outperforms the open-source x265 in both speed and compression rate. At the fastest speed level, V265 is significantly faster than x265, enabling fast encoding at high resolutions. V265 also supports 8K, 10-bit, and HDR encoding. AV1 encoding is much more complex than H.265 encoding, so we made many engineering optimizations for FHD use. Compared with the open-source SVT-AV1, TSC (Top Speed Codec) delivers a 55% speedup and a 16.8% compression gain.

To encode FHD videos quickly, we made a few optimizations. The first is to increase parallelism. Encoding can be parallelized at the frame level and at the macroblock level. For real-time high-resolution encoding, the frame structure of the video sequence is tuned so that more frames can be encoded concurrently. For macroblock-level parallelism, tile encoding is supported to improve row-level encoding parallelism. The second optimization concerns pre-analysis and post-processing. Encoders run a lookahead pre-analysis pass before the actual encoding, and this lookahead tends to limit the parallelism of the whole pipeline. We therefore simplified the pre-analysis and post-processing algorithms to speed them up. With these optimizations, the encoder runs faster and achieves a higher level of parallelism.
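Tile encoding splits each frame into a grid of rectangular regions that can be encoded independently. The sketch below computes a uniformly spaced tile grid in units of coding tree units (CTUs), following the uniform-spacing rule of the H.265/HEVC specification. The 64×64 CTU size and the 4×4 tile grid are illustrative assumptions, not V265's actual configuration.

```python
import math


def uniform_tile_sizes(size_in_ctus: int, num_tiles: int) -> list[int]:
    """Tile widths (or heights) in CTUs, as with HEVC uniform_spacing_flag = 1."""
    return [
        ((i + 1) * size_in_ctus) // num_tiles - (i * size_in_ctus) // num_tiles
        for i in range(num_tiles)
    ]


def tile_grid(width: int, height: int, ctu: int = 64, cols: int = 4, rows: int = 4):
    """Return (column widths, row heights) in CTUs for a width x height frame."""
    ctus_w = math.ceil(width / ctu)   # a partial CTU at the edge still counts
    ctus_h = math.ceil(height / ctu)
    return uniform_tile_sizes(ctus_w, cols), uniform_tile_sizes(ctus_h, rows)


col_widths, row_heights = tile_grid(7680, 4320)
print(col_widths, row_heights)  # [30, 30, 30, 30] [17, 17, 17, 17]
```

Each of the 16 tiles in this example can be handed to its own thread. Tiles trade a little compression efficiency for that parallelism, because intra prediction and entropy coding cannot use information across tile boundaries.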

Part 3 describes system architecture optimization. For live streaming, optimizing the encoding kernel alone is not enough to achieve real-time 8K encoding at a good compression rate, so the architecture of the entire system needs to be adjusted.

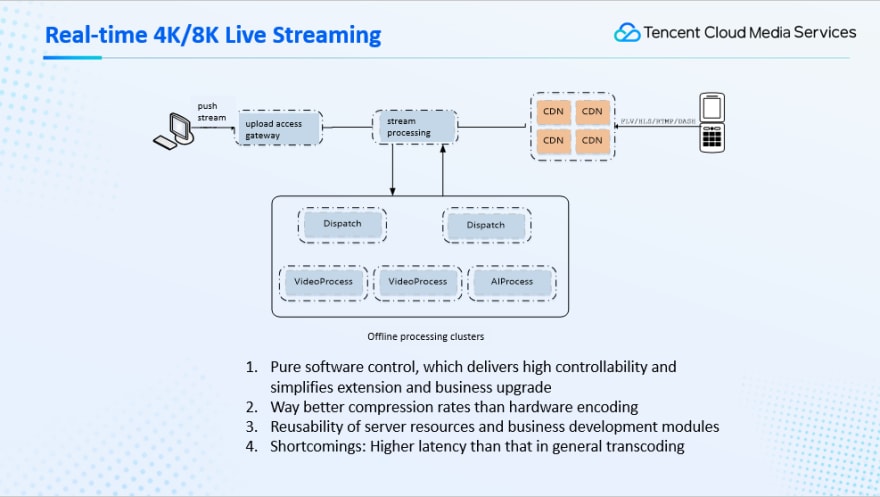

A common practice is to feed the 8K AVS3 video source into a hardware encoder that outputs multiple renditions for delivery, such as 8K H.265, 4K H.265, 1080p H.264, and 720p H.264. This achieves the goal, but it has several problems. First, 8K hardware encoders are generally expensive, and there are especially few options for 8K AV1 hardware encoders. Second, hardware encoders compress worse than optimized software encoders, because many algorithms that do not lend themselves to parallel execution cannot be implemented in hardware. Third, hardware encoders often rely on custom architectures and chips, so they cannot respond quickly to different business scenarios or keep up with constantly evolving business requirements. If software encoding can achieve the same results, both a good transcoding compression rate and business flexibility can be guaranteed.
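One way to see why the 8K rendition dominates the cost of this ladder is to compare how many pixels each output contributes per frame. The sketch below assumes standard 16:9 frame sizes for each rendition; pixel count is only a rough proxy for encoding cost, since codec and preset choices also matter.

```python
LADDER = {
    "8K H.265": (7680, 4320),
    "4K H.265": (3840, 2160),
    "1080p H.264": (1920, 1080),
    "720p H.264": (1280, 720),
}


def pixel_shares(ladder: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Fraction of the ladder's total pixels per frame contributed by each rendition."""
    pixels = {name: w * h for name, (w, h) in ladder.items()}
    total = sum(pixels.values())
    return {name: p / total for name, p in pixels.items()}


for name, share in pixel_shares(LADDER).items():
    print(f"{name:12s} {share:6.1%}")
```

Roughly three quarters of the pixels belong to the 8K output alone, so the 8K encode is the part that has to be scaled out.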

To solve these problems, we made many adjustments to the architecture of the live streaming system. In a typical live streaming system, streams are pushed to an upload access gateway, processed and transcoded, and then pushed to a CDN for delivery to viewers. For 8K video, a pipeline in which each stream is handled by a single transcoding node on one server can hardly achieve real-time software encoding. We therefore designed an FHD live stream processing platform.
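The arithmetic behind this limitation is simple: if one server can software-encode 8K at only a fraction of the live frame rate, real time is out of reach on that server no matter how the pipeline is tuned. The sketch below computes the minimum number of servers that must encode in parallel to keep up; the 15 fps single-server speed is a hypothetical figure, not a measured V265 or TSC result.

```python
import math


def min_parallel_encoders(target_fps: float, single_server_fps: float) -> int:
    """Servers needed so that aggregate encoding throughput >= the live frame rate."""
    if single_server_fps <= 0:
        raise ValueError("single_server_fps must be positive")
    return math.ceil(target_fps / single_server_fps)


# Hypothetical: one server encodes 8K at 15 fps, the live stream runs at 60 fps
print(min_parallel_encoders(60, 15))  # 4
```

In practice, extra headroom would be added on top of this minimum to absorb complex scenes and node failures.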

In FHD live streaming, the transcoding node remuxes the stream instead of transcoding it: it splits the pulled source stream into TS segments and sends them as files to the video transcoding cluster. The cluster processes the TS segments in parallel, so encoding is spread across multiple servers. Compared with the original single-server, single-pipeline encoding, this distributed approach is controlled entirely in software and is highly flexible, which makes both capacity expansion and business upgrades straightforward. It also reduces costs: deploying the offline transcoding and live streaming clusters together allows resources to be shared across a wider range of business, increasing resource utilization. There are shortcomings, of course. Latency is higher than in a standard transcoding process, because the remuxer must wait for a full segment's worth of the stream before it can produce each independent TS segment. The added latency is acceptable, and when downstream services use HLS, which is itself segment-based, for live streaming, there is no noticeable change in latency.
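The remux-then-fan-out pattern can be sketched in a few lines. In this minimal sketch, a thread pool stands in for the transcoding cluster and `encode_segment` is a hypothetical placeholder for a per-segment software encode. It illustrates two properties: segments are encoded concurrently but emitted in their original order, and each segment adds roughly its own duration plus its encoding time to the latency.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    seq: int           # position of the TS segment in the live stream
    duration_s: float
    payload: bytes


def encode_segment(seg: Segment) -> Segment:
    """Hypothetical stand-in for encoding one TS segment on a cluster node."""
    return Segment(seg.seq, seg.duration_s, seg.payload.lower())


def transcode_in_parallel(segments: list[Segment], workers: int = 4) -> list[Segment]:
    """Encode segments concurrently; Executor.map yields results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(encode_segment, segments))


def added_latency_s(segment_s: float, encode_s: float) -> float:
    """Wait for a whole segment to be remuxed, then wait for it to be encoded."""
    return segment_s + encode_s


segments = [Segment(i, 2.0, b"TS-DATA") for i in range(8)]
out = transcode_in_parallel(segments)
print([s.seq for s in out])       # [0, 1, 2, 3, 4, 5, 6, 7]
print(added_latency_s(2.0, 6.0))  # 8.0
```

In a real deployment the workers would be separate servers rather than threads, and the 2-second segment length here is only illustrative.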

The offline processing cluster turns live 4K/8K FHD streams into independent offline transcoding tasks that are encoded in parallel. Each offline transcoding node can use Top Speed Codec capabilities, which save more than 50% of bandwidth at the same subjective quality.

Compared with hardware encoders, the compression rate improves by more than 70%. In other words, with this system, streaming live 4K/8K FHD video requires only 30% of the hardware encoding bitrate at the same image quality. At the same bitrate, TSC improves subjective quality by more than 20%.
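To make these percentages concrete, the sketch below converts a bitrate saving into CDN egress per viewer-hour. The 100 Mbps hardware-encoded 8K bitrate is a hypothetical example value; only the 70% saving comes from the figures above.

```python
def reduced_bitrate_mbps(hw_bitrate_mbps: float, saving: float) -> float:
    """Bitrate needed after a fractional saving relative to the hardware encoder."""
    return hw_bitrate_mbps * (1.0 - saving)


def egress_gb(bitrate_mbps: float, viewers: int, hours: float) -> float:
    """CDN egress in gigabytes (10^9 bytes) for constant-bitrate viewing."""
    bytes_per_s = bitrate_mbps * 1e6 / 8
    return bytes_per_s * 3600 * hours * viewers / 1e9


hw = 100.0                           # hypothetical hardware-encoded 8K bitrate
sw = reduced_bitrate_mbps(hw, 0.70)  # about 30 Mbps
print(f"{egress_gb(hw, 1, 1):.1f} GB vs {egress_gb(sw, 1, 1):.1f} GB per viewer-hour")
```

Under these assumptions, each viewer-hour drops from 45 GB to 13.5 GB, and the saving scales linearly with audience size.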

Inside each independent offline transcoding node, video sources are decoded on arrival and categorized by scene so that different encoding policies can be applied. Scene detection, including noise detection and glitch detection, then analyzes the noise and glitches in the source for later encoding optimization. Before encoding, the detected noise and glitches are removed. After this image quality remastering, perceptual encoding analysis identifies regions of interest (ROI) in the image, such as faces, as well as areas with complex or simple textures. In complex-texture areas, the texture masks some distortion, so the bitrate can be reduced appropriately. In simple-texture areas, the human eye is sensitive, and blocking artifacts are very noticeable. Here, perceptual encoding control based on just noticeable difference (JND) analysis is used. Based on the ROI and JND results, the encoder kernel can allocate bits to macroblocks more effectively.
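The bit-allocation idea can be illustrated with a toy rule that maps perceptual analysis to per-block quantization parameter (QP) offsets, where a negative offset spends more bits and a positive one spends fewer. This is a hypothetical heuristic, not the TSC algorithm: it uses pixel variance as a crude texture measure in place of a real JND model, and the thresholds and offsets are made-up values.

```python
from statistics import pvariance


def qp_offsets(blocks: list[list[int]], in_roi: list[bool],
               flat_var: float = 20.0, busy_var: float = 400.0) -> list[int]:
    """Per-block QP offsets from ROI flags and a variance-based texture estimate."""
    offsets = []
    for samples, roi in zip(blocks, in_roi):
        var = pvariance(samples)
        if roi:
            offsets.append(-3)   # faces and similar ROI: spend extra bits
        elif var < flat_var:
            offsets.append(-2)   # flat area: blocking would be clearly visible
        elif var > busy_var:
            offsets.append(+2)   # busy texture masks distortion: save bits
        else:
            offsets.append(0)
    return offsets


flat = [100] * 16
busy = [0, 255] * 8
medium = [100, 110] * 8
print(qp_offsets([flat, busy, medium, busy], [False, False, False, True]))
# [-2, 2, 0, -3]
```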

Many playback devices now support 4K, but not all video sources are 4K. Tencent Cloud's media processing capabilities can upgrade video sources to 4K to deliver a genuine 4K viewing experience.

A 4K FHD video is usually generated as follows. First, the video source is analyzed for noise, compression artifacts, and other distortions. Then, based on the analysis, comprehensive degradation removal is performed, including noise removal, texture enhancement, and noise suppression. Note that processing the regions the human eye is most sensitive to, such as faces and text, particularly well can greatly enhance the overall viewing experience.
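Noise analysis often starts with a robust estimate of the noise level. One common approach, shown below as a simplified stand-in for whatever analysis the production pipeline uses, takes the median absolute difference between neighboring pixels. For independent Gaussian noise with standard deviation σ, these differences have standard deviation σ√2, and the median of their absolute values is about 0.6745 times that. The estimate assumes a mostly flat region; real texture inflates it.

```python
import math
import random
from statistics import median


def estimate_noise_sigma(row: list[float]) -> float:
    """Robust noise estimate from neighbor differences: median|d| / (0.6745 * sqrt(2))."""
    diffs = [abs(b - a) for a, b in zip(row, row[1:])]
    return median(diffs) / (0.6745 * math.sqrt(2))


def noisy_flat_row(level: float, sigma: float, n: int, seed: int = 0) -> list[float]:
    """Synthetic test data: a flat gray row plus Gaussian noise."""
    rng = random.Random(seed)
    return [level + rng.gauss(0.0, sigma) for _ in range(n)]


# Prints a value close to the true sigma of 5.0
print(round(estimate_noise_sigma(noisy_flat_row(128, 5.0, 10_000)), 1))
```

Using the median rather than the standard deviation keeps occasional edges or glitches in the row from dominating the estimate.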

After detail enhancement, colors are corrected. HDR is widely used in 4K/8K video, and many sources without HDR can be converted from SDR to HDR to deliver a vivid, high-resolution 4K picture.
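At its core, SDR-to-HDR conversion re-encodes luminance with an HDR transfer function. The sketch below is deliberately simplified: it decodes an SDR code value with a BT.1886-style 2.4 gamma, maps SDR reference white to 203 nits (the level suggested in ITU-R BT.2408), and encodes the result with the SMPTE ST 2084 (PQ) curve. Real conversions also expand highlights (inverse tone mapping) and convert BT.709 colors to the BT.2020 gamut, both of which this sketch omits.

```python
# SMPTE ST 2084 (PQ) constants
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits: float) -> float:
    """Absolute luminance in cd/m^2 (0..10000) -> normalized PQ signal (0..1)."""
    y = max(nits, 0.0) / 10000.0
    ym1 = y ** M1
    return ((C1 + C2 * ym1) / (1.0 + C3 * ym1)) ** M2


def sdr_to_pq(code: float, sdr_white_nits: float = 203.0, gamma: float = 2.4) -> float:
    """Normalized SDR code value (0..1) -> PQ signal, without highlight expansion."""
    linear = min(max(code, 0.0), 1.0) ** gamma
    return pq_encode(linear * sdr_white_nits)


print(round(pq_encode(10000), 4))  # 1.0
print(round(sdr_to_pq(1.0), 2))    # 0.58: SDR white sits a bit above mid-scale in PQ
```

Because SDR white lands around 58% of the PQ range, the remaining signal range is left free for the highlights that an inverse tone-mapping step would create.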

For video super-resolution, a single model cannot achieve the ideal result. Instead, a general model is applied to the background or the whole image, and a dedicated model is applied to areas containing faces and text. The outputs of the two models are then combined to produce the final enhanced image. Because facial structure is consistent and provides strong prior information for super-resolution, dedicated face enhancement can significantly improve the viewing experience.
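Combining the two super-resolution outputs typically comes down to a masked blend: where a face/text mask is 1, take the specialized model's output; where it is 0, take the general model's; soft values in between avoid visible seams. Below is a minimal sketch on plain 2-D lists, assuming both models have already produced upscaled images of the same size and that the mask (hypothetical here) comes from a face/text detector.

```python
Image = list[list[float]]


def blend_sr_outputs(general: Image, specialized: Image, mask: Image) -> Image:
    """Per-pixel alpha blend: mask * specialized + (1 - mask) * general."""
    if not (len(general) == len(specialized) == len(mask)):
        raise ValueError("images must have the same height")
    out: Image = []
    for g_row, s_row, m_row in zip(general, specialized, mask):
        if not (len(g_row) == len(s_row) == len(m_row)):
            raise ValueError("images must have the same width")
        out.append([m * s + (1.0 - m) * g for g, s, m in zip(g_row, s_row, m_row)])
    return out


general = [[10.0, 10.0, 10.0]]
face_sr = [[30.0, 30.0, 30.0]]
mask = [[0.0, 0.5, 1.0]]  # soft edge between background and face
print(blend_sr_outputs(general, face_sr, mask))  # [[10.0, 20.0, 30.0]]
```

Feathering the mask edges is what keeps the transition between the two models' outputs from showing up as a visible boundary.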
