Hello, my name is Teakowa. This article describes how we at XNXK built an automated publishing pipeline for our web front end on GitHub Actions, and the problems we ran into along the way.

Some Prerequisites

All of our development happens on GitHub. If you want CI/CD on GitHub in 2022, I see no reason not to recommend GitHub Actions: it is convenient, integrates natively with GitHub, has an active community, and is simple to configure (mostly). It is the best fit for us at the moment.

Our release process is fairly simple: a PR is released as soon as it is merged.

Automated versioning

Before the production release there is one more step: generating the version automatically. All of our projects follow the Semantic Versioning specification for releases, and we use semantic-release to automate the process. Whenever a pull request is merged into the main branch (or one of the rc, beta, and alpha prerelease branches), the Release workflow runs and creates the new tag and release according to the rules.
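To make "according to the rules" concrete: under semantic-release's default release rules, a `feat` commit produces a minor release, `fix` and `perf` produce a patch release, a breaking change produces a major release, and types such as `docs` or `chore` produce no release at all. The Python sketch below is a simplified model of that decision under those assumptions only; it is not semantic-release's actual code, and it ignores reverts and prerelease channels such as beta.

```python
import re

# Simplified Conventional Commits header: type(scope)!: subject
HEADER = re.compile(r"^(?P<type>\w+)(?:\([^)]*\))?(?P<bang>!)?: ")

# Rank each bump so the largest one among the commits wins.
RANK = {None: 0, "patch": 1, "minor": 2, "major": 3}


def bump_for(message: str):
    """Return 'major', 'minor', 'patch' or None for one commit message."""
    header = message.splitlines()[0] if message else ""
    match = HEADER.match(header)
    if "BREAKING CHANGE:" in message or (match and match.group("bang")):
        return "major"
    if not match:
        return None  # not a conventional commit: no release
    if match.group("type") == "feat":
        return "minor"
    if match.group("type") in ("fix", "perf"):
        return "patch"
    return None  # docs, chore, ci, ... do not trigger a release


def next_version(last: str, messages: list):
    """Apply the highest bump found in the commits to the last version."""
    bump = max((bump_for(m) for m in messages), key=lambda b: RANK[b], default=None)
    if bump is None:
        return None  # nothing releasable since the last tag
    major, minor, patch = (int(part) for part in last.split("."))
    if bump == "major":
        return f"{major + 1}.0.0"
    if bump == "minor":
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"


print(next_version("1.4.2", ["fix: handle empty list", "feat(ui): add dark mode"]))  # → 1.5.0
print(next_version("1.4.2", ["docs: update README"]))  # → None
```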

.github/workflows/release.yml

```yaml
name: Release

on:
  push:
    branches:
      - main
      - rc
      - beta
      - alpha
  workflow_dispatch:

jobs:
  release:
    name: Release
    uses: XNXKTech/workflows/.github/workflows/release.yml@main
    secrets:
      CI_PAT: ${{ secrets.CI_PAT }}
```

Here we call one of GitHub's reusable workflows. The complete reusable workflow looks like this:

```yaml
name: Release

on:
  workflow_call:
    outputs:
      new_release_published:
        description: "New release published"
        value: ${{ jobs.release.outputs.new_release_published }}
      new_release_version:
        description: "New release version"
        value: ${{ jobs.release.outputs.new_release_version }}
      new_release_major_version:
        description: "New major version"
        value: ${{ jobs.release.outputs.new_release_major_version }}
      new_release_minor_version:
        description: "New minor version"
        value: ${{ jobs.release.outputs.new_release_minor_version }}
      new_release_patch_version:
        description: "New patch version"
        value: ${{ jobs.release.outputs.new_release_patch_version }}
      new_release_channel:
        description: "New release channel"
        value: ${{ jobs.release.outputs.new_release_channel }}
      new_release_notes:
        description: "New release notes"
        value: ${{ jobs.release.outputs.new_release_notes }}
      last_release_version:
        description: "Last release version"
        value: ${{ jobs.release.outputs.last_release_version }}
    inputs:
      semantic_version:
        required: false
        description: Semantic version
        default: '19'
        type: string
      extra_plugins:
        required: false
        description: Extra plugins
        default: |
          @semantic-release/changelog
          @semantic-release/git
          conventional-changelog-conventionalcommits
        type: string
      dry_run:
        required: false
        description: Dry run
        default: 'false'
        type: string
    secrets:
      CI_PAT:
        required: false
      GH_TOKEN:
        description: 'Personal access token passed from the caller workflow'
        required: false
      NPM_TOKEN:
        # Not required: callers such as the one above pass only CI_PAT
        required: false

# Prefer GH_TOKEN when the caller passes it, otherwise fall back to CI_PAT
env:
  GITHUB_TOKEN: ${{ secrets.GH_TOKEN == '' && secrets.CI_PAT || secrets.GH_TOKEN }}
  GH_TOKEN: ${{ secrets.GH_TOKEN == '' && secrets.CI_PAT || secrets.GH_TOKEN }}
  token: ${{ secrets.GH_TOKEN == '' && secrets.CI_PAT || secrets.GH_TOKEN }}

jobs:
  release:
    name: Release
    runs-on: ubuntu-latest
    outputs:
      new_release_published: ${{ steps.semantic.outputs.new_release_published }}
      new_release_version: ${{ steps.semantic.outputs.new_release_version }}
      new_release_major_version: ${{ steps.semantic.outputs.new_release_major_version }}
      new_release_minor_version: ${{ steps.semantic.outputs.new_release_minor_version }}
      new_release_patch_version: ${{ steps.semantic.outputs.new_release_patch_version }}
      new_release_channel: ${{ steps.semantic.outputs.new_release_channel }}
      new_release_notes: ${{ steps.semantic.outputs.new_release_notes }}
      last_release_version: ${{ steps.semantic.outputs.last_release_version }}
    steps:
      - name: Checkout
        uses: actions/checkout@v3.0.2
        with:
          persist-credentials: false
          fetch-depth: 0  # semantic-release needs the full history and tags

      - name: Use remote configuration
        # github.repository_owner is set for every trigger event
        if: contains(fromJson('["XNXKTech", "StarUbiquitous", "terraform-xnxk-modules"]'), github.repository_owner)
        run: |
          wget -qO- https://raw.githubusercontent.com/XNXKTech/workflows/main/release/.releaserc.json > .releaserc.json

      - name: Semantic Release
        uses: cycjimmy/semantic-release-action@v2
        id: semantic
        with:
          semantic_version: ${{ inputs.semantic_version }}
          extra_plugins: ${{ inputs.extra_plugins }}
          dry_run: ${{ inputs.dry_run }}
        env:
          GITHUB_TOKEN: ${{ env.GH_TOKEN }}
          NPM_TOKEN: ${{ secrets.NPM_TOKEN }}
```
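GitHub Actions expressions have no ternary operator, so the `env` block above uses the `condition && a || b` idiom: it resolves to `CI_PAT` when the caller passes no `GH_TOKEN`, and to `GH_TOKEN` otherwise. The Python sketch below models the operator semantics this relies on (`&&` and `||` return one of their operands rather than a boolean, and `''`, `0`, `false`, and `null` are falsy); it is an illustration, not GitHub's evaluator. A personal access token matters here beyond permissions: events created with the repository's built-in `GITHUB_TOKEN` do not start new workflow runs, so a release published with it would never trigger the Production workflow described below.

```python
def is_truthy(value) -> bool:
    """GitHub expression truthiness: false, 0, '' and null are falsy."""
    return value not in (False, 0, "", None)


def and_(left, right):
    # `left && right` returns an operand, not a boolean:
    # right when left is truthy, otherwise left.
    return right if is_truthy(left) else left


def or_(left, right):
    # `left || right`: left when it is truthy, otherwise right.
    return left if is_truthy(left) else right


def pick_token(gh_token: str, ci_pat: str) -> str:
    """Model of: secrets.GH_TOKEN == '' && secrets.CI_PAT || secrets.GH_TOKEN

    Unset secrets evaluate to ''. Python evaluates both operands eagerly,
    which is harmless here because nothing has side effects.
    """
    return or_(and_(gh_token == "", ci_pat), gh_token)


print(pick_token("", "pat-123"))        # → pat-123 (no GH_TOKEN: use CI_PAT)
print(pick_token("gh-456", "pat-123"))  # → gh-456 (GH_TOKEN wins when passed)
```

One caveat of the idiom: if the middle operand is itself empty (neither secret set), evaluation falls through to the last operand and the token is simply empty.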

With a reusable workflow we maintain a single workflow that every repository reuses, which cuts our maintenance costs. After all, once you have more than a few repositories, editing the workflow in each one by hand is painful 😑

It also unifies the .releaserc.json used by every repository, further reducing maintenance costs.

If the chance comes up, we'll write more about our reusable workflow practices, so stay tuned.

Automatic publishing

Let's go through the complete workflow, starting with its triggers. Our Production workflow is triggered by the `release` event, which GitHub delivers via webhook without any action on our part. We also add the `workflow_dispatch` event so that we can run the Production workflow manually:

.github/workflows/production.yml

```yaml
name: Production

on:
  release:
    types: [ published ]
  workflow_dispatch:
```

Build

First comes the Build job, which compiles the front-end static assets for the jobs that follow:

```yaml
jobs:
  build:
    name: Build
    runs-on: ubuntu-latest
    timeout-minutes: 10
    strategy:
      matrix:
        node: [16]  # assumed Node.js version; the steps below read it via matrix.node
    steps:
      - name: Split string
        uses: jungwinter/split@v2
        id: split
        with:
          separator: '/'
          msg: ${{ github.ref }}

      - name: Checkout ${{ steps.split.outputs._2 }}
        uses: actions/checkout@v3
        with:
          ref: ${{ steps.split.outputs._2 }}

      # No npm cache here: it would fail without a package-lock.json
      - name: Setup Node ${{ matrix.node }}
        uses: actions/setup-node@v3.3.0
        with:
          node-version: ${{ matrix.node }}

      - name: Setup yarn
        run: npm install -g yarn

      - name: Setup Nodejs with yarn caching
        uses: actions/setup-node@v3.3.0
        with:
          node-version: ${{ matrix.node }}
          cache: yarn

      - name: Cache dependencies
        uses: actions/cache@v3.0.4
        id: node-modules-cache
        with:
          path: |
            node_modules
          key: ${{ runner.os }}-yarn-${{ hashFiles('**/yarn.lock') }}
          restore-keys: |
            ${{ runner.os }}-yarn

      - name: Install package.json dependencies with Yarn
        if: steps.node-modules-cache.outputs.cache-hit != 'true'
        run: yarn --frozen-lockfile
        env:
          PUPPETEER_SKIP_CHROMIUM_DOWNLOAD: true
          HUSKY_SKIP_INSTALL: true

      - name: Cache build
        uses: actions/cache@v3.0.4
        id: build-cache
        with:
          path: |
            build
          key: ${{ runner.os }}-build-${{ github.sha }}

      - name: Build
        if: steps.build-cache.outputs.cache-hit != 'true'
        run: yarn build
```

A few parts deserve a closer look. First, our checkout steps differ from the usual setup, which is often just a single checkout:

```yaml
- uses: actions/checkout@v2
```

But we ran into a small problem: by default, checkout uses the default branch configured in the repository, and some of our CD workflows combine `workflow_dispatch` with events such as `push` and `release`. We can't rely on the default parameters; we have to pass in a `ref` to make sure the action checks out what we expect. Our first attempt only supported `release`:

```yaml
- name: Get the version
  id: get_version
  run: echo ::set-output name=VERSION::${GITHUB_REF/refs\/tags\//}
```

However, this turned out not to work for other events: their refs don't start with `refs/tags/`, so whenever the triggering event is not a release we don't get the ref we expect.

`$GITHUB_REF` is always available, but its format depends on the event that triggered the workflow.

For example, `workflow_dispatch` lets you pick either a branch or a tag when running manually, so `$GITHUB_REF` may be `refs/heads/main` or `refs/tags/v1.111.1-beta.4`.

So in the end I went with the brute-force approach: since `$GITHUB_REF` exists for every event, just split it:

```yaml
- name: Split string
  uses: jungwinter/split@v2
  id: split
  with:
    separator: '/'
    msg: ${{ github.ref }}
```

I don't care whether the result is `main` or `v1.111.1`; either way we check out exactly the ref we expect:

```yaml
- name: Checkout ${{ steps.split.outputs._2 }}
  uses: actions/checkout@v3
  with:
    ref: ${{ steps.split.outputs._2 }}
```
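The split step keeps only the third `/`-separated segment of `github.ref` (output `_2`, counting from zero). The Python sketch below reproduces that behaviour for the refs mentioned above and shows one edge case worth knowing: a branch whose name itself contains `/` gets truncated. GitHub also exposes `github.ref_name`, the short ref name, which keeps such names whole; `short_ref_name` here is a hypothetical helper that only approximates it by stripping the known prefixes.

```python
def split_ref(ref: str) -> str:
    """Mimic the split step: split github.ref on '/' and take output _2."""
    parts = ref.split("/")
    # _2 is the third segment (zero-based index 2), or empty if there is none
    return parts[2] if len(parts) > 2 else ""


def short_ref_name(ref: str) -> str:
    """Hypothetical helper approximating github.ref_name: strip only the prefix."""
    for prefix in ("refs/heads/", "refs/tags/", "refs/pull/"):
        if ref.startswith(prefix):
            return ref[len(prefix):]
    return ref


for ref in ("refs/heads/main", "refs/tags/v1.111.1-beta.4", "refs/heads/feature/login"):
    print(f"{ref:30} split: {split_ref(ref):20} short name: {short_ref_name(ref)}")
```

The last ref is the one to watch: the split step would check out `feature` instead of `feature/login`, while the prefix-stripping approach keeps the full branch name.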

Next come caching and compilation. Since the Build job is only responsible for compiling, all we need to do is cache its output and let the subsequent jobs reuse it through actions/cache.

Using the cache efficiently is important, and the Build job relies on it in two key places:

- Project dependencies

- Compiled files

Project dependencies

As we all know, front-end dependencies are a problem of cosmic proportions.

We can't reinstall all project dependencies on every build; that would make every workflow run very long (and the bill just as long).

That's why the Build job sets up a dependency cache:

```yaml
- name: Cache dependencies
  uses: actions/cache@v3.0.4
  id: node-modules-cache
  with:
    path: |
      node_modules
    key: ${{ runner.os }}-yarn-${{ hashFiles('**/yarn.lock') }}
    restore-keys: |
      ${{ runner.os }}-yarn

- name: Install package.json dependencies with Yarn
  if: steps.node-modules-cache.outputs.cache-hit != 'true'
  run: yarn --frozen-lockfile
  env:
    PUPPETEER_SKIP_CHROMIUM_DOWNLOAD: true
    HUSKY_SKIP_INSTALL: true
```

A quick rundown of the rules actions/cache works under:

- Up to 10 GB of cache storage per repository

- No limit on the number of cache keys

- A cache entry that is not accessed for 7 days is deleted

- If the total size exceeds the limit, GitHub saves the new cache and evicts older ones until the total is back under the limit

With rules like these, we can in theory keep reusing a cache indefinitely across multiple workflows!

The `path`, `key`, and `restore-keys` settings are chosen to maximize reuse of the dependency cache, and `key` and `restore-keys` are the critical ones:

- `key`: the key used to restore and save the cache.

- `restore-keys`: if `key` gets no exact cache hit, the action searches the provided restore keys in order and restores the first match; in that case, `cache-hit` returns `false`.

The key is the basis for making sure we can get the most out of the cache. For yarn or npm, it's just a matter of hashing the lock file and setting the cache key:

`${{ runner.os }}-yarn-${{ hashFiles('**/yarn.lock') }}`

If the lock file's hash hasn't changed, we hit the cache directly. But if the hash has changed and there is no hit, are we left with no node_modules at all?

No. Because `restore-keys` is set to `${{ runner.os }}-yarn`, even when `key` misses we can still restore most of the dependencies from the most recent cache whose key starts with that prefix.

In that case `cache-hit` returns `false`, so the yarn install step runs, but yarn only has to install the dependencies that changed. Even without a cache hit, we never reinstall every dependency from scratch.
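The lookup order described above can be sketched as a small Python model: an exact `key` match is the only real hit, and otherwise each restore key is tried in order as a prefix, taking the newest matching entry. This is a simplified illustration that ignores details such as branch scoping; the `CacheEntry` type and the `Linux-yarn-aaa`-style keys are made-up placeholders standing in for real `hashFiles('**/yarn.lock')` values.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CacheEntry:
    key: str
    created_at: int  # larger means newer; stands in for a timestamp


def restore(entries: List[CacheEntry], key: str,
            restore_keys: List[str]) -> Tuple[Optional[CacheEntry], bool]:
    """Simplified actions/cache lookup: returns (entry restored, cache_hit)."""
    # 1. An exact match on `key` is the only case that counts as a cache hit.
    for entry in entries:
        if entry.key == key:
            return entry, True
    # 2. Otherwise try each restore key in order as a prefix,
    #    taking the newest entry that matches.
    for prefix in restore_keys:
        matches = [e for e in entries if e.key.startswith(prefix)]
        if matches:
            return max(matches, key=lambda e: e.created_at), False
    # 3. Nothing matched: start from an empty node_modules.
    return None, False


caches = [
    CacheEntry("Linux-yarn-aaa", created_at=1),  # placeholder lock-file hashes
    CacheEntry("Linux-yarn-bbb", created_at=2),
    CacheEntry("Linux-build-1f3c", created_at=3),
]

entry, hit = restore(caches, "Linux-yarn-ccc", ["Linux-yarn"])
# hit is False, so the install step runs, but it starts from Linux-yarn-bbb.
print(entry.key, hit)  # → Linux-yarn-bbb False
```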

These two steps appear in the CI process of every one of our projects (the details vary by language, but the goal is always to let multiple workflows share the cache). For example, our Build, ESLint, and Test workflows all contain them, and those CI workflows run during PR review, so the dependency cache is usually already populated by the PR.

When the cache hits, the action restores node_modules straight into the project directory and the install step is skipped.

Because we only update dependencies periodically, a single dependency cache can be used for a very long time. The result is a highly reusable dependency cache (and short bills).

Compiled files

The project's compiled static assets pose a similar problem. As with the dependency cache, we want to build once and reuse the output across jobs to avoid unnecessary build time, so we cache the build output too:

```yaml
- name: Cache build
  uses: actions/cache@v3.0.4
  id: build-cache
  with:
    path: |
      build
    key: ${{ runner.os }}-build-${{ github.sha }}

- name: Build
  if: steps.build-cache.outputs.cache-hit != 'true'
  run: yarn build
```

Note that there are no `restore-keys` here. Each build may include new changes that produce different output filenames, and unlike dependencies there is no lock file we can hash to decide whether an old build is still valid. So we drop `restore-keys` entirely and use the Git SHA as the build cache key; all we need is for the later jobs in this run, and any re-run of the workflow, to be able to reuse the build.
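Why filenames drift between builds: front-end bundlers commonly embed a content hash in output filenames, so any code change yields new names. The Python sketch below uses hypothetical `hashed_name` and `build` helpers (SHA-256, first 8 hex characters; real bundlers use their own hashing schemes) to show that two builds of slightly different code share only the unchanged files. A build restored by prefix from an older commit would carry orphaned files like these, and nothing comparable to a lock-file hash tells us in advance whether it matches the current code, which is why the exact commit SHA is the safer key.

```python
import hashlib


def hashed_name(stem: str, content: str, ext: str = "js") -> str:
    """Hypothetical content-hashed filename, e.g. main.<8 hex chars>.js."""
    digest = hashlib.sha256(content.encode("utf-8")).hexdigest()[:8]
    return f"{stem}.{digest}.{ext}"


def build(sources: dict) -> set:
    """Pretend build: one hashed output file per source module."""
    return {hashed_name(stem, content) for stem, content in sources.items()}


v1 = build({"main": "console.log('v1')", "vendor": "/* react */"})
v2 = build({"main": "console.log('v2')", "vendor": "/* react */"})

# Files from the older build that the new build no longer produces.
stale = v1 - v2
print(len(stale))  # → 1
```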

With that, the Build job's work is done, and the next step is deployment.

TCB

All of our front-end projects are deployed to both TCB (Tencent CloudBase) and Kubernetes. TCB serves most user traffic and Kubernetes acts as a hot standby: when we find a problem with the version on TCB, we switch the CDN over to Kubernetes directly so that users are affected as little as possible.

The TCB deployment uses a reusable workflow and runs on our own self-hosted GitHub Actions runner:

```yaml
cloudbase:
  name: TCB
  needs:
    - build
  uses: XNXKTech/workflows/.github/workflows/cloudbase.yml@main
  with:
    runs-on: "['self-hosted']"
    environment: Production
    environment_url: https://demo.xnxktech.net
  secrets:
    SECRET_ID: ${{ secrets.TCB_SECRET_ID }}
    SECRET_KEY: ${{ secrets.TCB_SECRET_KEY }}
    ENV_ID: ${{ secrets.ENV_ID }}
```

We won't show the full TCB reusable workflow here.

Why not use TCB's official action?

As mentioned earlier, one reason we use GitHub Actions is its active community: plenty of Actions on GitHub can be used directly, and Actions can even be run from Docker containers.

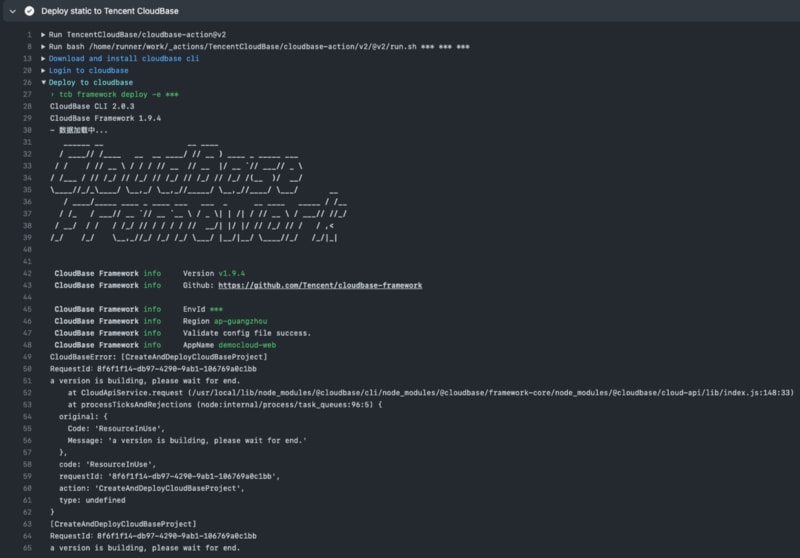

TCB officially provides cloudbase-action for deploying to TCB, but we ran into some problems using it:

- Every run has to download @cloudbase/cli. Frankly, I don't want to spend that time.

- Failed deployments don't report any error.

- Deployment is sometimes very, very slow. This isn't TCB's fault: GitHub Actions' machines are all in North America. Pushing to China is fine during the day, but at night it crawls, whether because of international bandwidth throttling or something else. Once we waited half an hour for a release and it still hadn't reached China…

These problems are why we packaged our own TCB action. Our solution is to run our own runner in Hong Kong and build the TCB tooling into the runner's system image, which keeps TCB deployments fast.

With this setup, a TCB deployment takes about 1 minute.

Kubernetes

```yaml
deploy:
  name: Kubernetes
  needs:
    - build
  uses: XNXKTech/workflows/.github/workflows/deploy-k8s.yml@main
  with:
    environment: Production
    environment_url: https://prod.example.com
  secrets:
    K8S_CONFIG: ${{ secrets.K8S_CONFIG }}
```

Our Kubernetes deployment is also a reusable workflow. Building the Docker image happens in a separate workflow (and that's a story for another day), so it isn't covered here.

Static

Our front-end projects include a large number of static files (JS, CSS, images), which we deploy to a dedicated CDN domain:

```yaml
static:
  name: Static
  needs:
    - build
  uses: XNXKTech/workflows/.github/workflows/uptoc.yml@main
  with:
    cache_dir: build
    cache_key: build
    dist: build/static
    saveroot: ./static
    bucket: qc-frontend-assets-***
  secrets:
    UPTOC_UPLOADER_AK: ${{ secrets.TENCENTCLOUD_COS_SECRET_ID }}
    UPTOC_UPLOADER_SK: ${{ secrets.TENCENTCLOUD_COS_SECRET_KEY }}
```

As you might expect, this is also a reusable workflow. It uses the Action provided by saltbo/uptoc to upload the static files to object storage, where the CDN serves them.

CDN

Once the TCB, Kubernetes, and Static jobs have all succeeded, the workflow moves on to refreshing the CDN. We use the CDN component provided by Serverless, configured as follows:

```yaml
app: app
stage: prod
component: cdn
name: cdn

inputs:
  area: mainland
  domain: prod.example.com
  origin:
    origins:
      - prod-example.tcloudbaseapp.com
    originType: domain
    originPullProtocol: http
  onlyRefresh: true
  refreshCdn:
    urls:
      - https://prod.example.com/
```

```yaml
cdn:
  runs-on: ubuntu-latest
  needs:
    - deploy
    - cloudbase
    - static
  name: Refresh CDN
  steps:
    - name: Checkout
      uses: actions/checkout@v2.4.0

    - name: Setup Serverless
      uses: teakowa/setup-serverless@v2
      with:
        provider: tencent
      env:
        TENCENT_APPID: ${{ secrets.TENCENTCLOUD_APP_ID }}
        TENCENT_SECRET_ID: ${{ secrets.TENCENTCLOUD_SLS_SECRET_ID }}
        TENCENT_SECRET_KEY: ${{ secrets.TENCENTCLOUD_SLS_SECRET_KEY }}
        SERVERLESS_PLATFORM_VENDOR: tencent

    - name: Refresh CDN
      run: sls deploy
```

The Serverless configuration defines our CDN with `onlyRefresh: true`, so `sls deploy` performs a refresh-only operation on the CDN instead of redeploying it, making sure the new version reaches users as soon as possible.

Notification

When all of the preceding jobs succeed, the final job sends the result of the whole Production run to our channel through a Lark bot:

```yaml
notification:
  name: Deploy notification
  needs:
    - cdn
  uses: XNXKTech/workflows/.github/workflows/lark-notification.yml@main
  with:
    stage: Production
  secrets:
    LARK_WEBHOOK_URL: ${{ secrets.SERVICE_UPDATES_ECHO_LARK_BOT_HOOK }}
```
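The lark-notification reusable workflow isn't shown, so as a rough illustration only: a Lark (Feishu) custom bot accepts a JSON `POST` to its webhook URL, and the simplest message type is plain text. The Python sketch below assumes that format; `build_lark_message`, `send`, the `run_url` parameter, and the message wording are hypothetical and not taken from our workflow.

```python
import json
import urllib.request


def build_lark_message(stage: str, result: str, run_url: str) -> dict:
    """Plain-text payload in the Lark/Feishu custom-bot format (hypothetical wording)."""
    return {
        "msg_type": "text",
        "content": {"text": f"[{stage}] deployment {result}: {run_url}"},
    }


def send(webhook_url: str, payload: dict) -> int:
    """POST the payload as JSON to the bot webhook; returns the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

In a workflow step, assuming the `LARK_WEBHOOK_URL` secret is exposed to the step as an environment variable, the call would look like `send(os.environ["LARK_WEBHOOK_URL"], build_lark_message("Production", "succeeded", run_url))`.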

With that, the entire Production release is complete.
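The `needs:` keys turn the Production workflow into a small dependency graph. To make the execution order explicit, the Python sketch below (standard-library `graphlib`, Python 3.9+) groups the jobs from the snippets above into waves that can run in parallel. It models only `needs` and assumes every job succeeds; it says nothing about runner availability or timing.

```python
from graphlib import TopologicalSorter

# Job -> the jobs listed under its `needs:` key in the Production workflow
NEEDS = {
    "build": [],
    "cloudbase": ["build"],
    "deploy": ["build"],
    "static": ["build"],
    "cdn": ["deploy", "cloudbase", "static"],
    "notification": ["cdn"],
}


def stages(needs: dict) -> list:
    """Group jobs into waves: every job in a wave can run in parallel."""
    sorter = TopologicalSorter(needs)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


for wave in stages(NEEDS):
    print(" + ".join(wave))
# prints:
# build
# cloudbase + deploy + static
# cdn
# notification
```

One consequence worth noting: by default a job is skipped when any job it needs fails (unless it uses a condition such as `if: always()`), so as written the notification job only reports successful runs.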

End

That's our practice for front-end releases. Today, every one of our front-end projects goes live within about 5 minutes (including CDN node refreshes), so users see our changes sooner, and unifying the CI/CD process of all front-end projects through reusable workflows also reduces the team's maintenance costs.

However, I personally think a few small problems remain:

- The Production workflow as a whole could run faster still. For example, we could move more jobs onto our self-hosted runner and bake some of our hard dependencies into its image to save action setup time.

- Engineers have limited means to intervene in the live environment. For example, if something goes wrong in production and you need to shift traffic or switch versions, GitHub Actions doesn't make that easy.

Written by XNXK's Teakowa in Chengdu
