You’ve got a problem to solve, and you’ve turned to Google Cloud Platform to build and host your solution, following GCP security best practices along the way. You create your account and are all set to brew some coffee and sit down at your workstation to architect, code, build, and deploy. Except… you aren’t.

There are many knobs you must tweak and practices to put into action if you want your solution to be operational, secure, reliable, performant, and cost-effective. And the best time to do that is now: right from the beginning, before you start to design and engineer.

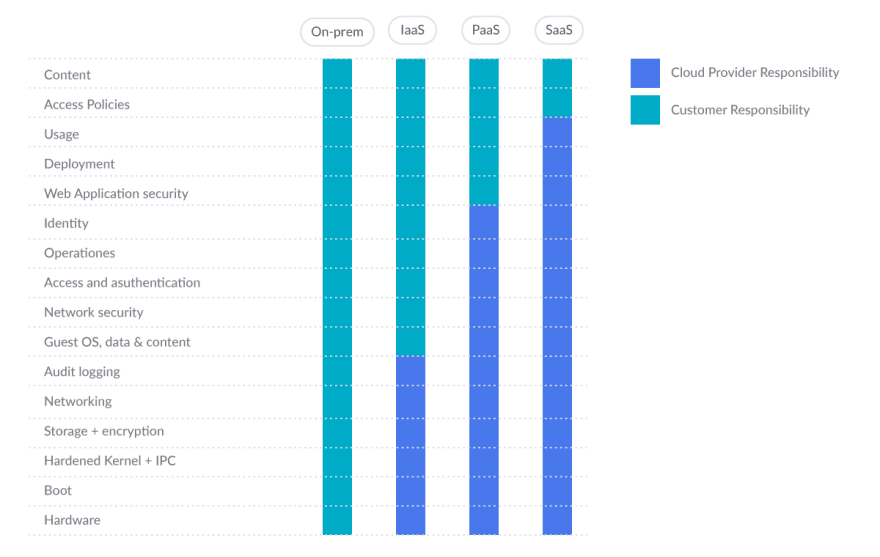

GCP shared responsibility model

The scope of Google Cloud products and services ranges from conventional Infrastructure as a Service (IaaS) to Platform as a Service (PaaS) and Software as a Service (SaaS). As shown in the figure, the traditional boundaries of responsibility between users and cloud providers change based on the service they choose.

At the very least, as part of their shared responsibility for security, public cloud providers need to give you a solid and secure foundation. Providers also need to empower you to understand and implement your own part of the shared responsibility model.

Initial setup GCP security best practices

First, a word of caution: Never use a non-corporate account.

Instead, use a fully managed corporate Google account to improve visibility, auditing, and control of access to Cloud Platform resources. Don’t use email accounts outside of your organization, such as personal accounts, for business purposes.

Cloud Identity is a stand-alone Identity-as-a-Service (IDaaS) that gives Google Cloud users access to many of the identity management features that Google Workspace provides. Google Workspace, in turn, is Google’s suite of secure, cloud-native collaboration and productivity applications. Through the Cloud Identity management layer, you can enable or disable access to various Google solutions for members of your organization, including Google Cloud Platform (GCP).

Signing up for Cloud Identity also creates an organizational node for your domain. This helps you map your corporate structure and controls to Google Cloud resources through the Google Cloud resource hierarchy.

Next, activate Multi-Factor Authentication (MFA): it is the most important thing you can do. If you want a security-first mindset, enable it for every user account you create, and treat it as mandatory for administrators. MFA, along with strong passwords, is the most effective way to protect users’ accounts against improper access.

Now that you are set, let’s dig into the GCP security best practices.

Identity and Access Management (IAM)

GCP Identity and Access Management (IAM) helps enforce least privilege access control to your cloud resources. You can use IAM to restrict who is authenticated (signed in) and authorized (has permissions) to use resources.

1. Ensure that MFA is enabled for all user accounts

Multi-factor authentication requires more than one mechanism to authenticate a user. This protects user logins from attackers exploiting stolen or weak credentials. By default, multi-factor authentication is not enforced.

For each Google Cloud Platform project, folder, or organization, make sure that multi-factor authentication is enabled for every account, and set it up for any account where it is not.

2. Ensure Security Key enforcement for admin accounts

GCP users with Organization Administrator roles have the highest level of privilege in the organization.

These accounts should be protected with the strongest form of two-factor authentication: Security Key Enforcement. Ensure that admins log in with Security Keys instead of weaker second factors, such as SMS or one-time passwords (OTP). Security Keys are physical hardware keys used to access Google Organization Administrator accounts. Instead of a code, they send a cryptographic signature that is bound to the site being visited, so logins cannot be phished.

Identify users with Organization Administrator privileges:

gcloud organizations get-iam-policy ORGANIZATION\_ID

Look for members granted the role “roles/resourcemanager.organizationAdmin”, then manually verify that Security Key Enforcement is enabled for each of those accounts. By default, it is not enabled for Organization Administrators, so if it is missing, take it seriously and enable it immediately.
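To avoid scanning the policy by eye, a small Python helper can pull out the admins. This is a sketch that assumes you saved the policy as JSON, for example with `gcloud organizations get-iam-policy ORGANIZATION_ID --format=json > org_policy.json`. The file name and the function names are illustrative, not part of any Google tool.

```python
import json

ORG_ADMIN_ROLE = "roles/resourcemanager.organizationAdmin"


def members_with_role(policy: dict, role: str = ORG_ADMIN_ROLE) -> list[str]:
    """Return the sorted, de-duplicated members bound to `role` in an IAM policy."""
    found: set[str] = set()
    for binding in policy.get("bindings", []):
        if binding.get("role") == role:
            found.update(binding.get("members", []))
    return sorted(found)


def load_policy(path: str) -> dict:
    """Load a policy saved with `gcloud ... get-iam-policy ... --format=json > path`."""
    with open(path) as fh:
        return json.load(fh)
```

For example, `members_with_role(load_policy("org_policy.json"))` returns entries such as `user:alice@example.com`. Each of those accounts still needs the manual Security Key check described above.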

If an organization administrator loses access to their security key, the user may not be able to access their account. For this reason, it is important to configure backup security keys.

Other GCP security IAM best practices include:

- Service accounts should not have Admin privileges.

- IAM users should not be assigned the Service Account User or Service Account Token Creator roles at project level.

- User-managed / external keys for service accounts should be rotated every 90 days or less.

- Separation of duties should be enforced while assigning service account related roles to users.

- Separation of duties should be enforced while assigning KMS related roles to users.

- API keys should not be created for a project.

- API keys should be restricted to use by only specified Hosts and Apps.

- API keys should be restricted to only APIs that the application needs access to.

- API keys should be rotated every 90 days or less.
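The 90-day rotation rule for user-managed service account keys is easy to check from the key list. The sketch below assumes the JSON returned by `gcloud iam service-accounts keys list --iam-account=SA_EMAIL --format=json`, where each entry is assumed to carry `name`, `keyType` (`USER_MANAGED` or `SYSTEM_MANAGED`), and a `validAfterTime` timestamp marking when the key was created. The helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone

MAX_KEY_AGE = timedelta(days=90)


def parse_rfc3339(ts: str) -> datetime:
    """Parse timestamps like '2024-01-31T12:00:00Z' into timezone-aware datetimes."""
    # fromisoformat() only accepts a trailing 'Z' from Python 3.11 on, so normalize it.
    return datetime.fromisoformat(ts.replace("Z", "+00:00"))


def stale_user_managed_keys(keys: list[dict], now: datetime,
                            max_age: timedelta = MAX_KEY_AGE) -> list[str]:
    """Return the names of user-managed keys created more than `max_age` before `now`."""
    stale = []
    for key in keys:
        if key.get("keyType") != "USER_MANAGED":
            continue  # Google-managed (SYSTEM_MANAGED) keys are rotated by Google
        created = parse_rfc3339(key["validAfterTime"])
        if now - created > max_age:
            stale.append(key["name"])
    return stale
```

Pass `datetime.now(timezone.utc)` as `now` in real use. Taking the clock as a parameter keeps the check deterministic and easy to test.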

Key Management Service (KMS)

GCP Cloud Key Management Service (KMS) is a cloud-hosted key management service that lets you manage symmetric and asymmetric encryption keys for your cloud services the same way you would on-premises. It lets you create, use, rotate, and destroy AES-256, RSA 2048, RSA 3072, RSA 4096, EC P256, and EC P384 encryption keys.

3. Check for anonymously or publicly accessible Cloud KMS keys

Granting permissions to allUsers or allAuthenticatedUsers lets anyone access the key. Such access may not be desirable if the key protects sensitive data.

Make sure that anonymous and/or public access to a Cloud KMS encryption key is not allowed. By default, Cloud KMS does not grant access to allUsers or allAuthenticatedUsers.

List all Cloud KMS keys:

gcloud kms keys list --keyring=KEY\_RING\_NAME --location=global --format=json | jq '.\[\].name'

Remove IAM policy binding for a KMS key to remove access to allUsers and allAuthenticatedUsers:

gcloud kms keys remove-iam-policy-binding KEY\_NAME --keyring=KEY\_RING\_NAME --location=global --member=allUsers --role=ROLE

gcloud kms keys remove-iam-policy-binding KEY\_NAME --keyring=KEY\_RING\_NAME --location=global --member=allAuthenticatedUsers --role=ROLE
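The ROLE placeholder in those commands has to match the role that actually grants public access. A sketch that reads a key’s policy (assumed to be saved with `gcloud kms keys get-iam-policy KEY_NAME --keyring=KEY_RING_NAME --location=global --format=json`) and prints one removal command per public binding could look like this. The function name is hypothetical.

```python
import shlex

PUBLIC_MEMBERS = ("allUsers", "allAuthenticatedUsers")


def public_removal_commands(policy: dict, key: str, keyring: str,
                            location: str = "global") -> list[str]:
    """Build one remove-iam-policy-binding command per public (role, member) pair."""
    commands = []
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                args = [
                    "gcloud", "kms", "keys", "remove-iam-policy-binding", key,
                    f"--keyring={keyring}", f"--location={location}",
                    f"--member={member}", f"--role={binding['role']}",
                ]
                # shlex.join quotes any argument that would need it in a POSIX shell.
                commands.append(shlex.join(args))
    return commands
```

Printing the commands instead of running them lets you review each change before it touches the key.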

The following is a Cloud Custodian rule for detecting the existence of anonymously or publicly accessible Cloud KMS keys:

```yaml
policies:
  - name: anonymously-or-publicly-accessible-cloud-kms-keys
    description: |
      It is recommended that the IAM policy on Cloud KMS cryptokeys should
      restrict anonymous and/or public access.
    resource: gcp.kms-cryptokey
    filters:
      - type: iam-policy
        key: "bindings[*].members[]"
        op: intersect
        value: ["allUsers", "allAuthenticatedUsers"]
```

Cloud Storage

Google Cloud Storage lets you store any amount of data in namespaces called “buckets.” These buckets are an appealing target for any attacker who wants to get hold of your data, so you must take great care in securing them.

4. Ensure that Cloud Storage buckets are not anonymously or publicly accessible

Allowing anonymous or public access gives everyone permission to access bucket content. Such access may not be desirable if you are storing sensitive data. Therefore, make sure that anonymous or public access to the bucket is not allowed.

List all buckets in a project:

gsutil ls

Check the IAM Policy for each bucket returned from the above command:

gsutil iam get gs://BUCKET\_NAME

No role should contain allUsers or allAuthenticatedUsers as a member. If any does, remove them with:

gsutil iam ch -d allUsers gs://BUCKET\_NAME

gsutil iam ch -d allAuthenticatedUsers gs://BUCKET\_NAME
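If you prefer to fix the whole policy in one step, you can clean the JSON printed by `gsutil iam get gs://BUCKET_NAME` and write it back with `gsutil iam set clean.json gs://BUCKET_NAME`. The sketch below is one way to do the cleaning. The file name `clean.json` and the function name are illustrative. It keeps the policy’s `etag`, so a concurrent change makes the `set` fail instead of being silently overwritten.

```python
import copy

PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def without_public_members(policy: dict) -> dict:
    """Return a copy of an IAM policy with allUsers/allAuthenticatedUsers removed.

    Bindings left with no members are dropped entirely; other fields (etag, ...) are kept.
    """
    cleaned = copy.deepcopy(policy)  # never mutate the caller's policy
    bindings = []
    for binding in cleaned.get("bindings", []):
        members = [m for m in binding.get("members", []) if m not in PUBLIC_MEMBERS]
        if members:
            binding["members"] = members
            bindings.append(binding)
    cleaned["bindings"] = bindings
    return cleaned
```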

Also, you might want to prevent Storage buckets from becoming publicly accessible by setting up the Domain restricted sharing organization policy.

Compute Engine

Compute Engine provides a secure and customizable compute service that lets you create and run virtual machines on Google’s infrastructure.

5. Ensure VM disks for critical VMs are encrypted with Customer-Supplied Encryption Keys (CSEK)

By default, the Compute Engine service encrypts all data at rest.

Cloud services manage this type of encryption without any additional action from users or applications. However, if you want full control over instance disk encryption, you can provide your own encryption key.

These custom keys, also known as Customer-Supplied Encryption Keys (CSEKs), are used by Compute Engine to protect the Google-generated keys that encrypt and decrypt instance data. Compute Engine does not store your CSEK on its servers and cannot access the protected data unless you supply the key.

At the very least, business-critical VMs should have their disks encrypted with CSEK.

By default, VM disks are encrypted with Google-managed keys. They are not encrypted with Customer-Supplied Encryption Keys.

Currently, there is no way to update the encryption of an existing disk, so you should create a new disk with Encryption set to Customer supplied. A word of caution is necessary here:

⚠️ If you lose your encryption key, you will not be able to recover the data.

In the gcloud compute tool, encrypt a disk using the --csek-key-file flag during instance creation. If you are using an RSA-wrapped key, use the gcloud beta component:

gcloud beta compute instances create INSTANCE\_NAME --csek-key-file=key-file.json

To encrypt a standalone persistent disk use:

gcloud beta compute disks create DISK\_NAME --csek-key-file=key-file.json

It is your duty to generate and manage your key. You must provide a key that is a 256-bit string encoded in RFC 4648 standard base64 to the Compute Engine. A sample key-file.json looks like this:

```json
[
  {
    "uri": "https://www.googleapis.com/compute/v1/projects/myproject/zones/us-central1-a/disks/example-disk",
    "key": "acXTX3rxrKAFTF0tYVLvydU1riRZTvUNC4g5I11NY-c=",
    "key-type": "raw"
  },
  {
    "uri": "https://www.googleapis.com/compute/v1/projects/myproject/global/snapshots/my-private-snapshot",
    "key": "ieCx/NcW06PcT7Ep1X6LUTc/hLvUDYyzSZPPVCVPTVEohpeHASqC8uw5TzyO9U+Fka9JFHz0mBibXUInrC/jEk014kCK/NPjYgEMOyssZ4ZINPKxlUh2zn1bV+MCaTICrdmuSBTWlUUiFoDD6PYznLwh8ZNdaheCeZ8ewEXgFQ8V+sDroLaN3Xs3MDTXQEMMoNUXMCZEIpg9Vtp9x2oeQ5lAbtt7bYAAHf5l+gJWw3sUfs0/Glw5fpdjT8Uggrr+RMZezGrltJEF293rvTIjWOEB3z5OHyHwQkvdrPDFcTqsLfh+8Hr8g+mf+7zVPEC8nEbqpdl3GPv3A7AwpFp7MA==",
    "key-type": "rsa-encrypted"
  }
]
```
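One way to produce a raw key in the required form, a random 256-bit value encoded as RFC 4648 standard base64, is Python’s `secrets` module. The sketch below also writes a key-file entry with the same `uri` / `key` / `key-type` layout as the sample. The helper names are hypothetical. Where and how you store the key afterwards is up to you, and if you lose it, the data is gone.

```python
import base64
import json
import secrets


def new_raw_csek() -> str:
    """Generate a random 256-bit key, encoded as RFC 4648 standard base64."""
    return base64.b64encode(secrets.token_bytes(32)).decode("ascii")


def csek_entry(uri: str, key: str) -> dict:
    """One key-file entry for a raw (not RSA-wrapped) key protecting the resource at `uri`."""
    return {"uri": uri, "key": key, "key-type": "raw"}


def key_file_json(entries: list[dict]) -> str:
    """Serialize entries as the JSON array expected by --csek-key-file."""
    return json.dumps(entries, indent=2)
```

RSA-wrapped keys need an extra wrapping step with Google’s public certificate, which this sketch does not cover.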

Other GCP security best practices for Compute Engine include:

- Ensure that instances are not configured to use the default service account.

- Ensure that instances are not configured to use the default service account with full access to all Cloud APIs.

- Ensure that OS Login is enabled for the project.

- Ensure that IP forwarding is not enabled on instances.

- Ensure Compute instances are launched with Shielded VM enabled.

- Ensure that Compute instances do not have public IP addresses.

- Ensure that App Engine applications enforce HTTPS connections.
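Several of these checks can be read straight off an instance’s configuration. The sketch below assumes the JSON from `gcloud compute instances describe INSTANCE_NAME --zone=ZONE --format=json` and the Compute Engine field names `serviceAccounts`, `canIpForward`, `networkInterfaces[].accessConfigs`, and `shieldedInstanceConfig`. Treating Shielded VM as “enabled” only when all three of its options are on is this sketch’s own assumption. OS Login and App Engine are not covered here.

```python
FULL_ACCESS_SCOPE = "https://www.googleapis.com/auth/cloud-platform"
DEFAULT_SA_SUFFIX = "-compute@developer.gserviceaccount.com"  # PROJECT_NUMBER-compute@...


def instance_findings(instance: dict) -> list[str]:
    """Flag a few of the Compute Engine practices listed above for one instance."""
    findings = []
    for sa in instance.get("serviceAccounts", []):
        if sa.get("email", "").endswith(DEFAULT_SA_SUFFIX):
            findings.append("uses default service account")
            if FULL_ACCESS_SCOPE in sa.get("scopes", []):
                findings.append("default service account has full access to all Cloud APIs")
    if instance.get("canIpForward", False):
        findings.append("IP forwarding enabled")
    # An access config on a network interface is what gives the VM an external IP.
    if any(nic.get("accessConfigs") for nic in instance.get("networkInterfaces", [])):
        findings.append("has external (public) IP configuration")
    shielded = instance.get("shieldedInstanceConfig", {})
    if not all(shielded.get(opt) for opt in
               ("enableSecureBoot", "enableVtpm", "enableIntegrityMonitoring")):
        findings.append("Shielded VM options not fully enabled")
    return findings
```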

Google Kubernetes Engine Service (GKE)

The Google Kubernetes Engine (GKE) provides a managed environment for deploying, managing, and scaling containerized applications using the Google infrastructure. A GKE environment consists of multiple machines (specifically, Compute Engine instances) grouped together to form a cluster.

6. Enable application-layer secrets encryption for GKE clusters

Application-layer secrets encryption provides an additional layer of security for sensitive data, such as Kubernetes Secrets stored in etcd. This feature lets you use keys managed in Cloud KMS to encrypt data at the application layer, protecting it from attackers who gain access to offline copies of etcd. Enabling application-layer secrets encryption in a GKE cluster is considered a security best practice for applications that store sensitive data.

Create a key ring to store the CMK:

gcloud kms keyrings create KEY\_RING\_NAME --location=REGION --project=PROJECT\_ID --format="table(name)"

Now, create a new Cloud KMS Customer-Managed Key (CMK) within the KMS key ring created at the previous step:

gcloud kms keys create KEY\_NAME --location=REGION --keyring=KEY\_RING\_NAME --purpose=encryption --protection-level=software --rotation-period=90d --format="table(name)"

Next, assign the Cloud KMS “CryptoKey Encrypter/Decrypter” role to the GKE service agent (the container-engine-robot service account):

gcloud projects add-iam-policy-binding PROJECT\_ID --member=serviceAccount:service-PROJECT\_NUMBER@container-engine-robot.iam.gserviceaccount.com --role=roles/cloudkms.cryptoKeyEncrypterDecrypter

The final step is to enable application-layer secrets encryption for the selected cluster, using the Cloud KMS Customer-Managed Key (CMK) created in the previous steps:

gcloud container clusters update CLUSTER --region=REGION --project=PROJECT\_ID --database-encryption-key=projects/PROJECT\_ID/locations/REGION/keyRings/KEY\_RING\_NAME/cryptoKeys/KEY\_NAME
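To confirm the change took effect, you can inspect the cluster description. This sketch assumes the JSON from `gcloud container clusters describe CLUSTER --region=REGION --format=json` contains a `databaseEncryption` object with `state` and `keyName` fields. It also builds the full key resource name used by `--database-encryption-key`. Both function names are hypothetical.

```python
from typing import Optional


def kms_key_name(project: str, location: str, keyring: str, key: str) -> str:
    """Build the full Cloud KMS key resource name used by --database-encryption-key."""
    return f"projects/{project}/locations/{location}/keyRings/{keyring}/cryptoKeys/{key}"


def secrets_encryption_key(cluster: dict) -> Optional[str]:
    """Return the KMS key protecting Kubernetes Secrets, or None if encryption is off."""
    db = cluster.get("databaseEncryption", {})
    if db.get("state") == "ENCRYPTED" and db.get("keyName"):
        return db["keyName"]
    return None
```

Comparing `secrets_encryption_key(cluster)` with `kms_key_name(...)` tells you whether the cluster uses the key you just created.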

7. Enable GKE cluster node encryption with customer-managed keys

To give you more control over the GKE data encryption and decryption process, make sure the boot disks of your Google Kubernetes Engine (GKE) cluster nodes are encrypted with a customer-managed key (CMK). You can use Cloud Key Management Service (Cloud KMS) to create and manage your own CMKs. Cloud KMS provides secure and efficient cryptographic key management, controlled key rotation, and revocation mechanisms.

At this point, you should already have a key ring where you store the CMKs, as well as customer-managed keys. You will use them here too.

Enabling CMK encryption for GKE cluster nodes requires re-creating the node pool. First, describe the node pool you want to re-create so that you have its current configuration at hand:

gcloud container node-pools describe NODE\_POOL --cluster=CLUSTER\_NAME --region=REGION --format=json

Now, using the information returned in the previous step, create a new GKE node pool under a new name, encrypted with your customer-managed key (CMK):

gcloud beta container node-pools create NEW\_NODE\_POOL --cluster=CLUSTER\_NAME --region=REGION --disk-type=pd-standard --disk-size=150 --boot-disk-kms-key=projects/PROJECT\_ID/locations/REGION/keyRings/KEY\_RING\_NAME/cryptoKeys/KEY\_NAME
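Copying settings from the describe output by hand is error-prone, so here is a sketch that builds the create command from it. It assumes the node pool JSON exposes `initialNodeCount` and a `config` object with `machineType`, `diskType`, and `diskSizeGb`, and it copies only those. Labels, taints, autoscaling, and other settings would still need to be carried over manually. The function name is hypothetical.

```python
import shlex


def cmek_node_pool_command(old_pool: dict, new_pool: str, cluster: str,
                           region: str, kms_key: str) -> str:
    """Build a node-pools create command that copies a few settings from an existing pool."""
    config = old_pool.get("config", {})
    args = ["gcloud", "beta", "container", "node-pools", "create", new_pool,
            f"--cluster={cluster}", f"--region={region}"]
    # Copy only the basics; everything else must be reviewed and added by hand.
    if "machineType" in config:
        args.append(f"--machine-type={config['machineType']}")
    if "initialNodeCount" in old_pool:
        args.append(f"--num-nodes={old_pool['initialNodeCount']}")
    if "diskType" in config:
        args.append(f"--disk-type={config['diskType']}")
    if "diskSizeGb" in config:
        args.append(f"--disk-size={config['diskSizeGb']}")
    args.append(f"--boot-disk-kms-key={kms_key}")
    return shlex.join(args)
```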

Once the new node pool is working properly, you can delete the original one so you stop paying for it.

⚠️ Take good care to delete the old pool and not the new one!

gcloud container node-pools delete NODE\_POOL --cluster=CLUSTER\_NAME --region=REGION

8. Restrict network access to GKE clusters

To limit your exposure to the Internet, make sure your Google Kubernetes Engine (GKE) cluster is configured with a master authorized network. Master authorized networks allow you to whitelist specific IP addresses and/or IP address ranges to access cluster master endpoints using HTTPS.

Adding a master authorized network can provide network-level protection and additional security benefits to your GKE cluster. Authorized networks allow access to a particular set of trusted IP addresses, such as those originating from a secure network. This helps protect access to the GKE cluster if the cluster’s authentication or authorization mechanism is vulnerable.

Add authorized networks to the selected GKE cluster to grant access to the cluster master from the trusted IP addresses / IP ranges that you define:

gcloud container clusters update CLUSTER\_NAME --region=REGION --enable-master-authorized-networks --master-authorized-networks=CIDR\_1,CIDR\_2,...

In the previous command, you can specify multiple CIDRs (up to 50) separated by a comma.
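A typo in that list can silently widen access, so it may be worth validating the CIDRs before running the command. The sketch below uses Python’s `ipaddress` module. It rejects malformed ranges, ranges with host bits set, any /0 range that would open the control plane to the whole internet, and lists longer than the 50-entry limit mentioned above. The function name is hypothetical.

```python
import ipaddress

MAX_AUTHORIZED_NETWORKS = 50  # limit quoted in the text above


def authorized_networks_flag(cidrs: list[str]) -> str:
    """Validate CIDRs and join them into the value for --master-authorized-networks."""
    if len(cidrs) > MAX_AUTHORIZED_NETWORKS:
        raise ValueError(f"at most {MAX_AUTHORIZED_NETWORKS} CIDRs are allowed")
    normalized = []
    for cidr in cidrs:
        # strict=True raises ValueError for malformed input or host bits set (e.g. 10.0.0.1/24).
        net = ipaddress.ip_network(cidr.strip(), strict=True)
        if net.prefixlen == 0:
            raise ValueError(f"{cidr} would open the control plane to the whole internet")
        normalized.append(str(net))
    return ",".join(normalized)
```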

The above are the most important best practices for GKE, since not adhering to them poses a high risk, but there are other security best practices you might want to adhere to:

- Enable auto-repair for GKE cluster nodes.

- Enable auto-upgrade for GKE cluster nodes.

- Enable integrity monitoring for GKE cluster nodes.

- Enable secure boot for GKE cluster nodes.

- Use shielded GKE cluster nodes.

Cloud Logging

Cloud Logging is a fully managed service that lets you store, search, analyze, monitor, and alert on log data and events from Google Cloud and Amazon Web Services. You can collect log data from over 150 popular application components, on-premises systems, and hybrid cloud systems.

9. Ensure that Cloud Audit Logging is configured properly across all services and all users from a project

Cloud Audit Logs maintains several audit logs for each project, folder, and organization. The two that matter here are Admin Activity and Data Access. Admin Activity logs contain log entries for API calls or other administrative actions that modify the configuration or metadata of resources. These are enabled for all services and cannot be configured. Data Access audit logs, on the other hand, record API calls that create, modify, or read user-provided data. These are disabled by default and should be enabled.

Configure an effective default audit config so that you can track user activity as well as changes (tampering) to user data, and make sure logs are captured for all users.

For this, you will need to edit the project’s policy. First, download it as a yaml file:

gcloud projects get-iam-policy PROJECT\_ID > /tmp/project\_policy.yaml

Now, edit /tmp/project_policy.yaml adding or changing only the audit logs configuration to the following:

```yaml
auditConfigs:
- auditLogConfigs:
  - logType: DATA_WRITE
  - logType: DATA_READ
  service: allServices
```

Note that exemptedMembers is not set, because audit logging should be enabled for all users. Finally, update the policy with the new changes:

gcloud projects set-iam-policy PROJECT\_ID /tmp/project\_policy.yaml

⚠️ Enabling the Data Access audit logs might result in your project being charged for the additional logs usage.
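Because gcloud projects set-iam-policy also accepts a JSON policy file, the manual YAML edit can be scripted. This sketch assumes you exported the policy with `gcloud projects get-iam-policy PROJECT_ID --format=json > /tmp/project_policy.json` and will apply the result with `gcloud projects set-iam-policy PROJECT_ID /tmp/project_policy.json`. It replaces any existing `auditConfigs` with the single allServices entry shown in the YAML, leaves bindings and `etag` untouched, and sets no exemptedMembers. The function name is hypothetical.

```python
import copy

DATA_ACCESS_AUDIT_CONFIG = {
    "service": "allServices",
    "auditLogConfigs": [{"logType": "DATA_WRITE"}, {"logType": "DATA_READ"}],
}


def with_data_access_logs(policy: dict) -> dict:
    """Return a copy of a project IAM policy whose auditConfigs enable Data Access logs."""
    updated = copy.deepcopy(policy)  # bindings and etag are preserved as-is
    # Overwrites any previous auditConfigs, including per-service entries.
    updated["auditConfigs"] = [copy.deepcopy(DATA_ACCESS_AUDIT_CONFIG)]
    return updated
```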

10. Enable logs router encryption with customer-managed keys

Make sure your Google Cloud Logs Router data is encrypted with a customer-managed key (CMK) to give you complete control over the data encryption and decryption process, as well as to meet your compliance requirements.

Add an IAM policy binding to the CMK that assigns the Cloud KMS “CryptoKey Encrypter/Decrypter” role to the Cloud Logging service account:

gcloud kms keys add-iam-policy-binding KEY\_ID --keyring=KEY\_RING\_NAME --location=global --member=serviceAccount:PROJECT\_NUMBER@gcp-sa-logging.iam.gserviceaccount.com --role=roles/cloudkms.cryptoKeyEncrypterDecrypter

Cloud SQL

Cloud SQL is a fully managed relational database service for MySQL, PostgreSQL, and SQL Server. You run the same relational databases you know, with their rich extension collections, configuration flags, and developer ecosystem, but without the hassle of managing them yourself.

11. Ensure that Cloud SQL database instances are not open to the world

Only trusted or known IP addresses should be allowed to connect, to minimize the attack surface of the database server instance. The allowed networks must not include 0.0.0.0/0, which allows access to the instance from anywhere in the world. Note that allowed networks apply only to instances with public IPs. Set the list to your trusted addresses only. Be aware that the --authorized-networks flag replaces the instance’s existing list, so include every address you still need:

gcloud sql instances patch INSTANCE_NAME --authorized-networks=IP_ADDR1,IP_ADDR2...
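Since the flag overwrites the list, a safe approach is to start from the current networks and drop only the open ones. This sketch assumes the JSON from `gcloud sql instances describe INSTANCE_NAME --format=json` lists entries under `settings.ipConfiguration.authorizedNetworks`, each with a `value` that is a CIDR or a bare IP. When nothing trusted remains, it falls back to the `--clear-authorized-networks` flag. The function names are hypothetical.

```python
import ipaddress


def is_open_to_world(cidr: str) -> bool:
    """True for ranges such as 0.0.0.0/0 or ::/0; bare IPs are treated as /32 or /128."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def trusted_networks(instance: dict) -> list[str]:
    """Authorized networks of a Cloud SQL instance, minus any open-to-the-world range."""
    nets = instance.get("settings", {}).get("ipConfiguration", {}).get("authorizedNetworks", [])
    return [n["value"] for n in nets if not is_open_to_world(n["value"])]


def patch_flag(instance: dict) -> str:
    """The flag to pass to `gcloud sql instances patch INSTANCE_NAME`."""
    nets = trusted_networks(instance)
    return "--authorized-networks=" + ",".join(nets) if nets else "--clear-authorized-networks"
```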

To prevent new SQL instances from being configured to accept incoming connections from any IP addresses, set up a Restrict Authorized Networks on Cloud SQL instances Organization Policy.

BigQuery

BigQuery is a serverless, highly-scalable, and cost-effective cloud data warehouse with an in-memory BI Engine and machine learning built in.

12. Ensure that BigQuery datasets are not anonymously or publicly accessible

You don’t want to allow anonymous or public access in your BigQuery datasets’ IAM policies. Granting permissions to allUsers or allAuthenticatedUsers lets anyone access the dataset. Such access may not be desirable if the dataset stores sensitive data. Therefore, make sure that anonymous and/or public access to the dataset is not allowed.

To do this, you will need to edit the dataset information. First, save it to your local filesystem:

bq show --format=prettyjson PROJECT\_ID:DATASET\_NAME > dataset\_info.json

Now, in the access section of dataset_info.json, update the dataset information to remove all roles containing allUsers or allAuthenticatedUsers.
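The edit can also be scripted. This sketch assumes public grants appear in the dataset’s `access` list either as `"specialGroup": "allAuthenticatedUsers"` or as an `"iamMember"` of `allUsers` or `allAuthenticatedUsers`. It returns a cleaned copy that you would write back to dataset_info.json with `json.dump` before running the update. The function names are hypothetical.

```python
import copy

PUBLIC_PRINCIPALS = {"allUsers", "allAuthenticatedUsers"}


def is_public_entry(entry: dict) -> bool:
    """True if an access entry grants a role to allUsers or allAuthenticatedUsers."""
    return (entry.get("specialGroup") in PUBLIC_PRINCIPALS
            or entry.get("iamMember") in PUBLIC_PRINCIPALS)


def without_public_access(dataset_info: dict) -> dict:
    """Return a copy of `bq show` output with public access entries removed."""
    cleaned = copy.deepcopy(dataset_info)
    cleaned["access"] = [e for e in cleaned.get("access", []) if not is_public_entry(e)]
    return cleaned
```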

Finally, update the dataset:

bq update --source=dataset\_info.json PROJECT\_ID:DATASET\_NAME

You can also prevent BigQuery datasets from becoming publicly accessible by setting up the Domain restricted sharing organization policy.

Compliance Standards & Benchmarks

Setting up all the detection rules and maintaining your GCP environment to keep it secure is an ongoing effort that can take a big chunk of your time – even more so if you don’t have some kind of roadmap to guide you during this continuous work.

You will be better off following the compliance standard(s) relevant to your industry, since they spell out the requirements for effectively securing your cloud environment.

Because securing your infrastructure and complying with a security standard is an ongoing effort, you might also want to regularly audit your environment against benchmarks such as the CIS Google Cloud Platform Foundation Benchmark, and fix any nonconformities the audit reports.

Conclusion

Jumping to the cloud opens a new world of possibilities, but it also requires learning a new set of Google Cloud Platform security best practices.

Each new cloud service you leverage has its own set of potential dangers you need to be aware of.

Luckily, cloud native security tools like Falco and Cloud Custodian can guide you through these Google Cloud Platform security best practices, and help you meet your compliance requirements.
