I've been frustrated with my various news feeds for showing the same type of content, with sensational headlines, reinforcing my echo chamber. Also, I often read a headline and wonder how "the other side" would report the same story. I wanted a simple website with a sampling of headlines representing liberal, conservative, and some international perspectives.

Given my fear of all things frontend, I created a simple site with Hugo, a static site generator. I used Python to read a set of RSS feeds and create content markdown files from the first few entries of each. The code is on GitHub and the site is at https://onlineheadlines.net

Here are a few highlights:

Reading the feeds

My original vision was to visit each news site and scrape the H1 as the top headline. But, assuming the sites' markup would differ too much for that to work reliably, I decided to focus on RSS feeds (which are still alive and kicking!).

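I used the feedparser module to read the feeds. I iterate over a list of feeds and parse each one.

    import feedparser

    for feed in FEEDS:  # FEEDS holds the RSS feeds to read
        count = count + 1  # count is initialized earlier in the script
        NewsFeed = feedparser.parse(feed)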

Like most things in Python, the data comes back as beautiful dictionary-like objects. A single entry looks like this:

    {
        "title": "The warning isn't new. Experts have long cautioned the months ahead will be challenging. Here's why.",
        "link": "http://rss.cnn.com/~r/rss/cnn_topstories/~3/b-gJezqWSTM/index.html",
        "summary": "Nearly 30 US states are reporting downward trends in...",
        "media_content": [
            {
                "url": "https://cdn.cnn.com/cnnnext/dam/assets/200911014935-us-coronavirus-friday-0906-restricted-super-169.jpg"
            }
        ],
        ...
    }
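Here is a minimal sketch of pulling the interesting fields out of an entry like the one above, assuming entries behave like dictionaries (feedparser's do). The names `first_image_url` and `extract_fields` are made up for illustration and are not taken from the project's code. It uses `.get` throughout because not every feed supplies `media_content`.

```python
from typing import Any, Mapping, Optional


def first_image_url(entry: Mapping[str, Any]) -> Optional[str]:
    """Return the first media_content URL, or None if the entry has no image."""
    for media in entry.get("media_content") or []:
        url = media.get("url")
        if url:
            return url
    return None


def extract_fields(entry: Mapping[str, Any]) -> dict:
    """Pick out the fields used later for the front matter and body."""
    return {
        "title": entry.get("title", ""),
        "link": entry.get("link", ""),
        "summary": entry.get("summary", ""),
        "published": entry.get("published", ""),
        "image_url": first_image_url(entry),  # None when the feed has no image
    }
```

With feedparser, something like `feedparser.parse(feed).entries[:3]` would give the first few entries to pass in. The cutoff of 3 is arbitrary here.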

Creating the content markdown files

To create the markdown files, I grabbed key fields from each feed entry and put them into a formatted string. Python's f-strings (formatted string literals) let you write multiline strings and easily insert variable values.

    template = f"""---
    title: "{title}"
    date: {published}
    {image_url_front}
    target_link: {link}
    type: {content_type}
    categories:
    - {content_type}
    ---
    {summary}"""

The resulting string is standard front matter followed by markdown, the same format used for content here on Dev.to.

    ---
    title: "The warning isn't new. Experts have long cautioned the months ahead will be challenging. Here's why."
    date:
    target_link: http://rss.cnn.com/~r/rss/cnn_topstories/~3/b-gJezqWSTM/index.html
    type: first_headline
    categories:
    - first_headline
    ---
    Nearly 30 US states are reporting downward trends in...
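One thing to watch with this approach is a headline that contains double quotes, which would break the quoted `title:` line. Below is a hedged sketch of one way to guard against that. It is not the project's code: `render_markdown` is a hypothetical helper, and `image` is a made-up front matter key standing in for whatever the theme expects.

```python
import json


def render_markdown(title, published, link, content_type, summary, image_url=None):
    """Build a Hugo content file (YAML front matter plus body) as one string."""
    # json.dumps yields a double-quoted, escaped string, which YAML parsers
    # generally accept, so quotes inside a headline cannot end the value early.
    lines = [
        "---",
        f"title: {json.dumps(title, ensure_ascii=False)}",
        f"date: {published}",
    ]
    if image_url:
        # "image" is a hypothetical key; use whatever the theme expects.
        lines.append(f"image: {json.dumps(image_url)}")
    lines += [
        f"target_link: {link}",
        f"type: {content_type}",
        "categories:",
        f"- {content_type}",
        "---",
        summary,
    ]
    return "\n".join(lines)
```

Building the string from a list also avoids the empty line that an unused image placeholder would otherwise leave in the front matter.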

I just save the files into the appropriate Hugo subdirectory.
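Saving could look roughly like this. The sketch assumes Hugo's default `content/` folder and derives the file name from the headline. `slugify` and `save_content` are hypothetical helpers, and the section name is whatever subdirectory the site uses.

```python
import re
from pathlib import Path


def slugify(title: str) -> str:
    """Turn a headline into a lowercase, hyphen-separated file name stem."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
    return slug or "untitled"


def save_content(site_dir, section, title, markdown):
    """Write one markdown file under <site_dir>/content/<section>/ and return its path."""
    target_dir = Path(site_dir) / "content" / section
    target_dir.mkdir(parents=True, exist_ok=True)  # create the section on first use
    path = target_dir / f"{slugify(title)}.md"
    path.write_text(markdown, encoding="utf-8")
    return path
```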

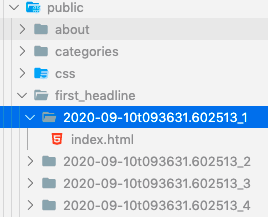

Generating the site

Hugo is a typical static site generator that pre-generates all of the HTML and folders to provide a simple, pretty-url navigation experience. Building the site generates the full website in the public/ folder.
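Since the content is generated by a Python script, the build can be triggered from the same script. This is a small sketch under that assumption, not the project's actual build step. `hugo_command` and `build_site` are illustrative names, and the `run` parameter exists only so the call can be swapped out when testing.

```python
import subprocess
from pathlib import Path


def hugo_command(site_dir):
    """Return the Hugo CLI invocation that builds the site found in site_dir."""
    # --source points Hugo at the site root; output goes to <site_dir>/public by default.
    return ["hugo", "--source", str(site_dir)]


def build_site(site_dir, run=subprocess.run):
    """Run Hugo and return the folder that holds the generated site."""
    run(hugo_command(site_dir), check=True)  # check=True raises if Hugo exits non-zero
    return Path(site_dir) / "public"
```

For example, `build_site("headlines_site")` would build the site and return the path `headlines_site/public`.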

Hugo has lots of community-created themes. I picked hugo-xmag because it looked like a newspaper.

The theme creator has an interesting perspective on how he created the theme to avoid the clutter of modern websites and focus on the articles.

Building the site

This is where the fun turned into self-inflicted pain. There are simple options for CI and hosting, like Netlify, that would build and deploy based on changes to master. But, I wanted to try to get a static S3 site running, with a custom domain name and SSL cert. I had failed at doing this once before and wanted to see if I could get it to work.

I usually use AWS CodePipeline for builds since it's so easy to set up. But, I have a process that sends build progress emails for every CodePipeline build, and I didn't want a flood of emails if I was going to build the site every hour. Since CodePipeline and CodeBuild are essentially just short-lived containers that execute your build step, I created a custom container with Python, Hugo, my Hugo site, and my build script.

    ...
    RUN apt-get install -y curl wget

    # Install Hugo
    RUN curl -L -o hugo.deb https://github.com/gohugoio/hugo/releases/download/v0.70.0/hugo_0.70.0_Linux-64bit.deb
    RUN dpkg -i hugo.deb

    # Install AWS CLI
    RUN pip install awscli

    # Copy directory files for Hugo site
    COPY ./ ./
    RUN ls -la headlines_site/
    ...
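The image installs the AWS CLI, so one plausible last step for the build script is to copy the generated `public/` folder to the S3 bucket. The sketch below assumes that approach, and the post does not show the actual upload step. `s3_sync_command` and `publish` are hypothetical names and the bucket is a placeholder.

```python
import subprocess


def s3_sync_command(public_dir, bucket):
    """Return an AWS CLI call that mirrors public_dir into the S3 bucket."""
    # --delete removes objects from the bucket that no longer exist locally.
    return ["aws", "s3", "sync", str(public_dir), f"s3://{bucket}", "--delete"]


def publish(public_dir, bucket, run=subprocess.run):
    """Upload the generated site; with the default runner a CLI failure raises."""
    run(s3_sync_command(public_dir, bucket), check=True)
```

Inside the container this would be called as `publish("headlines_site/public", "example-bucket")`, with credentials coming from the task's IAM role.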

I also created a script to build the container locally and push it to AWS ECR as my container registry. I scheduled a Fargate task to run the container every hour. I knew Fargate was a "serverless" (read: "managed") service to run containers and thought it would be easy to set up. But, it took me a while to get the services, tasks, and container all working together.

Hosting the site

This is where most of the frustrations occurred, which surprised me since I'm just hosting a static S3 site. Here are the issues I ran into:

- Serving an S3 website over HTTPS on a custom domain name requires CloudFront (as far as I could tell)

- CloudFront doesn't automatically respect index.html in subfolders, which prevented the Hugo pretty URLs from working (like https://onlineheadlines.net/about). Luckily, digital-sailors created standard-redirects-for-cloudfront to give the URLs the respect they deserve.

- Using Route 53 and CloudFront for the domain name and SSL cert requires that you know what you're doing with DNS (A and CNAME records, with and without "www."). Unfortunately, my fear of networking is just as healthy as my fear of frontend.
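To make the index.html issue concrete: CloudFront's default root object only applies to the root of the site, so a request for `/about` has to be mapped to `/about/index.html` before it reaches S3. The sketch below shows that idea as a Lambda@Edge origin-request handler in Python. It is a simplified, rewrite-only illustration and not the code of standard-redirects-for-cloudfront. `rewrite_uri` is a hypothetical helper, and treating any last segment without a dot as a folder is an assumption that fits Hugo's output.

```python
import posixpath


def rewrite_uri(uri: str) -> str:
    """Map a pretty URL onto the index.html object Hugo generated for it."""
    if uri.endswith("/"):
        return uri + "index.html"
    # A last path segment without a dot is treated as a folder, not a file.
    if "." not in posixpath.basename(uri):
        return uri + "/index.html"
    return uri


def handler(event, context):
    """Lambda@Edge origin-request handler: rewrite the URI before S3 sees it."""
    request = event["Records"][0]["cf"]["request"]
    request["uri"] = rewrite_uri(request["uri"])
    return request
```

Requests for real files such as `/css/style.css` pass through unchanged.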

Even with all of the frustrations, it was a good learning experience, and I can apply some of the lessons at work when I'm helping other teams.
