Tracking individual players with machine learning is a challenging problem. I will investigate several possible solutions for my open source sports analysis project.

For each solution I will use the same short basketball video, which starts with several isolated players and ends with multiple occlusions. The output videos are in slow motion so we can more easily observe what's happening.

Occlusion occurs when an object you are tracking is hidden (occluded) by another object, for example two people walking past each other, or a car driving under a bridge.

First the players need to be localised, which is often done using a convolutional neural network (for example Mask R-CNN). In the second phase the actual tracking happens, where key points of the human body are often linked to a unique person and visualised.
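A simple way to connect these two phases is "tracking by detection": run the detector on every frame and link each new detection to a person from the previous frame. The sketch below is not what any of the tools tested here do internally; it is a minimal illustration that assumes boxes in `(x, y, w, h)` layout and uses greedy intersection-over-union (IoU) matching. The class name `IouTracker` and the `min_iou` threshold are hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h), the layout OpenCV trackers use


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two (x, y, w, h) boxes."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Hypothetical helper: gives each detection a persistent ID via greedy IoU matching."""

    def __init__(self, min_iou: float = 0.3):
        self.min_iou = min_iou
        self.tracks: Dict[int, Box] = {}  # track ID -> last known box
        self.next_id = 0

    def update(self, detections: List[Box]) -> Dict[int, Box]:
        # Score every (track, detection) pair and take the best-overlapping pairs first.
        pairs = sorted(
            ((iou(box, det), tid, di)
             for tid, box in self.tracks.items()
             for di, det in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets, result = set(), set(), {}
        for score, tid, di in pairs:
            if score < self.min_iou:
                break  # remaining pairs overlap too little to be the same person
            if tid in matched_tracks or di in matched_dets:
                continue
            matched_tracks.add(tid)
            matched_dets.add(di)
            result[tid] = detections[di]
        # Unmatched detections start new tracks (e.g. a player entering the frame).
        for di, det in enumerate(detections):
            if di not in matched_dets:
                result[self.next_id] = det
                self.next_id += 1
        self.tracks = result  # tracks without a match in this frame are dropped
        return result
```

Calling `update` once per frame with that frame's detections returns a `{track_id: box}` dictionary. Greedy matching is the simplest choice; when two players overlap heavily their IoU scores become similar, which is exactly where such trackers start swapping identities.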

If we had multiple cameras, we could track each player using face recognition. See my article on Face Recognition at Devoxx.

As always, please don't hesitate to suggest any other possible techniques or solutions which I should investigate.

Tracking with OpenCV

OpenCV has built-in support for tracking objects, and the actual implementation is very straightforward. With just a couple of lines of code you can track moving objects as follows:

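```python
import cv2

# ... (read a video frame into `frame` and detect the players into `players_boxes`)

# Create MultiTracker object
# (in OpenCV >= 4.5.1 with opencv-contrib-python these factories live under cv2.legacy:
#  cv2.legacy.MultiTracker_create() and cv2.legacy.TrackerCSRT_create())
multiTracker = cv2.MultiTracker_create()

# Initialize MultiTracker: one CSRT tracker per player box, given as (x, y, w, h)
for pBox in players_boxes:
    # Add rectangle to track within given frame
    multiTracker.add(cv2.TrackerCSRT_create(), frame, pBox)

# ...

# Get updated location of objects in subsequent frames
success, boxes = multiTracker.update(frame)

# Draw new boxes on current frame
# ...
```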

As you can see from the annotated video, it does a pretty good job right until one player passes (overlaps) another player, i.e. an occlusion.

A total of 6 persons were tracked, but only the referee and one yellow player were tracked correctly until the end. Hmmm, not the result I was hoping for.

The video result can be viewed on YouTube.
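Since the failures start when boxes overlap, it can help to flag those moments explicitly, for example to re-run detection or to treat identities as uncertain. The sketch below is a hypothetical helper, not part of OpenCV: it reports pairs of tracked boxes where the overlap covers a large fraction of the smaller box. The 0.3 threshold is an arbitrary assumption.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h)


def overlap_ratio(a: Box, b: Box) -> float:
    """Fraction (0.0 to 1.0) of the smaller box that is covered by the other box."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    smaller = min(a[2] * a[3], b[2] * b[3])
    return (iw * ih) / smaller if smaller > 0 else 0.0


def occluded_pairs(boxes: Dict[int, Box], threshold: float = 0.3) -> List[Tuple[int, int]]:
    """ID pairs whose boxes overlap enough that their identities may get swapped."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(sorted(boxes.items()), 2)
        if overlap_ratio(a, b) >= threshold
    ]
```

With the OpenCV snippet above, the input could be built as `dict(enumerate(boxes))` after each `multiTracker.update(frame)` call.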

Tracking with Key Points

The next experiment uses the COCO Keypoint R-CNN model, which can easily be enabled using Detectron2.

As you can see from the output below, the rectangle around each person keeps the same colour as long as it's tracking the same person. However, when occlusion happens the result is the same as with OpenCV... chaos.

The video result can be viewed on YouTube.
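Key points give a tracker more to work with than a box alone. As a minimal sketch (an illustrative technique, not what Detectron2 or the pipeline above does), the code below compares two poses by the mean pixel distance of key points that are confident in both, and greedily matches poses between consecutive frames. It assumes each pose is a list of `(x, y, score)` triples in a fixed joint order, similar to the COCO key point layout; `max_dist` and `min_score` are hypothetical thresholds.

```python
import math
from typing import List, Optional, Sequence, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence score)
Pose = Sequence[Keypoint]              # same joint order for every pose


def pose_distance(p: Pose, q: Pose, min_score: float = 0.5) -> float:
    """Mean pixel distance over key points that are confident in both poses."""
    dists = [
        math.hypot(a[0] - b[0], a[1] - b[1])
        for a, b in zip(p, q)
        if a[2] >= min_score and b[2] >= min_score
    ]
    return sum(dists) / len(dists) if dists else math.inf


def match_poses(prev: List[Pose], curr: List[Pose], max_dist: float = 50.0) -> List[Optional[int]]:
    """For each current pose, the index of the matched previous pose, or None."""
    pairs = sorted(
        (pose_distance(p, c), pi, ci)
        for pi, p in enumerate(prev)
        for ci, c in enumerate(curr)
    )
    matches: List[Optional[int]] = [None] * len(curr)
    used_prev = set()
    for dist, pi, ci in pairs:
        if dist > max_dist:
            break  # every remaining pair is even further apart
        if pi in used_prev or matches[ci] is not None:
            continue
        used_prev.add(pi)
        matches[ci] = pi
    return matches
```

During an occlusion many key points of the hidden player drop below the confidence threshold, so the distance is computed from very few joints, which helps explain why key point based tracking also struggles there.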

Tracking with Pose Flow

Pose Flow shows a unique number for each person it tracks and, in addition to the key points of the human body, draws the same coloured rectangle around the same person.

It starts out really confident but, as with the previous experiments, it basically loses track of the overlapping players once occlusion occurs.

The video result can be viewed on YouTube.

BTW, full scientific details on the Pose Flow algorithm can be downloaded here.

Shout out to Jarosław Gilewski for his Detectron2 Pipeline project which allowed me to rapidly run the above simulations!

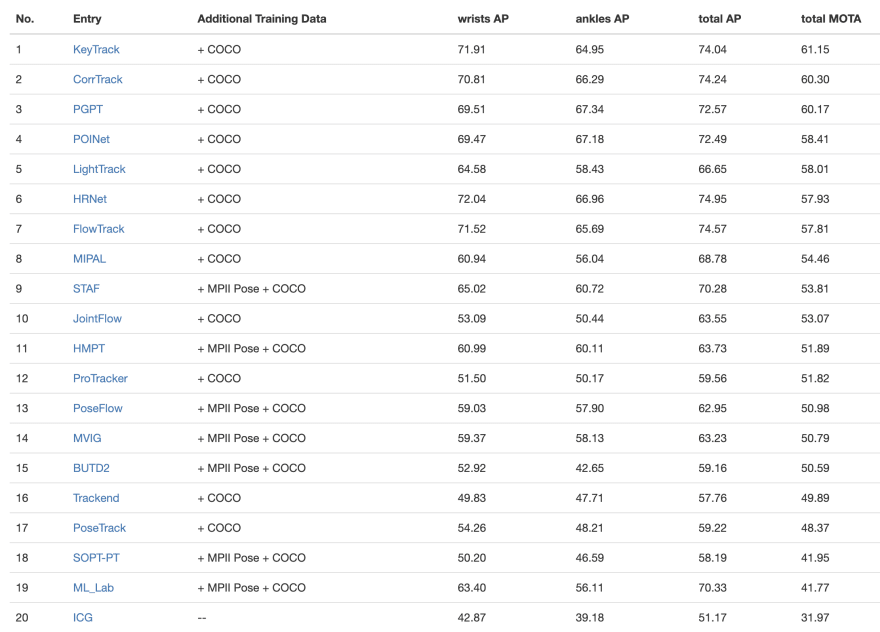

The PoseTrack challenge

PoseTrack is a large-scale benchmark for human pose estimation and articulated tracking in video. It provides a publicly available training and validation set as well as an evaluation server for benchmarking on a held-out test set.

Every year PoseTrack organises tracking and detection competitions on single-frame and multi-frame pose datasets.

In 2018 the Pose Flow team finished in 13th position and the KeyTrack team won the multi-person tracking challenge.
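Rankings like these are based on standard tracking metrics; as far as I know, PoseTrack scores multi-person tracking with MOTA (Multiple Object Tracking Accuracy) computed over body joints. MOTA subtracts misses, false positives and identity switches from a perfect score of 1, so the identity swaps seen in the occlusion experiments above directly lower it. A minimal sketch of the formula, with hypothetical per-frame counts as input:

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class FrameCounts:
    gt: int           # ground-truth objects in the frame (e.g. visible body joints)
    fn: int           # ground-truth objects that were missed
    fp: int           # predictions that match no ground truth
    id_switches: int  # matched objects whose track ID changed since their last match


def mota(frames: Iterable[FrameCounts]) -> float:
    """MOTA = 1 - sum(FN + FP + IDSW) / sum(GT), accumulated over all frames."""
    frames = list(frames)
    total_gt = sum(f.gt for f in frames)
    if total_gt == 0:
        raise ValueError("MOTA is undefined without ground-truth objects")
    errors = sum(f.fn + f.fp + f.id_switches for f in frames)
    return 1.0 - errors / total_gt
```

Note that MOTA can become negative when a tracker makes more errors than there are ground-truth objects.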

Pose tracking is an important problem that requires identifying unique human pose-instances and matching them temporally across different frames of a video. However, existing pose tracking methods are unable to accurately model temporal relationships and require significant computation, often computing the tracks offline.

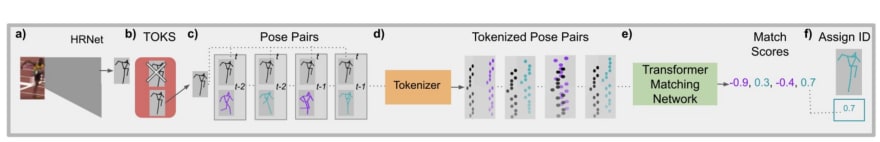

The KeyTrack solution

KeyTrack introduces Pose Entailment, where a binary classification is made as to whether two poses from different time steps are the same person.
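To make the idea of a binary "same person or not" decision concrete, here is a deliberately simple stand-in. KeyTrack itself learns this decision with a transformer over key point tokens; the sketch below instead uses two hand-picked geometric cues: how far the pose moved relative to its size, and how much its normalised shape changed. The function names and both thresholds are hypothetical and would need tuning on real data.

```python
import math
from typing import List, Sequence, Tuple

Pose = Sequence[Tuple[float, float]]  # (x, y) per key point, same joint order in both poses


def _centre_and_scale(pose: Pose) -> Tuple[float, float, float]:
    """Mean key point position and bounding-box diagonal of a pose."""
    xs = [x for x, _ in pose]
    ys = [y for _, y in pose]
    scale = math.hypot(max(xs) - min(xs), max(ys) - min(ys)) or 1.0
    return sum(xs) / len(xs), sum(ys) / len(ys), scale


def _normalised(pose: Pose) -> List[Tuple[float, float]]:
    """Pose with position and size removed, so only its shape remains."""
    cx, cy, s = _centre_and_scale(pose)
    return [((x - cx) / s, (y - cy) / s) for x, y in pose]


def entails(prev: Pose, curr: Pose, max_shift: float = 0.5, max_shape: float = 0.2) -> bool:
    """Toy pose entailment: True if curr plausibly belongs to the same person as prev."""
    pcx, pcy, ps = _centre_and_scale(prev)
    ccx, ccy, _ = _centre_and_scale(curr)
    # How far the person moved, relative to their size in the previous frame.
    shift = math.hypot(ccx - pcx, ccy - pcy) / ps
    # How different the body shape is once position and size are removed.
    shape = sum(
        math.hypot(a[0] - b[0], a[1] - b[1])
        for a, b in zip(_normalised(prev), _normalised(curr))
    ) / len(prev)
    return shift <= max_shift and shape <= max_shape
```

A learned classifier replaces these fixed rules with a decision trained on many labelled pose pairs, which is what lets KeyTrack cope with cases such rules get wrong.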

Unfortunately, I was unable to find an (open source) KeyTrack implementation. If you know where to find it, please let me know and I will try it out.

The scientific paper can be downloaded here.

The ideal camera setup?

One idea is to change the angle of the stationary camera(s) to get a bird's-eye view, which should limit the number of occlusions that can happen.

The ideal setup would be a top-down stationary camera above the middle of the court. Such an IP-enabled camera would need a super-wide-angle lens, similar to the European APIDIS basketball project, in which the Université catholique de Louvain was involved.
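How wide is "super wide"? For a pinhole camera looking straight down from height h, covering a floor extent L centred below it needs a full field of view of 2·atan(L / 2h). The sketch below applies this to a FIBA court (28 m × 15 m); the 12 m mounting height is a hypothetical value, and lens distortion is ignored.

```python
import math


def required_fov_deg(extent_m: float, height_m: float) -> float:
    """Full field of view (degrees) a downward-facing pinhole camera at height_m
    needs to see a floor extent of extent_m centred below it."""
    return math.degrees(2 * math.atan((extent_m / 2) / height_m))


def cm_per_pixel(extent_m: float, pixels: int) -> float:
    """Floor distance covered by one pixel when extent_m spans `pixels` pixels."""
    return extent_m * 100 / pixels


COURT_LENGTH_M, COURT_WIDTH_M = 28.0, 15.0  # FIBA court dimensions
CAMERA_HEIGHT_M = 12.0                       # hypothetical mounting height

h_fov = required_fov_deg(COURT_LENGTH_M, CAMERA_HEIGHT_M)
v_fov = required_fov_deg(COURT_WIDTH_M, CAMERA_HEIGHT_M)
print(f"horizontal FOV {h_fov:.1f} deg, vertical FOV {v_fov:.1f} deg")
print(f"{cm_per_pixel(COURT_LENGTH_M, 1600):.2f} cm per pixel along the court at 1600 px")
```

At that height the camera needs roughly a 100° horizontal field of view, and a 1600-pixel-wide image would resolve the court at under 2 cm per pixel. A lower ceiling pushes the required angle up quickly.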

The APIDIS project used seven 2-megapixel colour IP cameras (Arecont Vision AV2100) recording 1600x1200-pixel frames at 22 fps, with a timestamp for each frame.

In addition (as shown below), two more cameras each recorded one side of the basketball court.
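Per-frame timestamps matter because independent cameras rarely capture frames at exactly the same moment. A minimal sketch of one way to align them, assuming each camera's timestamps are a sorted, non-empty list of seconds on a shared clock: for every frame of a reference camera, pick the nearest frame from each other camera and reject it when it is more than half a frame interval (about 23 ms at 22 fps) away. The function names and the tolerance are illustrative assumptions, not part of the APIDIS dataset tools.

```python
from bisect import bisect_left
from typing import List, Sequence

FPS = 22                    # APIDIS cameras record at 22 fps
FRAME_INTERVAL_S = 1 / FPS  # roughly 45 ms between consecutive frames


def nearest_frame(timestamps: Sequence[float], t: float) -> int:
    """Index of the frame whose timestamp (sorted, non-empty, seconds) is closest to t."""
    i = bisect_left(timestamps, t)
    if i == 0:
        return 0
    if i == len(timestamps):
        return len(timestamps) - 1
    return i if timestamps[i] - t < t - timestamps[i - 1] else i - 1


def align(reference: Sequence[float], others: List[Sequence[float]],
          tolerance_s: float = FRAME_INTERVAL_S / 2) -> List[List[int]]:
    """For each reference frame, the matching frame index in every other camera,
    or -1 when that camera has no frame within the tolerance."""
    rows = []
    for t in reference:
        row = []
        for ts in others:
            j = nearest_frame(ts, t)
            row.append(j if abs(ts[j] - t) <= tolerance_s else -1)
        rows.append(row)
    return rows
```

Binary search keeps each lookup logarithmic in the number of frames, which matters when aligning full-length game recordings from several cameras.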
