“To parse” means to analyze strings of symbols or data elements and map the syntactic and semantic relationships between them so they can be interpreted. Practical parsing applications are home ground for researchers, developers, and data scientists.

Conceptually, parsing spans a complex set of methods in formal logic, computer science, and linguistics. To stay on point, we focus specifically on the parsing involved in web scraping for business needs.

What is Data Parsing?

To understand parsing, you need to understand the difference between information and data. Parsing helps transform the former into the latter.

Data is information with defined connections or syntax. The critical difference is that data allows us to draw inferences and perform logical operations.

For example, a list of names and another list of invoice totals are just two pieces of information, meaningless on their own. But once you connect each name with the corresponding number, they turn into your customer data.

“Extracting data” is the term you often encounter in parsing discussions. It refers to detecting specific pieces of information in large, messy sources and reorganizing them according to the rules set by the user.

The names and invoice amounts from the example above could be scattered across your accounting app among hundreds of other data strings. A parser finds them and “copies” them into a spreadsheet, next to each other.
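The name-and-invoice example can be sketched in a few lines of Python. This is a minimal illustration, assuming the accounting export is free-form text in which each relevant line contains `Invoice for <Name>: $<amount>`; that line format, the regular expression, and the function names `extract_invoices` and `to_csv` are hypothetical.

```python
import csv
import io
import re

# Hypothetical line format: "Invoice for <Name>: $<amount>" somewhere in the text.
INVOICE_RE = re.compile(r"Invoice for (?P<name>[^:\n]+):\s*\$(?P<total>\d+(?:\.\d+)?)")

def extract_invoices(raw_text):
    """Return (name, total) pairs found in unstructured text; everything else is ignored."""
    return [
        (match.group("name"), float(match.group("total")))
        for match in INVOICE_RE.finditer(raw_text)
    ]

def to_csv(rows):
    """Write the pairs side by side as CSV, i.e. two spreadsheet columns."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["customer", "invoice_total"])
    writer.writerows(rows)
    return buffer.getvalue()

raw = """
2024-03-01 login ok
Invoice for Ada Lovelace: $120.50 (paid)
note: printer jammed again
Invoice for Alan Turing: $89.00
"""
print(to_csv(extract_invoices(raw)))
```

The noise lines never reach the spreadsheet: the pattern is the "rule set by the user" that decides which strings count as data.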

Here are some examples of the jobs parsers do:

- Format translation: extracting data from online survey results and saving it into a spreadsheet.

- “Cleaning” information by selectively removing irrelevant parts: parsing an HTML file and saving only the movie titles and their showtimes into a database.

- Structuring and organizing: extracting entries from time trackers and arranging them into a monthly report.
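The "structuring and organizing" job can be sketched as follows, assuming a hypothetical time-tracker export with one `YYYY-MM-DD;project;hours` entry per line. Real trackers export CSV, JSON, or API responses in their own formats, so only the idea carries over: parse each raw entry, then group and total it.

```python
from collections import defaultdict
from datetime import date

# Hypothetical export format: "YYYY-MM-DD;project;hours", one entry per line.
EXPORT = """2024-01-03;Website;2.5
2024-01-17;Website;4
2024-01-20;Audit;3
2024-02-02;Website;1.5"""

def monthly_report(export_text):
    """Parse raw entries and total the hours per (month, project), sorted by key."""
    totals = defaultdict(float)
    for line in export_text.strip().splitlines():
        day, project, hours = line.split(";")
        month = date.fromisoformat(day).strftime("%Y-%m")
        totals[(month, project)] += float(hours)
    return dict(sorted(totals.items()))

for (month, project), hours in monthly_report(EXPORT).items():
    print(f"{month}  {project:<10} {hours:>5.1f} h")
```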

What Can Data Parsing Be Used for?

Automated parsing is used whenever we work with big data, that is, whenever there is too much of it to organize manually.

In Programming

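Parsing is an early stage of the compilation process. The parser checks high-level source code against the language's grammar and builds a syntax tree, which later stages “translate” into the low-level machine code a CPU can understand and execute. Interpreted languages parse source code too; the difference is that they execute the result (or an intermediate bytecode) instead of compiling it to machine code ahead of time.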
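A real compiler's parser is far more involved, but the core idea can be sketched with a toy recursive-descent parser for arithmetic. The grammar in the comments is an assumption for illustration, not any real language's grammar; the nested tuples play the role of the syntax tree that later compiler stages would consume.

```python
import re

# Toy grammar (hypothetical, for illustration only):
#   expr   := term (("+" | "-") term)*
#   term   := factor (("*" | "/") factor)*
#   factor := NUMBER | "(" expr ")"

def tokenize(source):
    """Split source text into integer tokens and single-character operator tokens."""
    return re.findall(r"\d+|\S", source)

class Parser:
    """Recursive-descent parser: one method per grammar rule, building a nested-tuple tree."""

    def __init__(self, source):
        self.tokens = tokenize(source)
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self, expected=None):
        token = self.peek()
        if token is None or (expected is not None and token != expected):
            raise SyntaxError(f"expected {expected or 'a token'}, got {token!r}")
        self.pos += 1
        return token

    def parse(self):
        tree = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return tree

    def expr(self):
        node = self.term()
        while self.peek() in ("+", "-"):
            node = (self.take(), node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            node = (self.take(), node, self.factor())
        return node

    def factor(self):
        if self.peek() == "(":
            self.take("(")
            node = self.expr()
            self.take(")")
            return node
        token = self.take()
        if not token.isdigit():
            raise SyntaxError(f"expected a number, got {token!r}")
        return int(token)

print(Parser("2 * (3 + 4)").parse())  # prints ('*', 2, ('+', 3, 4))
```

Because `term` is called from inside `expr`, multiplication binds tighter than addition: the grammar itself encodes operator precedence, and malformed input such as `1 +` is rejected with a `SyntaxError`.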

For Web Scraping

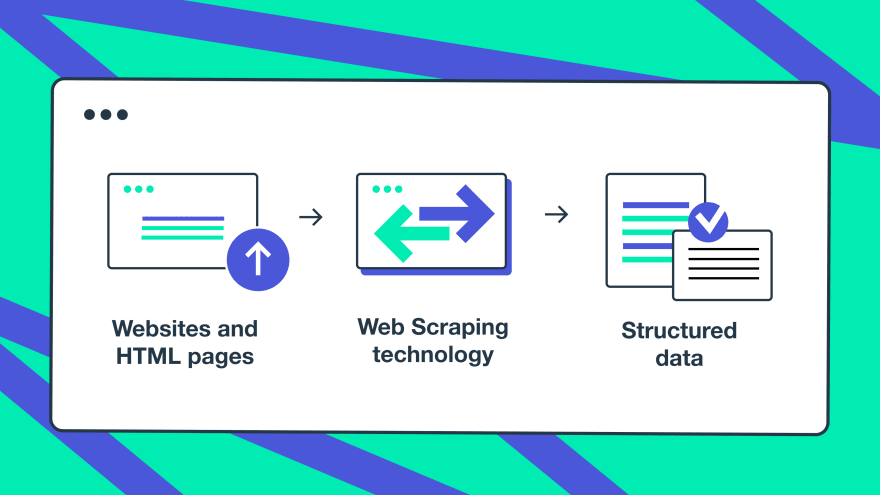

We have mentioned parsing in the context of web scraping before. There, parsing is a specific stage of the web scraping workflow.

A short reminder: the stages of the web scraping workflow are crawling, scraping, parsing, mathematical operations, and feeding the results into a database.

Raw HTML is not easily readable by humans. When scrapers retrieve HTML files, parsers transform them into a clean and readable form: numerical data, text fields, images, and tables. They can even make the content searchable, or skip the human-readable form altogether and convert the data into a format suitable for an analytical tool.
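Here is a minimal sketch of that transformation using only Python's built-in `html.parser` module; libraries such as BeautifulSoup are more common in practice. The page markup, the `product`/`price` columns, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:  # ignore whitespace and text outside cells
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

def parse_price_table(markup):
    """Turn an HTML price table into records with a numeric price field."""
    parser = TableParser()
    parser.feed(markup)
    parser.close()
    header, *body = parser.rows
    records = [dict(zip(header, row)) for row in body]
    for record in records:
        record["price"] = float(record["price"].lstrip("$"))
    return records

page_html = """
<table class="prices">
  <tr><th>product</th><th>price</th></tr>
  <tr><td><b>Widget</b></td><td>$4.99</td></tr>
  <tr><td>Gadget</td><td>$12.00</td></tr>
</table>
"""
print(parse_price_table(page_html))
```

The output is plain records with real numbers instead of markup, which is the form a spreadsheet, database, or analytical tool expects.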

For Feeding Machine Learning Models

Parsing is commonly used in NLP, AI, and ML tasks. Hand-written rules are not enough to learn how likely elements are to co-occur and in what manner; computers need many, many examples. Parsers extract that information from scraped files and feed it to the machine learning model. Eventually, the AI learns to associate the word “pug” with a picture of the breed.
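The extraction step for the "pug" example might look like the sketch below. It assumes, as a simplification, that an image's `alt` text is a usable label; real training datasets need far more filtering and validation. The class and function names are hypothetical.

```python
from html.parser import HTMLParser

class ImageLabelParser(HTMLParser):
    """Collect (alt text, src) pairs from <img> tags as candidate labeled examples."""

    def __init__(self):
        super().__init__()
        self.examples = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src")
        alt = (attributes.get("alt") or "").strip().lower()
        # Images without a caption cannot serve as labeled examples, so skip them.
        if src and alt:
            self.examples.append((alt, src))

def extract_examples(markup):
    parser = ImageLabelParser()
    parser.feed(markup)
    parser.close()
    return parser.examples

page = """
<div class="gallery">
  <img src="/img/1.jpg" alt="Pug">
  <img src="/img/2.jpg" alt="">
  <img src="/img/3.jpg" alt="Beagle puppy">
</div>
"""
print(extract_examples(page))
```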

For Scientific and Business Analysis

Analysis is the main reason we parse information: investment analysis, marketing, social media, search engine optimization, scientific research, stock markets… It is easier to name a discipline where parsing is not used.

Imagine trying to read all the news published on the web, or adding stock prices to a spreadsheet by hand every day. Even if you could, it would take too long, and the information would be outdated before you finished collecting it.

Parsers detect relevant pieces of information, extract them, and summarise them under categories for analysts or intelligence specialists to review. Analysts can then focus on thinking instead of pushing through the clutter of raw data.

Education and Data Visualisation

Manually collecting all the mentions about a specific subject, individual, or business would take far too long. A program, however, can scan the web, scrape all the mentions, and then parse only relevant pieces.

Google's Knowledge Graph is one example: when you search for a person's name, you receive a list of relevant URLs along with a panel of organized information about that person.
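The "parse only relevant pieces" step can be sketched as filtering scraped text down to the sentences that mention a subject. This is a minimal illustration: the sentence splitter is deliberately naive (it breaks on abbreviations such as "Inc."), and the sample documents and the `relevant_mentions` name are hypothetical.

```python
import re

def relevant_mentions(documents, subject):
    """Keep only the sentences that mention `subject` (case-insensitive, whole words)."""
    pattern = re.compile(rf"\b{re.escape(subject)}\b", re.IGNORECASE)
    mentions = []
    for doc in documents:
        # Naive split after ., ! or ? followed by whitespace (an assumption, not robust NLP).
        for sentence in re.split(r"(?<=[.!?])\s+", doc.strip()):
            if pattern.search(sentence):
                mentions.append(sentence)
    return mentions

scraped = [
    "Acme Corp opened a new office. The weather was mild. Analysts praised ACME corp's growth!",
    "Nothing relevant here.",
]
print(relevant_mentions(scraped, "Acme Corp"))
```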

Opinion and Sentiment Mining

Intelligence and PR agencies regularly scrape social media for opinions related to their clients. Parsers organize these opinions into a readable form and flag positive, negative, neutral, or extreme views. At the current scale of social media marketing (SMM), manual extraction is impractical.
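The flagging step can be illustrated with a deliberately simple lexicon-based classifier. The word lists are hypothetical and tiny; production systems typically rely on trained models or much larger lexicons, so treat this only as a sketch of how parsed text gets sorted into the four categories.

```python
import re

# Hypothetical miniature lexicons, for illustration only.
POSITIVE = {"love", "great", "excellent", "recommend"}
NEGATIVE = {"hate", "awful", "broken", "refund"}
EXTREME = {"scam", "disgusting", "worst"}

def flag(post):
    """Label a post extreme, positive, negative, or neutral by counting lexicon hits."""
    words = re.findall(r"[a-z']+", post.lower())
    if any(word in EXTREME for word in words):
        return "extreme"
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

posts = [
    "I love the new app, would recommend!",
    "Checkout is broken again, I want a refund.",
    "Store opens at 9am.",
    "Worst company ever, total scam",
]
for post in posts:
    print(f"{flag(post):>8}: {post}")
```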

Banking and Credit Decisions

Fintech companies and legacy banks use “enriched context” to improve the accuracy of their risk assessment. This context might include phone bills or current property values. Bank analysts can then make more granular, contextual decisions without seeing the person (in an ideal world, anyway).

Sales and Lead Generation

Parsed data can power lead generation and personalized sales. Health struggles, marriage dates, interests, purchase reviews, bills, education, travel history, event attendance, and awards become customer insights once parsed into a CRM.

Logistics and Shipping

Parsers can be used to create shipping labels. You fill out an online form and place the order; a parser reads the order and arranges it into a shipping slip, an invoice, and instructions for the warehouse.
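A sketch of that flow, assuming the order arrives as a URL-encoded form body, which is how HTML forms submit by default. The field names (`name`, `street`, `city`, `sku`, `qty`, `price`) and the output layout are hypothetical; `urllib.parse.parse_qs` is the standard-library decoder.

```python
from urllib.parse import parse_qs

def parse_order(form_body):
    """Turn a URL-encoded order form into a shipping slip, an invoice, and warehouse instructions."""
    # parse_qs maps each field to a list of values; keep the first value of each.
    fields = {key: values[0] for key, values in parse_qs(form_body).items()}
    quantity = int(fields["qty"])
    unit_price = float(fields["price"])
    return {
        "slip": f"{fields['name']}\n{fields['street']}\n{fields['city']}",
        "invoice": {
            "item": fields["sku"],
            "quantity": quantity,
            "total": round(quantity * unit_price, 2),
        },
        "warehouse": f"Pick {quantity} x {fields['sku']}; ship to {fields['city']}",
    }

body = "name=Jane+Doe&street=1+Main+St&city=Springfield&sku=MUG-01&qty=3&price=7.50"
order = parse_order(body)
print(order["slip"])
print(order["invoice"])
print(order["warehouse"])
```

One submission is parsed once and then rearranged into three documents for three different audiences.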

Grammar Checking Apps

Good old grammar checkers that remind you when you forget a comma or misspell a word use parsing, too. They compare your input to a grammatical or statistical model, detect errors, and notify you.
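The spelling half of that comparison can be sketched with Python's standard `difflib` module. The vocabulary is a hypothetical stand-in for a real dictionary, and string similarity stands in for the grammatical or statistical models real checkers use.

```python
import difflib
import re

# Hypothetical miniature dictionary; real checkers use full lexicons and language models.
VOCABULARY = ["parsing", "turns", "raw", "data", "into", "structured", "information"]

def check_spelling(text):
    """Return (unknown word, suggestions) for every word not found in the vocabulary."""
    issues = []
    for word in re.findall(r"[a-z]+", text.lower()):
        if word not in VOCABULARY:
            suggestions = difflib.get_close_matches(word, VOCABULARY, n=3, cutoff=0.7)
            issues.append((word, suggestions))
    return issues

print(check_spelling("Parsing turns raw dta into structred information"))
```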

What Technologies and Languages Can Parsing Methods Be Used With?

Parsers range from very simple scripts to tools powered by advanced AI. There is an immense number of parsers for most applications and languages: you can find ones for emails, CRM, customer data, HTML, big data, accounting apps, etc.

Where to Get a Data Parser?

You can program your own data parser or purchase an existing tool. Neither is “good” or “bad.” They just fit different situations. When writing your own, you can use any language, including SQL.
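As a small illustration of parsing in SQL, the sketch below runs a SQLite query from Python's built-in `sqlite3` module and splits hypothetical `name:amount` strings with SQLite's `instr()` and `substr()` functions. Other databases offer equivalent string functions under different names, so the query is SQLite-specific.

```python
import sqlite3

# In-memory database holding hypothetical raw lines in the form "name:amount".
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE raw_lines (line TEXT)")
conn.executemany(
    "INSERT INTO raw_lines VALUES (?)",
    [("Ada Lovelace:120.50",), ("Alan Turing:89",), ("malformed line",)],
)

# Split each line at its colon and cast the amount to a number; lines without a colon are skipped.
rows = conn.execute(
    """
    SELECT substr(line, 1, instr(line, ':') - 1)            AS customer,
           CAST(substr(line, instr(line, ':') + 1) AS REAL) AS total
    FROM raw_lines
    WHERE instr(line, ':') > 0
    ORDER BY customer
    """
).fetchall()
conn.close()
print(rows)
```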

A few things to remember when getting a data parser:

- Parsers often come as part of a web scraping tool stack. When you do not need web scraping, the parser will be integrated into your existing system.

- You might need to hire a specialist to set up, modify, and troubleshoot your parser and its models.

- You might need a server and a proxy service. Unless you are parsing data from in-house tools or purchased datasets, you will want to protect your IP address from being blocked.
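If you write your own scraper, routing requests through a rotating pool of proxies can be sketched with the standard `urllib.request` module. The proxy addresses below are hypothetical placeholders from the 203.0.113.0/24 documentation range; a real proxy service supplies its own endpoints, often with authentication or a single rotating gateway instead of a list.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints (203.0.113.0/24 is reserved for documentation).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)

def next_opener():
    """Return the next proxy URL and an opener that sends HTTP and HTTPS traffic through it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)

# Usage: each request leaves through a different proxy, so no single IP sends all the traffic.
# for url in urls_to_fetch:
#     proxy, opener = next_opener()
#     html = opener.open(url, timeout=10).read()
```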

Pros and Cons of Building Your Own Data Parser

Like any ready-made tool, the parsers and web scrapers you can purchase have their limitations. They are less flexible and cover only the most common tasks. Anything beyond that will need to be custom-built.

Advantages of building your own parser:

- You will not be limited in either the source pool or the task complexity;

- Easier to integrate with a white-label or in-house system, or to parse the data you produce yourself;

- Essential when data analysis is your competitive advantage or your main product: a custom parser is not as easy to replicate.

Disadvantages of building your own parser:

- Significant upfront costs: development, server, model training;

- No vendor help with training and supporting your team;

- Costly to maintain: will require a specialist to take care of manual adjustments.

Pros and Cons of Buying a Data Parser

When you only need to scrape a few specific websites or handle simple tasks, it might be cost-efficient to purchase a data parser or web scraping tool.

Advantages:

- Usually comes with a server;

- After the upfront costs of the purchase, the tool is low maintenance;

- Limited options mean straightforward configuration and a user-friendly setup process;

- Well-designed user training and specialized troubleshooting support.

Downsides:

- Generic solutions, less flexibility, less control over the settings and models;

- It still comes with maintenance costs;

- Your competitors can use the very same tool;

- Not all open-source tools support essential IP rotation or proxies;

- No control over the direction and priorities of the updates.

What are the Most Popular Web Scraping Tools?

On top of ready-made tools, there are intermediate solutions like APIs and programming libraries. You will still have to write some code, but it will be easier.

- Web scraping programming libraries for different languages: Puppeteer and Cheerio (Node.js), BeautifulSoup (Python);

- Web scraping applications, frameworks, and extensions: PySpider, Parsehub, Octoparse, ScrapingBee, DiffBot, ScrapeBox, ScreamingFrog, Scrapy, Import.io, Frontera, Simplescraper.io, DataMiner, Portia, WebHarvy, FMiner, ProWebScraper.

Parsing organizes “raw” information blocks into a structured and usable form. It uses relationship logic or rules (i.e., syntax) to connect its elements, making it “more digestible” for humans or other applications.

Parsing lets you get more value from abundant data by making its processing more accessible and cost-efficient. Once big data has been parsed, we can analyze it and notice details that are hard to detect amid the clutter.

Originally published on SOAX proxies blog
