Prerequisite : Before reading this blog, you should be somewhat familiar with Docker (a container engine) concepts such as containers and images.

Kubernetes (K8s) is an open-source container management tool, i.e. it is used for container orchestration.

Before moving further, I would like to tell you about the traditional (monolithic) application architecture and the newer microservices architecture.

For an easy example, consider a website which consists of multiple components such as FrontEnd, BackEnd and Database.

Monolithic-Architecture : In a monolithic architecture we compile and deploy all the components of our application together on a single server.

In a monolithic architecture, suppose we want to change the frontend of the application. To do so, we have to redeploy the whole application after making the change. In another scenario, suppose we want some components deployed on more servers than others: for example, we want the backend (component-1) and the database (component-2) on 5 servers but the frontend (component-3) on only 2. A monolithic architecture does not allow this, since its components are compiled and deployed together as one unit, so every copy contains all three components. Hence a monolithic architecture makes our applications far less scalable.

This is where micro-services come in, which solve this scalability problem.

Micro-services : In a micro-services architecture we split the application into components and deploy them independently, possibly on different servers, so that each component can be modified and scaled on its own without touching the rest, thus increasing scalability.
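To make the scaling difference concrete, here is a minimal Python sketch that reuses the numbers from the example above (backend and database on 5 servers, frontend on 2). The function names are made up for illustration; this is simple arithmetic, not something Kubernetes provides.

```python
# Desired number of copies per component, taken from the example above.
desired_copies = {"frontend": 2, "backend": 5, "database": 5}


def monolith_instances(desired):
    """A monolith ships every component together, so the whole bundle
    must be replicated as often as the most demanding component."""
    bundles = max(desired.values())
    return {name: bundles for name in desired}


def microservice_instances(desired):
    """With micro-services each component is scaled on its own."""
    return dict(desired)


mono = monolith_instances(desired_copies)
micro = microservice_instances(desired_copies)
print("monolith:      ", mono, "total =", sum(mono.values()))
print("micro-services:", micro, "total =", sum(micro.values()))
```

The monolith ends up running 5 copies of the frontend even though only 2 are wanted, which is exactly the waste that independent scaling avoids.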

This idea of running components in independent environments gives us fault isolation: because each component is isolated in its own environment, a change or failure in one component's environment does not affect the environments of the other components.

The figure above demonstrates the architectural difference between monolithic and micro-services.

Now the question arises: how do we manage these micro-services, and how do they communicate with each other ?

The answer is Kubernetes (k8s), since it is an orchestration tool.

Orchestration means deploying and managing containers and automating the operations performed on them.

The main features of an orchestration tool include :

1.Deployment

2.Zero-downtime

3.Scalability

4.Self-healing containers

Let's now talk about k8s cluster :

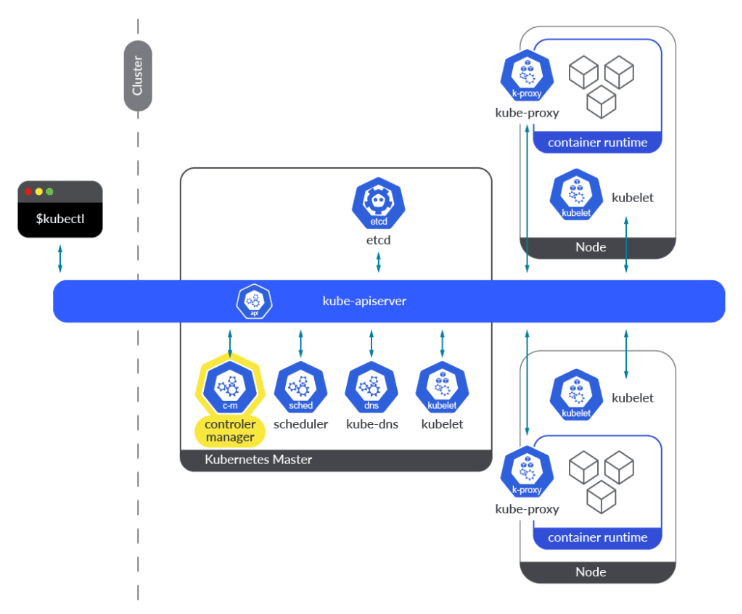

A Kubernetes cluster is a set of nodes that run containerized applications. Its main parts, together with the tool used to talk to it, are :

1.kubectl: It is the command-line interface (CLI) used to interact with the control-plane (earlier known as the Master Node).

2.Control-Plane: It manages the worker-nodes and the workloads running on them, and makes cluster-wide decisions such as scheduling.

3.Worker-Nodes: These are the servers (physical or virtual machines) on which the micro-services run as containerized applications.

Before moving forward, let me tell you about some of the networking resources provided by k8s. I will explain k8s Services in depth in another blog, but for now you can check them all out at : https://kubernetes.io/docs/concepts/services-networking/

One of the resources described there is Ingress. Basically, when a user sends a request from outside the cluster, an Ingress routes it to the Service of a specific micro-service inside the cluster, depending on the routing rules defined for it.

Here is the technical definition and an example of Ingress from the k8s documentation:

Let us take an example to understand ingress:

Suppose you go to a website, say google.com. You are basically making an HTTP request. If the application is running in containers on k8s, an Ingress handles this request and routes it to the specific micro-service, and that micro-service then sends a response back to the user.
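The routing idea can be sketched in a few lines of Python. This is only a simplified model of rule-based routing, not how a real Ingress controller is implemented; the host name, the paths and the service names below are all hypothetical.

```python
# Hypothetical routing rules: (host, path prefix, backend service name).
RULES = [
    ("shop.example.com", "/", "frontend-svc"),
    ("shop.example.com", "/api", "backend-svc"),
    ("shop.example.com", "/api/payments", "payments-svc"),
]


def route(host, path, rules=RULES):
    """Return the service for the longest matching path prefix, or None."""
    best = None
    for rule_host, prefix, service in rules:
        if host != rule_host:
            continue
        # Match on whole path segments: "/api" matches "/api/x" but not "/apix".
        base = prefix.rstrip("/")
        if path == base or path.startswith(base + "/"):
            if best is None or len(prefix) > len(best[0]):
                best = (prefix, service)
    return best[1] if best else None


print(route("shop.example.com", "/index.html"))
print(route("shop.example.com", "/api/users"))
print(route("shop.example.com", "/api/payments/42"))
print(route("other.example.com", "/"))
```

When several rules match, the sketch prefers the longest prefix, so a request for `/api/payments/42` goes to the payments service rather than to the more general backend.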

Now let us talk about pods, which are what these k8s Services route traffic to:

Pods : These are the smallest scheduling units in a k8s cluster; containers run inside k8s pods.

A pod consists of one or more containers, storage volumes, a unique network IP address, and options that govern how the containers should run.

A pod is the smallest execution unit in Kubernetes. A pod encapsulates one or more containers of an application. Pods are ephemeral by nature: if a pod (or the node it executes on) fails, Kubernetes can automatically create a new replica of that pod to continue operations, provided the pod is managed by a controller.

Pods can be used in either of the following ways:

1.A container is running in a pod. This is the most common usage of pods in Kubernetes. You can view the pod as a single encapsulated container, but Kubernetes directly manages pods instead of containers.

2.Multiple containers that need to be coupled and share resources run in a pod. In this scenario, an application contains a main container and several sidecar containers, as shown in the pod image. For example, the main container is a web server that provides file services from a fixed directory, and a sidecar container periodically downloads files to the directory.
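As a rough illustration of the second case, the sketch below builds a pod manifest as a Python dictionary and prints it as JSON. The field names (`apiVersion`, `kind`, `spec.containers`, `volumeMounts`, `emptyDir`) are standard pod fields, but the pod name, the images, the download URL and the helper function are assumptions chosen for this example.

```python
import json

# A web server plus a sidecar that refreshes the files it serves.
# Both containers mount the same emptyDir volume, which is how they share files.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-with-sidecar"},  # hypothetical name
    "spec": {
        "volumes": [{"name": "shared-files", "emptyDir": {}}],
        "containers": [
            {
                "name": "web-server",
                "image": "nginx",
                "volumeMounts": [
                    {"name": "shared-files", "mountPath": "/usr/share/nginx/html"}
                ],
            },
            {
                "name": "file-downloader",
                "image": "busybox",
                "command": [
                    "sh", "-c",
                    "while true; do wget -q -O /data/index.html "
                    "http://example.com; sleep 60; done",
                ],
                "volumeMounts": [{"name": "shared-files", "mountPath": "/data"}],
            },
        ],
    },
}


def shared_volume_names(pod):
    """Names of the volumes that every container in the pod mounts."""
    mounts = [
        {m["name"] for m in c.get("volumeMounts", [])}
        for c in pod["spec"]["containers"]
    ]
    return set.intersection(*mounts) if mounts else set()


manifest = json.dumps(pod, indent=2)
print(manifest)
print("shared volumes:", shared_volume_names(pod))
```

Each container sees the shared volume under its own mount path, so the sidecar writes to `/data` while the web server reads the same files from its document root.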

In Kubernetes, pods are rarely created directly. Instead, k8s controllers such as Deployments and Jobs are used to manage pods. Controllers can create and manage multiple pods, and provide replica management, rolling upgrade, and self-healing capabilities. A controller generally uses a pod template to create corresponding pods.

NOTE : A worker-node can have multiple pods running inside it.

For a more detailed explanation of pods you can visit this Huawei Cloud documentation on k8s pods :

https://support.huaweicloud.com/intl/en-us/basics-cce/kubernetes_0006.html

Now that I have discussed pods and a little bit about worker-nodes (which run micro-services as containers), let us deep-dive into the components of worker-nodes:

Worker-nodes in k8s run the kubelet, kube-proxy and pods with containers inside them, as described earlier and in the figure above. The containers themselves are started by a container runtime installed on the node.

Let me tell you about a technical term: CRI.

CRI : The Container Runtime Interface (CRI) is the main protocol for communication between the kubelet and the container runtime.

NOTE : CRI-O and containerd are examples of container runtimes that implement the CRI (Container Runtime Interface) and can therefore be used with Kubernetes.

The figure above demonstrates the 3 components (kubelet, kube-proxy and pods) of a worker-node.

Let us now discuss kubelet and kube-proxy in detail :

Features/definition of Kubelet:

1.Kubelet is the primary node agent, present on every worker-node of a k8s cluster. It registers the node with the kube-apiserver; it creates, runs, deletes and monitors the pods on its node; and it reports their status to the kube-apiserver.

2.Kubelet works from a PodSpec, which is a YAML or JSON object that describes a pod: its containers, the environment variables for those containers and other properties. As mentioned earlier, these are the properties a pod needs in order to run containers. The PodSpec is primarily received from the kube-apiserver. Using the PodSpec, kubelet regularly monitors the pod and ensures that its containers are running and healthy according to the specification. Kubelet also regularly reports the current health status of the pod to the kube-apiserver.

For more information about other ways to provide a PodSpec to kubelet besides the kube-apiserver, you can visit this documentation on kubelet by k8s :

https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet/

Kube-proxy : It maintains the network rules on each node of the kubernetes cluster so that a request for a specific micro-service reaches a correct pod.

Features of kube-proxy :

1.The network rules maintained by kube-proxy allow communication with pods from network sessions inside or outside the cluster.

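2.Kube-proxy does not hand out IP addresses itself (pod IPs come from the cluster's network plugin). Instead, it forwards traffic sent to a Service's stable virtual IP to one of the pods behind that Service.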
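The forwarding idea can be pictured with a toy Python model. Keep in mind that the real kube-proxy does not forward packets in application code like this; it programs rules such as iptables or IPVS on the node, and the way a backend is chosen depends on the mode. The class, the IP addresses and the round-robin choice below are all assumptions made for illustration.

```python
import itertools


class ServiceProxy:
    """Toy model: maps a Service's virtual IP to the pods behind it."""

    def __init__(self):
        self._backends = {}  # service IP -> list of pod IPs
        self._cursors = {}   # service IP -> round-robin iterator

    def set_endpoints(self, service_ip, pod_ips):
        """Record which pods currently back the given Service IP."""
        self._backends[service_ip] = list(pod_ips)
        self._cursors[service_ip] = itertools.cycle(self._backends[service_ip])

    def pick_pod(self, service_ip):
        """Return the pod IP that should receive the next request, or None."""
        if not self._backends.get(service_ip):
            return None
        return next(self._cursors[service_ip])


proxy = ServiceProxy()
# Hypothetical addresses: one Service IP backed by two pods.
proxy.set_endpoints("10.96.0.10", ["10.244.1.5", "10.244.2.7"])
for _ in range(4):
    print("10.96.0.10 ->", proxy.pick_pod("10.96.0.10"))
```

The caller only ever needs the Service IP; which pod answers can change as pods come and go, which is why the Service address stays stable while pod addresses do not.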

For more detailed explanation you can go to :

https://kubernetes.io/docs/reference/command-line-tools-reference/kube-proxy/

Let us now discuss the kube-controller-manager and k8s controllers :

Kubernetes-Controller-Manager : It is the control-plane component that runs the built-in controllers, so it consists of multiple controllers inside it. It continuously monitors various components of the k8s cluster and works towards restoring them to the desired state. Each controller monitors the current state of the cluster via calls made to the kube-apiserver, and changes the current state to match the desired state described in the cluster's declarative configuration.

So now, what are controllers ?

In robotics and automation, a control loop is a non-terminating loop that regulates the state of a system.

In Kubernetes, controllers are control loops that watch the state of your cluster, then make or request changes where needed. Each controller tries to move the current cluster state closer to the desired state, and it does so by communicating with the kube-apiserver.

K8s controllers are programs that each perform one specific task; the built-in ones run inside the controller-manager. The resources a controller manages have a spec field which specifies their desired state.
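A control loop of this kind can be sketched in plain Python. This is a minimal illustration of the "compare desired state with current state, then act" idea, not the actual controller code; the function names and the resource names are invented for the example.

```python
def reconcile(desired, current):
    """Return the actions that would move `current` toward `desired`.

    Both arguments map a resource name to a replica count.
    """
    actions = []
    for name, want in desired.items():
        have = current.get(name, 0)
        if have < want:
            actions.append(("create", name, want - have))
        elif have > want:
            actions.append(("delete", name, have - want))
    # Anything running that is no longer desired gets removed.
    for name, have in current.items():
        if name not in desired and have > 0:
            actions.append(("delete", name, have))
    return actions


def apply_actions(actions, current):
    """Apply the actions to a copy of `current` and return the new state."""
    state = dict(current)
    for verb, name, count in actions:
        delta = count if verb == "create" else -count
        state[name] = state.get(name, 0) + delta
    return {name: count for name, count in state.items() if count > 0}


desired = {"frontend": 2, "backend": 5}
current = {"frontend": 3, "backend": 1, "legacy": 1}

actions = reconcile(desired, current)
print(actions)
current = apply_actions(actions, current)
print(current)
print(reconcile(desired, current))  # nothing left to do once states match
```

A real controller repeats this comparison continuously, so any drift that appears later (for example a crashed pod) is picked up on a following pass.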

What does the kube-apiserver do ?

1.It authenticates users, validates requests, retrieves data, updates etcd and communicates with the other components of the cluster.

2.We can communicate with the kube-apiserver via the kubectl CLI. No component in the k8s cluster other than the kube-apiserver communicates with etcd directly.
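The order of these responsibilities (authenticate, validate, then persist) can be shown with a toy Python class. It is only a sketch: the token check, the key layout and the class itself are assumptions for illustration, and the real kube-apiserver does far more (authorization, admission control, watches and so on).

```python
class ToyApiServer:
    """Toy stand-in for the kube-apiserver; not the real implementation."""

    def __init__(self, tokens):
        self._tokens = tokens  # hypothetical: bearer token -> user name
        self._etcd = {}        # stand-in for etcd; only this class touches it

    def create(self, token, obj):
        # 1. Authenticate the caller.
        if token not in self._tokens:
            return 401, "unauthenticated"
        # 2. Validate the request.
        name = obj.get("metadata", {}).get("name")
        if not obj.get("kind") or not name:
            return 422, "kind and metadata.name are required"
        # 3. Persist the object in the datastore.
        key = f"{obj['kind'].lower()}s/{name}"
        if key in self._etcd:
            return 409, f"{key} already exists"
        self._etcd[key] = obj
        return 201, key

    def get(self, token, key):
        if token not in self._tokens:
            return 401, "unauthenticated"
        if key not in self._etcd:
            return 404, "not found"
        return 200, self._etcd[key]


api = ToyApiServer(tokens={"secret-token": "alice"})
pod = {"kind": "Pod", "metadata": {"name": "web"}}
print(api.create("secret-token", pod))
print(api.create("wrong-token", pod))
print(api.create("secret-token", {"kind": "Pod"}))
print(api.get("secret-token", "pods/web"))
```

Because every read and write goes through this one class, the datastore behind it never has to be exposed to anything else, which mirrors the rule that only the kube-apiserver talks to etcd.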

Let us now discuss built-in controllers and custom controllers :

Example of a built-in controller (the Deployment controller) :

The Deployment controller deploys and maintains a specific number of pod replicas, as specified in the spec of the Deployment. If one or more pods go down, the controller notices this through the kube-apiserver and new pods are spun up, so that the current state reaches the desired state again.
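For reference, here is roughly what such a spec looks like. The sketch builds a Deployment manifest as a Python dictionary and prints it as JSON; the fields (`apps/v1`, `replicas`, `selector.matchLabels`, `template`) are standard Deployment fields, while the helper function, the name `frontend` and the `nginx` image are assumptions for this example.

```python
import json


def make_deployment(name, image, replicas):
    """Build a minimal Deployment manifest (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            # Desired state: how many pod replicas should exist.
            "replicas": replicas,
            # Which pods belong to this Deployment.
            "selector": {"matchLabels": dict(labels)},
            # Pod template used whenever a new pod has to be created.
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


deployment = make_deployment("frontend", "nginx", 3)
print(json.dumps(deployment, indent=2))
```

The selector labels must match the labels in the pod template, otherwise the Deployment cannot recognise the pods it creates. Since kubectl accepts JSON as well as YAML, the printed output could be saved to a file and applied with `kubectl apply -f`.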

What are custom-controllers ?

Custom controllers are controllers written by the user to manage k8s resources (often custom ones) according to logic that the user designs.

Now let us discuss the control-plane (Master-Node) in detail :

Controller-manager : I have already explained it.

Kube-API server : I have already explained it.

ETCD : It is the datastore of the kubernetes cluster. etcd is commonly used in distributed systems because it is a replicated (highly available) and secure datastore. Since a kubernetes cluster is itself a distributed system, etcd is a good fit for it.

Features of ETCD :

1.Contains all the information of cluster related to nodes, pods, configs, secrets, bindings, etc.

2.It stores all the data as key-value pairs, hence it is called a key-value datastore.

3.It is replicated: data written to the leader node of etcd is replicated to the other nodes (followers). If the leader goes down, the data is still available on the followers and one of them is elected as the new leader. etcd uses the Raft consensus algorithm for this.
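Two of these properties are easy to illustrate in Python: key-value storage with prefix lookups, and the majority (quorum) that Raft needs in order to keep working. The keys below are only modelled loosely on how Kubernetes lays out its data and the stored values are made up; the quorum arithmetic is the standard majority rule.

```python
# A plain dict standing in for the key-value store (illustrative keys and values).
store = {
    "/registry/pods/default/frontend-1": '{"phase": "Running"}',
    "/registry/pods/default/backend-1": '{"phase": "Pending"}',
    "/registry/configmaps/default/app-config": '{"LOG_LEVEL": "info"}',
}


def list_prefix(store, prefix):
    """Return all keys that start with `prefix`, sorted."""
    return sorted(key for key in store if key.startswith(prefix))


def quorum(members):
    """Smallest majority of a cluster with `members` nodes."""
    return members // 2 + 1


def tolerated_failures(members):
    """How many nodes can fail while a majority is still available."""
    return members - quorum(members)


print(list_prefix(store, "/registry/pods/"))
for size in (1, 3, 4, 5):
    print(size, "members: quorum =", quorum(size),
          "tolerates", tolerated_failures(size), "failure(s)")
```

Note that 4 members tolerate no more failures than 3, which is why etcd clusters are usually run with an odd number of members.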

Scheduler : It continuously watches the kube-apiserver for newly created pods that have no node assigned yet and schedules them onto worker-nodes.

Features of Scheduler :

1.It only decides which pod to place on which node, based on the CPU, RAM and other resources available on the node; it does not start the pod itself.

2.If the scheduler is unable to find a suitable worker-node, the pod remains in the Pending state until one becomes available.
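The decision can be sketched as "filter the nodes that fit, then score them". The Python below is a deliberately simplified model: the real scheduler considers many more criteria, and the node names, the resource numbers and the scoring rule (prefer the node with the most CPU left over) are assumptions for illustration.

```python
def schedule(pod, nodes):
    """Pick a node name for `pod`, or None if no node fits (pod stays Pending).

    CPU is given in millicores and memory in MiB.
    """
    # Filtering: keep only nodes with enough free CPU and memory.
    feasible = [
        node for node in nodes
        if node["free_cpu"] >= pod["cpu"] and node["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None
    # Scoring: prefer the node with the most resources left after placement.
    best = max(
        feasible,
        key=lambda node: (node["free_cpu"] - pod["cpu"], node["free_mem"] - pod["mem"]),
    )
    return best["name"]


nodes = [
    {"name": "node-a", "free_cpu": 500, "free_mem": 1024},
    {"name": "node-b", "free_cpu": 2000, "free_mem": 4096},
]

print(schedule({"cpu": 250, "mem": 512}, nodes))
print(schedule({"cpu": 4000, "mem": 512}, nodes))
```

The second call returns `None` because no node has 4000 millicores free, which corresponds to the pod staying in the Pending state.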

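Kube-dns : It is the cluster's DNS service (CoreDNS in current clusters). It is often drawn as part of the master-node, although it actually runs as an add-on inside the cluster. It is useful for giving DNS names to services and pods so that they can be reached by name instead of by IP address.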

Features of kube-dns :

1.You can contact Services with consistent DNS names instead of IP addresses.

2.Kubelet configures Pods' DNS so that running containers can look up Services by name rather than IP.
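The names follow a predictable pattern, which this small Python helper illustrates. The helper itself is hypothetical; the pattern `<service>.<namespace>.svc.<cluster-domain>` and the default cluster domain `cluster.local` are the usual conventions, although a cluster can be configured with a different domain.

```python
def service_dns_name(service, namespace="default", cluster_domain="cluster.local"):
    """Build the fully qualified DNS name of a Service (hypothetical helper)."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


print(service_dns_name("backend"))
print(service_dns_name("database", namespace="prod"))
```

A pod in the same namespace can usually reach the Service with just the short name (here `backend`), while the full name works from any namespace.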

Workflow of Master-Node :

When we create a pod, the following happens:

1.We send the command for creating a pod to the kube-apiserver via kubectl.

2.The kube-apiserver validates the request and stores the new pod in the etcd datastore.

3.The scheduler, which continuously watches the kube-apiserver, detects the new pod and selects a worker-node for it. This assignment is stored in etcd through the kube-apiserver.

4.The kubelet on the selected worker-node learns about the pod from the kube-apiserver and creates it by starting its containers through the container runtime.

5.The kubelet reports back to the kube-apiserver that the new pod has been created, and this status is stored in etcd.
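The workflow can be walked through with a tiny Python simulation. Everything here is a stand-in: the dictionary plays the role of etcd, the function plays all the components in sequence, and the node choice (simply the first node) is an assumption. In a real cluster these components run independently and react to changes through the kube-apiserver.

```python
def create_pod_workflow(pod_name, nodes):
    """Simulate the life of a pod creation request; returns (pod record, log)."""
    etcd = {}  # stand-in for etcd; in reality only the kube-apiserver writes to it
    log = []

    # kubectl -> kube-apiserver: the request is validated and stored.
    key = f"pods/{pod_name}"
    etcd[key] = {"node": None, "phase": "Pending"}
    log.append("apiserver: stored pod, phase=Pending")

    # The scheduler notices a pod without a node and picks one.
    if not nodes:
        log.append("scheduler: no node available, pod stays Pending")
        return etcd[key], log
    node = nodes[0]  # simplification: take the first node
    etcd[key]["node"] = node
    log.append(f"scheduler: bound pod to {node}")

    # The kubelet on that node sees the assignment and starts the containers.
    log.append(f"kubelet@{node}: started containers")

    # The kubelet reports the status, which the kube-apiserver stores.
    etcd[key]["phase"] = "Running"
    log.append("apiserver: stored pod, phase=Running")
    return etcd[key], log


pod, log = create_pod_workflow("web", ["node-a", "node-b"])
for line in log:
    print(line)
print(pod)
```

Calling the function with an empty node list shows the other branch, where the pod is stored but never leaves the Pending phase.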

The picture above demonstrates the k8s cluster and everything we have learned so far.

Congrats on making it this far. If you have clearly understood everything up to here, you can say you are now somewhat familiar with the k8s architecture. If you still want a more detailed explanation, you can visit the documentation at : https://kubernetes.io
