This article was originally posted on SigNoz Blog and is written by Muskan Paliwal.

Modern-day software systems emit millions of log lines per minute. Cloud computing and containerization have made it easy to build distributed systems, and distributed systems emit logs from many sources. While developers have always used logs to debug stand-alone applications, centralized logging addresses the challenges of modern-day distributed software systems.

What is centralized logging?

Centralized logging is a method of collecting and storing log data from multiple sources in a central location. This makes managing and analyzing log data effective and efficient for organizations. Centralized logging was developed as a way to improve the management and analysis of log data in large and complex systems.

As organizations grow and their products become more popular, they often need to scale their systems to handle the increased demand. One way to do this is by using distributed systems, which allow an organization to spread workloads across multiple servers or devices. This can help improve performance, reliability, and scalability.

However, implementing and managing a distributed system can be challenging. One key challenge is log management and analysis. In a distributed system, log data is typically generated by multiple servers, applications, and devices, often spread across different locations or even different regions. By implementing centralized logging, organizations can more easily search and analyze the logs from multiple sources in a single place, identify trends and patterns in the logs, and troubleshoot issues more quickly.

Why are logs essential?

Logs play a critical role in the functioning and management of any system or application. They provide valuable insights into the state and activity of the system and are essential for debugging and troubleshooting issues, meeting compliance requirements, and making informed decisions.

By collecting and analyzing log data, organizations can identify and address problems, monitor performance, and gather valuable data about their operations. Therefore, logs are a vital part of any system or application, and their proper management is crucial for the smooth operation and success of the organization.

Components of a centralized logging system

The components of a centralized logging system typically include:

Log collection: This involves gathering logs from various sources, such as servers, applications, and devices. There are various methods for collecting logs, including syslog, Filebeat, and custom log shippers. OpenTelemetry Collectors can also help in collecting logs from multiple sources.

Log storage: This involves storing the collected logs in a central repository, such as a log server or a cloud-based log storage service. The logs are typically structured and indexed in a way that makes them easy to search and analyze.

Log analysis and visualization: This involves using specialized tools and processes to analyze and visualize the logs. This can include setting up index patterns, creating visualizations, and setting up alerting and notification rules.
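As a rough illustration of how these three components fit together, here is a minimal in-memory sketch. The source names, sample records, and the helper functions `collect` and `store` are all hypothetical; a real system would use a log shipper and a dedicated log store instead.

```python
import heapq
from datetime import datetime

# Hypothetical per-source logs; each source is already ordered by time.
SOURCES = {
    "web-1": [
        ("2023-01-10T10:00:01", "INFO", "GET /health 200"),
        ("2023-01-10T10:00:05", "ERROR", "upstream timeout"),
    ],
    "db-1": [
        ("2023-01-10T10:00:03", "WARN", "slow query: 1200 ms"),
    ],
}

def collect(sources):
    """Collection: tag every record with its source and merge by timestamp."""
    streams = (
        [{"ts": datetime.fromisoformat(ts), "source": name, "level": lvl, "msg": msg}
         for ts, lvl, msg in records]
        for name, records in sources.items()
    )
    return heapq.merge(*streams, key=lambda r: r["ts"])

def store(records):
    """Storage: keep records in one place, indexed by level for lookup."""
    central, by_level = [], {}
    for rec in records:
        central.append(rec)
        by_level.setdefault(rec["level"], []).append(rec)
    return central, by_level

# Analysis: one query over all sources instead of one per machine.
central, by_level = store(collect(SOURCES))
for rec in by_level.get("ERROR", []):
    print(rec["ts"].isoformat(), rec["source"], rec["msg"])
```

The point of the sketch is the shape of the pipeline: once records from every source carry a source tag and share one timeline, a single query answers questions that would otherwise require logging in to each machine.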

Why is centralized logging important?

Centralized logging is a key tool for managing and analyzing log data more efficiently and effectively. By collecting and storing log data from multiple sources, organizations can easily:

- Improve security and compliance by more easily monitoring and auditing the logs to meet regulatory requirements.

- Enhance visibility and debugging capabilities by allowing for easier search and analysis of the logs from multiple sources. Aggregating logs from many sources on demand is difficult, so having them all in one location makes this work much easier.

- Identify trends and patterns, troubleshoot issues, meet compliance requirements, and improve the performance and reliability of their systems without having to chase log data across many locations, especially in scaled systems.

- Protect the privacy and security of log data by storing it in a central location that is carefully secured and monitored.

Overall, centralized logging is essential for the smooth operation and success of any organization that generates log data.

Best practices for centralized logging

To ensure that centralized logging is effective, efficient, and secure, organizations should follow best practices such as:

- Set up log rotation and retention policies: Establish policies for rotating and deleting old logs so that log data does not consume too much storage space. Rotation policies periodically rotate or archive logs to keep the storage from filling up, while retention policies ensure that logs are kept for the appropriate length of time for compliance and other purposes, such as auditing or troubleshooting.

- Set up alerting and notification systems: Configure alerting and notification systems to notify you when certain conditions are met in the logs, such as when an error occurs or a security threat is detected. This helps organizations identify and respond to issues quickly.

- Ensure data privacy and security: Take steps to protect the privacy and security of log data, such as encrypting the logs in transit and at rest and restricting access to the logs to authorized personnel only. This can help prevent unauthorized access to the logs and protect sensitive information contained in the logs.

- Choose the right logging solution: Select a logging solution that meets the needs of your organization in terms of scalability, performance, and features. Consider factors such as the volume and variety of log data, the needs of the organization in terms of log analysis and visualization, and the budget and resources available for the logging infrastructure.

- Implement log parsing and analysis: Set up log parsing rules to extract important fields from the logs, and implement log analysis and visualization tools to help you search and analyze the logs more effectively. Log parsing can help extract relevant data from the logs, such as timestamps, severity levels, and error messages, making it easier to search and analyze the logs.

Log analysis and visualization tools can provide graphical representations of the log data, allowing organizations to identify trends and patterns in the logs and troubleshoot issues more efficiently.

- Monitor and maintain the logging infrastructure: Regularly monitor the logging infrastructure to ensure that it is functioning properly, and take steps to maintain it and keep it up to date. This can include monitoring the health and performance of the log server, applying updates and patches, and testing the logging system to ensure that it is working as expected.

- Implement policies and procedures for log management: Establish policies and procedures for managing log data, including guidelines for log collection, storage, analysis, and retention. These policies and procedures can help ensure that the logging infrastructure is properly configured and maintained and that log data is collected, stored, and analyzed consistently and efficiently.
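To make the log parsing practice above more concrete, here is a small sketch that extracts the timestamp, severity level, and message from plain-text lines. The line layout (`<timestamp> <LEVEL> <message>`), the sample lines, and the `parse_line` helper are assumptions for illustration only; real logs need a pattern that matches their actual format.

```python
import re
from collections import Counter

# Assumed line layout: "<timestamp> <LEVEL> <message>"
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$"
)

def parse_line(line):
    """Return a dict of extracted fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line.strip())
    return match.groupdict() if match else None

# Hypothetical sample input, including one line that does not fit the layout.
sample_lines = [
    "2023-01-10T10:00:01Z INFO user login succeeded",
    "2023-01-10T10:00:02Z ERROR payment service unreachable",
    "not a structured line",
]

parsed = [p for p in map(parse_line, sample_lines) if p is not None]
levels = Counter(p["level"] for p in parsed)
print(levels)
```

Once lines are turned into fields, counting by severity or filtering by time range becomes a dictionary lookup rather than a text search, which is what makes search and alerting on centralized logs practical.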

By following these best practices, organizations can ensure that their centralized logging setup is effective in managing and analyzing log data and secure in protecting the privacy and security of the log data.
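The rotation and retention practice can be sketched on the application side with Python's standard `logging.handlers.RotatingFileHandler`. The file name, the 1 KB size limit, and the backup count of 3 are arbitrary values chosen for the demo, and a temporary directory stands in for a real log directory.

```python
import logging
import logging.handlers
import os
import tempfile

log_dir = tempfile.mkdtemp()  # stand-in for a real log directory
log_path = os.path.join(log_dir, "app.log")

# Rotate before the active file exceeds 1 KB; keep at most 3 rotated files.
# Older files are deleted automatically, which acts as a simple retention rule.
handler = logging.handlers.RotatingFileHandler(
    log_path, maxBytes=1024, backupCount=3
)
handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(message)s"))

logger = logging.getLogger("rotation_demo")
logger.setLevel(logging.INFO)
logger.propagate = False  # keep demo output out of the root logger
logger.addHandler(handler)

# Write enough lines to trigger several rotations.
for i in range(200):
    logger.info("request %d handled", i)

handler.close()
files = sorted(os.listdir(log_dir))
print(files)
```

If retention should be expressed in time rather than size, the standard library's `TimedRotatingFileHandler` follows the same pattern with a rotation interval instead of a byte limit. Retention for the central log store itself is usually configured in the storage backend rather than in application code.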

Implement centralized logging using OpenTelemetry and SigNoz

SigNoz is an open source APM that provides logs, metrics, and traces under a single pane of glass. You can also correlate the telemetry signals to drive contextual insights faster. Using an open source APM has its advantages. You can self-host SigNoz in your environment to adhere to data privacy guidelines. Moreover, SigNoz has a thriving Slack community where you can ask questions and discuss best practices.

SigNoz uses OpenTelemetry to collect logs. OpenTelemetry is a Cloud Native Computing Foundation (CNCF) project aimed at standardizing how we instrument applications for generating telemetry data (logs, metrics, and traces).

OpenTelemetry provides a stand-alone service known as OpenTelemetry Collector, which can be used to centralize the collection of logs from multiple sources.

The OpenTelemetry Collector also comes with various receivers and processors for collecting first-party and third-party logs, either directly or through existing agents such as FluentBit. Collecting logs with OpenTelemetry can help you set up a robust observability stack.

Let us see how you can collect application logs with OpenTelemetry.

Collecting application logs with OpenTelemetry

There are two ways to collect application logs with OpenTelemetry:

- Via File or Stdout Logs

Here, the application's logs are collected directly by the OpenTelemetry Collector using receivers like the filelog receiver. Operators and processors then parse them into the OpenTelemetry log data model.

For advanced parsing and collecting capabilities, you can also use something like FluentBit or Logstash. The agents can push the logs to the OpenTelemetry collector using protocols like FluentForward/TCP/UDP, etc.

- Directly to OpenTelemetry Collector

In this approach, you modify the logging library used by the application so that it uses the logging SDK provided by OpenTelemetry and forwards the logs directly from the application to the OpenTelemetry Collector. This approach removes the need for agents or other intermediaries but loses the simplicity of having the log file available locally.

For centralized logging, you can use various receivers and processors provided by OpenTelemetry along with OpenTelemetry collector to collect logs from multiple sources. You can find more details on how to collect logs with OpenTelemetry here.
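For the file or stdout approach, it helps if the application writes one structured record per line so that a receiver has less parsing work to do. The following is a minimal sketch using only Python's standard library. The `JsonFormatter` class, the `checkout` logger name, and the field names `timestamp`, `severity`, and `body` are illustrative choices, not a format required by OpenTelemetry or SigNoz, and the collector side would still need a matching JSON parsing step configured.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object per line (illustrative fields)."""

    def format(self, record):
        return json.dumps({
            "timestamp": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "severity": record.levelname,
            "logger": record.name,
            "body": record.getMessage(),
        })

stream = io.StringIO()  # a real service would pass sys.stdout or a file here
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("checkout")  # hypothetical service name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addHandler(handler)

logger.info("order %s placed", "A-1001")
logger.error("payment failed")

lines = stream.getvalue().splitlines()
print("\n".join(lines))
```

Each output line is a self-contained JSON document, so a collector reading the file or container stdout can map the fields onto its log data model without a custom regular expression for every service.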

Using SigNoz to visualize log data

OpenTelemetry provides instrumentation for generating logs, but you still need a backend for storing, querying, and analyzing them. SigNoz, a full-stack open source APM, is built to support OpenTelemetry natively. It uses ClickHouse, a columnar database, to store logs efficiently. Big companies like Uber and Cloudflare have shifted to ClickHouse for log analytics.

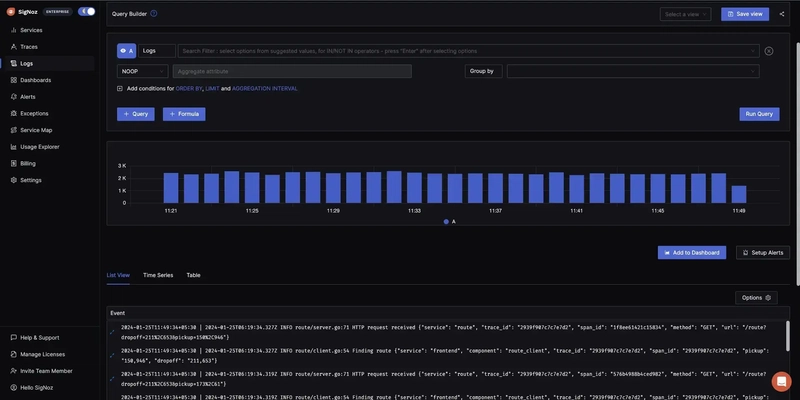

The logs tab in SigNoz has advanced features like a log query builder, search across multiple fields, structured table view, JSON view, etc.

Getting started with SigNoz

SigNoz can be installed on macOS or Linux computers in just three steps by using a simple install script.

The install script automatically installs Docker Engine on Linux. However, on macOS, you must manually install Docker Engine before running the install script.

git clone -b main https://github.com/SigNoz/signoz.git

cd signoz/deploy/

./install.sh

You can visit our documentation for instructions on how to install SigNoz using Docker Swarm and Helm Charts.

Conclusion

Centralized logging is a critical component of any logging infrastructure, as it helps organizations manage and analyze their log data more effectively. By collecting and storing log data from multiple sources in a central location, centralized logging improves the security, visibility, and manageability of log data and enables organizations to make more informed decisions based on the log data.

Handling logs at scale is difficult. A microservices architecture emits millions of log lines per minute. Having a structured approach to logging, adding observability to your software systems, and making it easy for developers to analyze and derive insights from log data is key to having high-performing applications.

SigNoz and OpenTelemetry can help you manage centralized logging effectively. A single, shared logging model combined with centralized logging ensures that all developers use the same fields in their log messages.

As a developer, logs will always be your friend!

Related posts

OpenTelemetry Logs - A Complete Introduction & Implementation

OpenTelemetry Collector - architecture and configuration guide
