Overview

Playing around with Elixir in side projects, I'm always reminded of one of the reasons I love it - how little you have to write. It's perfect for the lazy dev!

This GET endpoint I've made, I think, really highlights that fact. Or maybe it's versatility. I don't know, I think it's cool anyway and wanted to show it off.

The endpoint is for getting a collection of records on a database with a query. Such as domain.com/users?location=Earth. Pretty trivial stuff right? But being able to set up an endpoint like this and throw any field/property at it and sorting by any field/property all dynamically, I think is pretty neat.

TL;DR

Here's the endpoint:

get "/users" do

params = parse_params(conn.params)

resp = User.fetch(params) |> struct_to_json()

send_resp(conn, 200, resp)

end

Here's the query to the database:

def fetch([search, sort]) do

User

|> where(^search)

|> order_by(^sort)

|> Repo.all()

end

How to do it

I'll be starting from the beginning. Creating the elixir project and going all the way to the end. The vast majority of which will be set up and configuration but I'll try to keep this to a minimum and only explain as little as is needed for the point of this article. If you're new to Elixir, this might be beneficial to you, but this is supposed to show you how I personally achieved this cool endpoint rather than a lesson per se.

If you already feel confident with Elixir, feel free to browse the Github repository and enjoy your day 😄

Dependencies

First, we'll create our project with a supervision tree mix new super_get --sup. We'll go into our project folder named super_get and put the following dependencies into the deps function within the file mix.exs

defp deps do

[

{:jason, "~> 1.2"},

{:plug_cowboy, "~> 2.1"},

{:ecto_sql, "~> 3.4"},

{:postgrex, "~> 0.15.3"}

]

end

then run mix deps.get in your terminal to download them. Whilst we're in mix.exs, let's add the cowboy plug to extra_applications like the following:

extra_applications: [:logger, :plug_cowboy],

We're telling the project that we want cowboy up and running before the rest of the code.

DB

We're going to need a DB to store and retrieve records, so let's create a quick local one with docker-compose:

version: "3"

services:

db:

image: "postgres"

env_file: database.env

container_name: "superduper_DB"

ports:

- "5432:5432"

volumes:

- dbdata:/var/lib/postgresql/data

volumes:

dbdata:

and an env file for convenience:

POSTGRES_USER=postgres

POSTGRES_PASSWORD=postgres

POSTGRES_DB=users

all in the root of our super_get project. In the terminal running docker-compose up, hopefully everything should go well. Open up your favourite DB client (that supports PostgreSQL) and we'll just connect to it for now as a sanity check.

This is how I did it. You can see the information from the database.env file from earlier for the details and the rest is defaults. Hopefully, you were able to connect like me.

Connecting Elixir to DB

In our terminal we're going to run mix ecto.gen.repo -r SuperGet.Repo. This will generate a few files, one of which we're going to change - config/config.exs - to the following:

import Config

config :super_get, ecto_repos: [SuperGet.Repo]

config :super_get, SuperGet.Repo,

database: "users",

username: "postgres",

password: "postgres",

hostname: "localhost"

Now we can create the DB that Ecto, the DB dependency from before can use. Run mix ecto.create -r SuperGet.Repo. At this point, you might want to run iex -S mix to jump into the interactive elixir terminal to see if there are any errors as another sanity check. ctrl+c twice to exit out of it.

Cowboy config

Now we're going to add some configuration information into lib/super_get/application.ex like so:

defmodule SuperGet.Application do

use Application

def start(_type, _args) do

repo = [SuperGet.Repo]

children =

[

Plug.Cowboy.child_spec(

scheme: :http,

plug: SuperGet,

options: [port: 4000]

)

] ++ repo

opts = [strategy: :one_for_one, name: SuperGet.Supervisor]

Supervisor.start_link(children, opts)

end

end

Let's add an endpoint to check if the cowboy is working as expected. In lib/super_get.ex add the following:

defmodule SuperGet do

use Plug.Router

plug(Plug.Logger)

plug(:match)

plug(Plug.Parsers, parsers: [:json], json_decoder: Jason)

plug(:dispatch)

get "/ping" do

send_resp(conn, 200, "pong!")

end

match _ do

send_resp(conn, 404, "oops... Nothing here :(")

end

Check out

Elixir: Building a Small JSON Endpoint With Plug, Cowboy and Poison

Jon Lunsford ・ May 10 '19 ・ 6 min read

for some good explanations about the plugs at the top of the file.

Let's check it. In the terminal, let's run interactively iex -S mix so we can see any messages a bit easier. Then, visit localhost:4000/ping and hopefully you see pong!.

Creating the user table

For this whole example, we're going to create a classic guide of generic users. Let's create an Ecto migration file in the terminal, mix ecto.gen.migration users. This will generate folder and files, priv/repo/migrations/[date time]_users.exs. Open up that file and we can specify what the columns will be.

defmodule SuperGet.Repo.Migrations.User do

use Ecto.Migration

def change do

create table(:users) do

add(:name, :string)

add(:location, :string)

add(:occupation, :string)

timestamps()

end

end

end

If you're not familiar with using Ecto or Elixir in general timestamps() is a nice freebie that adds inserted_at and updated_at for us and we don't have to do anything with it - Ecto has our backs.

With that in place, let's migrate with mix ecto.migrate in the terminal. In your DB client, you could run a query, select * from users and see those columns; no data yet. Let's add a record manually for testing. For now, one will do. I did the following:

insert into users(name, location, occupation, inserted_at, updated_at)

values ('Tom', 'Birmingham', 'Dev', now(), now())

Get a user

Time to write some actual code 😄. Let's create a new file named lib/user.ex to interact with this data on the DB. Most of this file will be Ecto boilerplate, so if you are following along see the full file . The lines of importance to fetch that 1 record from an endpoint are:

def get(id: id), do: get(%{id: id})

def get(%{id: id}), do: Repo.get(User, id)

The first line just saves us from typing 3 extra characters in the future. See what I mean about being lazy and making life as easy as possible... Anyway, now we should be able to go into our Elixir terminal with iex -S mix again and when in there SuperGet.User.get(id: 1) and hopefully you get a similar return as me:

%SuperGet.User{

__meta__: #Ecto.Schema.Metadata<:loaded, "users">,

id: 1,

inserted_at: ~N[2020-09-26 16:57:28],

location: "Birmingham",

name: "Tom",

occupation: "Dev",

updated_at: ~N[2020-09-26 16:57:28]

}

So, we now have a function in our application that can retrieve from the DB that's a bit easier. Let's create an endpoint for this.

Returning a user to client

Hold your horses' kiddo (10/10 cowboy pun). If we look at that return, there are a few reasons why we can't just return that; mainly because it's not valid JSON. So let's do that next.

Our JSON encoder, aptly named, Jason, won't let us. It needs a map and we have a struct. There are better ways of doing this (Jason deriving) but for the sake of transparency, we'll create a couple of helper functions at the bottom super_get.ex

defp struct_to_json(%{} = struct) do

struct |> struct_to_map() |> Jason.encode!()

end

defp struct_to_map(%{} = struct) do

struct

|> Map.from_struct()

|> Map.drop([:__meta__])

end

Now, let's finish that endpoint. In the same file, perhaps above get "ping" do line. Add the following:

get "/user/:id" do

user = User.get(id: id)

case user do

%{} -> send_resp(conn, 200, struct_to_json(user))

nil -> send_resp(conn, 404, "Not found")

_ -> send_resp(conn, 400, "Bad request")

end

end

You've seen how that SuperGet.User.get(id: 1) works. Then we pattern match (❤️) on that result for the response and send back the response. Now, we should be able to go to localhost:4000/user/1 and receive something like the following:

{

"id": 1,

"inserted_at": "2020-09-26T16:57:28",

"location": "Birmingham",

"name": "Tom",

"occupation": "Dev",

"updated_at": "2020-09-26T16:57:28"

}

We now have a successful endpoint getting a single record from the DB.

Adding a bunch of data to the DB

We don't just want one record though, we're here for the mega queries. For that, we need lots of records. You could manually put in the data from your DB client as we did before, buuut that's a lot of effort and we might need to repeat that later down the line. Let's make some seed data for the DB and improve our experience.

This calls for a new file, lib/seeds.ex with all the records we might want. It's going to be big but here's a snippet of what we want. See the full file here

defmodule SuperGet.Seeds do

alias SuperGet.{Repo, User}

def add_default_users(users) do

users |> Enum.map(&Repo.insert(&1))

end

def default_users do

[

%User{

name: "Tom",

location: "Birmingham",

occupation: "Dev"

}

]

end

end

Just with many more %User{}. Next, as this is outside of the scope of our application, we're going to create an exs file to execute this, priv/repo/seeds.exs. And add the following 1 line:

SuperGet.Seeds.default_users() |> SuperGet.Seeds.add_default_users()

Assuming there are cases where you want to drop and re-set up your ecto tables, we'll create an alias in mix.exs. In the project function, add aliases: aliases() and at the bottom of the file the following:

defp aliases do

[

"ecto.reset": ["ecto.drop", "ecto.setup"],

"ecto.setup": ["ecto.create", "ecto.migrate", "run priv/repo/seed.exs"]

]

end

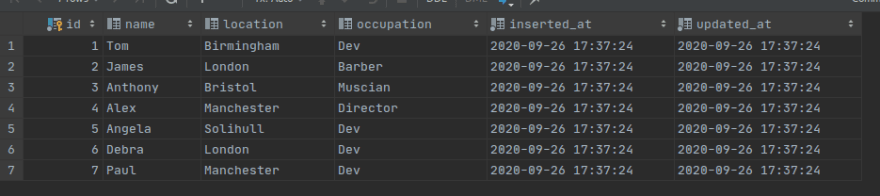

These work much the same as "scripts" in a package.json file, if you're familiar with that. Anyway, now we can run our shiny new alias. In your terminal run mix ecto.reset. Be warned, you might need to disconnect your DB client from the DB server. Once the command is successful we can reconnect and query the data select * from users

Generating SQL queries

Now we're (finally) getting to the tasty bits. In lib/user.ex we're going to add a function.

def fetch([search, sort]) do

User

|> where(^search)

|> order_by(^sort)

|> Repo.all()

end

We expect a list of 2 lists. These will be sorted before they arrive here so we can keep this function a little cleaner with just [search, sort]. search may look like [location: "London", occupation: "Dev"] and when it's interpolated with where(^search) ecto will form the query to look something like:

where: u0.location == "London" and u0.occupation == "Dev"

Likewise with sort and order_by/1 then it all gets wrapped up and sent to our DB.

Parsing the query

I mentioned how

We expect a list of 2 lists. These will be sorted before they arrive

Let's create some more helper functions to do just that. At the bottom of super_get.ex with the other helper functions earlier we're going to add the following:

defp parse_params(%{} = params) do

sort = parse_sort_params(params)

params = Map.delete(params, "asc")

params = Map.delete(params, "desc")

search = for {key, val} <- params, into: [], do: {String.to_atom(key), val}

[search, sort]

end

defp parse_sort_params(%{} = params, result \\ []) do

case params do

%{"asc" => val} ->

parse_sort_params(Map.delete(params, "asc"), result ++ [{:asc, String.to_atom(val)}])

%{"desc" => val} ->

parse_sort_params(Map.delete(params, "desc"), result ++ [{:desc, String.to_atom(val)}])

_ ->

result

end

end

The first step is checking whether asc or desc is present in the query parameters so is sent to parse_sort_params which is not a pretty sight I grant you, but as we're only going to have asc, desc or neither, this approach will work for us. Then, back in parse_params we delete any sign of asc or desc as we don't want that in our search value and then just convert that into an atom list. For some examples, %{"elixir" => "is cool"} might go in but [elixir: "is cool"] will come out.

Endpoint time, part 2: electric boogaloo

As we're hoping on returning many records, instead of just 1, we need to add to that helper function that converted our %User{} struct to valid JSON. We can leverage the one from before though with the following next to it:

defp struct_to_json(structs) when is_list(structs) do

Enum.map(structs, &struct_to_json/1) |> Jason.encode!()

end

With these in place, let's create our endpoint and bring this all to a close. In super_get.ex add the following:

get "/users" do

params = parse_params(conn.params)

resp = User.fetch(params) |> struct_to_json()

send_resp(conn, 200, resp)

end

We take the connection parameters, parse them in our helper, send them to the fetch function that generates our SQL and then we encode the result and send it back from whence it came.

Results

With everything in place, open the terminal and once again run iex -S mix. These results are going to depend on what values you put into your seeds file but here's mine.

So when I try something simple first, such as, localhost:4000/users?occupation=Director I get

[

{

"id": 4,

"inserted_at": "2020-09-26T17:37:24",

"location": "Manchester",

"name": "Alex",

"occupation": "Director",

"updated_at": "2020-09-26T17:37:24"

}

]

Nice, let's ramp it up a bit with localhost:4000/users?occupation=Dev&asc=location

[

{

"id": 1,

"inserted_at": "2020-09-26T17:37:24",

"location": "Birmingham",

"name": "Tom",

"occupation": "Dev",

"updated_at": "2020-09-26T17:37:24"

},

{

"id": 6,

"inserted_at": "2020-09-26T17:37:24",

"location": "London",

"name": "Debra",

"occupation": "Dev",

"updated_at": "2020-09-26T17:37:24"

},

{

"id": 7,

"inserted_at": "2020-09-26T17:37:24",

"location": "Manchester",

"name": "Paul",

"occupation": "Dev",

"updated_at": "2020-09-26T17:37:24"

},

{

"id": 5,

"inserted_at": "2020-09-26T17:37:24",

"location": "Solihull",

"name": "Angela",

"occupation": "Dev",

"updated_at": "2020-09-26T17:37:24"

}

]

Pretty cool, I thought. Worst case scenario, time localhost:4000/users?occupation=Director&desc=location&name=Alex even though with our dataset that result is still the following result from before:

[

{

"id": 4,

"inserted_at": "2020-09-26T17:37:24",

"location": "Manchester",

"name": "Alex",

"occupation": "Director",

"updated_at": "2020-09-26T17:37:24"

}

]

but you get my point.

Well folks, I hoped you enjoyed the ride. I'm sure there are many improvements to be found throughout all of this (let me know) it was a lot of fun playing around with this, especially when you compare it to other languages.

Thanks for reading ❤️

Check out the repo for any more information you might need. As well as the superb elixir docs that exist.

Top comments (0)