by Udaybhaskar Sarma Seetamraju

ToSarma@gmail.com

Dec 31 2023

Highest-level Context

If you are into “Shift-Left” (whether re: Testing, Security, or Replicating-problems-on-developer-laptop, etc.), then this article is for you.

Objective: Towards enabling up to 5x developer-productivity by allowing developers to robustly SIMULATE the Cloud-environment on a Windows-Laptop.

Quick Summary

The aim is a very simple, single command (based on Python scripts) that executes your Python code as a Glue Job, inside a locally built Glue container, on your Microsoft Windows laptop.

Even if your company disallows AWS CLI credentials, I have a section on how to significantly raise your productivity when developing/debugging your Python code.

To state the obvious, everything in here is 100% Python-Scripts.

Note: You should aim to have your software work on arm64 containers, which are typically the cheapest compute on the cloud. More below.

Problem Statements

- Using Git to capture ALL code changes while simultaneously copying-n-pasting into AWS Glue Studio (for testing/troubleshooting) is painful, error-prone and frustrating.

- As time progresses, your Glue Job will become complicated and require more than one Python script. Worse, you will have one or more folder hierarchies, all of which contain `.py` files that you need to `import`!

- Many developers prefer to develop/test/troubleshoot their code as “plain python”, and NOT as a Glue Job. There is no good reason to stop such developers from doing just that, while still ensuring that the code will work without any issues inside AWS Glue running locally on a Windows laptop, and eventually inside Glue on AWS.
  - When running as “plain python”, there should be NO runtime dependencies (like “import awsglue”).
  - When running as “plain python”, there should be NO Spark dependency.
  - When running as “plain python”, all inputs/files should be on the local laptop’s filesystem, and all output should be written to the local filesystem only.
  - When running as “plain python”, all information from the Glue Catalog should be available OFFLINE (as a Python `dict` object).

- How do you proactively ensure the Glue Job will work on all chip architectures, without having to scramble later? How do you explicitly exercise both the `x86_64`/`amd64` and the `arm64` architectures locally on your Windows laptop?

- If the enterprise does NOT allow laptops to have AWS credentials (in the `~/.aws/credentials` file), how can I still EFFICIENTLY test/debug the Python code locally on my laptop, even as it needs access to the Glue Catalog and/or S3 buckets?
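To make the “plain python” requirements above concrete, here is a minimal sketch that keeps `awsglue` and Spark out of the import path unless they are actually installed. This is an assumption for illustration, not code from the repository; `RUNNING_IN_GLUE`, `read_input_lines()` and `build_glue_context()` are hypothetical names.

```python
import importlib.util
from pathlib import Path

# awsglue (and pyspark) exist only inside a Glue runtime, so detect them
# instead of importing them unconditionally at the top of the file.
RUNNING_IN_GLUE = importlib.util.find_spec("awsglue") is not None


def read_input_lines(path: str) -> list[str]:
    """Plain-python path: read a local file, with no Spark or awsglue import."""
    return Path(path).read_text(encoding="utf-8").splitlines()


def build_glue_context():
    """Glue path: import the Glue/Spark modules only when actually needed."""
    if not RUNNING_IN_GLUE:
        raise RuntimeError("awsglue is not installed; run in plain-python mode instead")
    from awsglue.context import GlueContext  # imported lazily on purpose
    from pyspark.context import SparkContext

    return GlueContext(SparkContext.getOrCreate())
```

Because nothing Glue-specific is imported at module level, the same file can be run with a plain `python3` that has neither `awsglue` nor `pyspark` installed.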

Get started!

Depending on your needs on AWS, run ONE of these two lines in a PowerShell window:

```powershell
# EITHER (x86_64 / amd64):
$env:BUILDPLATFORM="linux/amd64"
# OR (arm64):
$env:BUILDPLATFORM="linux/arm64"
```

Then run these commands in the same PowerShell window:

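```powershell
# Read the environment variable set above ($env:..., not a PowerShell variable)
$env:DOCKER_DEFAULT_PLATFORM = $env:BUILDPLATFORM
$env:TARGETPLATFORM = $env:DOCKER_DEFAULT_PLATFORM
$WORK_AREA = $HOME
cd $WORK_AREA
git clone https://gitlab.com/tosarma/macbook-m1.git
```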
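For reference, a Python script can pick up the platform chosen above from the environment. The sketch below is only an assumption about how such a helper might look (the name `resolve_platform()` is hypothetical and is not taken from `run-glue-job-LOCALLY.py`); it accepts only the two values offered above.

```python
import os

SUPPORTED_PLATFORMS = ("linux/amd64", "linux/arm64")


def resolve_platform(environ=os.environ) -> str:
    """Return the Docker platform string chosen via the environment variables above."""
    platform = (
        environ.get("TARGETPLATFORM")
        or environ.get("DOCKER_DEFAULT_PLATFORM")
        or environ.get("BUILDPLATFORM")
    )
    if platform not in SUPPORTED_PLATFORMS:
        raise ValueError(
            f"Set BUILDPLATFORM to one of {SUPPORTED_PLATFORMS}, got {platform!r}"
        )
    return platform
```

The returned string could then be passed as the `platform=` argument that the Docker SDK for Python accepts on calls such as `client.images.pull(...)`.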

To try out a sample:

```powershell
cd macbook-m1
cd AWS-Glue/src
pip3 install docker
python3 $WORK_AREA/macbook-m1/AWS-Glue/bin/run-glue-job-LOCALLY.py sample-glue-job.py
```

WARNING: Without the benefit of the “docker” CLI, you get ZERO visibility into the progress of Docker activity. This is due to the use of Docker’s un-friendly Python APIs, because of which the Python code WILL appear to HANG for a long time!

To repeat, “run-glue-job-LOCALLY.py” will hang with NO output for roughly 2 to 5 minutes (depending on how much CPU and MEMORY you have allocated to Docker Desktop, as well as the speed of your internet connection).

Important: I tested only with Python 3.11; no other Python version has been tested.
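If the silence bothers you in your own Docker-driven scripts, note that the Docker SDK for Python can report progress: its low-level `APIClient.build()` (reachable as `docker.from_env().api`) yields one decoded progress message at a time when called with `decode=True`. The sketch below is an illustration only, NOT how `run-glue-job-LOCALLY.py` works; `describe_chunk()` and `build_with_progress()` are hypothetical helper names.

```python
def describe_chunk(chunk: dict) -> str:
    """Turn one decoded Docker progress message into a printable line."""
    if "error" in chunk:
        return f"ERROR: {chunk['error']}"
    if "stream" in chunk:
        # build output, e.g. "Step 3/9 : RUN pip install ..."
        return chunk["stream"].rstrip("\n")
    # pull-style messages carry "status", plus "id"/"progress" for each layer
    parts = (chunk.get("id", ""), chunk.get("status", ""), chunk.get("progress", ""))
    return " ".join(part for part in parts if part)


def build_with_progress(context_dir: str, tag: str, platform: str) -> None:
    """Build an image and print progress as it arrives (needs `pip3 install docker`)."""
    import docker  # imported here so describe_chunk() can be used without the SDK

    api = docker.from_env().api  # low-level APIClient behind the high-level client
    for chunk in api.build(path=context_dir, tag=tag, platform=platform, decode=True):
        line = describe_chunk(chunk)
        if line:
            print(line, flush=True)
```

For example, `build_with_progress(".", "my-glue-image:local", "linux/arm64")` would print each build step as it runs; the tag and platform values there are placeholders.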

Ready to use it for your own Glue-Script?

- First, read the full details in the `macbook-m1/AWS-Glue/README.md` file.
- Copy the `*.py` files in the `macbook-m1/AWS-Glue/src/common` subfolder into YOUR project, relative to its TOPMOST folder.
  - ATTENTION: the files `./src/common/*.py` must exist in your project after you are done copying.
- Make sure to edit your `.py` file to look like the example file provided (`sample-glue-job.py`).
- From your project root, run:

```powershell
cd <your-project-ROOT-folder>
python3 $WORK_AREA/macbook-m1/AWS-Glue/bin/run-glue-job-LOCALLY.py `
    path/to/your/file.py
```

If you have problems importing the new files under `./src/common`, run the command below and then retry the command above.

```powershell
$env:PYTHONPATH="<your-project-ROOT-folder>"
```
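If setting `PYTHONPATH` is inconvenient, a common alternative is to put the project root on `sys.path` at the top of your entry script when running locally. This is a generic Python technique, not something the repository requires; the helper name `ensure_on_sys_path()` is hypothetical.

```python
import sys
from pathlib import Path


def ensure_on_sys_path(project_root) -> str:
    """Prepend the project root to sys.path (only once), similar to PYTHONPATH."""
    root = str(Path(project_root).resolve())
    if root not in sys.path:
        sys.path.insert(0, root)
    return root


# In an entry script that sits in the project root, this would typically be:
# ensure_on_sys_path(Path(__file__).parent)
```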

Does your Python code base consist of multiple files across multiple folder hierarchies?

See the section below titled “Advanced User - Complex folder-hierarchies?”

Want to change the CLI-arguments?

Three simple steps:

1. Edit the file `macbook-m1/AWS-Glue/src/common/cli_utils.py`.
2. Look inside `process_all_argparse_cli_args()` and make changes in that function.
3. Look inside `process_std_glue_cli_args()` and make changes inside that function.

Note: make sure to make similar changes in steps 2 & 3 above.

Example:

- You would like to support a new CLI arg: `--JOB_NAME 123_ABC`
- Insert a new line at (say) line # 125 for `JOB_NAME`.
  - This will ensure your code gets the value `123_ABC` when running INSIDE AWS Glue!
- Insert a new line at (say) line # 78 for `--JOB_NAME`.
  - This will allow you to run your Python code as a PLAIN python command and read this CLI arg.
  - See more on this in the section below titled “Running as a plain python command”.
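Steps 2 and 3 presumably must stay in sync because each argument is declared twice: once for `argparse` (plain python) and once for awsglue’s `getResolvedOptions()` (inside Glue). As a sketch only (this is NOT the content of `cli_utils.py`; `ARG_NAMES`, `parse_plain_args()` and `parse_glue_args()` are hypothetical names), one way to avoid drift is to drive both parsers from a single list:

```python
import argparse

# Hypothetical single source of truth: adding "JOB_NAME" here covers both modes.
ARG_NAMES = [
    "ENV",
    "commonDatabaseName",
    "glueCatalogEntryName",
    "rawBucket",
    "processedBucket",
    "finalBucket",
    "JOB_NAME",
]


def parse_plain_args(argv: list[str]) -> dict[str, str]:
    """Plain-python mode: parse '--NAME value' pairs (pass sys.argv[1:])."""
    parser = argparse.ArgumentParser()
    for name in ARG_NAMES:
        parser.add_argument(f"--{name}", required=True)
    return vars(parser.parse_args(argv))


def parse_glue_args(argv: list[str]) -> dict[str, str]:
    """Glue mode: pass the full sys.argv, as in the usual Glue boilerplate."""
    from awsglue.utils import getResolvedOptions  # available only inside Glue

    # getResolvedOptions takes the option names WITHOUT the leading "--"
    return getResolvedOptions(argv, ARG_NAMES)
```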

Never edit the file:

macbook-m1/AWS-Glue/src/common/glue_utils.py

The files “common.py” and “names.py” in that same folder can be edited. Feel free to play around with them.

Tips, Issues & Errors

See the Appendix for tips on configuring Docker Desktop.

Question: Want to automatically clean up (delete) the Docker containers after they exit?

Answer: pass the CLI arg “--cleanup” to the “run-glue-job-LOCALLY.py” script, placing it BEFORE the Python script name.
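Why BEFORE the script name? A common pattern (assumed here, not confirmed from the script’s source) is that everything after the script name is passed through untouched to your Glue job. A minimal `argparse` sketch of that pattern, with a hypothetical `parse_runner_args()` function:

```python
import argparse


def parse_runner_args(argv: list[str]) -> argparse.Namespace:
    """Runner options first; everything after the script name is left for the job."""
    parser = argparse.ArgumentParser(prog="run-glue-job-LOCALLY.py")
    parser.add_argument("--cleanup", action="store_true",
                        help="remove the Docker container after it exits")
    parser.add_argument("script", help="path to your Glue job's .py file")
    parser.add_argument("job_args", nargs=argparse.REMAINDER,
                        help="passed through unchanged to the Glue job")
    return parser.parse_args(argv)
```

With this layout, `--cleanup job.py` turns cleanup on, whereas `job.py --cleanup` would forward `--cleanup` to the job instead. With the Docker SDK for Python, such a flag could map to `client.containers.run(..., remove=True)`.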

Advanced User - Complex folder-hierarchies?

Does your Python code base consist of multiple files across multiple folder hierarchies?

Are you aware that Glue requires you to ZIP up all those OTHER python-files into a single Zip-file?

FYI only - this requirement is driven by Spark!

The script “run-glue-job-LOCALLY.py” does that for you automatically: it looks UNDER the current working directory, finds all `**/*.py` files, and puts them into a temporary ZIP file.

The script will then automatically pass it on to Glue-inside-Docker (running on your laptop).
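A minimal sketch of that ZIP step using only the standard library (`zipfile`, `pathlib`, `tempfile`); the function name `zip_python_files()` is hypothetical and this is not the script’s actual code. Paths inside the archive are kept relative to the working directory, so that package imports keep resolving once Glue puts the ZIP on the Python path (for example via the `--extra-py-files` job parameter).

```python
import tempfile
import zipfile
from pathlib import Path


def zip_python_files(root: str = ".") -> Path:
    """Zip every **/*.py under root into a temporary file, keeping relative paths."""
    root_path = Path(root).resolve()
    handle = tempfile.NamedTemporaryFile(suffix=".zip", delete=False)
    handle.close()  # close first: Windows will not let zipfile reopen an open temp file
    zip_path = Path(handle.name)
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for py_file in sorted(root_path.rglob("*.py")):
            # arcname keeps the folder hierarchy, so package-relative imports still work
            zf.write(py_file, arcname=py_file.relative_to(root_path).as_posix())
    return zip_path
```

Note that `rglob("*.py")` also picks up files in folders such as a local virtual environment, so run it from a folder that contains only your project’s sources.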

If you need to import PY files from parent/ancestor folders, you are in an UN-fortunate NO-solution situation. FYI: unlike on macOS/Linux, on Windows you are NOT able to take advantage of “symlinks” (“ln -s”) to those parent/ancestor files, which the git CLI preserves as exactly that: symlinks.

No AWS Credentials on your laptop?

For security reasons, many companies deny developers AWS credentials for AWS CLI use.

That is a showstopper for locally testing/debugging your Python code, in scenarios like:

- COPY INPUT files from S3 buckets --> into the “current working directory”.

- COPY OUTPUT files from the “current working directory” --> into S3 buckets.

- Look up the Glue Catalog.

- etc.

To work around this restriction:

You need to write code that detects whether it is running on a Windows laptop versus actually running inside AWS Cloud.

In other words, you need to “Short-Circuit” all that code that interacts with AWS-APIs (Glue-Catalog, S3, ..) and mock the expected response from those AWS-APIs.

If you use my script “run-glue-job-LOCALLY.py”, it automatically sets an environment-variable called “running_on_LAPTOP” when running your python-code inside a Docker-Glue container on your laptop!!

How-To “short-circuit”:

```python
import os

if os.environ.get("running_on_LAPTOP"):
    print("!!!!!!!!!!!!!!!!! running on laptop !!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!")
    # Assume the S3 "get" is already done: the input file is in the current directory.
    # Assume the Glue Catalog query is already done: its "JSON response"
    # is available in the current directory as a JSON file.
    ...
else:
    # Running inside AWS: call the real AWS APIs (S3, Glue Catalog, ..) here.
    ...
```

To state the obvious, on AWS Cloud, AWS Glue does NOT support setting your own environment variables for a job.

So, this environment variable “running_on_LAPTOP” will be UN-defined when running inside AWS Cloud.
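A slightly fuller sketch of the same short-circuit, assuming (hypothetically) that you saved the `Table` section of a Glue Catalog response as `<database>.<table>.json`, and the S3 input file under its own file name, in the current directory. `get_table()` and `download_file()` are real `boto3` client methods; the helper names and the file-naming convention are illustrative only.

```python
import json
import os
from pathlib import Path


def running_on_laptop() -> bool:
    """True when the running_on_LAPTOP environment variable is set (e.g. by the runner)."""
    return bool(os.environ.get("running_on_LAPTOP"))


def get_table_definition(database: str, table: str) -> dict:
    """Return the Glue Catalog table definition as a plain Python dict."""
    if running_on_laptop():
        # Offline: the saved "Table" part of a real get_table() response (assumed file name)
        return json.loads(Path(f"{database}.{table}.json").read_text(encoding="utf-8"))
    import boto3  # only needed inside AWS

    return boto3.client("glue").get_table(DatabaseName=database, Name=table)["Table"]


def fetch_input_file(bucket: str, key: str) -> Path:
    """Return a local path for s3://bucket/key, downloading only when inside AWS."""
    local = Path(Path(key).name)  # keep just the file name, in the current directory
    if running_on_laptop():
        if not local.exists():
            raise FileNotFoundError(f"Copy s3://{bucket}/{key} to {local} first")
        return local
    import boto3

    boto3.client("s3").download_file(bucket, key, str(local))
    return local
```

On the laptop, the table definition is simply the dict loaded from the saved JSON file, which matches the earlier requirement that Glue Catalog information be available OFFLINE as a Python `dict`.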

Running as a plain python command

EXAMPLE:

I’m going to use the same “macbook-m1/AWS-Glue/src/sample-glue-job.py” file, to show how to run as PLAIN Python-program.

FYI: My python-code in that sample-glue-job.py expects the following 6 CLI-arguments (with their values).

If you do NOT like this list of CLI args, see the section above titled “Want to change the CLI-arguments?”

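```powershell
python3 sample-glue-job.py --ENV sandbox `
    --commonDatabaseName MyCOMMONDATABASENAME `
    --glueCatalogEntryName MyDICT `
    --rawBucket MyRAWBUCKET `
    --processedBucket MyPROCESSEDBUCKET `
    --finalBucket MyFINALBUCKET
```

In PowerShell, the backtick continues a command onto the next line; if you use cmd.exe instead, use `^`.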

If you want the ability to run your code both as a plain Python script and inside AWS Glue, you MUST replicate the structure and code within “macbook-m1/AWS-Glue/src/sample-glue-job.py”.

APPENDIX

Running out of Disk-space or Memory?

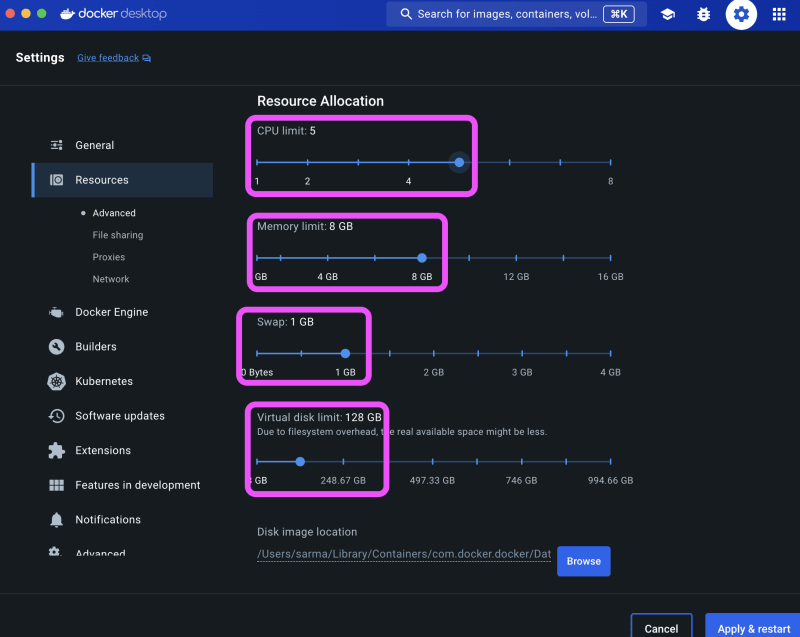

The screenshot above shows the recommended “high” settings.

After building images, you can reduce:

- “CPU” can be lowered to “2”.

- “Memory” can be lowered to “4GB”.

End of Article.
