I need a tool to handle post-deployment testing properly

I've got a project where I'm not handling post-deployment testing with any real grace. It's been on the list of things to resolve, and I'm happy with it for the moment because of the pre-release tests, manual release testing, and monitoring post-release, but it does need solving.

I stumbled upon the newman CLI tool from the good folks at getpostman.com. It's an open-source command-line tool that runs the tests you have saved in your Postman collections and gives the typical error-state and console output you expect from any modern testing tool, which means you can integrate it into your CI/CD workflow.

For anyone who's not used Postman, it's a stunning tool for making requests to services, keeping collections of connections and such, and if you're doing almost any web-based development you need it. If you're too old-school and like using cURL for everything? Fine, it'll import and export cURL commands for you. Go check it out.

The only problem for me - I don't use Postman like that. I don't really keep collections of things, I just use it ad hoc to test things or for a quick bit of debugging. We've got a nice collection of integration tests built around our OpenAPI specs that we rely on, so I've not had to do what others have done and create a large collection of API endpoints.

The trick here is going to be keeping the duplication down to a minimum.

Getting started: We're going to need an API to test against:

I've stored everything for this project on GitHub, where you can see all the files.

// src/index.js
const express = require('express')
const bodyParser = require('body-parser')
const addRequestId = require('express-request-id')

const app = express();

app.use(addRequestId())
app.use(bodyParser.json())

app.get('/', (req, res) => {
  res.json({message: 'hello world', requestId: req.id});
})

app.get('/foo', ({ id, query }, res, next) => {
  const { bar } = query
  res.json( { bar: `${bar}`, requestId: id })
})

app.post('/foo', ({ id, body }, res, next) => {
  const { bar } = body
  if (typeof bar === 'undefined' ) {
    return res
      .status(400)
      .json({ error: 'missing `bar`', requestId: id})
  }
  res.json( { bar: `${bar}`, requestId: id } )
})

const server = app.listen(8081, function () {
  const port = server.address().port
  console.log("Example app listening to port %s", port)
})

So we've got three endpoints to use: / and /foo as GET, and /foo as POST. There's a little validation in the POST /foo endpoint. I've added express-request-id in and added it to the responses so we can make sure they're unique.

Starting the Collection

I'm learning this as I blog here, so forgive any backtracking! I've gone into Postman and created a new collection called postman-newman-testing.

I went through and created and saved a request for each of the three endpoints, adding a little description for each:

Adding some tests:

Remember, the goal here is to create something that can help us run some post-deployment tests, so we're going to define some simple tests in the collection for each of the endpoints. I want to ensure:

- I get a requestId back for each response

- I get a 200 response for each

- I can trigger a 400 response when I expect there to be an error

- The expected values come back for the POST /foo and GET /foo endpoints

The documentation for the test scripts is all in the Postman Learning Center as you would expect, and thankfully it's going to be really familiar for anyone who's worked with tests and JS before.
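As a rough sketch of the checks listed above, a test script for GET /foo might look something like this. In Postman, only the body of the function would go into the request's Tests tab, where the sandbox provides `pm` as a global. The wrapper function `fooGetTests` and the query value `baz` are assumptions made so the example stands on its own; they are not taken from the project.

```javascript
// Hypothetical wrapper: in Postman, paste only the function body into the
// request's "Tests" tab, where the sandbox supplies `pm` as a global.
function fooGetTests(pm) {
  pm.test('responds with 200', function () {
    pm.response.to.have.status(200);
  });

  pm.test('includes a requestId', function () {
    const body = pm.response.json();
    pm.expect(body.requestId).to.be.a('string');
  });

  // Assumes the saved request is GET /foo?bar=baz
  pm.test('echoes the bar query parameter', function () {
    const body = pm.response.json();
    pm.expect(body.bar).to.eql('baz');
  });
}
```

The same three checks, minus the query parameter one, would carry over to the / endpoint more or less unchanged.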

So, after a little hacking around, I discovered something cool; when you make the tests, they're executed each time you execute that request, so if you're using Postman to dev with, you can't 'forget' to run the tests.

Variations

I want to test two different outputs from an endpoint, success and failure, but I don't think I should have to save two different requests to do that, so how are we going to test our POST /foo endpoint? I'm going to come back to that at some point once I understand more.

Automate all the things

I've got my collection set up with all the happy-path tests, and if I open the Collection Runner and run my collection, I get a nice board of green boxes telling me my API is, at a very basic level, doing what I expect it to be doing.

Let's work out newman.

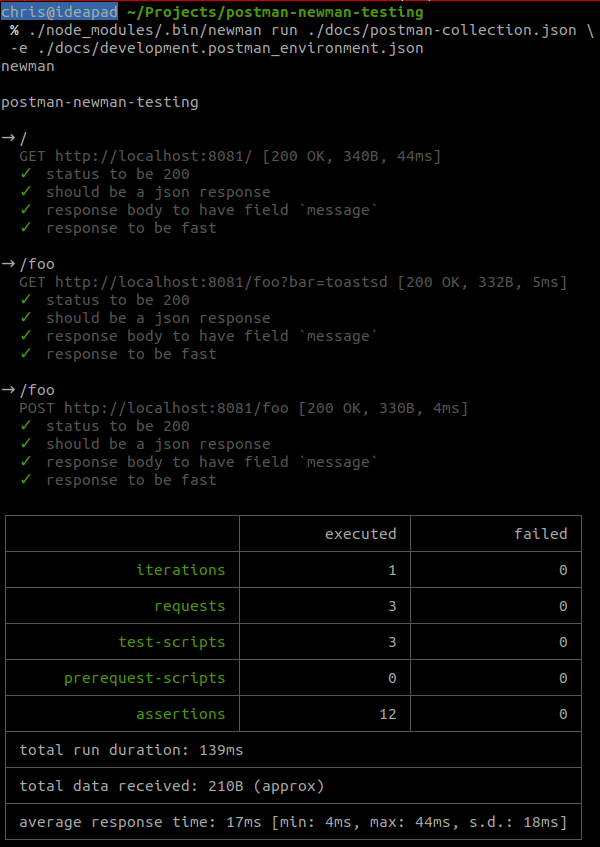

I've exported the collection from Postman and stored it under docs/postman-collection.json in the project root, installed newman ($ npm install --save-dev newman), and ran the command to run the tests:

So that's amazing: I've made some simple API tests. But they're not going to do me any good, for the simple reason that all the URLs in my collection are set to http://localhost:8081, so I need to work out how to change that.

After a bit of clicking and Googling, we can do this. Postman has support for Environments - you can see them at the top-right of the main window. I created a couple ('development' and 'staging') and added a variable called host to each, set to http://localhost:8081 for development and https://api.mydomain.com:3000 for staging. These are a bit fiddly, the same as some of the other parts of Postman's UI, but it's possible ;)

Next, we go into the Collection and change the host names in the saved requests to use {{host}} - the {{ }} method is how Postman handles environment variables, and could be used for things like API keys.
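To make the substitution concrete, here is a minimal sketch of the idea in plain Node. It is an illustration only, not Postman's actual implementation, and the function name `resolveVariables` is made up for this example.

```javascript
// Illustration only: replaces {{name}} placeholders with values from an
// environment object. Unknown placeholders are left untouched.
function resolveVariables(template, environment) {
  return template.replace(/\{\{(\w+)\}\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(environment, name)
      ? environment[name]
      : match
  );
}

// Host values taken from the two environments described above
const development = { host: 'http://localhost:8081' };
const staging = { host: 'https://api.mydomain.com:3000' };

console.log(resolveVariables('{{host}}/foo?bar=baz', development));
// http://localhost:8081/foo?bar=baz
console.log(resolveVariables('{{host}}/foo?bar=baz', staging));
// https://api.mydomain.com:3000/foo?bar=baz
```

The saved request stays the same and only the environment changes, which is what keeps the duplication down.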

So let's translate to the newman tool.

Ahh. Ok.

So exporting the Collection doesn't bring any of the environment variables with it. We have to export those as well.
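For a feel of what ends up in an exported environment file, here is a trimmed-down sketch of its shape, built in Node. Real exports carry extra metadata (an id, export timestamps and so on) that is left out here, and the helper name `buildEnvironment` is purely illustrative.

```javascript
// Sketch of the shape of an exported environment, trimmed to the fields that
// matter here. Real exports also include an id and export metadata.
function buildEnvironment(name, host) {
  return {
    name,
    values: [{ key: 'host', value: host, enabled: true }],
  };
}

const development = buildEnvironment('development', 'http://localhost:8081');

// Would correspond to docs/development.postman_environment.json
console.log(JSON.stringify(development, null, 2));
```

One file per environment sits alongside the collection, so the whole lot can live in Git.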

And now, we're going to want to use those environment configs with our newman execution:

Boom! Git-controlled, command-line-executed testing for APIs in different environments, using a tool all devs should be using anyway, for a simple post-deployment check. There are the obvious steps of adding this to your Jenkins / GitLab / whatever pipeline, which I'm not going to cover here, but I'm happy with what's been discovered over the last couple of hours.

One last thing: let's put this into the package.json file so we can re-use it:

{
  "name": "postman-newman-testing",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "config": {
    "environment": "development"
  },
  "scripts": {
    "debug": "nodemon src/index.js",
    "start": "node src/index.js",
    "test": "echo \"Error: no test specified\" && exit 1",
    "test-post-deploy": "newman run ./docs/postman-collection.json -e ./docs/$npm_package_config_environment.postman_environment.json"
  },
  "author": "Chris Williams <chris@imnotplayinganymore.com>",
  "license": "ISC",
  "dependencies": {
    "body-parser": "^1.19.0",
    "express": "^4.17.1",
    "express-request-id": "^1.4.1"
  },
  "devDependencies": {
    "newman": "^4.5.5",
    "nodemon": "^1.19.3"
  }
}

Then we can handle configs for environments as we like, and run

npm run test-post-deploy

to execute the test!

Conclusion

While it may be another set of tests and definitions to maintain (I would really like this to be based on our OpenAPI spec documents, but I'll figure that out later), this seems to be a great way of achieving two things:

- A really simple set of tests to run post-deployment or as part of the monitoring tooling

- The collection file can be handed out to devs working with the APIs: they're going to be using Postman (probably) anyway, so give them a head start.

Postman is just one of those tools that you have to use if you're doing web-dev, or app-dev. Given that it's just 'part' of the dev toolkit, we may as well use the familiarity and use it as part of the testing tools as well.

There are some things that I would like to know a bit more about:

- Be able to store the output in a file, maybe, so it's visible quickly in Jenkins

- Set the severity of individual tests - so if we fail some it's an instant roll-back, if we fail some others it's a loud klaxon in the engineering office for someone to investigate, but it may be resolved by fixing forwards

- Test for sad paths: make sure that the right error response codes are coming back for things without having to create the responses for them. I think there's something you can do with the Collection Runner and a file of sample data, and then flag if tests should be red or green, but I didn't get around to that.
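On the first of those points, newman ships with a JUnit reporter, which is a format Jenkins can read. Below is a minimal sketch using newman's Node API, assuming the file layout from the package.json above. The function name `runPostDeploy` and the output path `./newman/results.xml` are invented for the example, and the newman module is passed in as an argument purely so the snippet doesn't depend on it being installed.

```javascript
// Hypothetical helper: runs the collection and writes a JUnit XML report.
// Pass in the newman module, e.g. runPostDeploy(require('newman'), 'staging', cb)
function runPostDeploy(newman, environment, done) {
  newman.run(
    {
      collection: './docs/postman-collection.json',
      environment: `./docs/${environment}.postman_environment.json`,
      reporters: ['cli', 'junit'],
      // Assumed output path; point the Jenkins JUnit publisher at it.
      reporter: { junit: { export: './newman/results.xml' } },
    },
    (err, summary) => {
      if (err) return done(err);
      // summary.run.failures lists every failed assertion or request error
      done(null, summary.run.failures.length === 0);
    }
  );
}
```

The callback receives `true` only when nothing failed, so a calling script could set a non-zero exit code on `false` and let the pipeline decide what to do with it.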

Thanks also to those who've replied to my on-going tweets about all things Postman over the last couple of hours, esp Danny Dainton, who also has his own Dev.to articles over at https://dev.to/dannydainton

Thanks again for the comments on previous articles, I would love to hear how you use this in your projects! Get me on https://twitter.com/Scampiuk

Top comments (9)

Thanks for the shout out, I very rarely create content on here but I love the site. ❤️

I see from your images that you're using an old version of the app.

Version 7 includes a new API tab (next to the Collections tab) that will allow you to import your OpenAPI spec and auto-generate a Collection from it.

That's something I'll take a look into! We work with the OpenAPI spec to do much of our Dev work, so it's the single-source-of-truth: I'll look if we can use some cli or API way of importing and maintaining the collection from that 👍

Ahh so the Ubuntu snap is still installing 6.7.5 as the latest

Yeah, there's an open issue around that, you could do this instead to get the 7.8.0 version:

sudo snap switch --channel=candidate postman

sudo snap refresh postman

This is a very cool idea, I will have a handson! 😊

Even though it’s an example:

If the client misses a required field, please respond with a client error (4XX) and not with a server error (5XX). There is nothing the server/service could do (heal, scale, reboot) to make that request pass.

Mortified! I'll fix this now!

You're also the only person to mention this in a few thousand people reading it 😀

I use this in my day-to-day work; it's been two years since I started.

I would like to see more on how you save the response in Jenkins, or write a particular string back to Jenkins, whether the run fails or not.

Great Article, Thank you Chris

Hi, I want to hide the iterations so that only the final table gets displayed at the end of the execution. Can you help me with which CLI parameters I have to add for this? Thanks