nut.js - Two years recap

A little over two years ago, I began evaluating options for desktop automation with Node.js. I compared various existing libraries, but at the end of the day none of them really convinced me. They all had their pros and cons, but none of them met all of my requirements, which were:

- The library is actively maintained

- Fast and easy to install

- Fully cross-platform compatible

- Provides image matching capabilities

While the first three requirements could be met, the fourth one ruled out every single lib I was checking out. It seemed like no desktop automation library for node provided image matching capabilities - and that was when I decided to build one myself.

Two years later, I’m still actively maintaining nut.js, so I thought it might be a good time to recap what has happened over these two years.

The Early Days - native-ui-toolkit

The first prototype I pieced together received the working title native-ui-toolkit. It combined robot-js for OS-level interactions (grabbing screen content, keyboard / mouse input, clipboard access) with opencv4nodejs for image matching. Although it worked, this first draft already revealed some major issues.

robot-js only supported node up to version 8, which was quite a bummer with node 10 becoming the latest LTS version on Oct. 30th 2018. Additionally, development seemed to have stalled (and as of today, there has been no new release since March 2018).

The second major problem came with opencv4nodejs. It either requires a properly installed version of OpenCV on your system or, alternatively, lets opencv4nodejs compile OpenCV for you. Neither option played well with my requirement of a fast and easy installation: either the user struggles with installing the correct version of OpenCV, which is not equally easy on all platforms, or the lib recompiles OpenCV on installation, which requires a full C++ toolchain and takes 30+ minutes.

Last, but not least, both opencv4nodejs and robot-js are native node add-ons. So essentially we’re dealing with shared libraries here, which means they have to be provided for every target platform and, depending on the technology used, for every targeted node version. Since neither lib shipped pre-built binaries out of the box, the only solution at that time was to recompile them on install. This in turn required a C/C++ toolchain and a working Python 2 installation. Again, not my kind of “fast and easy installation”.

However, facing these problems led to one of the most substantial design decisions regarding the architecture of nut.js.

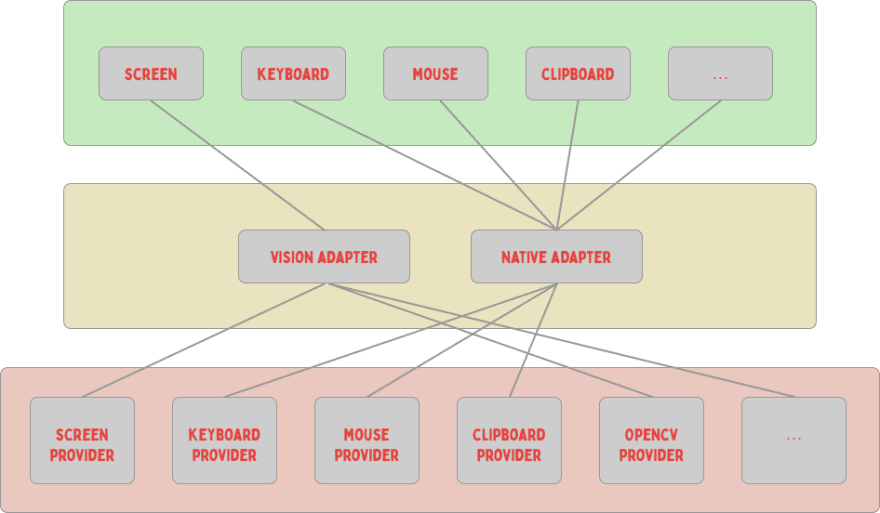

Instead of spreading 3rd party packages throughout the library, dependencies are limited to so-called “provider packages”, which deal with library specifics. Beyond these packages, only user-defined types are used, fully hiding any external dependency.

These providers are used in an adapter layer, where they can be mixed and matched to implement the desired functionality. The user-facing API only relies on these adapters. This way, a new or different provider will never require changes to the user-facing API; at most, changes are limited to the adapter layer. It may sound like a glorious example of over-engineering at first, but in hindsight it proved to be one of the best design decisions I made with nut.js. Following this scheme, I have swapped the native provider implementations three times so far, each time with minimal effort.
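To make the layering a bit more concrete, here is a minimal TypeScript sketch of the idea. All names (`Point`, `MouseProvider`, `InMemoryMouseProvider`, `MouseAdapter`, `Mouse`) are made up for illustration and are not the actual nut.js API; the in-memory provider simply stands in for a native dependency such as robot-js.

```typescript
// Hypothetical sketch of the provider / adapter layering; not the nut.js API.

// User-defined type: the only type that leaves the provider layer.
interface Point {
  x: number;
  y: number;
}

// Provider contract: wraps exactly one third-party library.
interface MouseProvider {
  setPosition(p: Point): void;
  currentPosition(): Point;
}

// Stand-in provider; a real one would call into a native add-on here.
class InMemoryMouseProvider implements MouseProvider {
  private pos: Point = { x: 0, y: 0 };

  setPosition(p: Point): void {
    this.pos = { ...p };
  }

  currentPosition(): Point {
    return { ...this.pos };
  }
}

// Adapter layer: combines providers into the functionality the API needs.
class MouseAdapter {
  private readonly provider: MouseProvider;

  constructor(provider: MouseProvider) {
    this.provider = provider;
  }

  moveBy(dx: number, dy: number): Point {
    const { x, y } = this.provider.currentPosition();
    const target = { x: x + dx, y: y + dy };
    this.provider.setPosition(target);
    return target;
  }
}

// User-facing API: depends only on the adapter, never on a provider package.
class Mouse {
  private readonly adapter: MouseAdapter;

  constructor(adapter: MouseAdapter) {
    this.adapter = adapter;
  }

  move(dx: number, dy: number): Point {
    return this.adapter.moveBy(dx, dy);
  }
}
```

Swapping the underlying library then means writing another `MouseProvider` and handing it to `MouseAdapter`; `Mouse` and its callers stay untouched.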

Growing Up - Moving Out

What started as a single repository under my GitHub account was about to grow into a dedicated organization with its own repo. I made plans for the continued development of nut.js and decided to group upcoming repos under the nut-tree GitHub organization. Prior to moving the repo, I ditched robot-js in favor of robotjs, a similar lib which provided pre-built binaries, so it didn't need to be built on install.

With its own organization and repo, nut.js also earned its own logo:

The one thing I was still struggling with was how to provide a ready-to-use package of opencv4nodejs. As already mentioned, installing the correct version of OpenCV can be tedious, and my idea of great usability required a way to install the lib without a compile step. So in addition to shipping a pre-compiled version of OpenCV, I also had to provide pre-compiled bindings for various platforms and node versions, since opencv4nodejs uses nan for its bindings.

Building on top of what they already provided, I forked both opencv4nodejs and npm-opencv-build. I didn't require all of OpenCV, so I dug into its build config until it fit my needs and started configuring CI pipelines. When running on CI, platform-specific packages containing a pre-compiled version of OpenCV are published following the @nut-tree/opencv-build-${process.platform} scheme. A first step in the right direction.
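As a rough sketch, a consumer could resolve the right package for the current machine from this naming scheme. The helper name `openCvBuildPackage` and the list of supported platforms (the `process.platform` values for macOS, Linux and Windows) are assumptions for illustration, not code taken from the forked packages.

```typescript
// Hypothetical resolver for the @nut-tree/opencv-build-${process.platform} scheme.
// Assumed: one package each for macOS ("darwin"), Linux and Windows ("win32").
const SUPPORTED_PLATFORMS = ["darwin", "linux", "win32"] as const;
type SupportedPlatform = (typeof SUPPORTED_PLATFORMS)[number];

function isSupported(platform: string): platform is SupportedPlatform {
  return (SUPPORTED_PLATFORMS as readonly string[]).includes(platform);
}

function openCvBuildPackage(platform: string): string {
  if (!isSupported(platform)) {
    // Fail early with a clear message instead of a cryptic install error.
    throw new Error(`No pre-built OpenCV package for platform "${platform}"`);
  }
  return `@nut-tree/opencv-build-${platform}`;
}
```

In Node, the argument would be `process.platform`, e.g. `openCvBuildPackage(process.platform)`.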

These OpenCV wrapper packages paved the way for shipping fully pre-built OpenCV bindings. My fork of opencv4nodejs, opencv4nodejs-prebuilt, installs the OpenCV libs for the current target platform and links against them during the build. After reading and learning *a lot* about subtle differences in linking on macOS, Linux and Windows, I modified the opencv4nodejs build process to make it possible to ship pre-compiled node bindings including the correct, pre-built OpenCV lib. Thanks to Travis CI and AppVeyor, I currently run a total of 39 jobs to pre-build these bindings, supporting node versions >= 10 as well as Electron >= 4 on three platforms.

Continuous Change

One big problem solved, time for another one! Node 12 was about to become the new LTS version, so naturally my goal was to support node 12 as well. However, development of robotjs had stalled. The original maintainer seemed to have moved on, and there hasn’t been a proper release since 2018.

Facing this issue, I decided to take care of it myself and forked the project. Once I got acquainted with the project, I realised that node 12 support meant more than just an updated CI setup for pre-builds. Since robotjs also used nan for its bindings, it required code changes to stay compatible with node 12.

Given this fact, I decided to make the leap and migrate from nan to N-API. Along the way, I also switched build systems, replacing node-gyp with cmake-js. The result of all these changes is libnut, which supports future node versions out of the box thanks to N-API's ABI stability.
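The practical difference between nan and N-API shows up in how pre-built binaries have to be keyed. The following sketch uses a made-up key format: `prebuildKey` is hypothetical, as is the default N-API version of 3. In Node, the inputs would come from `process.platform`, `process.arch` and `process.versions.modules`.

```typescript
// Hypothetical prebuild key; real prebuild tooling uses its own naming.
interface Target {
  platform: string; // e.g. process.platform
  arch: string; // e.g. process.arch
  nodeAbi: string; // e.g. process.versions.modules (NODE_MODULE_VERSION)
}

type BindingKind = "nan" | "napi";

// napiVersion defaults to an assumed value of 3, purely for illustration.
function prebuildKey(kind: BindingKind, target: Target, napiVersion = 3): string {
  const base = `${target.platform}-${target.arch}`;
  if (kind === "nan") {
    // nan add-ons compile against one Node's V8 headers: one binary per Node ABI.
    return `${base}-node-abi${target.nodeAbi}`;
  }
  // N-API is ABI-stable: one binary per N-API version serves every newer Node.
  return `${base}-napi-v${napiVersion}`;
}
```

With nan, the keys for Node 10 (ABI 64) and Node 12 (ABI 72) differ, so every new Node release needs another build; the N-API key stays the same for both.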

After migrating to libnut, the current dependency tree of nut.js looks like this:

Continuous Improvement

Now that nut.js had a solid foundation, it was time for improvements.

As a first step, I added documentation.

Besides an improved readme file, I also added auto-generated API docs, which are hosted via GitHub Pages.

The next thing I tackled were pre-releases.

Every push to the develop branch now triggers a pre-release build which publishes a development release to npm.

Whenever a new tag is pushed, a stable release will be published.

Stable releases are available under npm's default latest tag; development releases are published under the next tag.
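These release rules boil down to a small decision function. This is a sketch assuming the CI system exposes the pushed branch and tag; `planRelease` and the `-next.<buildId>` version suffix are hypothetical, not the actual nut.js pipeline.

```typescript
// Hypothetical release planner mirroring the rules described above.
interface CiEvent {
  branch?: string;
  tag?: string;
}

interface Release {
  distTag: "latest" | "next";
  version: string;
}

function planRelease(event: CiEvent, baseVersion: string, buildId: number): Release | null {
  if (event.tag) {
    // A pushed tag yields a stable release under npm's default "latest" tag.
    return { distTag: "latest", version: event.tag.replace(/^v/, "") };
  }
  if (event.branch === "develop") {
    // A push to develop yields a pre-release under the "next" tag.
    return { distTag: "next", version: `${baseVersion}-next.${buildId}` };
  }
  // Any other push only builds and tests; nothing is published.
  return null;
}
```

The resulting dist-tag maps onto `npm publish --tag next` for development builds, while a plain `npm publish` uses `latest` by default.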

Along the way I constantly improved the CI setup to make my life easier.

As a third improvement, I added a samples repository.

This monorepo holds several packages which demonstrate various use-cases of nut.js.

Samples range from keyboard and mouse interaction to Jest and Electron integration.

New Shores

So far, nut.js wrapped clipboardy, libnut and opencv4nodejs-prebuilt in what is, in my opinion, a nice API.

Initially, libnut was just a port of robotjs, so it provided exactly the same functionality.

Since I wanted libnut to be truly cross-platform compatible, new features had to work on all platforms or wouldn’t be added at all. So when I started working on a desktop highlighting feature, I suddenly found myself digging through Xlib, Win32 and AppKit documentation, writing C/C++ as well as Objective-C/Objective-C++.

An exciting experience which really made me smile like a little kid on Christmas Eve once I saw windows appearing on each platform!

Exactly the same thing happened when I added support for window interactions.

Being able to determine open windows and their occupied screen region paves the way for additional features, which again made me smile in front of my machine!
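As one illustration of what a known window region enables, a later feature could restrict an image search to a single window. Since a window may hang partially off-screen, its region would first be clipped to the display. `Region` and `clampToScreen` are illustrative names here, not nut.js API.

```typescript
// Hypothetical helper: clip a window's region to the visible display area.
interface Region {
  left: number;
  top: number;
  width: number;
  height: number;
}

function clampToScreen(win: Region, display: Region): Region | null {
  const left = Math.max(win.left, display.left);
  const top = Math.max(win.top, display.top);
  const right = Math.min(win.left + win.width, display.left + display.width);
  const bottom = Math.min(win.top + win.height, display.top + display.height);
  // No overlap means the window is entirely off-screen.
  if (right <= left || bottom <= top) return null;
  return { left, top, width: right - left, height: bottom - top };
}
```

For a 1920x1080 display and a window at left -100 with a width of 800, the clipped region starts at 0 and is 700 pixels wide.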

Testing this feature also really made me appreciate the JavaScript ecosystem.

What seemed like a pretty tough task at first could be achieved with a single implementation for all platforms by starting an Electron application on the fly during a test.

A single test now verifies that my native implementation works on every platform - isn’t this awesome?

Conclusion

So, how are things going after two years?

To be honest, I still enjoy working on nut.js very much!

I automated quite a lot early on, so now I’m able to concentrate on features and bugfixes.

I’m also still happy with my API design decisions.

API design is hard, and I guess you can’t make everybody happy, but I’m enjoying it myself, so that’s fine for me!

I’ve been able to fulfill my own requirement of a fast and easy install on every platform by spending some extra time on a pre-build setup, something I’m still proud of today!

As I already mentioned, automation plays a major role for nut.js.

But not only did I automate a lot of things, I also spent time building a proper test infrastructure using multiple CI systems and multi-staged pipelines to ensure I do not break things.

Being able to release fast and with confidence is really worth the investment!

Last, but not least, I was super excited when I noticed Red Hat had picked up nut.js for their vscode-extension-tester.

Call me a fanboy, but seeing a company I’d known for almost 20 years for their Linux distribution and open source work starting to use my framework is quite a thing for me!

Two years and still going strong! 💪
