I really enjoy working with Apache Spark, but if there is one thing that wears me out, it is having to repeat the same method names and commands over and over. To me that is simply unproductive, and this complaint of mine is what led me to write this post. But let's take it easy: this is just an experiment.

Requirements:

- Python >= 3.9

- Apache Spark >= 3.2 (the installer is available from the official Apache Spark downloads page)

- VS Code

The first thing we do when working with Spark is create a session.
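A minimal sketch of that first step, assuming a local run. The function name `create_session`, the application name `spark-helpers-demo` and the `local[*]` master are illustrative choices, not taken from the original post. PySpark is imported inside the function so the file can be imported even on a machine where PySpark is not installed yet.

```python
from __future__ import annotations

from typing import TYPE_CHECKING

if TYPE_CHECKING:  # only needed for the return type hint
    from pyspark.sql import SparkSession


def create_session(app_name: str = "spark-helpers-demo", master: str = "local[*]") -> SparkSession:
    """Create (or reuse) a SparkSession; app_name and master are hypothetical defaults."""
    from pyspark.sql import SparkSession  # imported lazily, at call time

    return (
        SparkSession.builder
        .appName(app_name)
        .master(master)  # local[*]: run locally using all available cores
        .getOrCreate()   # returns the already-running session if there is one
    )
```

Calling `spark = create_session()` gives you the `spark` object that every later snippet receives as a parameter.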

That's it. Next, we load a file.

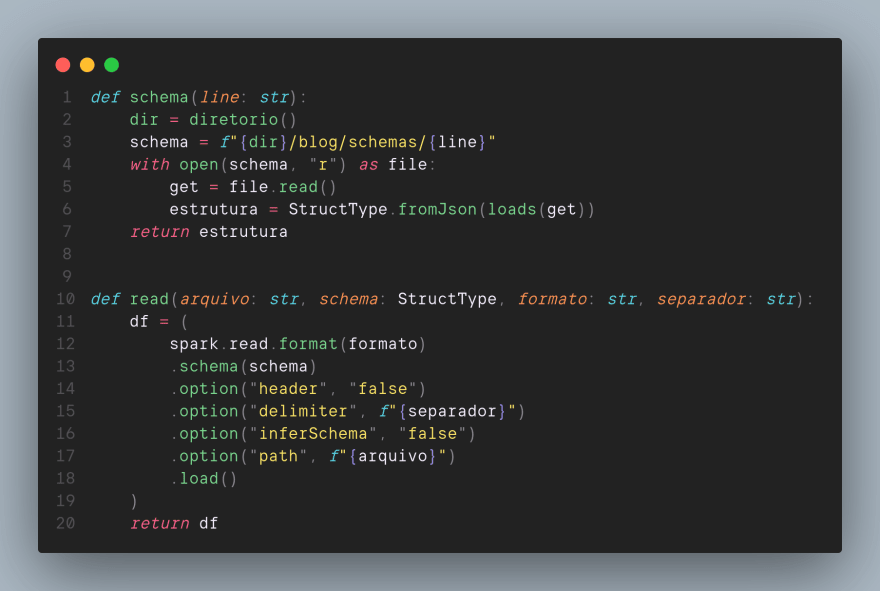
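Without any helper, each load means writing the whole reader chain by hand. The sketch below (the function name, file paths and options are hypothetical) shows how quickly this repeats as soon as a second file appears:

```python
def load_without_helpers(spark):
    """Load two CSV files the repetitive way; paths and options are hypothetical."""
    customers = (
        spark.read.format("csv")
        .option("header", "true")
        .option("sep", ",")
        .load("data/customers.csv")
    )
    # Same chain again, only the path changes: this is the repetition we want to remove.
    orders = (
        spark.read.format("csv")
        .option("header", "true")
        .option("sep", ",")
        .load("data/orders.csv")
    )
    return customers, orders
```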

Nice. But have you ever thought about what happens when we need to load more files and repeat the same command every time? I know: "copy, paste and modify the code, blah, blah, blah." We don't want that, because, let's face it, that is pure grunt work. So how can we improve this? In my project I created a folder called `functions`, and inside it a file called `core.py`.

I also created a file called `main.py`, which will be our main program and will import the methods from `core.py`. The code in `core.py` looks like this:

And in `main.py` we use it like this:
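A minimal sketch of a `read()` helper that could live in `functions/core.py`. The parameter names (`fmt`, `header`, `sep`, `schema`) and their defaults are assumptions for illustration, not the author's exact signature. The helper only calls methods on the `spark` object it receives, so the module never imports PySpark at runtime.

```python
# functions/core.py
from __future__ import annotations

from typing import TYPE_CHECKING, Optional

if TYPE_CHECKING:  # type hints only; the module never imports PySpark at runtime
    from pyspark.sql import DataFrame, SparkSession
    from pyspark.sql.types import StructType


def read(
    spark: SparkSession,
    path: str,
    fmt: str = "csv",
    header: bool = True,
    sep: str = ",",
    schema: Optional[StructType] = None,
) -> DataFrame:
    """Load a file into a DataFrame with a single call (hypothetical signature)."""
    reader = spark.read.format(fmt)
    if fmt == "csv":
        # header and sep only make sense for delimited text files
        reader = reader.option("header", header).option("sep", sep)
    if schema is not None:
        reader = reader.schema(schema)  # use the given schema instead of inferring one
    return reader.load(path)
```

In `main.py`, after `from functions.core import read` (assuming `main.py` runs from the project root so that `functions` is importable), each load shrinks to one line, for example `customers = read(spark, "data/customers.csv")` or `events = read(spark, "data/events.parquet", fmt="parquet")`. The file names here are hypothetical.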

Notice how much simpler things became: with the `read()` method I can load any number of files just by passing different parameters.

What if I need to create a view? Simple: in `core.py`, write this:

And in `main.py`:
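A minimal sketch of a view helper for `core.py`, built on the DataFrame methods `createOrReplaceTempView` and `createOrReplaceGlobalTempView`. The helper name `create_view` and the `global_view` flag are hypothetical.

```python
from __future__ import annotations

from typing import TYPE_CHECKING

if TYPE_CHECKING:
    from pyspark.sql import DataFrame


def create_view(df: DataFrame, name: str, global_view: bool = False) -> str:
    """Register df as a temporary view and return the name to query it by (hypothetical helper)."""
    if global_view:
        # Global temp views are registered in the `global_temp` database and are
        # visible to every session of the same Spark application.
        df.createOrReplaceGlobalTempView(name)
        return f"global_temp.{name}"
    # Session-scoped view: tied to the SparkSession that created it.
    df.createOrReplaceTempView(name)
    return name
```

In `main.py`, usage could look like `view = create_view(customers, "customers")` followed by `spark.sql(f"SELECT * FROM {view}").show()`. Returning the name means the caller does not have to remember the `global_temp.` prefix.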

Simple. And what if our schemas are stored in `.json` files? How do we load them?

In `main.py`:
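A minimal sketch, assuming the schema files use the JSON layout Spark itself produces with `df.schema.json()`: a top-level object with `"type": "struct"` and a `"fields"` list whose entries carry `name`, `type`, `nullable` and `metadata`. `StructType.fromJson` turns that dictionary back into a schema. The helper names `read_schema_json` and `load_schema` are hypothetical; the parsing step is kept separate so it can be checked without PySpark.

```python
from __future__ import annotations

import json
from typing import TYPE_CHECKING, Any, Dict

if TYPE_CHECKING:
    from pyspark.sql.types import StructType


def read_schema_json(path: str) -> Dict[str, Any]:
    """Parse a schema file in Spark's JSON schema format (e.g. saved from df.schema.json())."""
    with open(path, encoding="utf-8") as fh:
        data = json.load(fh)
    # Fail early with a clear message instead of an obscure error inside Spark.
    if data.get("type") != "struct" or "fields" not in data:
        raise ValueError(f"{path} does not look like a Spark struct schema")
    return data


def load_schema(path: str) -> StructType:
    """Turn the JSON file into a StructType that a reader's .schema() accepts."""
    from pyspark.sql.types import StructType  # lazy import keeps read_schema_json PySpark-free

    return StructType.fromJson(read_schema_json(path))
```

In `main.py`, assuming your `read()` helper forwards a `schema` argument to `DataFrameReader.schema()`, a call could look like `customers = read(spark, "data/customers.csv", schema=load_schema("schemas/customers.json"))`, where both paths are hypothetical. Supplying a schema also spares Spark from inferring column types.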

Finally, we need to write the files somewhere, which is also fairly simple:

In `main.py`:
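A minimal sketch of a `write()` helper for `core.py`, built on `DataFrameWriter.format`, `mode`, `partitionBy` and `save`. The helper name, the Parquet default, the `overwrite` default and the CSV header behaviour are assumptions for illustration.

```python
from __future__ import annotations

from typing import TYPE_CHECKING, Optional, Sequence

if TYPE_CHECKING:
    from pyspark.sql import DataFrame


def write(
    df: DataFrame,
    path: str,
    fmt: str = "parquet",
    mode: str = "overwrite",
    partition_by: Optional[Sequence[str]] = None,
) -> None:
    """Write df to path in one call (hypothetical helper and defaults)."""
    # mode is one of Spark's save modes: append, overwrite, ignore, error/errorifexists
    writer = df.write.format(fmt).mode(mode)
    if fmt == "csv":
        writer = writer.option("header", True)  # keep column names in CSV output
    if partition_by:
        writer = writer.partitionBy(*partition_by)  # one sub-directory per partition value
    writer.save(path)
```

In `main.py`, a call could look like `write(customers, "output/customers", partition_by=["country"])`; the output path and the `country` column are hypothetical.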

The complete code for `core.py`:
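A consolidated sketch of what `functions/core.py` could look like with all four helpers in one file. Every name, parameter and default here is a hypothetical reconstruction in the spirit of the post, not the author's exact code; only the Spark methods being called are real PySpark API.

```python
# functions/core.py (consolidated sketch; names and defaults are hypothetical)
from __future__ import annotations

import json
from typing import TYPE_CHECKING, Optional, Sequence

if TYPE_CHECKING:  # type hints only; callers pass in the live Spark objects
    from pyspark.sql import DataFrame, SparkSession
    from pyspark.sql.types import StructType


def read(spark: SparkSession, path: str, fmt: str = "csv", header: bool = True,
         sep: str = ",", schema: Optional[StructType] = None) -> DataFrame:
    """Load a file into a DataFrame with a single call."""
    reader = spark.read.format(fmt)
    if fmt == "csv":
        reader = reader.option("header", header).option("sep", sep)
    if schema is not None:
        reader = reader.schema(schema)
    return reader.load(path)


def create_view(df: DataFrame, name: str, global_view: bool = False) -> str:
    """Register df as a temp view and return the name to query it by."""
    if global_view:
        df.createOrReplaceGlobalTempView(name)
        return f"global_temp.{name}"
    df.createOrReplaceTempView(name)
    return name


def load_schema(path: str) -> StructType:
    """Build a StructType from a JSON file in Spark's schema format (df.schema.json())."""
    from pyspark.sql.types import StructType  # lazy import: only needed when called

    with open(path, encoding="utf-8") as fh:
        return StructType.fromJson(json.load(fh))


def write(df: DataFrame, path: str, fmt: str = "parquet", mode: str = "overwrite",
          partition_by: Optional[Sequence[str]] = None) -> None:
    """Save df to path; CSV output keeps a header row."""
    writer = df.write.format(fmt).mode(mode)
    if fmt == "csv":
        writer = writer.option("header", True)
    if partition_by:
        writer = writer.partitionBy(*partition_by)
    writer.save(path)
```

Passing `spark` and `df` in as arguments, instead of creating a session inside `core.py`, keeps the module free of side effects and lets `main.py` decide how the session is configured.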

I hope you enjoyed this little experiment, and I hope this example helps you in some way. Bye!
