Our pal the computer is a real workhorse. It executes a lot of different instructions concurrently and in parallel, and much of that is made possible by how its operating system handles things. Let's take a second to understand a little bit about how some of the moving parts fit together, specifically programs, processes, and threads.

A program is a set of instructions.

A process is the execution of those instructions.

A thread is a single sequence of execution within a process that is scheduled independently.

Let's map this to an analogy. Imagine a recipe for some very delicious bread.

The recipe itself is the program.

Preparing and baking the bread is the process.

An individual instance of baking the bread is a thread.

Now, consider this: a process can have multiple threads, just like two folks can independently work on different tasks to make the very delicious bread - one may be preparing the dough and the other might be pre-heating the oven. Maybe there are two loaves to be made, and they are both working on them independently at different stages. In any case, they are both executing from the same set of instructions, perhaps with overlap.

The analogy and these definitions are very high-level, so let's drop them and move a little closer to the metal.

As mentioned earlier, a program is a sequence of instructions to be executed when the program actually runs. Sometimes this is referred to as a passive set of instructions. When the program is not currently running, it resides on disk as opposed to in memory (RAM).

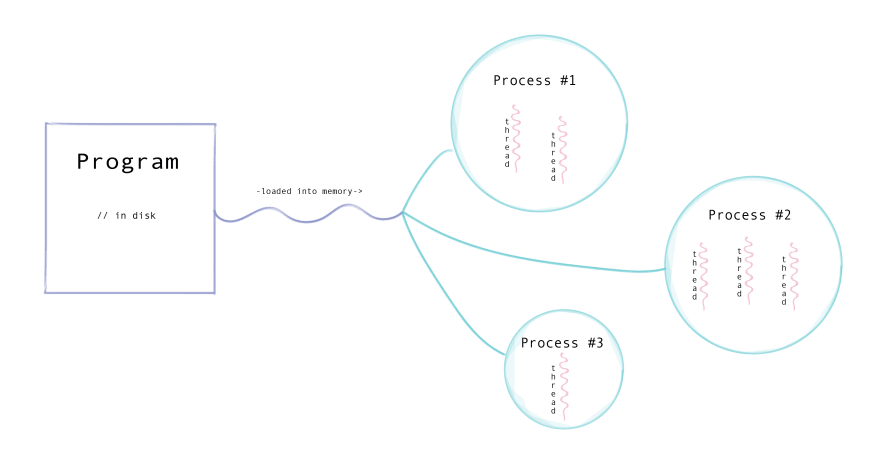

When the program starts running, it is loaded into memory and begins one or more processes, each an instance of the running program. A process can be anything from a background task, like a spell-checker, to an application such as a web browser or photo editor. It isn't always a one-to-one mapping between a program and a process. A program can have many processes just as a process can have many threads - and, if both are simple, there may only be one of each.
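To make the "one program, many processes" idea concrete, here is a minimal sketch in Python. It assumes a Python interpreter is available at `sys.executable`; the names `CHILD_PROGRAM` and `launch_processes` are made up for this illustration. The same tiny program is started twice, and each run becomes its own process with its own PID.

```python
import os
import subprocess
import sys

# A tiny "program": a passive set of instructions, stored here as text.
# (CHILD_PROGRAM and launch_processes are illustrative names.)
CHILD_PROGRAM = "import os; print(os.getpid())"


def launch_processes(count):
    """Start `count` processes from the same program and collect their PIDs."""
    # Start every child first so they all exist at the same time.
    children = [
        subprocess.Popen(
            [sys.executable, "-c", CHILD_PROGRAM],
            stdout=subprocess.PIPE,
            text=True,
        )
        for _ in range(count)
    ]
    # Each child prints its own PID; read it back once the child finishes.
    return [int(child.communicate()[0].strip()) for child in children]


child_pids = launch_processes(2)
print("parent PID:", os.getpid())
print("child PIDs:", child_pids)
```

The exact numbers will differ on every run, but the two children and the parent should each report a different PID, even though both children ran the very same instructions.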

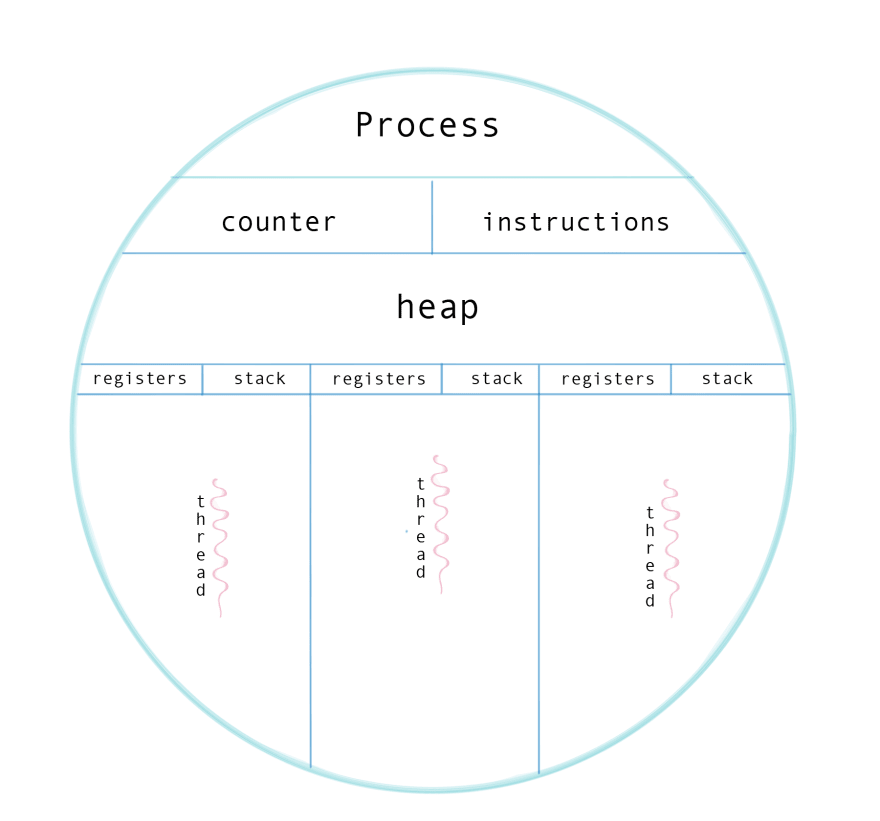

A process has its own data structure maintained by the operating system - a process control block, or PCB. This contains information such as its process ID (PID), program counter, CPU registers, scheduling information, state, privileges, memory management details and all that good stuff. Each process also gets its own memory space, which includes a stack and a heap. The stack holds function calls and local variables, growing and shrinking as functions are called and return, whereas the heap is used for dynamic memory during execution. Each process independently has its own PCB. The operating system uses information such as the program counter and registers to pause and resume processes so that everything works together smoothly, both with other processes and independently. Here's a graphic representation of a multi-threaded process:

A thread exists within a process. It may be more accurate to say that it is part of the process itself - a unit of its execution. A process very well may have multiple threads, but it can likewise have only one. In the case of a single-threaded process, the thread and the process are one and the same. The process is the execution of a sequence of instructions, and the single thread is the only sequence being executed. So, what about multi-threaded processes? Well, in that case, each thread in an individual process will be executing its own sequence of instructions at (more or less) the same time.
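Here is a small sketch of a multi-threaded process, using Python's standard `threading` module. The names `worker`, `seen`, and `NUM_THREADS` are made up for this example. Each thread runs its own sequence of instructions, yet they all report the same PID because they live inside the same process.

```python
import os
import threading

NUM_THREADS = 3
# The barrier makes every thread wait until all of them have started,
# so all three are alive at the same moment.
barrier = threading.Barrier(NUM_THREADS)
seen = {}  # thread name -> (process ID, thread ID)


def worker(name):
    """The sequence of instructions each thread executes."""
    barrier.wait()
    seen[name] = (os.getpid(), threading.get_ident())


threads = [
    threading.Thread(target=worker, args=(f"thread-{i}",))
    for i in range(NUM_THREADS)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

for name, (pid, thread_id) in sorted(seen.items()):
    print(name, "runs in process", pid, "as thread", thread_id)
```

The PID column should be identical on every line, while the thread IDs differ: one process, several independent units of execution.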

How about memory? Well, as you may recall from above, each process has its own PCB, which includes information about how its memory is managed. A thread generally has its own stack, but all threads within a process share that process's heap.
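The split between private and shared memory can be sketched like this. Take it as a rough illustration rather than a precise picture: Python's runtime blurs the stack/heap line, but the idea carries over, since a function's local variables are private to each thread's call while a module-level object is shared by all of them. The names `shared_log` and `count_up` are made up for the example.

```python
import threading

shared_log = []          # one object, visible to every thread in the process
lock = threading.Lock()  # guards writes to the shared list


def count_up(name, limit):
    # local_total belongs to this call only; each thread gets its own copy.
    local_total = 0
    for i in range(1, limit + 1):
        local_total += i
    # The result goes into memory that all threads share.
    with lock:
        shared_log.append((name, local_total))


threads = [
    threading.Thread(target=count_up, args=(f"t{i}", 10 * i))
    for i in (1, 2, 3)
]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(sorted(shared_log))
# [('t1', 55), ('t2', 210), ('t3', 465)]
```

No thread ever sees another thread's `local_total`, but every thread writes into the same `shared_log`.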

As the number of threads within a process grows, its complexity scales too. One must consider how threads allocate from a shared memory space, how their executions may affect each other at run time, each thread's lifecycle and so on. Concurrent programs can be difficult to write because they challenge our default linear style of thinking. But, of course, multi-threaded processes are at times necessary, or at least ideal, for a process's execution.

Since a program can also launch multiple processes, there's a lot to consider in terms of how one might want to architect their program. For instance, each process has its own PCB and its own memory space, meaning it has its own dynamic memory. Threads share the dynamic memory space of the process they belong to, meaning sharing resources between two threads is generally much faster than between two processes. But it's all about trade-offs and what the program needs. Separate memory spaces may be desirable to protect one process's data from another. This is just one example of a trade-off to consider between the two, but it represents how operating systems and computer architecture play a part when designing software.
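A rough sketch of that trade-off, again in Python and again with made-up names (`state`, `bake_in_thread`, `CHILD_PROGRAM`): a thread can change the parent's data directly, while a separate process only ever works on a copy that has to be sent to it and sent back. JSON over standard input and output is just one simple way to pass the data along, chosen here for the example.

```python
import json
import subprocess
import sys
import threading

state = {"loaves": 0}


def bake_in_thread():
    # Same memory as the main thread: the change is visible right away.
    state["loaves"] += 1


t = threading.Thread(target=bake_in_thread)
t.start()
t.join()
after_thread = state["loaves"]

# A child process cannot touch `state`; it receives a serialized copy,
# changes the copy, and has to send the result back explicitly.
CHILD_PROGRAM = (
    "import json, sys; "
    "s = json.load(sys.stdin); "
    "s['loaves'] += 1; "
    "print(json.dumps(s))"
)
result = subprocess.run(
    [sys.executable, "-c", CHILD_PROGRAM],
    input=json.dumps(state),
    capture_output=True,
    text=True,
    check=True,
)
child_view = json.loads(result.stdout)

print("after thread:", after_thread)   # 1
print("child's copy:", child_view)     # {'loaves': 2}
print("parent still:", state)          # {'loaves': 1}
```

The thread's update costs nothing extra, but nothing protects `state` from it either. The process's update requires serializing and copying data, and in exchange the parent's `state` stays untouched unless it chooses to accept the child's result.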

Sometimes it's easy to forget that there are a lot of moving parts abstracted away from us, tiny and otherwise. This is just a high-level overview of it all, but hopefully it provides a little insight for your curious minds!

Here are some good resources for further learning:

- Wikipedia - program, process, thread

- TutorialSprint for Operating Systems

- More in-depth article between the three

- Video - OS Basics

- Playlist - Semester Long OS Class

With <3, happy coding!
