If you're just joining us, please take a look at part 1 in this 3-part series.

This blog post is about the Proxy. Remember, we have 3 services in this architecture: Proxy -> Server <- Origin

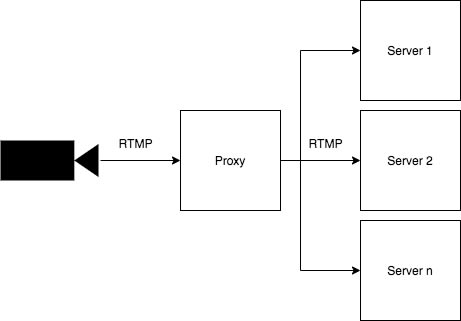

How do we route stateful RTMP traffic to a fleet of RTMP Servers?

We need a single endpoint like rtmp.finbits.io.

A user will push RTMP using a URL like rtmp.finbits.io/stream/<stream key>

RTMP is stateful, which makes load balancing and scaling much more challenging.

If this were a stateless application we'd use AWS ALB with an Auto Scaling Group.

Can we use AWS ALB? Nope, ALB doesn't support RTMP.

An Auto Scaling Group might work but scaling down will be a challenge. You wouldn't want to terminate a Server that a user is actively streaming to.

Why not just redirect RTMP directly to the Servers? This would be great, but RTMP redirects are fairly new and not all clients support them.

So, how do we route stateful RTMP traffic to a fleet of RTMP Servers?

We can use HAProxy and weighted Route53 DNS routing. We could have any number of Proxy (HAProxy) services running, all with public IPs, and then route traffic to all instances via Route53 weighted record sets.
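As a rough illustration of the weighted record sets mentioned above, here is a minimal sketch that builds a change batch in the shape Route 53's ChangeResourceRecordSets API expects, with one weighted A record per HAProxy instance. The function name, the `proxy-N` identifiers, the TTL, and the example IPs are all assumptions for illustration and are not taken from the actual stack.

```javascript
// Build a Route 53 change batch with one weighted A record per HAProxy instance.
// Record name, TTL, weight, and IPs are illustrative assumptions.
function buildWeightedRecords(recordName, proxyIps, weight = 10, ttl = 60) {
  return {
    Changes: proxyIps.map((ip, index) => ({
      Action: "UPSERT",
      ResourceRecordSet: {
        Name: recordName,
        Type: "A",
        SetIdentifier: `proxy-${index}`, // must be unique per weighted record
        Weight: weight, // equal weights split DNS answers evenly
        TTL: ttl,
        ResourceRecords: [{ Value: ip }],
      },
    })),
  };
}

// Example: two HAProxy instances behind rtmp.finbits.io (documentation IPs).
const changeBatch = buildWeightedRecords("rtmp.finbits.io.", [
  "203.0.113.10",
  "203.0.113.11",
]);
console.log(JSON.stringify(changeBatch, null, 2));
```

The resulting object would be passed as the change batch, together with your hosted zone ID, when calling ChangeResourceRecordSets. With equal weights, DNS answers should be spread roughly evenly across the instances.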

The only gotcha with this service is making sure it picks up any new Servers that are added to handle load.

When the service starts we fetch the list of Servers (IP:PORT) and add them to the HAProxy configuration. Now we're ready to route traffic.

We then run a cron job to perform this action again, to pick up any new Servers. HAProxy is reloaded with zero downtime.
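The refresh step can be sketched roughly as follows. This is not the actual cron script, only a minimal sketch of the idea: check whether the Server list changed, and if so start a new HAProxy process with `-sf` so the old process finishes its existing connections before exiting. The function names, the config path, and the example PID are assumptions. The pidfile path matches the config shown below.

```javascript
// Decide whether the Server list changed since the last cron run.
// Order is ignored, so a reshuffled but identical list does not trigger a reload.
function serversChanged(previous, current) {
  const a = [...previous].sort();
  const b = [...current].sort();
  return a.length !== b.length || a.some((server, i) => server !== b[i]);
}

// Arguments for a soft reload: a new HAProxy process starts with the new
// config, and -sf asks the listed old process(es) to finish gracefully.
function reloadArgs(configPath, pidFile, oldPids) {
  return ["-f", configPath, "-p", pidFile, "-sf", ...oldPids.map(String)];
}

// Example run with hypothetical Server addresses and a hypothetical old PID.
const previous = ["10.0.1.12:1935"];
const current = ["10.0.1.12:1935", "10.0.2.34:1935"];
if (serversChanged(previous, current)) {
  const args = reloadArgs(
    "/usr/local/etc/haproxy/haproxy.cfg", // assumed config location
    "/var/run/haproxy.pid",
    [42] // in practice, read from the pidfile
  );
  console.log("haproxy " + args.join(" "));
}
```

Because RTMP connections are long-lived, the old process may linger until its active streams end, which is what keeps existing streams from being dropped during a reload.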

Let's take a look at the HAProxy config.

    global
      pidfile /var/run/haproxy.pid
      maxconn <%= servers.length %>

    defaults
      log global
      timeout connect 10s
      timeout client 30s
      timeout server 30s

    frontend ft_rtpm
      bind *:1935 name rtmp
      mode tcp
      maxconn <%= servers.length %>
      default_backend bk_rtmp

    frontend ft_http
      bind *:8000 name http
      mode http
      maxconn 600
      default_backend bk_http

    backend bk_http
      mode http
      errorfile 503 /usr/local/etc/haproxy/healthcheck.http

    backend bk_rtmp
      mode tcp
      balance roundrobin
      <% servers.forEach(function(server, index) { %>
      server media<%= index %> <%= server %> check maxconn 1 weight 10
      <% }); %>

As you can see, we're using the EJS template engine to generate the config.

global

    global
      pidfile /var/run/haproxy.pid
      maxconn <%= servers.length %>

We store the pidfile so we can use it to reload HAProxy when our cron job runs to update the Servers list.

'maxconn' is set to the number of Servers because, in our design, each Server can only accept 1 connection. FFmpeg draws a lot of CPU and we're using Fargate with lower-CPU tasks.

You could use EC2 instances instead of Fargate, with high-performance instance types. Then you could handle more connections per Server.

It might be cool to also use NVIDIA hardware acceleration with FFmpeg, but I didn't get that far. It was getting kinda complicated with Docker.

frontend

    frontend ft_rtpm
      bind *:1935 name rtmp
      mode tcp
      maxconn <%= servers.length %>
      default_backend bk_rtmp

Here we declare the RTMP frontend on port 1935. It forwards to the RTMP backend below.

backend

    backend bk_rtmp
      mode tcp
      balance roundrobin
      <% servers.forEach(function(server, index) { %>
      server media<%= index %> <%= server %> check maxconn 1 weight 10
      <% }); %>

The backend does the routing magic. In our case it uses a simple roundrobin load balancing algorithm.
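To make the template loop concrete, here is a plain-JavaScript stand-in for the EJS `forEach` in the bk_rtmp backend. It is not the EJS rendering itself, only a sketch of what that loop expands to; the function name and the example Server addresses are assumptions.

```javascript
// Plain-JavaScript equivalent of the EJS loop: one "server" line per Server.
function renderServerLines(servers) {
  return servers
    .map((server, index) => `server media${index} ${server} check maxconn 1 weight 10`)
    .join("\n");
}

// Example with two hypothetical Servers (IP:PORT).
console.log(renderServerLines(["10.0.1.12:1935", "10.0.2.34:1935"]));
// server media0 10.0.1.12:1935 check maxconn 1 weight 10
// server media1 10.0.2.34:1935 check maxconn 1 weight 10
```

Each Server gets a unique name (media0, media1, ...), a health check, and a limit of 1 connection, matching the one-stream-per-Server design.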

http

The HTTP frontend and backend serve a static HTTP response for our Route 53 healthcheck.
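The trick here is that the bk_http backend has no servers, so HAProxy would normally answer every request with a 503, and the `errorfile 503` directive replaces that answer with the raw contents of healthcheck.http. The actual file isn't shown in this post, so the following is only a sketch of what such a file could contain, assuming it returns a 200 so the health check passes. The function name, headers, and body are assumptions.

```javascript
// Build a raw HTTP response of the kind an HAProxy errorfile contains.
// HAProxy sends the file verbatim, so it must include the status line,
// headers, a blank line, and then the body.
function buildHealthcheckFile(body = "OK") {
  return (
    [
      "HTTP/1.0 200 OK",
      "Cache-Control: no-cache",
      "Connection: close",
      "Content-Type: text/plain",
      "", // blank line separates headers from body
      body,
    ].join("\r\n") + "\r\n"
  );
}

// Print the file contents, with line endings made visible.
console.log(JSON.stringify(buildHealthcheckFile()));
```

Writing the returned string to healthcheck.http would give the HTTP frontend on port 8000 a fixed response to serve for every request.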

Service

Now it's time to deploy our Proxy.

The Proxy service has 2 stacks: ecr and service.

First, create the Docker ECR registry.

    sh ./stack-up.sh ecr

Now we can build, tag, and push the Docker image to the registry.

First, update the package.json scripts to include your AWS account ID.

Then run the following command:

    yarn run deploy <version>

Now we can deploy the service stack, which will deploy our new image to Fargate.

First update the Version here.

Then run:

    sh ./stack-up.sh service

Your Proxy should now be running in your ECS cluster as a Fargate task.

Now on to Part 3, the Origin, to learn how we route HTTP traffic to the appropriate HLS Server.

Originally published here: https://finbits.io/blog/live-streaming-proxy/