Using the cloud reduces on-premises infrastructure costs and related maintenance. Instead of deploying more servers, storage, and networking components to your own datacenter, you deploy them as cloud resources. However, deploying cloud resources also risks over-provisioning, under-use, and keeping resources running that are not always needed or, even worse, are no longer in use.

To help you avoid this waste, Puppet created Relay. This tool enables you to automate DevOps cloud maintenance, including automatically cleaning up resources you no longer need. This reduces resource waste while saving DevOps time, helping your team focus on delivering exciting new product features.

Cleaning up Azure

In this article, we walk you through a common scenario. You may be using Azure infrastructure components, like Virtual Machines and related Virtual Networking resources, together with Azure Load Balancers. When you no longer need a Virtual Machine and delete it, you may forget about its Azure Load Balancer. The Load Balancer then keeps running and keeps costing you money. A Relay workflow helps you clean it up.

To use Relay:

- Activate your Relay account at relay.sh.

- Define a connection between Relay and Azure, using an Azure service principal.

- Create your Relay workflow, which then performs the cleanup.

Let’s examine each of these steps with step-by-step guidance. If you already have an Azure subscription with administrative access, you can follow these steps in your own environment.

Setting Up Relay

Setting up a Relay account is straightforward. Relay is a cloud-hosted service with nothing to download, install, update, or maintain.

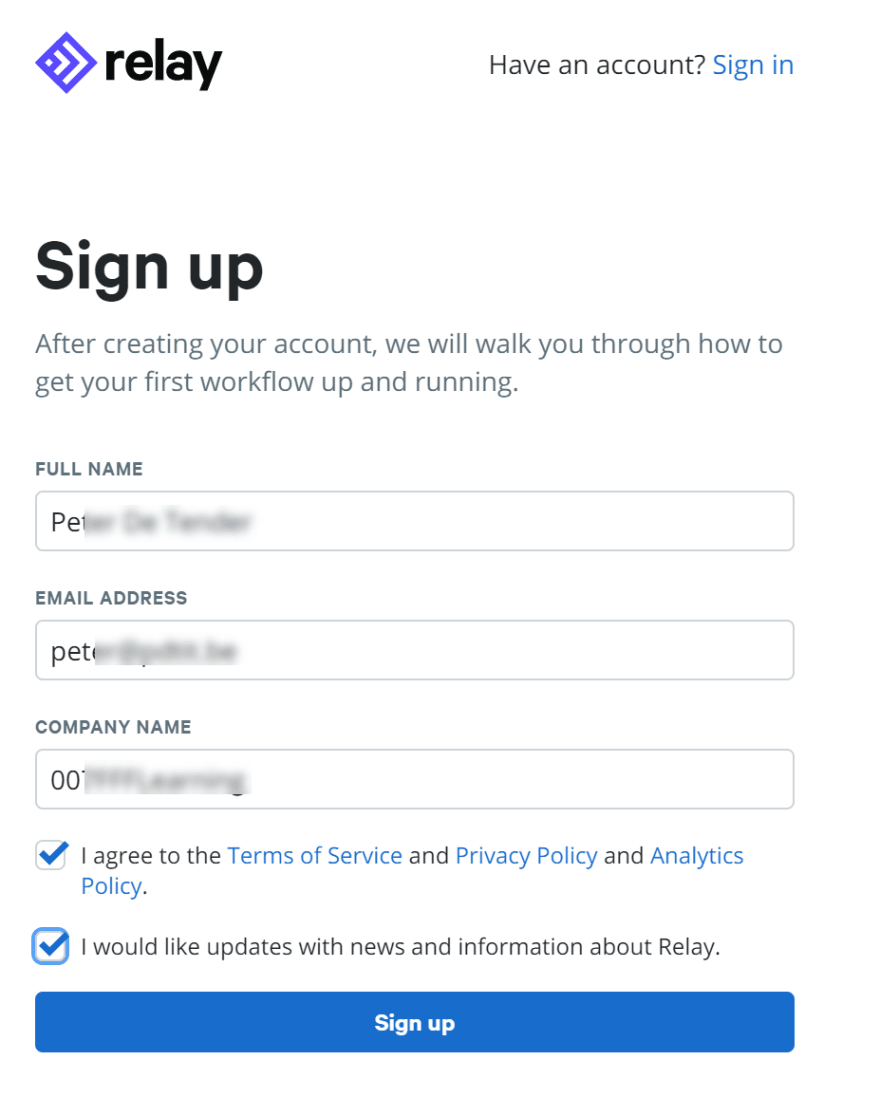

First, create a new Relay account by completing the required fields on the signup page.

After clicking the activation link in your email, create a complex password and confirm it. That’s all it takes.

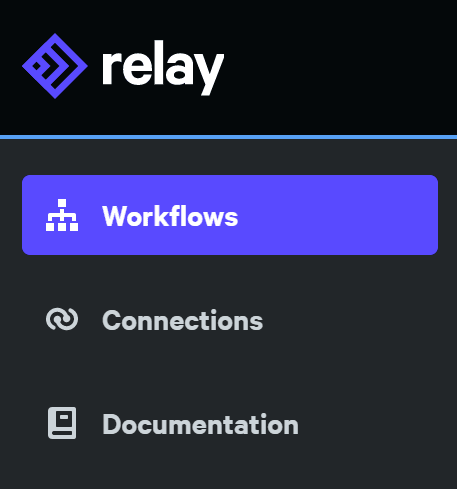

Then, after successfully logging on to the Relay platform, you are ready to start. From the Relay portal, browse sample workflows or create a new one from scratch.

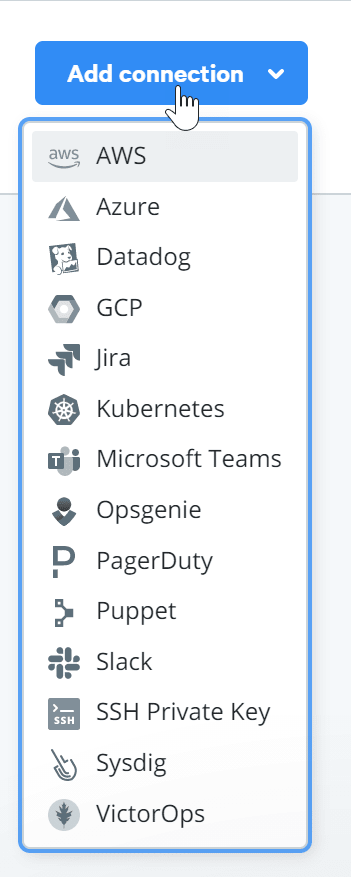

There is also an option to define connections, where you specify the service account of your cloud platform. Relay supports many different public and private cloud environments, including Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and Kubernetes. In our example, we will use Azure, but the process is similar in all environments.

Configuring Access to Azure

Before creating a workflow, let’s start by defining a connection for Azure. This relies on an Azure Active Directory service principal. Think of this object as just another user account, used by an application and similar to an Azure administrative account.

Once you create the service principal, apply Azure Role Based Access Control (RBAC) permissions, limiting this account’s administrative capabilities to keep your production environment secure. Optionally, you can read more about service principal objects.

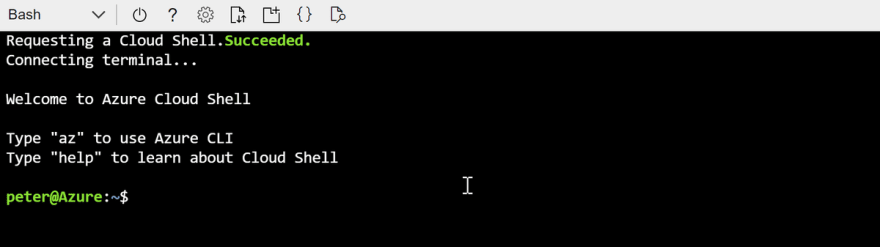

There are several ways to create a service principal, such as Azure Portal, Azure Cloud Shell, PowerShell, ARM Template, or REST API. We will show you how to create the service principal using Azure Cloud Shell.

First, navigate to the Azure Portal, and open Azure Cloud Shell from the top right menu. Note: if this is your first time using Azure Cloud Shell, it will ask you to create an Azure Storage Account and an Azure file share. Complete this step to continue. Select Bash as the interface.

Type the following Azure command-line interface (CLI) command:

az ad sp create-for-rbac -n "Relaysp"

This creates the service principal in your Azure Active Directory, and shows the actual account credential details as Shell output. Copy this information for later use.

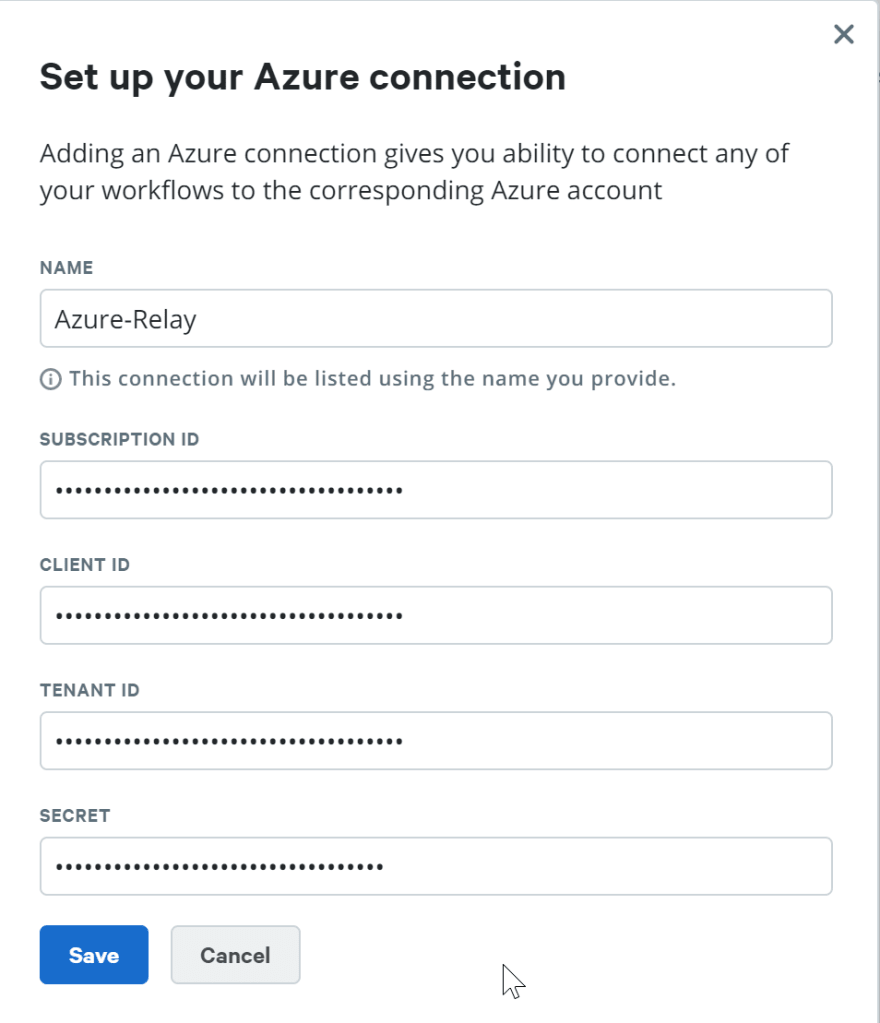

Go to the Relay portal and select “Connections”. Next, click the “Add Connection” button and choose Azure from the list. This opens the “Set up your Azure Connection” window. Complete the fields, copying the information from the Cloud Shell output, as shown in the below example:

Relay field name - Azure service principal field name:

- Subscription ID - Subscription

- Client ID - appId

- Tenant ID - tenant

- Secret - password
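
If you prefer to double-check the mapping before pasting values into the form, the list above can be sketched in a few lines of Python. This is only an illustration: the key names `appId`, `tenant`, and `password` follow the Cloud Shell output described above, the function name `relay_connection_fields` is hypothetical, and we assume you supply the subscription ID yourself (for example, from `az account show`) in case your CLI output does not include it.

```python
import json

# Left: field labels in the Relay connection form, as listed above.
# Right: keys in the JSON printed by `az ad sp create-for-rbac`.
RELAY_FIELD_TO_CLI_KEY = {
    "Client ID": "appId",
    "Tenant ID": "tenant",
    "Secret": "password",
}


def relay_connection_fields(cli_output: str, subscription_id: str) -> dict:
    """Return the values to paste into the Relay Azure connection form.

    Hypothetical helper: the subscription ID is passed in separately
    (assumption) rather than read from the CLI output.
    """
    service_principal = json.loads(cli_output)
    fields = {"Subscription ID": subscription_id}
    for relay_field, cli_key in RELAY_FIELD_TO_CLI_KEY.items():
        fields[relay_field] = service_principal[cli_key]
    return fields


# Placeholder values only; your real output contains your own identifiers.
sample_output = json.dumps({
    "appId": "00000000-0000-0000-0000-000000000001",
    "displayName": "Relaysp",
    "password": "example-secret",
    "tenant": "00000000-0000-0000-0000-000000000002",
})

for label, value in relay_connection_fields(
    sample_output, "00000000-0000-0000-0000-000000000003"
).items():
    print(f"{label}: {value}")
```

Treat the secret like any other password: paste it into the Relay connection form and avoid storing it in scripts or source control.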

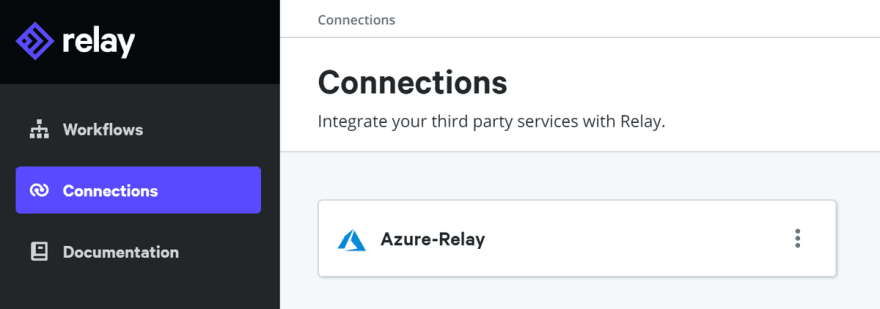

Save the information. This creates your connection.

We are now ready to create our workflow.

Creating a Workflow

There are a couple of different ways to create the workflow. Remember, it is a YAML dialect, allowing us to author the file in any intelligent text editor, like Visual Studio Code or similar.

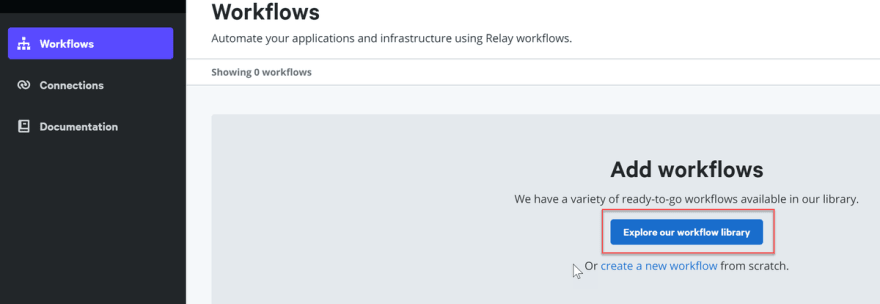

You could write the YAML from scratch, but Relay provides an extensive open source library of sample workflows on GitHub, integrated into the Relay portal. So, let’s have a look.

First, from the Relay portal, navigate to Workflows. Select “Explore our workflow library”.

From the category list, select “Cost optimization”.

Locate the “Delete empty Azure Load Balancers” workflow.

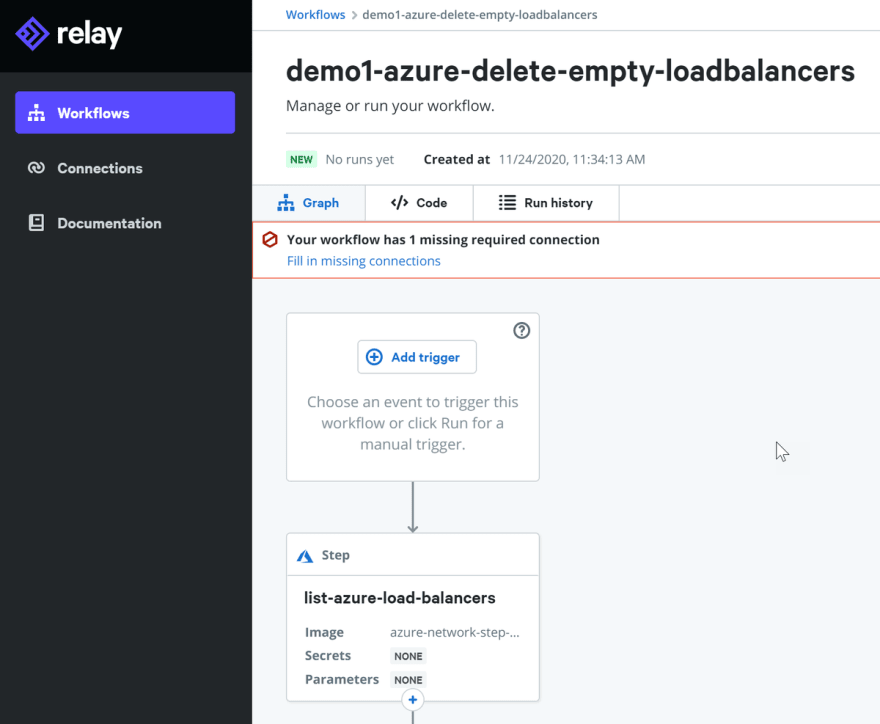

Click “Use this workflow”, provide a unique name, and confirm by pressing “Create workflow”.

This imports the workflow into your dashboard. From here, you need to complete some minimal configuration settings.

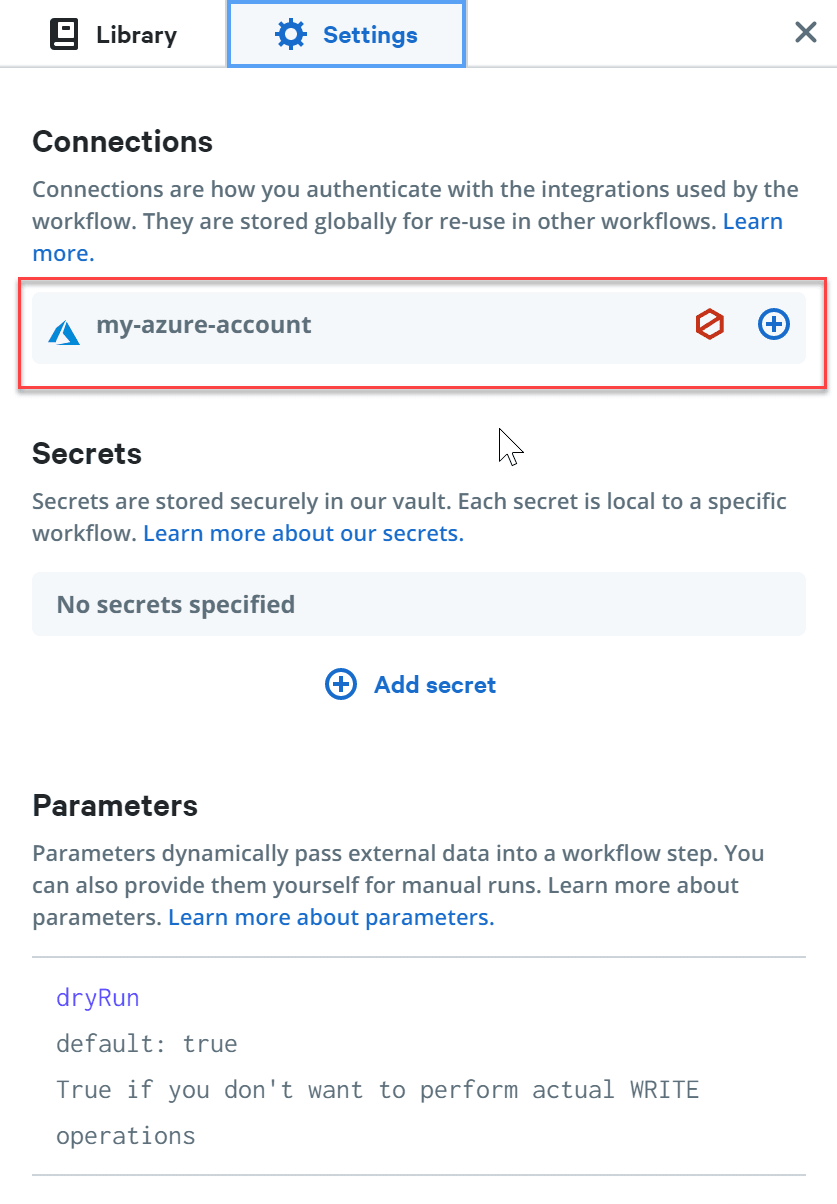

The first warning we get is “missing required connection”. You need to specify which Azure Connection the workflow should use, namely, the one you created in the previous step. To fix this error, click the “Fill in missing connections” link.

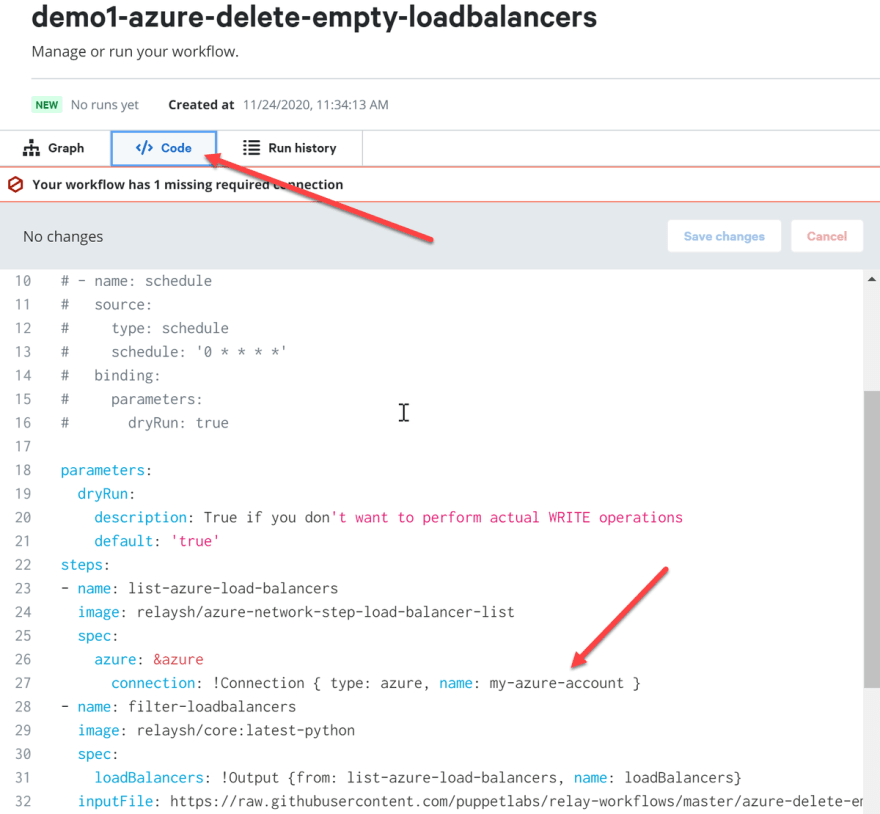

Here, it shows a default Connection of “my-azure-account”. You could add a new connection here, but we already created one. To use it, we need to switch back to the Code view of the workflow and make a change in the YAML.

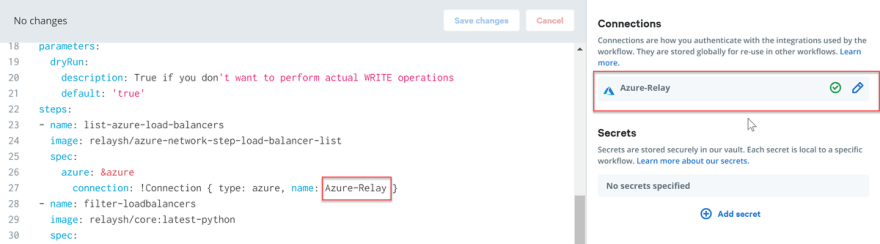

In the code editor, search for the keyword “connection” (line 27 in the sample file), and replace the name “my-azure-account” with the name of the Azure Connection you created earlier (Azure-Relay in our setup). Make sure you save the changes.

Notice how the connection is ‘recognized’ under the settings pane to the right.

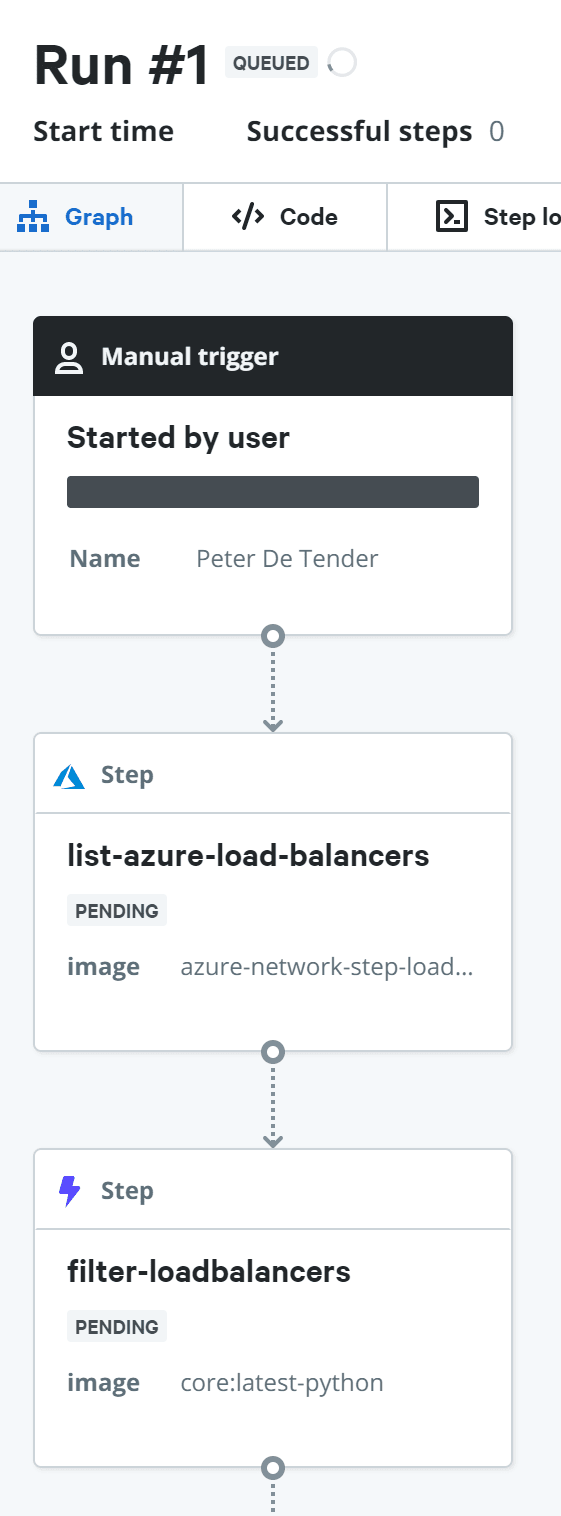

From here, let’s test the workflow to ensure it can successfully connect to our Azure subscription and detect Load Balancers. Click “Run” and confirm by pressing “run workflow” in the popup window. Also, notice this flow can run in dryRun mode, which means it won’t actually change anything in our environment.

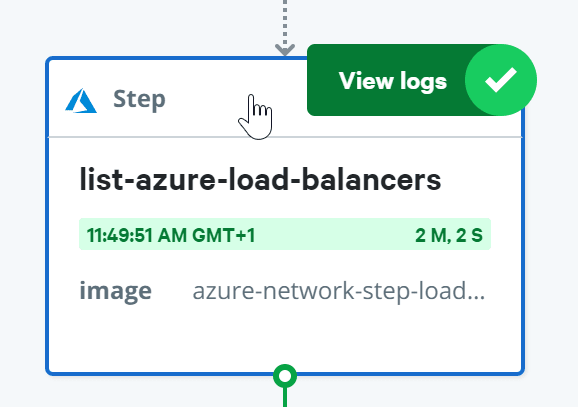

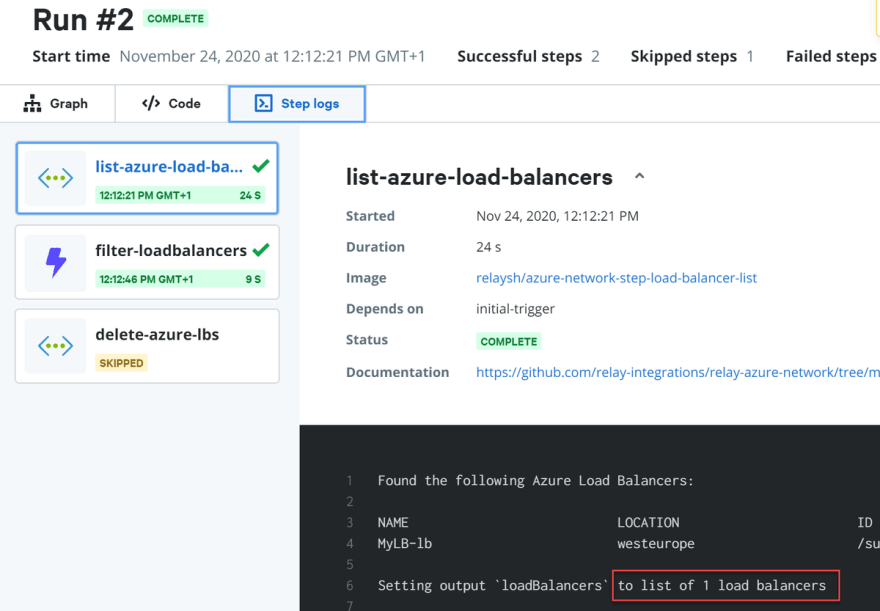

The workflow kicks off and displays the step-by-step sequence:

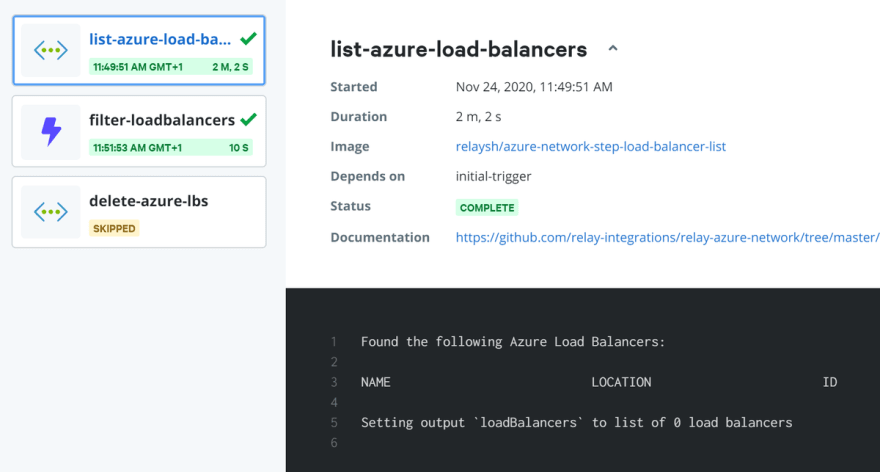

The dry run workflow worked fine. To look at each step in a bit more detail, hover over the step, for example, “list-azure-load-balancers”, and select view logs.

The following output is displayed:

As you can see, the workflow lists the load balancers it found. Note: we haven’t deployed a Load Balancer yet, so a result of zero is expected. The important part is that the step ran without errors.

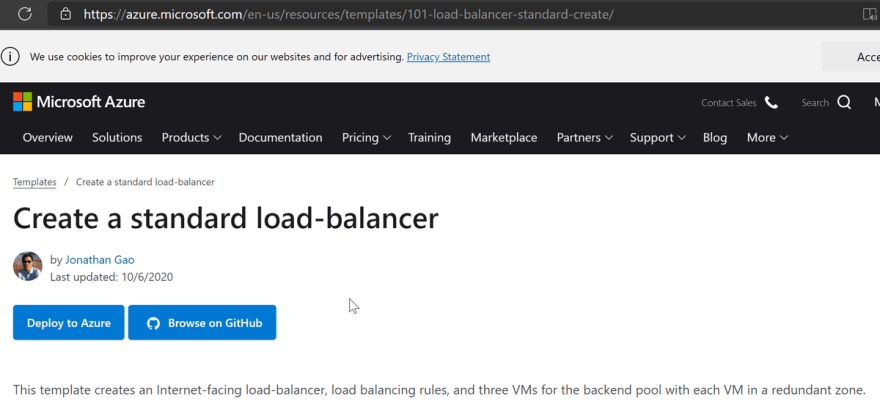

Remember, this workflow is checking for “empty load balancers”, which means it looks for Azure Load Balancers without any backend address pools. To make this a more meaningful test, let’s deploy a Load Balancer in Azure. If you need some assistance on how to do this, you might use this sample from Azure QuickStart Templates.

This deploys a Standard-type Azure Load Balancer, together with three Virtual Machines (VMs) as a back-end pool. If you don’t want to test with three VMs, you can update the Azure deployment template to deploy only one.

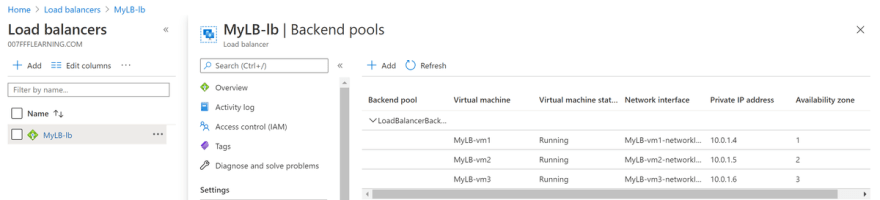

After deploying this template, the setup looks similar to this:

Let’s go back to Relay and run our workflow once more. Notice that, this time, the Load Balancer is actually detected.

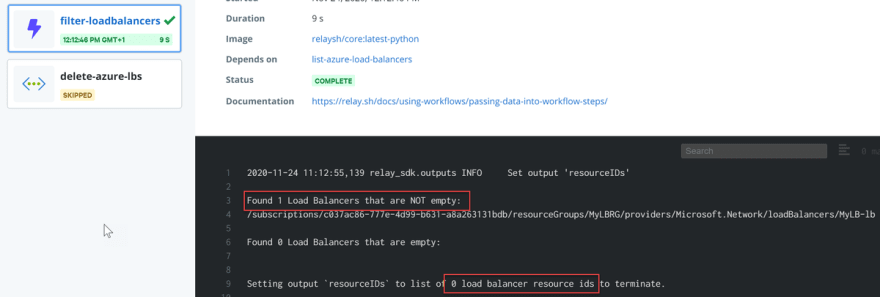

Next, select the next step in the flow, filter-loadbalancers, and view logs. This reveals that the detected Load Balancer is not empty.

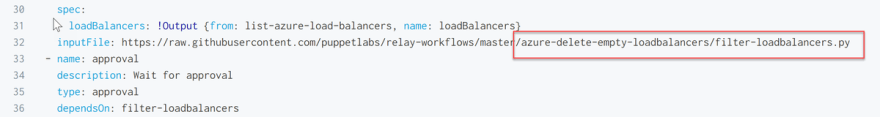

It is interesting to know how Relay identifies if the Load Balancer configuration is empty or not. Let’s have a look at the actual YAML code.

The workflow includes a pointer to a Python script, which looks like this:

You can see it is checking for any Load Balancers with empty “backend_address_pools”.

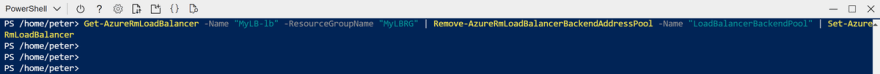
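
To make the idea concrete, here is a minimal sketch of that kind of check. It is not Relay’s actual script: we assume each Load Balancer is represented as a plain dictionary with a `backend_address_pools` key, and the function names and sample Load Balancer names are hypothetical.

```python
def is_empty(load_balancer: dict) -> bool:
    """Treat a Load Balancer as empty when it has no backend address pools.

    A missing key and an empty list are both treated as empty (assumption).
    """
    return not load_balancer.get("backend_address_pools")


def filter_empty_load_balancers(load_balancers: list) -> list:
    """Keep only the Load Balancers that have no backend address pools."""
    return [lb for lb in load_balancers if is_empty(lb)]


# Hypothetical sample data, shaped loosely like an Azure listing.
load_balancers = [
    {"name": "lb-in-use", "backend_address_pools": [{"name": "LoadBalancerBackendPool"}]},
    {"name": "lb-orphaned", "backend_address_pools": []},
]

empty = filter_empty_load_balancers(load_balancers)
print([lb["name"] for lb in empty])  # prints ['lb-orphaned']
```

Only the Load Balancer with an empty pool list is selected, which is why our freshly deployed Load Balancer with its VM back-end pool is reported as not empty.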

To run a more valid test, let’s go back to the Azure Load Balancer, and clear the backend pool configuration. The easiest way to remove this is by using the following PowerShell cmdlet:

Remove-AzureRmLoadBalancerBackendAddressPoolConfig

For more information on doing this, refer to the following Microsoft Doc:

Remove-AzureRmLoadBalancerBackendAddressPoolConfig

Note: if you used the default settings from the Azure Quickstart Template sample deployment earlier, use the following PowerShell script:

Get-AzureRmLoadBalancer -Name "MyLB-lb" -ResourceGroupName "MyLBRG" | Remove-AzureRmLoadBalancerBackendAddressPoolConfig -Name "LoadBalancerBackendPool" | Set-AzureRmLoadBalancer

The Load Balancer configuration shows an empty BackEndPool now:

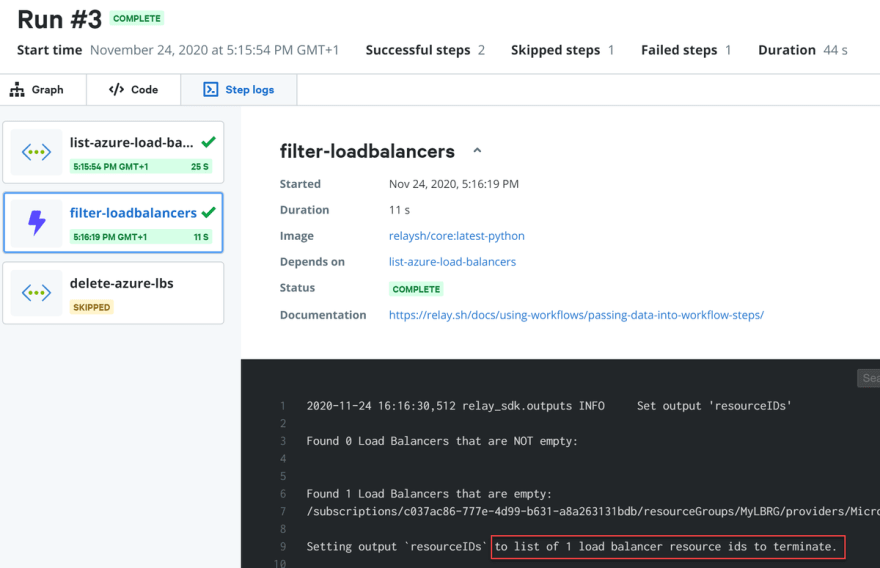

Run the Relay workflow once more to see what happens with the Azure resource. For this test, make sure you keep the DryRun value set to True. The result is the following:

This time, the empty Load Balancer is detected for removal.

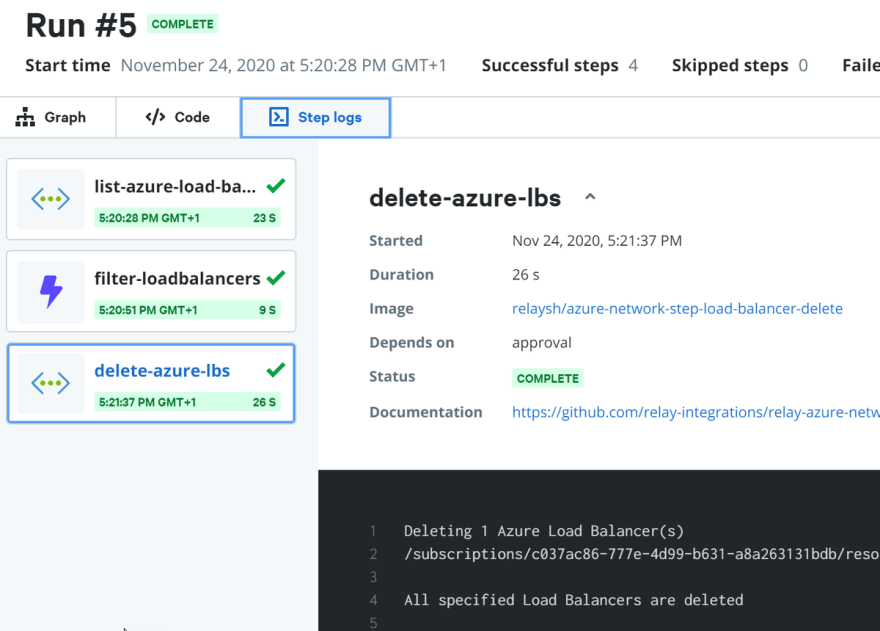

Next, trigger the workflow once more, this time setting the DryRun to False, which means it will actually force the removal of the Azure Load Balancer resource. You will also need to confirm “Yes” on the approval step in the workflow.
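
The dry-run switch is a common safety pattern, and it can be sketched roughly as follows. This is an illustration rather than Relay’s implementation: the `clean_up` function, its `delete` callback, and the sample resource name are hypothetical, and the callback stands in for whatever call really removes the resource.

```python
from typing import Callable, Iterable, List


def clean_up(
    resource_ids: Iterable[str],
    delete: Callable[[str], None],
    dry_run: bool = True,
) -> List[str]:
    """Delete each resource unless dry_run is set; return what was deleted."""
    deleted = []
    for resource_id in resource_ids:
        if dry_run:
            # Report the intended action without changing anything.
            print(f"[dry run] would delete {resource_id}")
            continue
        delete(resource_id)
        deleted.append(resource_id)
    return deleted


# Stand-in for a real delete call; it only records what it was asked to remove.
removed = []
clean_up(["lb-orphaned"], removed.append, dry_run=True)
print(removed)  # prints []
clean_up(["lb-orphaned"], removed.append, dry_run=False)
print(removed)  # prints ['lb-orphaned']
```

Defaulting `dry_run` to `True` means a forgotten parameter results in a report instead of a deletion, which is the safer failure mode for a cleanup job.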

After about a minute, we get positive confirmation saying all specified load balancers are deleted. Validating from the Azure Portal confirms this.

As a last step in this process, let’s look at the trigger option at the beginning of the workflow. Until now, we kicked off the workflow manually. This works fine for testing, but not for production. You may want to schedule this cleanup, validating your environment every week or maybe every night.

To schedule your load balancer cleanup, first, select the first step in the workflow, “Add trigger”.

This opens a list with different triggers to choose from, like running a “per day” job or triggering the workflow using an HTTP interaction. This could be interesting if you want to automate the process from your systems management tool. Whenever you connect to the trigger-HTTP-URL, the workflow executes. Let’s go back to the day-trigger scenario for now.

Select the “Run a trigger every day” option, which adds the corresponding trigger code to the workflow. The most important setting here is “schedule”, which uses standard cron notation to define when the workflow runs.
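
If cron notation is new to you, the short sketch below labels the parts of an expression. It assumes the common five-field format (minute, hour, day of month, month, day of week); the `describe_cron` helper is hypothetical and only splits the expression, it does not validate ranges or compute run times.

```python
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expression: str) -> dict:
    """Label each part of a five-field cron expression."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(
            f"expected {len(CRON_FIELDS)} fields, got {len(parts)}: {expression!r}"
        )
    return dict(zip(CRON_FIELDS, parts))


# "0 0 * * *" means minute 0 of hour 0, every day: a nightly run at midnight.
print(describe_cron("0 0 * * *"))
# prints {'minute': '0', 'hour': '0', 'day_of_month': '*', 'month': '*', 'day_of_week': '*'}
```

For a weekly cleanup instead, you would pin the last field to a single day, for example `0 0 * * 0` for Sunday at midnight.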

For more information on the cron definition, I can recommend this helpful link. You now have one less thing to monitor. You can check it off your busy DevOps team’s to-do list.

Next Steps

In this article, we introduced you to Relay, a product Puppet created to automate cloud management tasks. After configuring the cloud connection, we explored an example scenario: cleaning up empty Azure Load Balancers. We then learned how to trigger the workflow as a daily scheduled task.

Other example workflows enable you to easily set up maintenance of VMs, network interface controllers (NICs), and Disks.

While this example detailed how to clean up Azure, Relay also works with other cloud environments. You can adjust the example above to develop workflows for any Azure, AWS or GCP resources.

Automating your load balancer cleanup and other DevOps tasks saves you time and money as your team instead focuses on creating great new features for your software applications. Check out Relay to automate your DevOps maintenance today.
