It's difficult to say enough good things about our AI Agent technology. Some of the things you can do with it is truly mindblowing. In this article I will show and tell how you can use it to connect ChatGPT to your own database, using no-code, in some 5 minutes of total work.

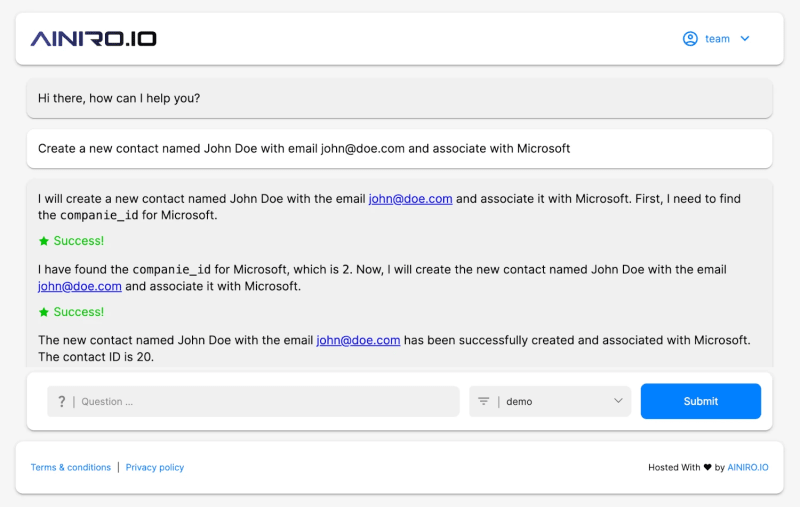

The process is based upon meta programming, where we start out with your database, generate CRUD API endpoints for it - For then to connect these CRUD endpoints to your AI type. The end result is a ChatGPT interface where you can interact with your SQL database to perform any CRUD operation you wish using natural language. Below is a screenshot of the final result.

As you can see in the above screenshot the LLM is smart enough to understand it needs to lookup Microsoft's ID first, before it creates the new contact.

The process is based upon Magic Cloud and Hyperlambda, and if you've got a cloudlet you can follow hands on. If not, you can purchase a cloudlet here. If you prefer to watch how I do it hands on, you can find a video tutorial below.

1. Connect to your Database

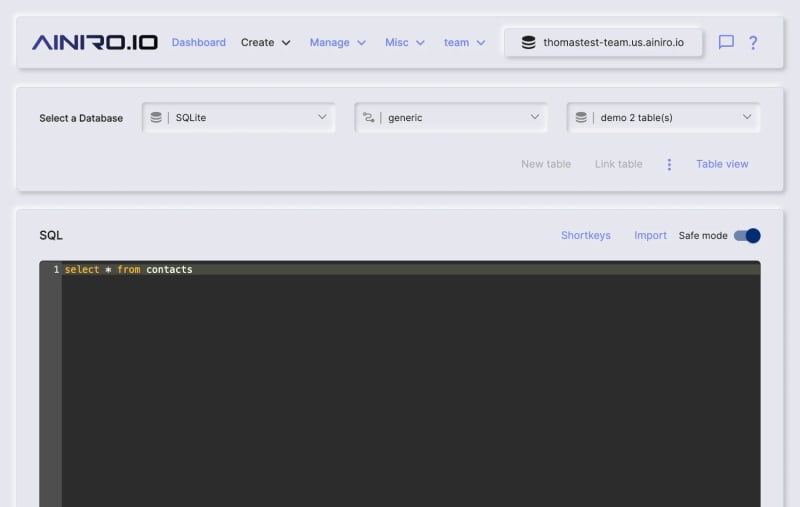

The first thing you'll need is to connect your cloudlet to your database. This implies adding your database connection string to Magic using its database component. Below is a screenshot.

When you add your connection string you have to exchange its database name with {database}. This is because Magic requires access to meta information, and the {database} parts is dynamically substituted as it creates a connection. When your connection works, you can test it in SQL Studio and verify you can access the database and execute SQL queries towards it.

2. Generate a CRUD API

The second thing you'll need to do is to generate a CRUD API using the backend generator. The backend generator allows you to connect to your database, choose settings, and generate CRUD API endpoints for every table you have. Below is a screenshot.

Feel free to mess around with its settings as much as you wish, but the default settings should be sane for most use cases. Once you click the "Generate endpoints" button, the system will use your settings to generate a CRUD API for every table in your database.

3. Install plugins

You will need two plugins to follow the above video. These are as follows.

- openai

- ai-expert-system

Go to Manage/Plugins and install both of these. This should resemble the following.

The OpenAI plugin provides us with some additional helper AI functions, and most importantly an additional "flavour". A flavour again is just a default template system message, allowing you to start out with 99% of everything wired up.

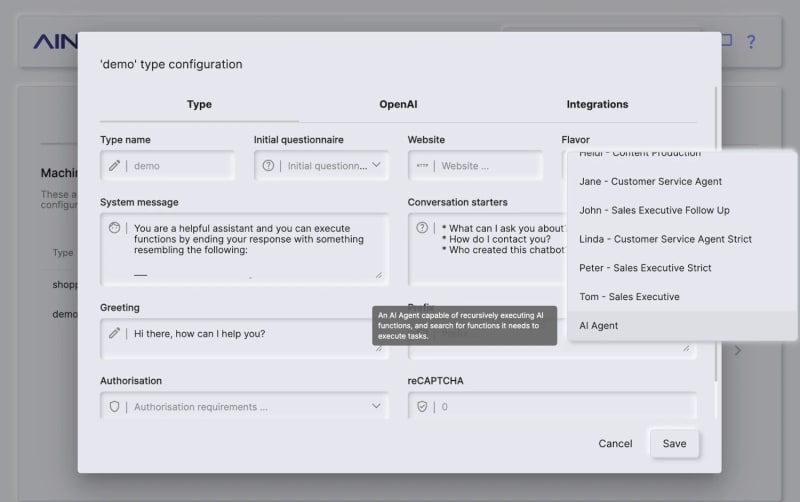

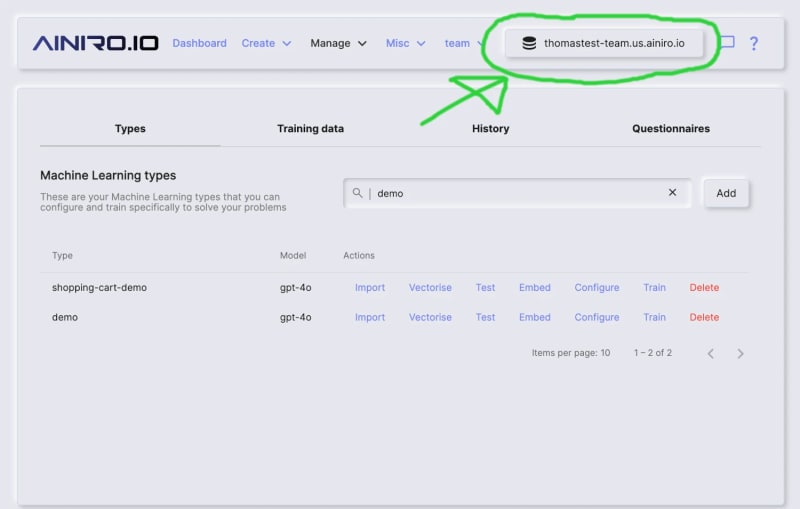

4. Create your Machine Learning type

Then you're going to need a machine learning type. This is a collection of VSS records, which the middleware will search through using VSS as the user is querying your type. In addition an ML type also contains additional settings such as the system message.

The system message is an "invisible message 1," always being sent to ChatGPT as your type is being queried. The only thing you really have to modify here is its flavour. Choose the "AI Agent" flavour, which will populate the system message with some default AI functions for you, allowing the type to perform meta prompting and handle AI functions correctly.

The system message is equivalent to your ChatGPT "instruction" when you're creating a GPT. If you want to study the system message the AI Agent flavour generates, you can find it in the "System message" textbox.

Notice, if you're creating a public AI chatbot, you will need to remove some parts - Especially the parts that allows for executing SQL queries. The AI Agent system message is for password created chatbots where you trust the users to not apply harm.

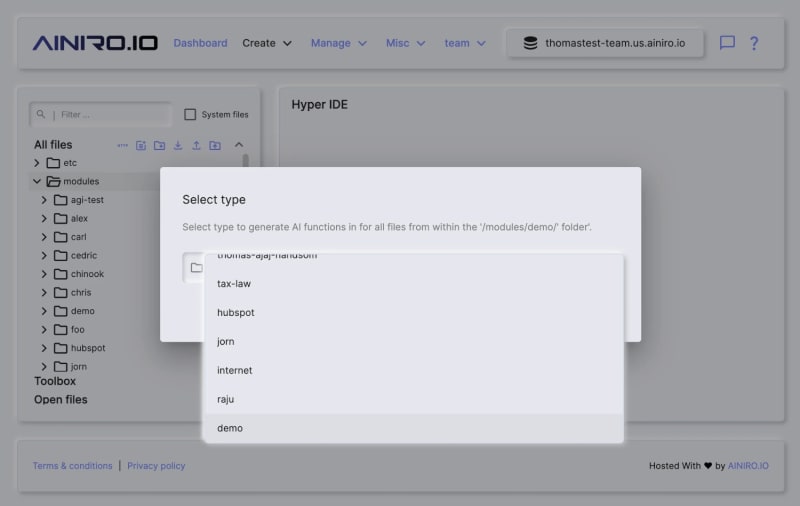

5. Add AI Functions

The next step is to add AI functions for all of your CRUD API endpoints. Go to Hyper IDE and hover over your module folder. This will show a flash icon that you can click. When you click it, you will be given a form resembling the following.

Choose the type you created in the above step and click "Generate". This process will generate AI functions for every single Hyperlambda file in your folder recursively. At this point you're done with a machine learning type that can do CRUD operations towards your database with natural language using ChatGPT as its LLM. However, before we can start using it we'll need to create embeddings for all training snippets.

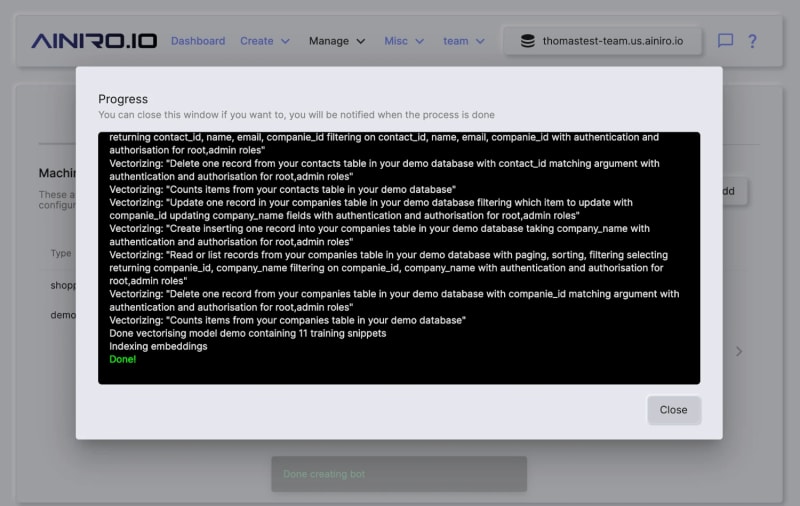

6. Vectorise your type

The final step you'll need to do is to vectorise your type. Go back to Machine Learning in Manage and click "Vectorize" on your type. This uses OpenAI's API to generate embeddings for your training snippets and resembles the following.

Once the above process is done, your type will have associated embeddings for all training snippets in its VSS database, and you can test it.

7. Test it

Click the button in the top right corner of your dashboard resembling the following.

This will show you a modal form where you can click the "Frontend" button. Click the frontend button, and play around with your type using the AI Expert System. Notice, if you've got popup blockers you might have to explicitly disable these in your browser for your sub-domain. Remember to choose the right type before you start asking questions.

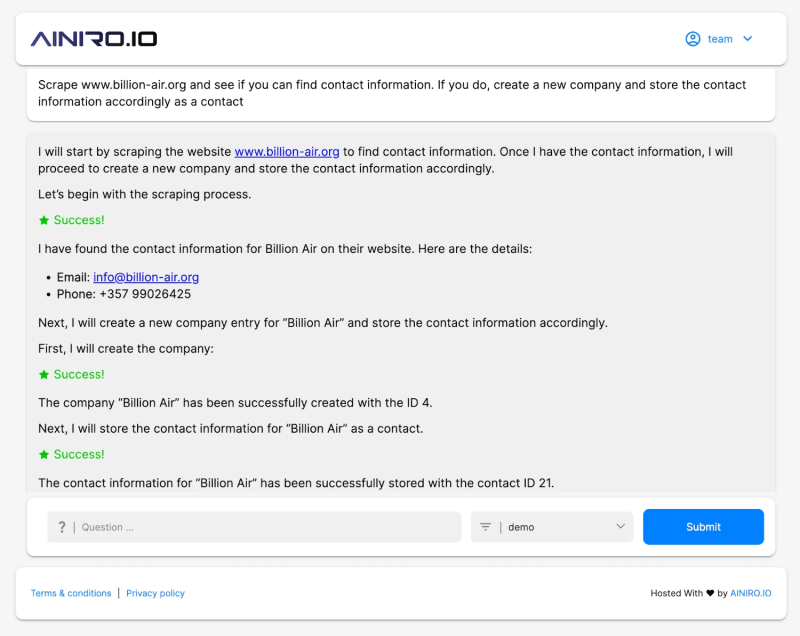

Below you can see me asking some questions towards my type.

You can ask anything related to your CRUD endpoints, such as "Count records from xyz" or "Show me contacts with a name containing 'John'", etc. But more interestingly is that you can give it "composite instructions." A composite instruction is when you ask it to execute multiple steps at the same time, such as; "Do x, then do y, then finish with z."

In addition you can have the type execute multiple AI functions with one prompt, such as; "Scrape xyz.com and find all name and emails from the page, then create a new company based upon the information found at xyz.com, for then to insert new contacts with all names you find on the page."

And yes, the AI Agent system instruction contains AI functions for web search and scraping websites in addition to all CRUD functions towards your database.

How it works

The thing is based upon AI Functions. An AI Function is just some prompt engineering that's referencing some Hyperlambda code. This allows us to teach ChatGPT to execute functions in our cloudlet middleware, which it then uses to answer your original question. The way it works is that we instruct ChatGPT to return JSON given some specific condition, such as for instance:

If the user asks you to count contacts, then return the following JSON ...

ChatGPT again extrapolates the function invocations allowing you to give it commands such as for instance:

"Find the first 3 contacts belonging to Microsoft and create an email to them telling I'll be late for our meeting"

The above will result in something resembling the following.

Above you can see how ChatGPT triggers an AI workflow, first retrieving contacts from your database, for then to create an email - Before it finally sends the email to the contacts. In theory there's no upper boundary for the complexity you can give it, but by default a type only allows for 5 consecutive AI functions to be invoked before it rejects the next invocation. This is to avoid never ending recursive invocations towards ChatGPT by accident.

This allows you to build your business logic either through your system message, manually created training snippets, or through prompt engineering from the frontend through its chat interface. To give you an idea of just how powerful this is, take a look at the following screenshot.

In the above message I ask it to scrape a website, look for contact information, for then to store the information in the database I created in the above video. One prompt executing 3 AI functions conditionally. Manually doing the above without an AI Agent would probably require 10 to 15 minutes of work. I could also supply it with a list of websites of course, in theory scraping 100+ websites to find contact information.

Wrapping up

In this article we connected ChatGPT to an SQL database, for then to generate CRUD endpoints wrapping our database, and finally embed these CRUD endpoints as AI functions into an AI chatbot that we could query using natural language.

The whole process took only 5 minutes and was done entirely without coding due to Magic's no-code and low-code capabilities.

I have seen entire software departments trying to accomplish the above and failing, even after months of trying. With Magic it was a 5 minute job, with zero coding required, possible to do exclusively using prompt engineering and no-code constructs.

Top comments (0)