Introduction

The MISP platform is an open-source project to collect, store, distribute and share Indicators of Compromise (IoCs). The project is mostly written in PHP by more than 200 contributors and currently has more than 4,000 stars on GitHub.

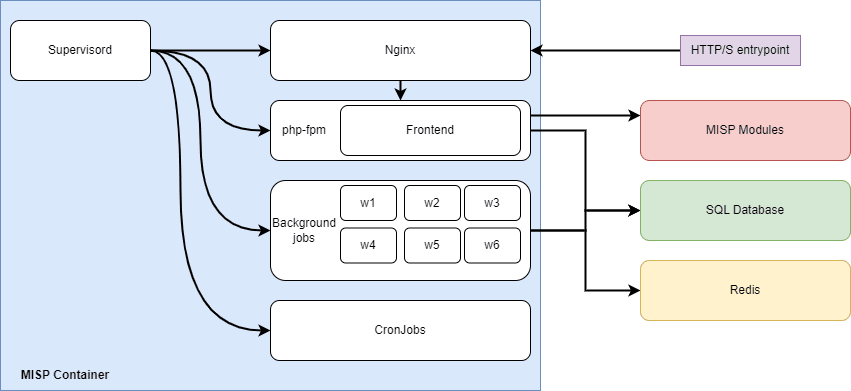

The MISP core is composed of:

- Frontend: a PHP-based application offering both a UI and an API

- Background jobs: wait for signals to download IoCs from feeds, index IoCs, etc.

- Cron jobs: trigger recurring tasks such as IoC downloads and updates of internal information

It also relies on external resources such as an SQL database, a Redis instance and an HTTP frontend proxying requests to PHP.

At KPMG-Egyde, we operate our own MISP platform and enrich its content based on our findings which we share with some of our customers. We wanted to provide a scalable, highly available and performant platform; this is why we decided to move the MISP from a traditional virtualized infrastructure to Kubernetes.

At the time of writing, there is no official guide on how to deploy and operate the MISP on Kubernetes. Container images and a few Kubernetes manifests are available on GitHub. Despite the great efforts made to deploy the MISP in containers, these solutions aren’t made to scale yet.

State of the art

Traditional deployments

The MISP can be deployed on traditional Linux servers running either on-premises or at cloud providers. The installation guide provides scripts to deploy the solution, and these scripts also install external services such as the database.

Cloud images

The misp-cloud project provides ready-to-use AWS AMIs containing the MISP platform as well as all external components in the same image. They may provide images for Azure and DigitalOcean in the future.

Containers

MISP-Docker

The official MISP project provides a containerized version of the MISP where all elements except the SQL database are included in a single container.

Coolacid’s MISP-Docker

The MISP-Docker project from Coolacid provides a containerized version of the MISP solution. This all-in-one solution includes the frontend, background jobs, cron jobs and an HTTP server (Nginx), all orchestrated by a process manager called Supervisor. External services such as the database and Redis aren’t part of the container but are required. We decided that this project was a very good starting point for scaling the MISP on Kubernetes.

Scaling the MISP

v1. Running on an EC2 instance

In the first version of the platform, we deployed the MISP on a traditional hardened EC2 instance, with the MySQL database and Redis running inside the same instance. Running everything in one VM caused scaling issues: to add instances, the database would have to be either moved outside the instance or synchronized between instances. Also, running an EC2 instance means treating it as a pet by applying the latest patches, etc.

v2. Running on EKS

To simplify operations, but also out of curiosity, we decided to migrate the traditional EC2-based MISP platform to Kubernetes, more specifically to the AWS service EKS. To fix the problems with scaling and synchronization, we decided to use AWS managed services such as RDS (MySQL) and ElastiCache (Redis).

After a few months of operating the MISP on Kubernetes, we struggled to scale the deployment because of the design of the container:

- Entrypoint: The startup script copies files and updates rights and permissions at each startup.

- PHP Sessions: By default, PHP is configured to store sessions locally, so sessions are lost when a request lands on another replica.

- Background jobs: Each replica runs the background jobs, so the UI cannot find the jobs and they appear to be down.

- Cron jobs: Jobs are scheduled at the same time on every replica, so all pods execute the same task at the same time and load external components.

- Lack of monitoring.

v3. Running on EKS

Using containers and Kubernetes also brings new concepts such as micro-services and single-process containers. We realized that the MISP is actually two programs written in a single code base (frontend/UI and background jobs) sharing the same configuration. In our quest to scale, we decided to split the container into smaller pieces. As mentioned above, this version of our implementation is based on Coolacid’s amazing work, which we forked.

Frontend

In this version, we kept the implementation of Nginx and PHP-FPM from Coolacid’s container and removed the workers and cron jobs from it. Nginx and PHP-FPM are started by Supervisor, and the configuration of the application is mounted into the container as static files (ConfigMap, Secret) instead of being set dynamically.
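A minimal sketch of how such a frontend Deployment might mount its configuration as static files. The object names (`misp-frontend`, `misp-config`, `misp-secrets`), the image, the replica count and the choice of which file goes into the ConfigMap or the Secret are illustrative assumptions, not the manifests we actually run.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: misp-frontend                # hypothetical name
spec:
  replicas: 3                        # assumption: the frontend no longer runs workers, so it can scale out
  selector:
    matchLabels:
      app: misp-frontend
  template:
    metadata:
      labels:
        app: misp-frontend
    spec:
      containers:
        - name: frontend
          image: registry.example.com/misp-frontend:latest   # placeholder image
          ports:
            - containerPort: 80
          volumeMounts:
            # Application settings provided as a static file from a ConfigMap
            - name: misp-config
              mountPath: /var/www/MISP/app/Config/config.php
              subPath: config.php
              readOnly: true
            # Database credentials provided as a static file from a Secret
            - name: misp-secrets
              mountPath: /var/www/MISP/app/Config/database.php
              subPath: database.php
              readOnly: true
      volumes:
        - name: misp-config
          configMap:
            name: misp-config        # hypothetical ConfigMap
        - name: misp-secrets
          secret:
            secretName: misp-secrets # hypothetical Secret
```

Note that files mounted with `subPath` are not refreshed when the ConfigMap or Secret changes, so a configuration change requires a new rollout of the pods.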

Background Jobs aka workers

Up to version 2.4.150

Up to version 2.4.150, the MISP background jobs (workers) were managed by the CakeResque library. When starting a worker, the MISP writes its PID into Redis. Based on that PID, the frontend shows the worker’s health by checking whether /proc/{PID} exists. When running multiple replicas, the last pod to start writes its PIDs to Redis, and these may differ from the PIDs in the other pods. Refreshing the frontend in the browser may then show a worker as unavailable because of inconsistent PIDs (for example, the latest registered PID of the prio process is 33, while the container serving the user’s request knows its prio process under PID 55).

Our first idea was to create two different Kubernetes deployments: a frontend with no workers that could scale and a single replica frontend which is also running the workers.

Using the Kubernetes Ingress object, we can split the traffic based on the path given in the URL: /servers/serverSettings/workers is forwarded to the single-replica frontend pod running the workers, and all other requests are forwarded to the highly available frontend.
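The path-based split described above can be sketched as the following Ingress. The host name, the Service names (`misp-frontend-workers`, `misp-frontend`) and the port are hypothetical; only the path comes from our setup.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: misp                                   # hypothetical name
spec:
  rules:
    - host: misp.example.com                   # placeholder host
      http:
        paths:
          # Worker management page: single-replica frontend that also runs the workers
          - path: /servers/serverSettings/workers
            pathType: Prefix
            backend:
              service:
                name: misp-frontend-workers    # hypothetical Service
                port:
                  number: 80
          # Everything else: horizontally scaled frontend
          - path: /
            pathType: Prefix
            backend:
              service:
                name: misp-frontend            # hypothetical Service
                port:
                  number: 80
```

When several paths match a request, the longest matching path takes precedence, so the workers page is routed to the single replica even though `/` also matches.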

Since background jobs are managed only by the platform administrators, it is acceptable for this part of the UI to be unavailable for a short period of time, whereas the rest of the platform must remain accessible to our end users and third-party applications.

From version 2.4.151

As stated in the MISP documentation, from version 2.4.151 the CakeResque library is deprecated and replaced by the SimpleBackgroundJobs feature, which relies on Supervisor.

Supervisor is configured to expose an API over HTTP on port 9001. Hence, the workers can be separated from the frontend, which can then be scaled easily.
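As an illustration, exposing Supervisor's HTTP interface from a separate workers pod could look like the sketch below. The `[inet_http_server]` section with `port`, `username` and `password` is standard Supervisor configuration; the object names, labels and credentials are placeholders, and in practice the credentials would belong in a Secret.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: misp-workers-supervisor      # hypothetical name
data:
  inet-http-server.conf: |
    ; Expose Supervisor's HTTP interface on all interfaces of the pod
    [inet_http_server]
    port = *:9001
    ; placeholder credentials
    username = supervisor
    password = change-me
---
apiVersion: v1
kind: Service
metadata:
  name: misp-workers                 # hypothetical name; the frontend would be pointed at this host
spec:
  selector:
    app: misp-workers                # hypothetical label on the workers pod
  ports:
    - name: supervisor
      port: 9001
      targetPort: 9001
```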

Wait, what about the single-process containers and micro-services mentioned earlier? At the time of writing, the MISP configuration only allows a single Supervisor endpoint to be polled, not one endpoint per worker.

Yes, but … the frontend/UI still tries to check the health of each process by looking at /proc/{PID}, as in previous versions. As a result, it shows that the process may have started but that it could not check whether it is alive. An issue was created and we are waiting for the patch to be integrated in a future version.

CronJobs

The MISP relies on a few cron jobs to trigger tasks such as downloading new IoCs from external sources or indexing content. When running multiple replicas of the same pod, every replica schedules the same jobs at the same time. For example, the CacheFeed task runs every day at 2:20 am on every replica, degrading the performance of the whole application while it runs.

Thanks to the Kubernetes CronJob object, each job runs only once per schedule, independently of the number of replicas. All CronJobs use the same image, but the command parameter is specific to each job.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cache-feeds
  ...
spec:
  ...
  jobTemplate:
    ...
    spec:
      ...
        spec:
          containers:
            - command:
                - sudo
                - -u
                - www-data
                - /var/www/MISP/app/Console/cake
                - Server
                - cacheFeed
                - "2"
                - all
              ...
```
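For readers who want a complete starting point, a manifest of this shape could be filled in as sketched below. The schedule expresses the daily 2:20 am run mentioned earlier and the command is the one shown above; `concurrencyPolicy`, `restartPolicy`, the container name and the image are assumptions for illustration, not values taken from our chart.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: cache-feeds
spec:
  schedule: "20 2 * * *"             # every day at 02:20
  concurrencyPolicy: Forbid          # assumption: skip a run if the previous one is still active
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure   # assumption; Jobs require OnFailure or Never
          containers:
            - name: cache-feeds      # hypothetical container name
              image: registry.example.com/misp:latest   # placeholder; the same image is reused by every CronJob
              command:
                - sudo
                - -u
                - www-data
                - /var/www/MISP/app/Console/cake
                - Server
                - cacheFeed
                - "2"
                - all
```

Unless a time zone is set explicitly, the schedule is interpreted in the time zone of the Kubernetes control plane, which is often UTC on managed clusters.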

Other improvements across all components

- Entrypoint: All file operations are done at the Dockerfile level (at image build time) instead of in the entrypoint script, which speeds up the startup phase.

- MISP configuration: The configuration of the software was previously done dynamically with the CakePHP CLI tool and sed commands based on environment variables. We replaced this process by mounting static configuration files as Kubernetes ConfigMap objects.

- PHP Sessions: PHP is configured to store sessions in Redis, as it’s already used by the core of the MISP.

- Helm chart: All the Kubernetes manifests are packaged into a Helm chart.

- Blue/Green deployment: Shortens the downtime during updates of the underlying infrastructure or of the application.
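As an example of the PHP Sessions item, the session handler can be switched to Redis with a small PHP configuration file shipped as a ConfigMap. This is a sketch that assumes the phpredis extension is installed in the image; the ConfigMap name, the file name and the Redis endpoint are placeholders.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: misp-php-sessions            # hypothetical name
data:
  # Meant to be mounted into PHP's conf.d directory; the exact path depends on the image
  99-redis-sessions.ini: |
    ; Store sessions in Redis instead of local files so that any replica can serve any user
    session.save_handler = redis
    ; placeholder endpoint, for example an ElastiCache address
    session.save_path = "tcp://redis.example.internal:6379"
```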

Next steps

We’re already working on a v4, in which we’ll focus on the security aspects as well as on aligning with Kubernetes and Docker best practices.

Conclusion

We read quite often from Kubernetes providers that moving monoliths to containers is easy, but they tend to omit that scaling isn’t an easy task. Also, monitoring, backups and all other known challenges on traditional VMs still exist with containers and can be even more complex at scale.

Throughout the project, we learned a lot about how the MISP works by testing numerous parameters, but also by reading a lot of code to better understand what to scale and how.
