In today's rapidly growing world, every enterprise faces the challenge of matching its pace with customer expectations and software delivery speed. Continuous Integration/Continuous Delivery (CI/CD) is a part of DevOps that can help to achieve rapid software changes while maintaining system stability and security. In this post you will understand how CI/CD works on AWS.

This post is an excerpt taken from the book by Packt Publishing titled ‘AWS Certified Developer - Associate Guide - Second Edition’ and written by Vipul Tankariya and Bhavin Parmar. This book will take you through AWS DynamoDB A NoSQL Database Service, Amazon Simple Queue Service (SQS) and CloudFormation Overview, and much more.

There are various individual AWS services for DevOps, such as CodeCommit, CodeBuild, CodeDeploy, and CodePipeline. CodeCommit acts as a source code repository. CodeBuild is a continuous integration service that compiles source code, runs tests, and produces software packages that are ready to deploy. CodeDeploy automates software deployment to various AWS compute services and on-premises servers, and CodePipeline automates the build, test, and deploy phases of the release process.

When an enterprise adopts an Agile process and a DevOps culture, manually moving code from development to production is time consuming and error prone. If any step fails, undoing the change and restoring the previous stable version also takes time, and an error in the production environment may directly affect the business's availability. CI/CD, by contrast, works like an assembly line. The heart of CI/CD is designing the pipeline, which on AWS is done with CodePipeline.

The pipeline manages every step, from the moment new code is merged from a development branch into the master branch (which usually holds the final code for use in the business) to the last step of deploying the compiled and tested software to the production environment. CI/CD stops bad code from reaching production by embedding all the necessary tests, such as unit tests, integration tests, and load tests, in the pipeline.

It is a best practice to run these tests in a test environment that is identical to the production environment, so that the results reflect how the code will behave in production. Continuous delivery and continuous deployment are the two options for the final step of a CI/CD practice: application changes run through the CI/CD pipeline, and passing builds are either kept ready for release or deployed directly to the production environment. Let's look at some basic terminology with the help of the following screenshot:

Basics of Continuous Integration

When CI is implemented, each developer in a team integrates their code into the main branch of a common repository several times a day. AWS CodeCommit or third-party tools such as GitHub and GitLab can be used as the common source code repository. In traditional software development, work took place in isolation and was submitted at the end of the cycle. The main reason for committing code frequently to the main branch of a common repository is to reduce integration costs. Since developers can identify conflicts between the old and the new code at an early stage, these conflicts are easier and less costly to resolve.

Any time developers commit new code to the common branch of the common repository, there is no practical manual way to make sure that the new code is of good quality, doesn't introduce bugs, and doesn't break the existing code. To achieve this, it is highly recommended that you perform the following automated tasks every time a new change is committed to the repository:

- Execute automatic code quality scans to make sure the new code adheres to enterprise coding standards.

- Build the code and run automated tests.
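The two tasks above can be sketched as a small script that a CI service runs on every commit. This is only a minimal sketch under stated assumptions: the `run_ci_checks` helper and the placeholder commands in `EXAMPLE_STEPS` are hypothetical, and a real project would substitute its own code quality scanner, build, and test commands.

```python
import subprocess
import sys
from typing import List, Sequence


def run_ci_checks(steps: Sequence[Sequence[str]]) -> bool:
    """Run each command in order and stop at the first one that fails."""
    for command in steps:
        result = subprocess.run(command)
        if result.returncode != 0:
            # A non-zero exit code means the step failed, so later steps are not run.
            print(f"CI step failed: {' '.join(command)}")
            return False
    return True


# Hypothetical placeholder steps; replace them with real scan, build, and test commands.
EXAMPLE_STEPS: List[List[str]] = [
    [sys.executable, "-c", "print('code quality scan')"],
    [sys.executable, "-c", "print('build and automated tests')"],
]
```

Calling `run_ci_checks(EXAMPLE_STEPS)` returns `True` only when every step exits with code 0, which is the signal a CI service would typically use to mark the commit as passing.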

The goal of CI is to make integrating new code simple and repeatable, which reduces overall build costs and identifies defects at an early stage. A successful CI implementation changes how the enterprise works and acts as the base for CD. CI refers to the build and unit testing stages of the software release process: every new commit triggers an automated build and test.

Basics of Continuous Delivery

CD is the next phase after CI, and it automates pushing the application to the various environments, from development to test and pre-production. It is also possible to have more than one development and testing environment, where application changes are staged for various individual tests and reviews. Even if code has passed all the tests, CD doesn't automatically push the new change to production. Instead, it makes sure that the code base is deployable and that builds are ready to deploy to the production environment at any time.

Teams can be confident that they can release the software whenever they need to without complex coordination or late-stage testing. AWS CodePipeline can be used to build pipelines, and AWS CodeDeploy can be used to deploy newly built code to the various AWS compute services or to various environments from development to production.

In general, with CD, new features are deployed to production on a release schedule (daily, weekly, fortnightly, or monthly) set by the business requirements. If the business permits, develop software in small batches, as it is easier to troubleshoot when there is a problem.

Continuous deployment: An extension of CD

Continuous deployment is an extension of continuous delivery. It automatically deploys to the production environment every build that has passed the full life cycle. This method doesn't wait for human intervention to decide whether to deploy a new build to the production environment; as soon as a build has passed the deployment pipeline, it is deployed to production. The following diagram helps us understand this process:

The preceding diagram helps us understand the difference between continuous delivery and continuous deployment. Continuous delivery requires human intervention for the final deployment of newly built software to production. This may help business people decide when to introduce a new change to the market. Continuous deployment, on the other hand, doesn't require any human intervention: newly built software that has passed all the tests in the deployment pipeline is deployed directly to production.
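The difference between the two models comes down to a single decision at the end of the pipeline, which can be sketched as follows. The `ReleaseMode` enum and the `should_deploy_to_production` function are hypothetical names introduced for illustration only and are not part of any AWS API.

```python
from enum import Enum


class ReleaseMode(Enum):
    CONTINUOUS_DELIVERY = "continuous delivery"
    CONTINUOUS_DEPLOYMENT = "continuous deployment"


def should_deploy_to_production(
    mode: ReleaseMode, tests_passed: bool, approved_by_human: bool = False
) -> bool:
    """Decide whether a build may go to production under the given model."""
    if not tests_passed:
        # Neither model releases a build that failed the pipeline's tests.
        return False
    if mode is ReleaseMode.CONTINUOUS_DEPLOYMENT:
        # Continuous deployment releases every passing build automatically.
        return True
    # Continuous delivery keeps the build ready but waits for a human decision.
    return approved_by_human
```

Under continuous delivery, a passing build without approval stays deployable but unreleased. Under continuous deployment, the `approved_by_human` argument is ignored.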

Based on the business needs, either of these models can be implemented in DevOps. It is important to understand that at least continuous delivery has to be configured to successfully implement DevOps.

AWS tools for CI/CD

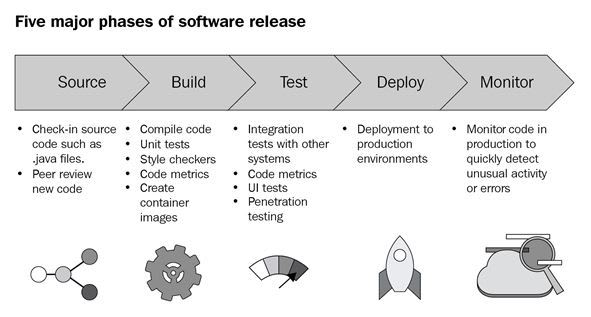

At a high level, the software development life cycle can be broken down into five steps: source, build, test, deploy, and monitor, as shown in the following screenshot:

We will go through each of these steps in detail:

- Source: At this first stage of the software development life cycle, developers commit their source code many times a day to a common branch in the common source code repository to identify integration errors at an early stage. Once a feature, bug fix, or hotfix is complete on the relevant branch, it is merged into the master branch so that it can be released to production after passing through the various environments, such as development and test, and passing all the tests specified in the pipeline.

- Build: Once development from the earlier stage is peer reviewed and merged into the master branch, the merge usually triggers the build process. The source code is fetched from the specified source code repository and branch, and the code quality is checked against enterprise standards. Once the code quality is accepted, the code is ready to compile. If the source code is written in a compiled language such as Java, C/C++, or a .NET language, it is converted to binary. This binary output is called an artifact and is stored in the artifact repository. Docker container files are built into Docker container images and stored in the container image repository.

- Test: At this stage, artifacts are fetched from the artifacts repositories and deployed to one or more test environments to carry out one or more tests, such as load testing, UI testing, penetration testing, and so on. In general, it is recommended to have a test environment that's identical to the production environment in order to carry out precise tests.

- Deploy: Once the source code has successfully passed through all the build and test phases that are defined in the pipeline, it is ready to be deployed into production. In the case of continuous delivery, the build waits for human intervention before it is deployed into the production environment on the predefined release date. If continuous deployment has been configured, the code is deployed to production automatically, regardless of whether an output artifact of one action is consumed in the next stage or in some later stage.

- Monitor: Once the revised software has been deployed to production, it is time to monitor the application for errors and the infrastructure resources for resource consumption.
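The five steps above behave like a chain in which each stage runs only if the previous one succeeded. The following minimal sketch models that behavior. The `STAGE_ORDER` list mirrors the steps named in this post, while `run_pipeline` and the stage callables are hypothetical stand-ins for real work such as fetching source, compiling, or deploying.

```python
from typing import Callable, Dict, List, Tuple

# Stage names follow the five steps described above.
STAGE_ORDER = ["source", "build", "test", "deploy", "monitor"]


def run_pipeline(stages: Dict[str, Callable[[], bool]]) -> List[Tuple[str, str]]:
    """Run the stages in order; once one fails, mark the remaining ones as skipped."""
    results: List[Tuple[str, str]] = []
    failed = False
    for name in STAGE_ORDER:
        if failed:
            # A failed stage stops the release from moving any further.
            results.append((name, "skipped"))
            continue
        succeeded = stages[name]()
        results.append((name, "succeeded" if succeeded else "failed"))
        failed = not succeeded
    return results
```

If the callable registered for `test` returns `False`, the `deploy` and `monitor` stages are reported as skipped, which is how a pipeline keeps a failing build away from production.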

Now let's see the same software development life cycle with AWS services:

Looking at the preceding diagram, AWS services can be explained as follows:

- AWS CodeCommit: This is a fully managed version control source code repository to host private Git repositories. It is well suited for most of the version control needs in enterprises. It doesn't have any limitations on file size, file type, or repository size.

- AWS CodeBuild: This is a fully managed continuous integration service that compiles source code, runs tests, and produces software packages, called artifacts, that are ready to deploy to production. It scales automatically to run multiple builds concurrently. Each commit or merge to the master branch or another configured branch triggers an individual build process.

- AWS CodeBuild + third-party tools: AWS CodeBuild can be used with third-party tools to satisfy custom build and test requirements.

- AWS CodeDeploy: This is a fully managed deployment service that automates software deployment to AWS compute services such as Amazon EC2, AWS Fargate, and AWS Lambda, as well as to on-premises servers. This service eases the frequent release of new application features by managing the complexity of updating applications. It eliminates the time-consuming and error-prone process of updating applications manually. It works seamlessly with various configuration management, source control, CI, continuous delivery, and continuous deployment tools.

- AWS CodeStar: This combines various DevOps offerings from AWS under one roof, such as AWS CodeCommit, AWS CodeDeploy, AWS CodeBuild, and AWS CodePipeline. Each of these AWS services can be used individually from its own web console. But rather than jumping from one web console to another and dealing with individual resources in each of these services, AWS CodeStar provides a seamless experience by combining all the DevOps services in a single place as a project.

CodeStar provides a ready-made, GUI-based, step-by-step template to quickly and easily set up a DevOps toolchain for a new project. It provides sample templates for a web application, web service, Alexa skill, static website, and AWS Config rule using C#, Go, HTML 5, Java, Node.js, PHP, Python, or Ruby, to deploy on AWS Elastic Beanstalk, Amazon EC2, or AWS Lambda.

- AWS X-Ray: This service provides a platform to view, filter, and gain insights into the collected data to identify issues and optimization opportunities. AWS X-Ray helps developers to analyze and debug applications using distributed tracing. In a nutshell, this service helps us to understand the root cause of issues, errors, and bottlenecks in the application. The following screenshot is an example of application data collection and reporting:

- Amazon CloudWatch: This is a service that monitors AWS resources and customer applications running on AWS in real time. It is automatically configured to provide some default metrics for each AWS service. These metrics vary for each AWS service and resource type. It collects monitoring and operational data in the form of logs, metrics, and events.

- AWS Cloud9: This is a rich cloud-based Integrated Development Environment (IDE) for writing, running, and debugging code in the web browser. It also has a built-in terminal preconfigured with the AWS CLI. It saves you from installing and configuring an IDE on a developer's local machine. It also frees developers to use the same IDE from any machine or device through a web browser. It is secure and also has collaboration features.
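To see how CodeCommit, CodeBuild, and CodeDeploy fit together in CodePipeline, the following sketch assembles a three-stage pipeline definition as a plain Python dictionary. It is an illustration under stated assumptions, not the book's implementation: the `build_pipeline_definition` helper and every resource name passed to it are hypothetical, and the field names follow the CodePipeline pipeline structure as I understand it, so verify them against the AWS reference before use. No AWS call is made here.

```python
import json
from typing import Any, Dict, Sequence


def _action(name: str, category: str, provider: str, configuration: Dict[str, str],
            inputs: Sequence[str] = (), outputs: Sequence[str] = ()) -> Dict[str, Any]:
    """Build one pipeline action that uses an AWS-owned action type."""
    return {
        "name": name,
        "actionTypeId": {"category": category, "owner": "AWS",
                         "provider": provider, "version": "1"},
        "configuration": configuration,
        "inputArtifacts": [{"name": artifact} for artifact in inputs],
        "outputArtifacts": [{"name": artifact} for artifact in outputs],
    }


def build_pipeline_definition(name: str, role_arn: str, artifact_bucket: str,
                              repository: str, branch: str, build_project: str,
                              application: str, deployment_group: str) -> Dict[str, Any]:
    """Assemble a Source -> Build -> Deploy pipeline definition (all names are caller supplied)."""
    return {"pipeline": {
        "name": name,
        "roleArn": role_arn,
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [
            {"name": "Source", "actions": [_action(
                "Source", "Source", "CodeCommit",
                {"RepositoryName": repository, "BranchName": branch},
                outputs=["SourceOutput"])]},
            {"name": "Build", "actions": [_action(
                "Build", "Build", "CodeBuild",
                {"ProjectName": build_project},
                inputs=["SourceOutput"], outputs=["BuildOutput"])]},
            {"name": "Deploy", "actions": [_action(
                "Deploy", "Deploy", "CodeDeploy",
                {"ApplicationName": application,
                 "DeploymentGroupName": deployment_group},
                inputs=["BuildOutput"])]},
        ],
    }}
```

The result of `json.dumps(definition, indent=2)` could be saved to a file and, assuming the referenced role, bucket, repository, and projects already exist, passed to the AWS CLI with `aws codepipeline create-pipeline --cli-input-json file://pipeline.json`. Note how the output artifact of each stage is named as the input artifact of the next one, which is how work is handed from stage to stage.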

To summarize, CI/CD is a mechanism that optimizes and automates the development life cycle. This post introduced you to CI/CD on AWS and described how you can use it with your AWS workloads. To further understand Elastic Beanstalk and AWS Lambda, you can read the book AWS Certified Developer - Associate Guide - Second Edition by Packt Publishing.
