Authored by Conductor Community Member Ankit Agarwal

OpenMF’s Payment Hub is built on a microservice architecture. It uses an orchestration layer, based on Camunda Zeebe, to define workflows and coordinate communication between microservices.

Because Camunda Zeebe is not Digital Public Goods (DPG) compliant, we wanted to replace the orchestration layer with a DPG-compliant platform. We ended up using Conductor OSS as a parallel option that gives us a DPG-compliant version.

Payment Hub

Payment Hub has a production-ready architecture supporting deployments of real-time payments and switches. It is an enterprise-grade tool with an extensible architecture for connecting and integrating with multiple third-party payment providers.

It is built on a microservice architecture in which an orchestration layer defines the workflows and coordinates communication between microservices. Payment Hub currently uses Camunda Zeebe as the orchestration tool.

POC

The aim of the POC was to use Conductor OSS as the orchestration tool for the Payment Hub system in order to produce a DPG-compliant version.

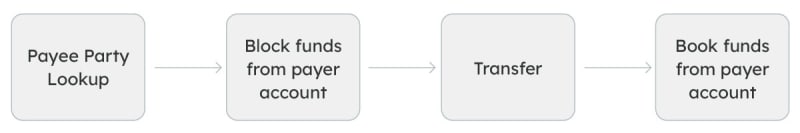

The goal of the POC is to transfer money from the payer account to the payee account. The scope of the POC is to mock the payee side (i.e., the deposit) and execute the payer side (i.e., the withdrawal).

So the flow is as follows:

The POC included the following steps:

- Set up Conductor OSS

- Design Workflow

- Create Exporter Module

- Write Importer Module

- Reuse the Operations app of Payment Hub for MySQL

- Channel Connector microservice (only modifying the orchestrator parts)

- AMS Mifos Connector microservice (only modifying the orchestrator parts)

The idea was to change only what relates to the orchestration tool and leave the other logical components untouched.

Architecture Design of Payment Hub with Conductor OSS as Orchestrator

Here is the system design for the POC:

Conductor Setup

Our setup required the following components:

- Conductor Server

- Conductor UI (optional, but we found it helpful for debugging)

- Database - PostgreSQL

- Indexer - Elasticsearch

- Cache - Redis by default; we used PostgreSQL instead, although we still deployed Redis

We needed to deploy the ph-ee-dpg application to Kubernetes in the AWS cloud environment. Since we use Helm to manage our cluster deployments, we also had to create a bare-bones Helm chart for this system.

To set up:

    helm dep up netflix-conductor
    helm upgrade -f netflix-conductor/values.yaml {release-name} netflix-conductor/ --install --namespace {namespace-name}

Exporter Module

In this module, we created a custom workflow status listener and a custom task status listener, which implement Conductor's WorkflowStatusListener and TaskStatusListener interfaces respectively.

These listeners receive the workflow and task payload at different status changes. They simply put these on Kafka.
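As a rough illustration of what such a listener does, here is a minimal sketch in plain Java. It is not the POC's code: `WorkflowEvents` is a simplified stand-in for Conductor's `WorkflowStatusListener` (which receives a workflow model object rather than a string), the `BiConsumer` stands in for a Kafka producer, and the topic name used in the usage note is made up.

```java
import java.util.function.BiConsumer;

/** Simplified stand-in for Conductor's WorkflowStatusListener (assumption: payload already serialized to JSON). */
interface WorkflowEvents {
    void onWorkflowCompleted(String workflowJson);
    void onWorkflowTerminated(String workflowJson);
}

/** Forwards every status change to one topic; the BiConsumer stands in for a Kafka producer's send(topic, payload). */
final class ExportingWorkflowListener implements WorkflowEvents {
    private final String topic;
    private final BiConsumer<String, String> publisher;

    ExportingWorkflowListener(String topic, BiConsumer<String, String> publisher) {
        this.topic = topic;
        this.publisher = publisher;
    }

    @Override
    public void onWorkflowCompleted(String workflowJson) {
        publisher.accept(topic, workflowJson);
    }

    @Override
    public void onWorkflowTerminated(String workflowJson) {
        publisher.accept(topic, workflowJson);
    }
}
```

Constructing it as `new ExportingWorkflowListener("workflow-events", producer::send)` (with a hypothetical `producer`) keeps the listener free of any decision logic: it only relays what Conductor reports, and the importer decides what to store.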

Then, we added a configuration file that ensures that this particular implementation of listeners is used instead of default stubs if the property conductor.workflow-status-listener.type is set to custom.

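    import org.springframework.boot.autoconfigure.condition.ConditionalOnProperty;
    import org.springframework.context.annotation.Bean;
    import org.springframework.context.annotation.Configuration;

    // WorkflowStatusListener and TaskStatusListener are Conductor's listener interfaces.
    @Configuration
    public class CustomConfiguration {

        // Registered only when conductor.workflow-status-listener.type=custom
        @ConditionalOnProperty(name = "conductor.workflow-status-listener.type", havingValue = "custom")
        @Bean
        public WorkflowStatusListener workflowStatusListener() {
            return new CustomWorkflowStatusListener();
        }

        // Registered only when conductor.task-status-listener.type=custom
        @ConditionalOnProperty(name = "conductor.task-status-listener.type", havingValue = "custom")
        @Bean
        public TaskStatusListener taskStatusListener() {
            return new CustomTaskStatusListener();
        }
    }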

And then we added the following properties to our Helm chart:

    conductor.workflow-status-listener.type=custom
    conductor.task-status-listener.type=custom

This exporter module is added as a dependency of the Conductor server.

Importer Module

The importer module is integral to the Payment Hub (PH) solution. It consumes the payloads from Kafka and parses them so that they are ready to be stored in the database.

- Input parameters are extracted from the task status listener payload when the status is scheduled.

- Output parameters are fetched from the payload when the status is marked as completed.

A JSON parser and in-flight transaction managers convert the variables (parameters) into meaningful values to be saved in the database.

The database has:

- A variable table, to save all unique sets of parameters (input/output) throughout the workflow.

- A task table, to save all the task details executed in the flow.

- A transfer table that is specific to the business requirement, which is populated through the workflow execution.
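The status-based extraction rule described in this section could look roughly like the following sketch. It assumes the Kafka payload has already been parsed into a `Map` and keeps Conductor's task field names (`status`, `inputData`, `outputData`); the class name is hypothetical and this is not the importer's actual code.

```java
import java.util.Map;

final class TaskVariableExtractor {
    private TaskVariableExtractor() {}

    /**
     * Returns the parameters worth persisting for a task event:
     * inputs when the task is SCHEDULED, outputs when it is COMPLETED,
     * and an empty map for any other status.
     */
    @SuppressWarnings("unchecked")
    static Map<String, Object> extract(Map<String, Object> taskEvent) {
        Object status = taskEvent.get("status");
        Object params;
        if ("SCHEDULED".equals(status)) {
            params = taskEvent.get("inputData");
        } else if ("COMPLETED".equals(status)) {
            params = taskEvent.get("outputData");
        } else {
            return Map.of();
        }
        if (params instanceof Map) {
            return (Map<String, Object>) params;
        }
        return Map.of();
    }
}
```

Each entry of the returned map would then become a candidate row for the variable table, while the event itself feeds the task table.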

Operations App

We set up the database with initial scripts and exposed APIs to fetch operations data. This app was reused from the existing Payment Hub without any significant modification.

Channel Connector

This is the microservice that exposes the transfer API; calling that API eventually starts the workflow.

We created a generic class that can start any workflow. It only takes a workflowId and the input parameters.

This abstracts the Conductor-specific part away, so other developers can start workflows without dealing with Conductor directly.
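To give an idea of what such a generic starter can look like, here is a minimal sketch that calls Conductor's REST endpoint for starting a workflow (`POST /api/workflow/{name}`) using only the JDK's HTTP client. This is a swapped-in technique for illustration: the POC's class may well use a Conductor client library instead. The class name and base URL are assumptions, and the input is passed as an already-serialized JSON string to avoid pulling in a JSON library.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;

final class WorkflowStarter {
    private final String baseUrl; // assumed form: "http://<conductor-host>:8080/api"
    private final HttpClient http = HttpClient.newHttpClient();

    WorkflowStarter(String baseUrl) {
        this.baseUrl = baseUrl;
    }

    /** Builds the start request: POST {baseUrl}/workflow/{name} with the workflow input as the JSON body. */
    HttpRequest buildRequest(String workflowName, String inputJson) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/workflow/" + workflowName))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(inputJson))
                .build();
    }

    /** Sends the request and returns the response body, which carries the ID of the started workflow instance. */
    String start(String workflowName, String inputJson) throws IOException, InterruptedException {
        HttpResponse<String> response =
                http.send(buildRequest(workflowName, inputJson), HttpResponse.BodyHandlers.ofString());
        if (response.statusCode() / 100 != 2) {
            throw new IOException("Conductor returned HTTP " + response.statusCode());
        }
        return response.body();
    }
}
```

A caller in the transfer API would only need something like `starter.start("transfer", inputJson)`, where `"transfer"` is a hypothetical workflow name; nothing Conductor-specific leaks into the business code.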

AMS Mifos Connector

This contains the implementation of the simple tasks in the workflow.

Workflow

We used the Orkes Playground to design the workflow, since this is easier there than in the default Conductor UI. We then used the generated JSON in Conductor OSS.

Here’s what the workflow looks like:

To summarize the entire execution flow:

- A request is sent to the transfer API in the channel connector. Channel Connector processes the request and starts the Conductor workflow.

- A variable task (party lookup) sets the variable partyLookupFailed to false. This mocks the party lookup step.

- Then, a switch task terminates the flow if the party lookup fails (which cannot happen here).

- A simple task (block funds) is implemented in another microservice, the AMS Mifos Connector. It blocks the money in the AMS (Account Management Service).

- Then, variables are used again to mock the transfer.

- Then, the book funds task, also implemented in the AMS Mifos Connector, books the money from the payer account.

- Switch tasks terminate the flow if the output of the block funds task has transferPrepareFailed set to true or the output of the book funds task has transferCreateFailed set to true.

- Otherwise, the happy flow moves to completion.
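The branching in the steps above can be summarized in a few lines of plain Java. This is only an illustration of the decision logic, not how Conductor evaluates the workflow (that is driven by the workflow JSON); the class and method names are hypothetical, while the flag names come from the workflow described here and `COMPLETED`/`TERMINATED` are Conductor workflow statuses.

```java
import java.util.Map;

final class TransferFlowSketch {
    private TransferFlowSketch() {}

    /**
     * Mirrors the switch tasks: any failed flag terminates the flow,
     * otherwise the workflow runs to completion.
     */
    static String run(boolean partyLookupFailed,
                      Map<String, Object> blockFundsOutput,
                      Map<String, Object> bookFundsOutput) {
        if (partyLookupFailed) {
            return "TERMINATED"; // switch after the (mocked) party lookup
        }
        if (Boolean.TRUE.equals(blockFundsOutput.get("transferPrepareFailed"))) {
            return "TERMINATED"; // switch on the block funds output
        }
        if (Boolean.TRUE.equals(bookFundsOutput.get("transferCreateFailed"))) {
            return "TERMINATED"; // switch on the book funds output
        }
        return "COMPLETED"; // happy flow
    }
}
```

Since the POC hard-codes partyLookupFailed to false, only the two AMS-backed tasks can actually terminate the flow.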

We marked the POC as successful after an integration test in which the workflow status was asserted to be completed and the payer's account balance was reduced by the transfer amount.

Check out the integration test here.

In a nutshell, Conductor OSS made our orchestration layer easier to work with and helped us deliver a DPG-compliant Payment Hub.
