I will not explain how transformer models work here. Instead, I will show how they apply to multilingual use cases by training a multilingual transformer on a Swahili dataset. You can access the data from here to understand the problem it was collected to solve.

I assume that the reader has prior knowledge of classical Machine learning and Deep learning.

What are transformers?

Transformers are deep learning models, usually applied through transfer learning, that are pretrained on large datasets to handle different use cases in Natural Language Processing, such as text classification, question answering, machine translation, speech recognition, and so on.

According to Wikipedia, a transformer is a deep learning model that adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. Transformers are used primarily in the fields of Natural Language Processing (NLP) and Computer Vision (CV).

Training deep learning models on small datasets is likely to lead to overfitting. Deep learning models learn complex patterns using a large number of parameters, so they need large datasets to generalize well.

The advantage of transformers is that they have already been trained on a large corpus of data, such as Wikipedia and various book collections, which makes things smoother when you want to adapt the model to a new set of data for your specific problem. Below are some of the techniques I know for working with datasets that are not in English, such as Arabic, Swahili, or German sentiment data.

- Translate your data (data in your own language) into English, then solve the challenge using English-trained models. Whether this works depends on the nature of the problem you're trying to solve and on whether you have a model that performs the translation well.

- Augment your training data (data in your own language). Take a large English dataset, translate it into your own language so that it matches the challenge you're solving, combine it with the small dataset in your own language, and then fine-tune the transformer model on the result.

- Retrain (fine-tune) a large pretrained transformer model on data in your own language. This is known as transfer learning in Natural Language Processing, and it is the technique I will be working with today.

Let's dive into the main topic of this article: training a transformer model for Swahili news classification. Since transformers are large, we need a wrapper to keep the task simple. If you are comfortable with PyTorch, you can use PyTorch Lightning, a wrapper for high-performance AI research, but today let's go with ktrain, a Python library built on TensorFlow Keras.

ktrain is a lightweight wrapper for the deep learning library TensorFlow Keras (and other libraries) to help build, train, and deploy neural networks and other machine learning models. Inspired by ML framework extensions like fastai and ludwig, ktrain is designed to make deep learning and AI more accessible and easier to apply for both newcomers and experienced practitioners. With only a few lines of code, ktrain allows you to build, train, and deploy such models easily and quickly.

If you want to learn more about ktrain, here you go.

I recommend using Google Colab or a Kaggle kernel. The computational power these platforms provide keeps the task simple and makes things run smoothly.

The first task is to update pip and install ktrain in your working environment. This can be done by running the commands below.

# update pip package & install ktrain

!pip install -U pip

!pip install ktrain

Then import all the required libraries for preprocessing text data and other computational purposes.

import pandas as pd

import numpy as np

import seaborn as sns

import matplotlib.pyplot as plt

import os

from nltk import word_tokenize

import string

import re

import warnings

warnings.filterwarnings("ignore")

It's time to load our dataset and inspect what it looks like and which features it contains.

# loading datasets from data dir

df = pd.read_csv("data/Train.csv")

# Total Records

print("Total Records: ", df.shape[0])

# preview data from top

df.head()

data preview in first 5 rows

You can see that the dataset has 3 columns: ID, a unique identifier for each news item; content, which contains the news text; and category, which contains the label for each news item. Next, let's visualize the target column to understand how the news is distributed across classes.

# Let's visualize the label distribution using seaborn

plt.figure(figsize=(15,7))

sns.countplot(x='category',data=df)

plt.title("DISTRIBUTION OF LABELS")

plt.show()

Labels distribution

You can see that the dataset contains 5 classes (labels) and is not well balanced: the majority of the news collected is kitaifa, while kimataifa and Burudani are in the minority.
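To put numbers on the imbalance that the plot shows, the share of each class can be computed directly. The sketch below uses only the standard library and a small made-up list of labels (the counts are illustrative and are not taken from Train.csv); `label_shares` is a hypothetical helper name.

```python
from collections import Counter

def label_shares(labels):
    """Return {label: fraction of examples}, most frequent label first."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.most_common()}

# Toy labels for illustration only; replace with df['category'] from the article.
toy_labels = ["kitaifa"] * 6 + ["michezo"] * 3 + ["biashara"] * 2 + ["kimataifa"]
shares = label_shares(toy_labels)
for label, share in shares.items():
    print(f"{label}: {share:.1%}")
```

On the real data, `df['category'].value_counts(normalize=True)` gives the same information in one call.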

Next, we can clean the text before feeding it to our transformer. Typical steps include removing punctuation, removing digits, converting to lower case, removing unnecessary white space, removing emojis, removing stopwords, and tokenization. I created the clean_text function to perform such tasks.

# function to clean text

def clean_text(sentence):

    '''

    function to clean content column, make it ready for transformation and modeling

    '''

    sentence = sentence.lower() # convert text to lower-case

    sentence = re.sub('‘', '', sentence) # remove the character ‘, which appears frequently

    sentence = re.sub('[‘’“”…,]', '', sentence) # remove punctuation

    sentence = re.sub('[()]', '', sentence) # remove parentheses

    sentence = re.sub("[^a-zA-Z]", " ", sentence) # replace digits and any other non-letter characters with spaces

    sentence = word_tokenize(sentence) # split into word tokens (requires NLTK's Punkt tokenizer data); joining them below collapses extra whitespace

    return ' '.join(sentence)
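To see what this cleaning does to a piece of text, here is a minimal sketch that reproduces the same steps with the standard library only. `clean_text_simple` is a hypothetical name, and it uses `str.split` instead of NLTK's `word_tokenize`, so treat it as an approximation of the function above rather than a drop-in copy. The sample sentence is made up for illustration.

```python
import re

def clean_text_simple(sentence):
    """Lower-case, keep ASCII letters only, and collapse repeated whitespace."""
    sentence = sentence.lower()
    # Replace every character that is not a-z with a space
    # (this covers digits, punctuation, quotes, and parentheses in one pass).
    sentence = re.sub(r"[^a-z]", " ", sentence)
    # str.split() with no arguments splits on any run of whitespace.
    return " ".join(sentence.split())

sample = "Simba SC (2020), ‘timu’ bora!"
print(clean_text_simple(sample))  # simba sc timu bora
```

Note that this kind of cleaning also splits words that contain an apostrophe, which may or may not be what you want for Swahili text.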

Now it is time to apply our clean_text function to the content column.

# Applying our clean_text function on contents

df['content'] = df['content'].apply(clean_text)

df.head()

Now you can notice the difference in the content of our datasets.

Next, let's split the dataset into training and validation sets. The training set will be used to train our model, and the validation set will be used to check its performance on new data.

df = df[['category', 'content']]

SEED = 2020

df_train = df.sample(frac=0.85, random_state=SEED)

df_test = df.drop(df_train.index)

len(df_train), len(df_test)

85% of the dataset will be used to train the model, and 15% will be used to validate it, so we can evaluate its performance and see whether it generalizes well. Don't forget to set a seed value so that you get predictable, repeatable results every time. If we do not set a seed, we get a different random split on every run.
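The effect of the seed can be checked with a small standalone sketch. It does not use pandas; `split_indices` is a hypothetical helper that shuffles row indices with a seeded generator, which is the same idea as `df.sample(frac=0.85, random_state=SEED)` followed by `df.drop(...)`.

```python
import random

def split_indices(n_rows, train_frac, seed):
    """Shuffle row indices with a fixed seed and split them into train/validation."""
    rng = random.Random(seed)  # private generator, unaffected by global random state
    indices = list(range(n_rows))
    rng.shuffle(indices)
    n_train = round(n_rows * train_frac)
    return indices[:n_train], indices[n_train:]

train_a, val_a = split_indices(100, 0.85, seed=2020)
train_b, val_b = split_indices(100, 0.85, seed=2020)

print(len(train_a), len(val_a))  # 85 15
print(train_a == train_b)        # True: same seed, same split
```

Because the two sets are built from one shuffled list, no row can end up in both the training and the validation set.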

With the Transformer API in ktrain, we can select any Hugging Face transformers model appropriate for our data. Since we are dealing with Swahili, we will use multilingual BERT, which ktrain normally uses for non-English datasets in its alternative text_classifier API. You can opt for any other multilingual transformer model.

Let's import ktrain and set some common parameters for our model. The important thing is to specify which transformer model you are going to use and to make sure it is compatible with the problem you're solving. Let's set our transformer model to bert-base-multilingual-uncased and set the following parameters:

- MAXLEN is the maximum sequence length: only the first 128 tokens of each news item are considered. You can adjust this depending on the computational power of your machine, but be careful. A higher value covers more of the content, and if your machine cannot handle the computation, training will fail with a Resource exhausted error.

- batch_size is the number of training examples used in one iteration. Let's use 32.

- learning_rate controls how much the weights are updated during training. Let's use a learning_rate of 5e-5. You can adjust it to see how your model behaves, but a small value is recommended.

- epochs is the number of complete passes over the entire training dataset. Let's use 3 epochs for now so that training takes only a few minutes.
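To get a feel for how batch_size and epochs translate into work, the number of weight updates can be estimated with simple arithmetic. This is a rough sketch: `training_steps` is a hypothetical helper, and the example count of 1000 is a placeholder rather than the real size of the training set.

```python
import math

def training_steps(n_examples, batch_size, epochs):
    """Return (steps per epoch, total steps) for mini-batch training."""
    steps_per_epoch = math.ceil(n_examples / batch_size)  # the last batch may be partial
    return steps_per_epoch, steps_per_epoch * epochs

# 1000 is a placeholder; use len(df_train) with the article's data.
per_epoch, total = training_steps(n_examples=1000, batch_size=32, epochs=3)
print(per_epoch, total)  # 32 96
```

Halving batch_size roughly doubles the number of steps per epoch while lowering the memory needed per step, which is one way to react to a Resource exhausted error.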

import ktrain

from ktrain import text

# selecting transformer to use

MODEL_NAME = 'bert-base-multilingual-uncased'

# Common parameters

MAXLEN = 128

batch_size = 32

learning_rate = 5e-5

epochs = 3

With the parameters set, it's time to train our first transformer on the Swahili dataset. We pass the training and validation sets to the preprocessor so that it can prepare the text, and then fit the model so that it can learn from the data. The process can take a couple of minutes, depending on the computational power available.

t = text.Transformer(MODEL_NAME, maxlen = MAXLEN)

trn = t.preprocess_train(df_train.content.values, df_train.category.values)

val = t.preprocess_test(df_test.content.values, df_test.category.values)

model = t.get_classifier()

learner = ktrain.get_learner(model, train_data=trn, val_data=val, batch_size=batch_size)

learner.fit(learning_rate, epochs)

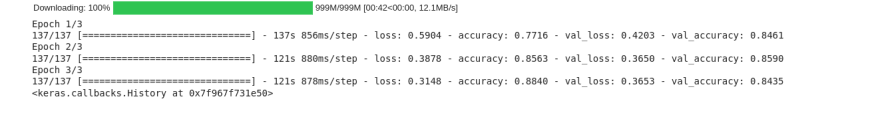

Congrats, the training is done. Now it's time to test the performance of the Swahili model we have trained.

You can see that our trained model reaches an accuracy of 88.40% on the training set and 84.35% on the validation set.

I copied the first news item from the Train.csv file to see how the Swahili model handles it, and it is classified correctly. Because the text is long, you can check it in the notebook.

Let's try to predict on short texts that fall within the categories (Kitaifa, michezo, Biashara, Kimataifa, Burudani) of the dataset used to train this model and see what the output is.

Swahili = "Simba SC ni timu bora kwa misimu miwili iliyopita katika ligi kuu ya Tanzania"

English = "Simba S.C are the best team for the last two seasons in the Tanzanian Premier League "

correct Swahili model predictions

The content of this text is indeed about sports (Michezo). That is how you can train your own transformer model for Swahili news classification.
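The prediction code is not listed in the text. As a rough sketch, ktrain exposes a trained model through a predictor object created with `ktrain.get_predictor`, and the commented line shows how that would look with the `learner` and `t` objects from the training code. To keep the block runnable without a trained model, `StubPredictor` and `classify` are hypothetical stand-ins that only mimic the `predict` interface. The stub uses a naive keyword rule and says nothing about how the real model behaves.

```python
class StubPredictor:
    """Stand-in with the same predict() shape as a ktrain predictor (illustration only)."""

    def predict(self, texts):
        # Naive keyword rule, NOT the trained model: flags football-related words.
        return [
            "michezo" if "timu" in text.lower() or "ligi" in text.lower() else "kitaifa"
            for text in texts
        ]

def classify(predictor, texts):
    """Pair each input text with the label returned by predictor.predict."""
    texts = list(texts)
    return list(zip(texts, predictor.predict(texts)))

# With the trained model from the article, the predictor would be built like this:
#   predictor = ktrain.get_predictor(learner.model, preproc=t)
predictor = StubPredictor()

swahili = "Simba SC ni timu bora kwa misimu miwili iliyopita katika ligi kuu ya Tanzania"
for text, label in classify(predictor, [swahili]):
    print(label)  # michezo (from the stub rule, not from the real model)
```

With the real predictor, passing a list of strings to `predict` returns one label per string, so `classify` works unchanged.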

If you want to access the full code used in this article, here you go.

Thank you. I hope you enjoyed this article and learned a lot from it. Feel free to share it with others.
