Introduction

I have been exploring and playing around with the Apache OpenNLP library after a bit of convincing. For those who are not aware of it, it’s a project of the Apache Software Foundation, which has supported F/OSS Java projects for the last two decades or so (see Wikipedia). I found its command-line interface pretty simple to use, and it is a great tool for learning and trying to understand Natural Language Processing (NLP). Independent of this post, you can find another perspective on exploring NLP concepts using Apache OpenNLP, all of it directly from your command prompt.

I can say almost everyone in this space is also familiar with Jupyter Notebooks (in case you are not, have a look at this video or [1] or [2]). From here onwards, we will be doing the same things you have been doing in your own experiments, but from within a notebook.

Exploring NLP using Apache OpenNLP

Command-line Interface

I’ll refer you to the post where we cover the command-line experience with Apache OpenNLP; it’s a great way to familiarise yourself with this NLP library.

Jupyter Notebook: Getting started

Do the following before proceeding any further:

$ git clone git@github.com:neomatrix369/nlp-java-jvm-example.git

or

$ git clone https://github.com/neomatrix369/nlp-java-jvm-example.git

$ cd nlp-java-jvm-example

Then see the Getting started section in the Exploring NLP concepts from inside a Java-based Jupyter notebook part of the README before proceeding further.

Also, we have chosen GraalVM as the default JDK; you can see this in these lines of the console messages:

<---snipped-->

JDK_TO_USE=GRAALVM

openjdk version "11.0.5" 2019-10-15

OpenJDK Runtime Environment (build 11.0.5+10-jvmci-19.3-b05-LTS)

OpenJDK 64-Bit GraalVM CE 19.3.0 (build 11.0.5+10-jvmci-19.3-b05-LTS, mixed mode, sharing)

<---snipped-->

Note: a Docker image has been provided so that you can run a Docker container with all the tools you need. A *shared* folder is created and linked to the volume mounted into your container, mapping a folder from your local machine. So anything created or downloaded into the shared folder will be available even after you exit your container!

Have a quick read of the main README file to get an idea of how to go about using the docker-runner.sh shell script, and take a quick glance at the Usage section of the scripts as well.

Running the Jupyter notebook container

See the Running the Jupyter notebook container section in the Exploring NLP concepts from inside a Java-based Jupyter notebook part of the README before proceeding further.

All you need to do is run this command after cloning the repo mentioned in the links above:

$ ./docker-runner.sh --notebookMode --runContainer

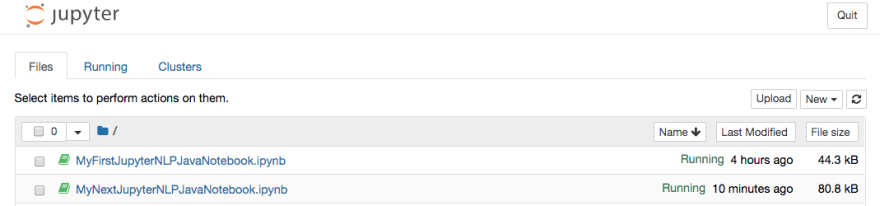

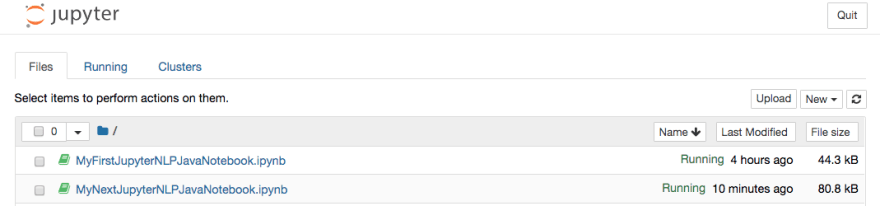

Once you have the above running, it will automatically open the Jupyter notebook interface in a browser window. You will have a couple of Java notebooks to choose from (placed in the shared/notebooks folder on your local machine).

Installing Apache OpenNLP in the container

When inside the container in the notebook mode, you have two approaches to install Apache OpenNLP:

From the command-line interface (optional)

See the From the command-line interface sub-section under the Installing Apache OpenNLP in the container section in the Exploring NLP concepts from inside a Java-based Jupyter notebook part of the README before proceeding further.

From inside the Jupyter notebook (recommended)

See the From inside the Jupyter notebook sub-section under the Installing Apache OpenNLP in the container section in the Exploring NLP concepts from inside a Java-based Jupyter notebook part of the README before proceeding further.

Viewing and accessing the shared folder

See the Viewing and accessing the shared folder section in the Exploring NLP concepts from inside a Java-based Jupyter notebook part of the README before proceeding further.

This is also covered briefly in the Jupyter notebooks in the following section. You can view directory contents via the %system Java cell magic, and you will see a similar files/folders layout from the command prompt.
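If you would rather inspect the folder with plain Java than with the cell magic, a minimal sketch could look like the one below. It uses only the JDK's java.nio.file API; the class name SharedFolderListing and the default path "shared" are assumptions made for illustration, so adjust the path to wherever the folder is mounted in your setup.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Hypothetical helper class, not part of the repo or of IJava.
class SharedFolderListing {

    // Returns the sorted names of the entries directly inside the given folder.
    static List<String> listEntries(Path folder) throws IOException {
        // Files.list returns a lazy stream that must be closed after use.
        try (Stream<Path> entries = Files.list(folder)) {
            return entries
                    .map(entry -> entry.getFileName().toString())
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // "shared" is an assumed relative path; pass another path as the first argument.
        Path shared = Paths.get(args.length > 0 ? args[0] : "shared");
        if (Files.isDirectory(shared)) {
            listEntries(shared).forEach(System.out::println);
        } else {
            System.out.println("Not a directory: " + shared.toAbsolutePath());
        }
    }
}
```

In a notebook cell you could paste the class and then call SharedFolderListing.listEntries(...) directly, without the main method.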

Performing NLP actions in a Jupyter notebook

While the notebook server is running, you will see a launcher window listing the notebooks and other supporting files; it shows up as soon as the notebook server launches.

Each of the notebooks above has a purpose. MyFirstJupyterNLPJavaNotebook.ipynb shows how to write Java in an IPython notebook and perform NLP actions using Java code snippets that invoke the Apache OpenNLP library functionality (see the docs for more details on the classes and methods, and the Java Docs for more details on Java API usage).

The other notebook, MyNextJupyterNLPJavaNotebook.ipynb, runs the same Java code snippets on a remote cloud instance (with the help of the Valohai CLI client) and returns the results in the cells, using single commands. It’s fast and free to create an account and use it within the free-tier plan.

We are able to examine the following Java API bindings to the Apache OpenNLP library from inside both Java-based notebooks:

- Language Detector API

- Sentence Detection API

- Tokenizer API

- Name Finder API (including other examples)

- Parts of speech (POS) Tagger API

- Chunking API

- Parsing API
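To give a feel for what the first few of these steps do, here is a small sketch of sentence detection and tokenization. Please note that it does not use Apache OpenNLP: it swaps in the JDK's own java.text.BreakIterator as a stand-in, so that it runs without any models or extra jars. The class and method names are made up for this illustration, and OpenNLP's model-based detectors may split text differently.

```java
import java.text.BreakIterator;
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

// Hypothetical stand-in using only the JDK; this is NOT the Apache OpenNLP API.
class TextSegmentationSketch {

    // Walks the boundaries reported by the iterator and collects non-blank segments.
    private static List<String> segments(BreakIterator iterator, String text) {
        iterator.setText(text);
        List<String> result = new ArrayList<>();
        int start = iterator.first();
        for (int end = iterator.next(); end != BreakIterator.DONE; start = end, end = iterator.next()) {
            String segment = text.substring(start, end).trim();
            if (!segment.isEmpty()) {
                result.add(segment);
            }
        }
        return result;
    }

    // Sentence detection: splits the text at sentence boundaries.
    static List<String> sentences(String text) {
        return segments(BreakIterator.getSentenceInstance(Locale.ENGLISH), text);
    }

    // Tokenization: splits the text into word and punctuation tokens, dropping whitespace.
    static List<String> tokens(String text) {
        return segments(BreakIterator.getWordInstance(Locale.ENGLISH), text);
    }

    public static void main(String[] args) {
        String text = "This is a sentence. Here is another one.";
        for (String sentence : sentences(text)) {
            System.out.println(sentence + " -> " + tokens(sentence));
        }
    }
}
```

The notebooks follow the same flow (text in, sentences out, then tokens per sentence), but with OpenNLP's classes and downloaded models doing the work.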

Exploring the above Apache OpenNLP Java APIs via the notebook directly

We are able to do this from inside a notebook running the IJava Jupyter interpreter, which allows writing Java in a typical notebook. We will explore the Java APIs named above using small snippets of Java code and see the results appear in the notebook.

So go back to your browser and look for the MyFirstJupyterNLPJavaNotebook.ipynb notebook and have a play with it, reading and executing each cell and observing the responses.

Exploring the above Apache OpenNLP Java APIs via the notebook with the help of remote cloud services

We are able to do this from inside a notebook running the IJava Jupyter interpreter, which allows writing Java in a typical notebook. But in this notebook, we have taken it further and used the %system Java cell magic and the Valohai CLI magic instead of running the Java code snippets in the various cells as in the previous notebook.

That way, the downloading of the models and the processing of the text using the model do not happen on your local machine but on a more sophisticated remote server in the cloud. You are able to control this process from inside the notebook cells. This is more relevant when the models and the datasets to process are large and your local instance(s) do not have the necessary resources to support long-running NLP processes. I have seen NLP training and evaluation take a long time to finish, and hence high-spec resources are a must.

And again go back to your browser and look for the MyNextJupyterNLPJavaNotebook.ipynb notebook and have a play with it, reading and executing each cell. All the necessary details are in there including links to the docs and supporting pages.

To get a deeper understanding of how these two notebooks were put together and how they work operationally, please have a look at all the source files.

Closing the Jupyter notebook

Make sure you have saved your notebook before you do this. Switch to the console window from where you ran the docker-runner shell script. Pressing Ctrl-C in the console running the Docker container gives you this:

<---snipped--->

[I 21:13:16.253 NotebookApp] Saving file at /MyFirstJupyterJavaNotebook.ipynb

^C[I 21:13:22.953 NotebookApp] interrupted

Serving notebooks from local directory: /home/jovyan/work

1 active kernel

The Jupyter Notebook is running at:

http://1e2b8182be38:8888/

Shutdown this notebook server (y/[n])? y

[C 21:14:05.035 NotebookApp] Shutdown confirmed

[I 21:14:05.036 NotebookApp] Shutting down 1 kernel

Nov 15, 2019 9:14:05 PM io.github.spencerpark.jupyter.channels.Loop shutdown

INFO: Loop shutdown.

<--snipped-->

[I 21:14:05.441 NotebookApp] Kernel shutdown: 448d46f0-1bde-461b-be60-e248c6342f69

This shuts down the container and you are back at the command prompt of your local machine. Your notebooks stay preserved in the shared/notebooks folder on your local machine, provided you have been saving them as you changed them.

Other concepts, libraries and tools

There are other Java/JVM based NLP libraries mentioned in the Resources section below; for brevity we won’t cover them. The links provided will lead to further information for your own pursuit.

Within the Apache OpenNLP tool itself we have only covered the command-line access part of it and not the Java Bindings. In addition, we haven’t gone through all the NLP concepts or features of the tool; again, for brevity, we have only covered a handful of them. But the documentation and resources on the GitHub repo should help in further exploration.

You can also find out how to build the docker image for yourself, by examining the docker-runner script.

Limitations

The Java cell magic does make a difference and helps run commands. Even though it’s a non-Python-based notebook, we could still run shell commands and execute Java code in our cells and do some decent NLP work in the Jupyter notebooks.
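For comparison, running a shell command from plain Java, without any cell magic, could be sketched as below. This is only an illustration built on the JDK's ProcessBuilder and makes no claim about how the %system magic is implemented; the class name ShellCommandSketch is made up, and the sketch assumes a Unix-like environment where sh is available (as it typically is inside a Linux container).

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of IJava or the repo.
class ShellCommandSketch {

    // Runs the command through "sh -c" and returns its output lines (stdout and stderr merged).
    static List<String> run(String command) throws IOException, InterruptedException {
        Process process = new ProcessBuilder("sh", "-c", command)
                .redirectErrorStream(true) // merge stderr into stdout so nothing is lost
                .start();

        List<String> lines = new ArrayList<>();
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(process.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = reader.readLine()) != null) {
                lines.add(line);
            }
        }

        // Wait for the process to finish and surface failures to the caller.
        int exitCode = process.waitFor();
        if (exitCode != 0) {
            throw new IOException("Command exited with code " + exitCode + ": " + command);
        }
        return lines;
    }

    public static void main(String[] args) throws Exception {
        // Lists the current directory, similar in spirit to running a shell command from a cell.
        run("ls -l").forEach(System.out::println);
    }
}
```

The cell magic is clearly the more convenient option inside a notebook; the sketch just shows that nothing beyond the JDK is strictly needed for the same effect.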

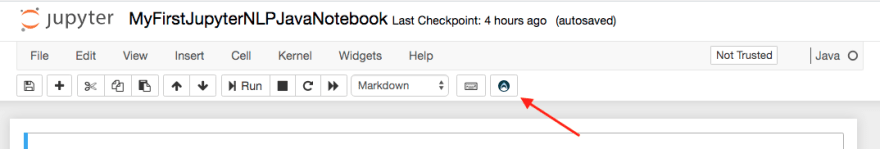

If you had a Python-based notebook, then Valohai’s extension called Jupyhai, specially made for such purposes, would suffice. Have a look at the Jupyhai sub-section in the Resources section at the end of this post. In fact, we have been running all our actions in the Jupyhai notebook, although I have been calling it a Jupyter Notebook (have a look at the icon on the toolbar in the middle part of the panel in the browser).

Conclusion

This has been a very different experience from most other ways of exploring and learning, and you can see why the whole industry, specifically areas covering Academia, Research, Data Science and Machine Learning, has adopted this approach so enthusiastically. We still have limitations, but with time even they will be overcome, making our experience a smooth one.

Seeing your results on the same page where your code sits is very reassuring and gives us a short and quick feedback loop. In particular, being able to see the visualisations, change them dynamically and get instant results can cut through the cruft for busy and eager students, scientists, mathematicians, engineers and analysts in every field, not just Computing, Data Science or Machine Learning.

Resources

IJava (Jupyter interpreter)

- GitHub

- Docs

- [%system](https://github.com/SpencerPark/IJava/pull/78) Java cell magic implementation

- Docker image with IJava + Jupyhai + other dependencies

Jupyhai

- Version Control for Jupyter Notebooks

- Blogs on Jupyter Notebooks

- Version control for Jupyter Notebooks (video)

- Docs

Apache OpenNLP

- nlp-java-jvm-example GitHub project

- Apache OpenNLP | GitHub | Mailing list | @apacheopennlp

- Docs

- Download

- Legends to support the examples in the docs

- Find more in the Resources section in the README

Other related posts

- How to do Deep Learning for Java?

- NLP with DL4J in Java, all from the command-line

- Exploring NLP concepts using Apache OpenNLP

About me

Mani Sarkar is a passionate developer mainly in the Java/JVM space, currently strengthening teams and helping them accelerate when working with small teams and startups, as a freelance software engineer/data/ml engineer, more….

Twitter: @theNeomatrix369 | GitHub: @neomatrix369
