RSS Bridge is my latest find in the automation space. While I already had Huginn installed as well as FAAS for creating and running serverless functions, RSS Bridge has been a great addition to this stack. What it is is a simple way to turn anything into a feed. You can get tweets by a specific user, trending projects on Github, popular shots from Dribble, and much much more. RSS Bridge can output the results as an RSS/Atom feed, HTML, or as JSON. The latter is the real killer feature when combining it with more automation using Huginn for exmaple. RSS bridge will cache the feed so no risk of hitting the underlying site too much either. It can be hosted on just about anything as it is a PHP application with no database. You can easily host it on Heroku or use Dokku like I did. On my VPS it uses about 50 mb of RAM so it is a real tiny little thing.

If you need a cheap and solid server then feel free to use my referral code to get €20 in credit at Hetzner. Read my review for more information.

Each resulting bridge is a simple URL that you can use directly or pass it along for further processing.

One of the issues is that the agents sometimes don't work. Many sites don't want this kind of scraping done to them. Instagram is a great example. RSS Bridge has a bridge to grab images from a user but it does not currently work. There is an issue to resolve it on Github but currently no go. It can be frustrating to see a bridge you want to use and then it doesn't work at all once you use it. However, what works works really well.

RSS bridge shines when combined with Huginn as I will demonstrate with a couple of examples.

Example #1: Dilbert strips to Slack

Say that you want to get the latest Dilbert strip posted to your private Slack channel. To do this you'll need Huginn, RSS Bridge and a FAAS function to extract the largest image from the page.

The Huginn scenario for this consists of three agents:

- A Website agent to fetch posts from the feed from RSS Bridge. The feed URL will be something like https://yourssbridge.com?action=display&bridge=Dilbert&format=Json

- A Website agent to receive events from 1 and use the serverless function extract the largest image from the page.

- A Slack agent to post the images to Slack.

I've added a gist with the scenario below. Do note that you have to use your own serverless function and your own RSS Bridge and Slack URLs.

https://gist.github.com/mskog/092f655ea7013fcd59db7d9c3c1fbe9f

With this you get the latest Dilbert strip image posted to your Slack channel of choice every day.

Example #2: Reddit digest

If you want to avoid refreshing Reddit all day every day then perhaps you would prefer a simple email with the content from your favorite subreddit? It is very easy to do with Huginn and RSSBridge. You do need to add an SMTP service to Huginn to use it. Sendgrid is free for up to 100 emails a day which should be plenty unless you need hundreds of subreddit digests.

https://gist.github.com/mskog/b361447167e0eb7a66ee67f1f1633eb4

Bonus Example: Myfitnesspal dashboard

I use Myfitnesspal to track my macro nutrients and caloric intake. I wanted a nice dashboard to show me this over time and some completely necessary graphs to track things. Myfitnesspal has an API that can do this but it is only for registered partners. Exporting data is only available for paying users and I don't want to do that manually. So, enter our good friend the web scraper.

To do this you need Huginn, FAAS with a Puppeteer function, Influxdb and Grafana. I wrote about the latter here. Feel free to use my function to scrape the data from Myfitnesspal. It is a real hack job but it gets the job done every day. Then all you need to do is to use a Website agent to execute the function and a Post agent to add the data to Influxdb. Then you can use Grafana to make a nice graph like so:

Bonus Example #2: Withings dashboard

Let us stick with the fitness stuff shall we. I own a Withings WIFI enabled smart scale for some reason. I weigh myself daily and the scale will upload the data to the cloud. I would like to have this data in Grafana as well. Withings does have an API but it is an oauth2 enabled atrocity that is quite painful to use for such a simple thing as getting my data. I spent a solid weekend trying to get this working before giving up and resorted to scraping again. An hour later I had my data in Grafana just like with Myfitnesspal. Once again you can use my FAAS function if you wish!

The procedure to get this going is identical to the Myfitnesspal one. Simply use a Website agent to get the data from the FAAS function and then use a Post agent to add it to Influx. I will not post an example graph because I don't want to scare anyone.

Now all I need is to get the data out of my weightlifting tracker app and we're in business.

Advanced Example: Weightlifting stats dashboard

Time for something a bit more involved. My current lifting tracker app can export the data to CSV and share it using the default Android share. Huginn has several agents that can deal with files so as long as we can get the file to Huginn we are golden. I would love to have my own data available so that I can graph it next to the other stuff above.

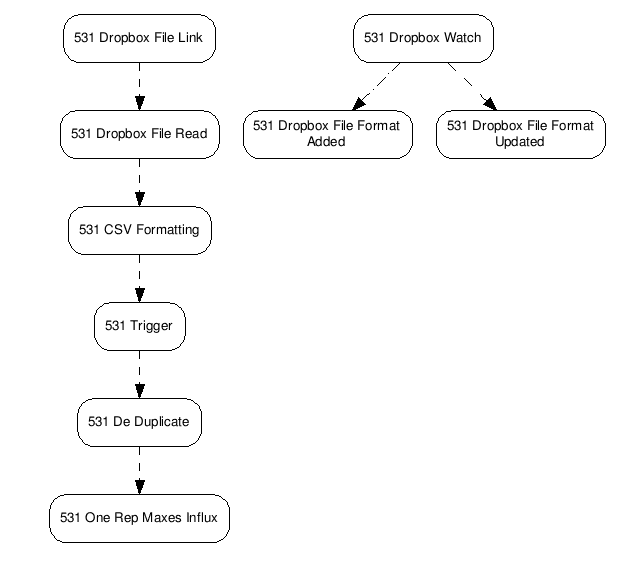

To do this we need to do three things:

- Get the CSV data into Huginn

- Format the data and remove duplicates

- Import the data in InfluxDB

To solve the first problem we will use Dropbox. Huginn has Dropbox integration built in so this step will not be too hard. You do need to register an application in the Dropbox App Console and make sure the token is set to permanent. You can use a scoped app if you wish. Then you need to setup a Dropbox Watch Agent to watch for changes in the directory as well as some trigger agents to avoid running the workflow when a file is deleted. Finally we use a Dropbox File Url Agent to create a link to the raw file that we can read in step 2.

It gets much easier now since we have a URL for the file itself. Simply use a Website agent to read the file, a CSV agent to format the data, a Trigger agent to select the correct rows, and a De duplication agent to get rid of duplicates. Finally we use a Post agent to get the data to Influx. Ok maybe not that simple.

This was a lot of work but now I have a fully automated workflow to get the data out of my app and into my own data store. All I have to do now is hit Export in the app and save the file to Dropbox. Do note that we did not need to use anything but Huginn and Dropbox to do all of this.

The complete scenario is available here:

https://gist.github.com/mskog/88a246000d1db4c28753ac76d0481627

Conclusion

I am getting more and more excited about using things like Huginn, RSS Bridge, and FAAS to create complicated automated workflows. I have even started building applications that take advantage of this to do things like scraping websites and processing data. Do you have any good ideas for what to do next? Post a comment!

Top comments (0)